Chapter 3. Core Concepts

3.1. Overview

The following topics provide high-level, architectural information on core concepts and objects you will encounter when using OpenShift. Many of these objects come from Kubernetes, which is extended by OpenShift to provide a more feature-rich development lifecycle platform.

- Containers and images are the building blocks for deploying your applications.

- Pods and services allow for containers to communicate with each other and proxy connections.

- Projects and users provide the space and means for communities to organize and manage their content together.

- Builds and image streams allow you to build working images and react to new images.

- Deployments add expanded support for the software development and deployment lifecycle.

- Routes announce your service to the world.

- Templates allow for many objects to be created at once based on customized parameters.

3.2. Containers and Images

3.2.1. Containers

The basic units of OpenShift applications are called containers. Linux container technologies are lightweight mechanisms for isolating running processes so that they are limited to interacting with only their designated resources. Many application instances can be running in containers on a single host without visibility into each other's processes, files, network, and so on. Typically, each container provides a single service (often called a "micro-service"), such as a web server or a database, though containers can be used for arbitrary workloads.

The Linux kernel has been incorporating capabilities for container technologies for years. More recently the Docker project has developed a convenient management interface for Linux containers on a host. OpenShift and Kubernetes add the ability to orchestrate Docker containers across multi-host installations.

Though you do not directly interact with Docker tools when using OpenShift, understanding Docker’s capabilities and terminology is important for understanding its role in OpenShift and how your applications function inside of containers. Docker is available as part of RHEL 7, as well as CentOS and Fedora, so you can experiment with it separately from OpenShift. Refer to the article Get Started with Docker Formatted Container Images on Red Hat Systems for a guided introduction.

3.2.2. Docker Images

Docker containers are based on Docker images. A Docker image is a binary that includes all of the requirements for running a single Docker container, as well as metadata describing its needs and capabilities. You can think of it as a packaging technology. Docker containers only have access to resources defined in the image, unless you give the container additional access when creating it. By deploying the same image in multiple containers across multiple hosts and load balancing between them, OpenShift can provide redundancy and horizontal scaling for a service packaged into an image.

You can use Docker directly to build images, but OpenShift also supplies builders that assist with creating an image by adding your code or configuration to existing images.

Since applications develop over time, a single image name can actually refer to many different versions of the "same" image. Each different image is referred to uniquely by its hash (a long hexadecimal number, e.g. fd44297e2ddb050ec4f…), which is usually shortened to 12 characters (e.g. fd44297e2ddb). Rather than version numbers, Docker allows applying tags (such as v1, v2.1, GA, or the default latest) in addition to the image name to further specify the image desired, so you may see the same image referred to as centos (implying the latest tag), centos:centos7, or fd44297e2ddb.
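The naming rules above can be illustrated with a minimal Python sketch. It is not how Docker itself parses references; the function names and the registry host registry.example.com:5000 are hypothetical, and only the name[:tag] form and the 12-character short hash described above are handled.

```python
def split_image_reference(ref, default_tag="latest"):
    """Split 'name[:tag]' into (name, tag), defaulting the tag to 'latest'."""
    # Only a colon after the last slash separates a tag; an earlier colon
    # belongs to a registry host:port prefix (hypothetical example:
    # 'registry.example.com:5000/team/app').
    last_slash = ref.rfind("/")
    colon = ref.rfind(":")
    if colon > last_slash:
        return ref[:colon], ref[colon + 1:]
    return ref, default_tag


def short_id(image_hash, length=12):
    """Shorten a hexadecimal image hash to its first 12 characters."""
    return image_hash[:length]


print(split_image_reference("centos"))
print(split_image_reference("centos:centos7"))
```

The tag defaults to latest only when no tag is present, which matches the way a bare centos reference is read above.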

3.2.3. Docker Registries

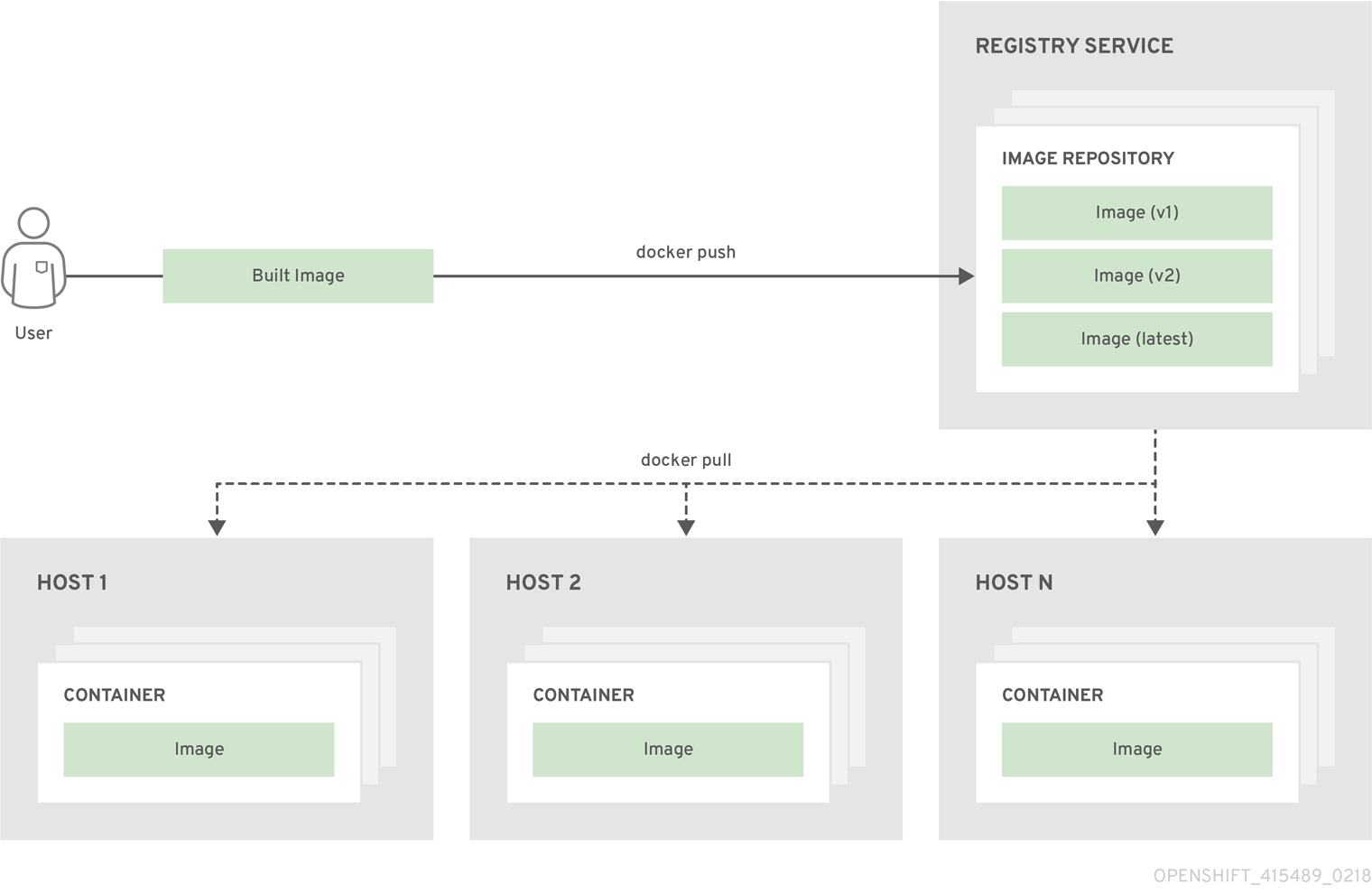

A Docker registry is a service for storing and retrieving Docker images. A registry contains a collection of one or more Docker image repositories. Each image repository contains one or more tagged images. Docker provides its own registry, the Docker Hub, but you may also use private or third-party registries. Red Hat provides a Docker registry at registry.access.redhat.com for subscribers. OpenShift can also supply its own internal registry for managing custom Docker images.

The relationship between containers, images, and registries is depicted in the following diagram:

3.3. Pods and Services

3.3.1. Pods

OpenShift Enterprise leverages the Kubernetes concept of a pod, which is one or more containers deployed together on one host, and the smallest compute unit that can be defined, deployed, and managed.

Pods are the rough equivalent of OpenShift Enterprise v2 gears, with containers the rough equivalent of v2 cartridge instances. Each pod is allocated its own internal IP address, therefore owning its entire port space, and containers within pods can share their local storage and networking.

Pods have a lifecycle; they are defined, then they are assigned to run on a node, then they run until their container(s) exit or they are removed for some other reason. Pods, depending on policy and exit code, may be removed after exiting, or may be retained to enable access to the logs of their containers.

OpenShift Enterprise treats pods as largely immutable; changes cannot be made to a pod definition while it is running. OpenShift Enterprise implements changes by terminating an existing pod and recreating it with modified configuration, base image(s), or both. Pods are also treated as expendable, and do not maintain state when recreated. Therefore, pods should usually be managed by higher-level controllers rather than directly by users.

The following example definition of a pod provides a long-running service, which is actually a part of the OpenShift Enterprise infrastructure: the private Docker registry. It demonstrates many features of pods, most of which are discussed in other topics and thus only briefly mentioned here.

Pod object definition example

    apiVersion: v1
    kind: Pod
    metadata:
      annotations: { ... }
      labels: # (1)
        deployment: docker-registry-1
        deploymentconfig: docker-registry
        docker-registry: default
      generateName: docker-registry-1- # (2)
    spec:
      containers: # (3)
      - env: # (4)
        - name: OPENSHIFT_CA_DATA
          value: ...
        - name: OPENSHIFT_CERT_DATA
          value: ...
        - name: OPENSHIFT_INSECURE
          value: "false"
        - name: OPENSHIFT_KEY_DATA
          value: ...
        - name: OPENSHIFT_MASTER
          value: https://master.example.com:8443
        image: openshift/origin-docker-registry:v0.6.2 # (5)
        imagePullPolicy: IfNotPresent
        name: registry
        ports: # (6)
        - containerPort: 5000
          protocol: TCP
        resources: {}
        securityContext: { ... } # (7)
        volumeMounts: # (8)
        - mountPath: /registry
          name: registry-storage
        - mountPath: /var/run/secrets/kubernetes.io/serviceaccount
          name: default-token-br6yz
          readOnly: true
      dnsPolicy: ClusterFirst
      imagePullSecrets:
      - name: default-dockercfg-at06w
      restartPolicy: Always
      serviceAccount: default # (9)
      volumes: # (10)
      - emptyDir: {}
        name: registry-storage
      - name: default-token-br6yz
        secret:
          secretName: default-token-br6yz

- (1) Pods can be "tagged" with one or more labels, which can then be used to select and manage groups of pods in a single operation. The labels are stored in key-value format in the metadata hash. One label in this example is docker-registry=default.

- (2) Pods must have a unique name within their namespace. A pod definition may specify the basis of a name with the generateName attribute, and random characters will be added automatically to generate a unique name.

- (3) containers specifies an array of container definitions; in this case (as with most), just one.

- (4) Environment variables can be specified to pass necessary values to each container.

- (5) Each container in the pod is instantiated from its own Docker image.

- (6) The container can bind to ports which will be made available on the pod’s IP.

- (7) OpenShift Enterprise defines a security context for containers which specifies whether they are allowed to run as privileged containers, run as a user of their choice, and more. The default context is very restrictive but administrators can modify this as needed.

- (8) The container specifies where external storage volumes should be mounted within the container. In this case, there is a volume for storing the registry’s data, and one for access to credentials the registry needs for making requests against the OpenShift Enterprise API.

- (9) Pods making requests against the OpenShift Enterprise API is a common enough pattern that there is a serviceAccount field for specifying which service account user the pod should authenticate as when making the requests. This enables fine-grained access control for custom infrastructure components.

- (10) The pod defines storage volumes that are available to its container(s) to use. In this case, it provides an ephemeral volume for the registry storage and a secret volume containing the service account credentials.

This pod definition does not include attributes that are filled by OpenShift Enterprise automatically after the pod is created and its lifecycle begins. The Kubernetes API documentation has complete details of the pod REST API object attributes, and the Kubernetes pod documentation has details about the functionality and purpose of pods.

3.3.2. Services

A Kubernetes service serves as an internal load balancer. It identifies a set of replicated pods in order to proxy the connections it receives to them. Backing pods can be added to or removed from a service arbitrarily while the service remains consistently available, enabling anything that depends on the service to refer to it at a consistent internal address.

Services are assigned an IP address and port pair that, when accessed, proxy to an appropriate backing pod. A service uses a label selector to find all the containers running that provide a certain network service on a certain port.

Like pods, services are REST objects. The following example shows the definition of a service for the pod defined above:

Service object definition example

    apiVersion: v1
    kind: Service
    metadata:
      name: docker-registry # (1)
    spec:
      selector: # (2)
        docker-registry: default
      portalIP: 172.30.136.123 # (3)
      ports:
      - nodePort: 0
        port: 5000 # (4)
        protocol: TCP
        targetPort: 5000 # (5)

- (1) The service name docker-registry is also used to construct an environment variable with the service IP that is inserted into other pods in the same namespace. The maximum name length is 63 characters.

- (2) The label selector identifies all pods with the docker-registry=default label attached as its backing pods.

- (3) Virtual IP of the service, allocated automatically at creation from a pool of internal IPs.

- (4) Port the service listens on.

- (5) Port on the backing pods to which the service forwards connections.
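As a rough illustration of how a service name becomes an environment variable, the following Python sketch derives the variable names using the upstream Kubernetes convention (name upper-cased, dashes replaced by underscores, then _SERVICE_HOST and _SERVICE_PORT appended). The function name service_env_vars is hypothetical, and the sketch covers only these two variables.

```python
def service_env_vars(name, ip, port):
    """Build the host/port environment variables derived from a service name."""
    # Assumption: upstream Kubernetes naming convention, i.e. the service
    # name is upper-cased and dashes become underscores.
    prefix = name.upper().replace("-", "_")
    return {
        f"{prefix}_SERVICE_HOST": ip,
        f"{prefix}_SERVICE_PORT": str(port),
    }


# Values taken from the service definition example above.
env = service_env_vars("docker-registry", "172.30.136.123", 5000)
for key, value in sorted(env.items()):
    print(f"{key}={value}")
```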

The Kubernetes service documentation has more information about services.

3.3.3. Labels

Labels are used to organize, group, or select API objects. For example, pods are "tagged" with labels, and then services use label selectors to identify the pods they proxy to. This makes it possible for services to reference groups of pods, even treating pods with potentially different Docker containers as related entities.

Most objects can include labels in their metadata. Labels can be used to group arbitrarily-related objects; for example, all of the pods, services, replication controllers, and deployment configurations of a particular application can be grouped.

Labels are simple key-value pairs, as in the following example:

Key-value pairs example

    labels:
      key1: value1
      key2: value2

Consider:

- A pod consisting of an nginx Docker container, with the label role=webserver.

- A pod consisting of an Apache httpd Docker container, with the same label role=webserver.

A service or replication controller that is defined to use pods with the role=webserver label treats both of these pods as part of the same group.
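The grouping behavior just described can be sketched in a few lines of Python. This models only equality-based matching on plain dictionaries; the pod names and the extra role=database pod are hypothetical additions for contrast.

```python
def matches(selector, labels):
    """Return True if every key-value pair in the selector is present in labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def select(selector, pods):
    """Return the names of all pods whose labels satisfy the selector."""
    return [pod["name"] for pod in pods if matches(selector, pod["labels"])]


# Hypothetical pods: two web servers built from different images, one database.
pods = [
    {"name": "nginx-pod", "labels": {"role": "webserver"}},
    {"name": "httpd-pod", "labels": {"role": "webserver"}},
    {"name": "db-pod", "labels": {"role": "database"}},
]

print(select({"role": "webserver"}, pods))
```

Both web server pods are selected even though they run different containers, because the selector looks only at labels.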

The Kubernetes labels documentation has more information about labels.

3.4. Projects and Users

3.4.1. Users

Interaction with OpenShift is associated with a user. An OpenShift user object represents an actor which may be granted permissions in the system by adding roles to them or to their groups.

Several types of users can exist:

| Regular users | This is the way most interactive OpenShift users will be represented. Regular users are created automatically in the system upon first login, or can be created via the API. Regular users are represented with the |

| System users | Many of these are created automatically when the infrastructure is defined, mainly for the purpose of enabling the infrastructure to interact with the API securely. They include a cluster administrator (with access to everything), a per-node user, users for use by routers and registries, and various others. Finally, there is an |

| Service accounts | These are special system users associated with projects; some are created automatically when the project is first created, while project administrators can create more for the purpose of defining access to the contents of each project. Service accounts are represented with the |

Every user must authenticate in some way in order to access OpenShift. API requests with no authentication or invalid authentication are authenticated as requests by the anonymous system user. Once authenticated, policy determines what the user is authorized to do.

3.4.2. Namespaces

A Kubernetes namespace provides a mechanism to scope resources in a cluster. In OpenShift, a project is a Kubernetes namespace with additional annotations.

Namespaces provide a unique scope for:

- Named resources to avoid basic naming collisions.

- Delegated management authority to trusted users.

- The ability to limit community resource consumption.

Most objects in the system are scoped by namespace, but some are excepted and have no namespace, including nodes and users.

The Kubernetes documentation has more information on namespaces.

3.4.3. Projects

A project is a Kubernetes namespace with additional annotations, and is the central vehicle by which access to resources for regular users is managed. A project allows a community of users to organize and manage their content in isolation from other communities. Users must be given access to projects by administrators, or if allowed to create projects, automatically have access to their own projects.

Projects can have a separate name, displayName, and description.

- The mandatory name is a unique identifier for the project and is most visible when using the CLI tools or API. The maximum name length is 63 characters.

- The optional displayName is how the project is displayed in the web console (defaults to name).

- The optional description can be a more detailed description of the project and is also visible in the web console.

Each project scopes its own set of:

| Objects | Pods, services, replication controllers, etc. |

| Policies | Rules for which users can or cannot perform actions on objects. |

| Constraints | Quotas for each kind of object that can be limited. |

| Service accounts | Service accounts act automatically with designated access to objects in the project. |

Cluster administrators can create projects and delegate administrative rights for the project to any member of the user community. Cluster administrators can also allow developers to create their own projects.

Developers and administrators can interact with projects using the CLI or the web console.

3.5. Builds and Image Streams

3.5.1. Builds

A build is the process of transforming input parameters into a resulting object. Most often, the process is used to transform input parameters or source code into a runnable image. A BuildConfig object is the definition of the entire build process.

OpenShift leverages Kubernetes by creating Docker containers from build images and pushing them to a Docker registry.

Build objects share common characteristics: inputs for a build, the need to complete a build process, logging the build process, publishing resources from successful builds, and publishing the final status of the build. Builds take advantage of resource restrictions, specifying limitations on resources such as CPU usage, memory usage, and build or pod execution time.

The OpenShift build system provides extensible support for build strategies that are based on selectable types specified in the build API. There are three build strategies available: Docker build, Source-to-Image (S2I) build, and Custom build.

By default, Docker builds and S2I builds are supported.

The resulting object of a build depends on the builder used to create it. For Docker and S2I builds, the resulting objects are runnable images. For Custom builds, the resulting objects are whatever the builder image author has specified.

For a list of build commands, see the Developer’s Guide.

For more information on how OpenShift leverages Docker for builds, see the upstream documentation.

3.5.1.1. Docker Build

The Docker build strategy invokes the plain docker build command, and it therefore expects a repository with a Dockerfile and all required artifacts in it to produce a runnable image.

3.5.1.2. Source-to-Image (S2I) Build

Source-to-Image (S2I) is a tool for building reproducible Docker images. It produces ready-to-run images by injecting application source into a Docker image and assembling a new Docker image. The new image incorporates the base image (the builder) and built source and is ready to use with the docker run command. S2I supports incremental builds, which re-use previously downloaded dependencies, previously built artifacts, etc.

The advantages of S2I include the following:

| Image flexibility | S2I scripts can be written to inject application code into almost any existing Docker image, taking advantage of the existing ecosystem. Note that, currently, S2I relies on |

| Speed | With S2I, the assemble process can perform a large number of complex operations without creating a new layer at each step, resulting in a fast process. In addition, S2I scripts can be written to re-use artifacts stored in a previous version of the application image, rather than having to download or build them each time the build is run. |

| Patchability | S2I allows you to rebuild the application consistently if an underlying image needs a patch due to a security issue. |

| Operational efficiency | By restricting build operations instead of allowing arbitrary actions, as a Dockerfile would allow, the PaaS operator can avoid accidental or intentional abuses of the build system. |

| Operational security | Building an arbitrary Dockerfile exposes the host system to root privilege escalation. This can be exploited by a malicious user because the entire Docker build process is run as a user with Docker privileges. S2I restricts the operations performed as a root user and can run the scripts as a non-root user. |

| User efficiency | S2I prevents developers from performing arbitrary |

| Ecosystem | S2I encourages a shared ecosystem of images where you can leverage best practices for your applications. |

3.5.1.3. Custom Build

The Custom build strategy allows developers to define a specific builder image responsible for the entire build process. Using your own builder image allows you to customize your build process.

The Custom builder image is a plain Docker image with embedded build process logic, such as building RPMs or building base Docker images. The openshift/origin-custom-docker-builder image is used by default.

3.5.2. Image Streams

An image stream can be used to automatically perform an action, such as updating a deployment, when a new image is created, for example a new version of the base image used in that deployment.

An image stream comprises one or more Docker images identified by tags. It presents a single virtual view of related images, similar to a Docker image repository, and may contain images from any of the following:

- Its own image repository in OpenShift’s integrated Docker Registry

- Other image streams

- Docker image repositories from external registries

OpenShift components such as builds and deployments can watch an image stream to receive notifications when new images are added and react by performing a build or a deployment.

Example 3.1. Image Stream Object Definition

    {
      "kind": "ImageStream",
      "apiVersion": "v1",
      "metadata": {
        "name": "origin-ruby-sample",
        "namespace": "p1",
        "selfLink": "/osapi/v1/namespaces/p1/imageStreams/origin-ruby-sample",
        "uid": "480dfe73-f340-11e4-97b5-001c422dcd49",
        "resourceVersion": "293",
        "creationTimestamp": "2015-05-05T16:03:34Z",
        "labels": {
          "template": "application-template-stibuild"
        }
      },
      "spec": {},
      "status": {
        "dockerImageRepository": "172.30.30.129:5000/p1/origin-ruby-sample",
        "tags": [
          {
            "tag": "latest",
            "items": [
              {
                "created": "2015-05-05T16:05:47Z",
                "dockerImageReference": "172.30.30.129:5000/p1/origin-ruby-sample@sha256:4d3a646b58685449179a0c61ad4baa19a8df8ba668e0f0704b9ad16f5e16e642",
                "image": "sha256:4d3a646b58685449179a0c61ad4baa19a8df8ba668e0f0704b9ad16f5e16e642"
              }
            ]
          }
        ]
      }
    }

3.5.2.1. Image Stream Mappings

When the integrated OpenShift Docker Registry receives a new image, it creates and sends an ImageStreamMapping to OpenShift. This informs OpenShift of the image’s namespace, name, tag, and Docker metadata. OpenShift uses this information to create a new image (if it does not already exist) and to tag the image into the image stream. OpenShift stores complete metadata about each image (for example, command, entrypoint, and environment variables). Note that images in OpenShift are immutable. Also, note that the maximum name length is 63 characters.

The example ImageStreamMapping below results in an image being tagged as test/origin-ruby-sample:latest.

Example 3.2. Image Stream Mapping Object Definition

    {
      "kind": "ImageStreamMapping",
      "apiVersion": "v1",
      "metadata": {
        "name": "origin-ruby-sample",
        "namespace": "test"
      },
      "image": {
        "metadata": {
          "name": "a2f15cc10423c165ca221f4a7beb1f2949fb0f5acbbc8e3a0250eb7d5593ae64"
        },
        "dockerImageReference": "172.30.17.3:5001/test/origin-ruby-sample:a2f15cc10423c165ca221f4a7beb1f2949fb0f5acbbc8e3a0250eb7d5593ae64",
        "dockerImageMetadata": {
          "kind": "DockerImage",
          "apiVersion": "1.0",
          "Id": "a2f15cc10423c165ca221f4a7beb1f2949fb0f5acbbc8e3a0250eb7d5593ae64",
          "Parent": "3bb14bfe4832874535814184c13e01527239633627cdc38f18fa186e73a6b62c",
          "Created": "2015-01-23T21:47:04Z",
          "Container": "f81db8980c62d7650683326173a361c3b09f3bc41471918b6319f7df67943b54",
          "ContainerConfig": {
            "Hostname": "f81db8980c62",
            "User": "ruby",
            "AttachStdout": true,
            "ExposedPorts": {
              "9292/tcp": {}
            },
            "OpenStdin": true,
            "StdinOnce": true,
            "Env": [
              "OPENSHIFT_BUILD_NAME=4bf65438-a349-11e4-bead-001c42c44ee1",
              "OPENSHIFT_BUILD_NAMESPACE=test",
              "OPENSHIFT_BUILD_SOURCE=https://github.com/openshift/ruby-hello-world",
              "PATH=/opt/ruby/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin",
              "STI_SCRIPTS_URL=https://raw.githubusercontent.com/openshift/sti-ruby/master/2.0/.sti/bin",
              "APP_ROOT=.",
              "HOME=/opt/ruby"
            ],
            "Cmd": [
              "/bin/sh",
              "-c",
              "tar -C /tmp -xf - \u0026\u0026 /tmp/scripts/assemble"
            ],
            "Image": "openshift/ruby-20-centos7",
            "WorkingDir": "/opt/ruby/src"
          },
          "DockerVersion": "1.4.1-dev",
          "Config": {
            "User": "ruby",
            "ExposedPorts": {
              "9292/tcp": {}
            },
            "Env": [
              "OPENSHIFT_BUILD_NAME=4bf65438-a349-11e4-bead-001c42c44ee1",
              "OPENSHIFT_BUILD_NAMESPACE=test",
              "OPENSHIFT_BUILD_SOURCE=https://github.com/openshift/ruby-hello-world",
              "PATH=/opt/ruby/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin",
              "STI_SCRIPTS_URL=https://raw.githubusercontent.com/openshift/sti-ruby/master/2.0/.sti/bin",
              "APP_ROOT=.",
              "HOME=/opt/ruby"
            ],
            "Cmd": [
              "/tmp/scripts/run"
            ],
            "WorkingDir": "/opt/ruby/src"
          },
          "Architecture": "amd64",
          "Size": 11710004
        },
        "dockerImageMetadataVersion": "1.0"
      },
      "tag": "latest"
    }

3.5.2.2. Referencing Images in Image Streams

An ImageStreamTag is used to reference or retrieve an image for a given image stream and tag. It uses the following convention for its name: <image stream name>:<tag>.

An ImageStreamImage is used to reference or retrieve an image for a given image stream and image name. It uses the following convention for its name: <image stream name>@<name>.
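The two naming conventions above can be told apart mechanically. The following Python sketch, with a hypothetical function name and return shape, splits a reference on @ or : and reports which kind of object it names.

```python
def parse_image_stream_reference(name):
    """Classify '<stream>:<tag>' or '<stream>@<name>' and split it into parts."""
    # Check '@' first: an image name after '@' may itself contain a colon.
    if "@" in name:
        stream, image = name.split("@", 1)
        return {"kind": "ImageStreamImage", "stream": stream, "image": image}
    if ":" in name:
        stream, tag = name.split(":", 1)
        return {"kind": "ImageStreamTag", "stream": stream, "tag": tag}
    raise ValueError(f"not an image stream tag or image reference: {name!r}")


print(parse_image_stream_reference("ruby:latest"))
print(parse_image_stream_reference("ruby@371829c"))
```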

The sample image below is from the ruby image stream and was retrieved by asking for the ImageStreamImage with the name ruby@371829c:

Example 3.3. Definition of an Image Object retrieved via ImageStreamImage

    {
      "kind": "ImageStreamImage",
      "apiVersion": "v1",
      "metadata": {
        "name": "ruby@371829c",
        "uid": "a48b40d7-18e2-11e5-9ba2-001c422dcd49",
        "resourceVersion": "1888",
        "creationTimestamp": "2015-06-22T13:29:00Z"
      },
      "image": {
        "metadata": {
          "name": "371829c6d5cf05924db2ab21ed79dd0937986a817c7940b00cec40616e9b12eb",
          "uid": "a48b40d7-18e2-11e5-9ba2-001c422dcd49",
          "resourceVersion": "1888",
          "creationTimestamp": "2015-06-22T13:29:00Z"
        },
        "dockerImageReference": "openshift/ruby-20-centos7:latest",
        "dockerImageMetadata": {
          "kind": "DockerImage",
          "apiVersion": "1.0",
          "Id": "371829c6d5cf05924db2ab21ed79dd0937986a817c7940b00cec40616e9b12eb",
          "Parent": "8c7059377eaf86bc913e915f064c073ff45552e8921ceeb1a3b7cbf9215ecb66",
          "Created": "2015-06-20T23:02:23Z",
          "ContainerConfig": {},
          "DockerVersion": "1.6.0",
          "Author": "Jakub Hadvig \u003cjhadvig@redhat.com\u003e",
          "Config": {
            "User": "1001",
            "ExposedPorts": {
              "8080/tcp": {}
            },
            "Env": [
              "PATH=/opt/openshift/src/bin:/opt/openshift/bin:/usr/local/sti:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin",
              "STI_SCRIPTS_URL=image:///usr/local/sti",
              "HOME=/opt/openshift/src",
              "BASH_ENV=/opt/openshift/etc/scl_enable",
              "ENV=/opt/openshift/etc/scl_enable",
              "PROMPT_COMMAND=. /opt/openshift/etc/scl_enable",
              "RUBY_VERSION=2.0"
            ],
            "Cmd": [
              "usage"
            ],
            "Image": "8c7059377eaf86bc913e915f064c073ff45552e8921ceeb1a3b7cbf9215ecb66",
            "WorkingDir": "/opt/openshift/src",
            "Labels": {
              "io.openshift.s2i.scripts-url": "image:///usr/local/sti",
              "k8s.io/description": "Platform for building and running Ruby 2.0 applications",
              "k8s.io/display-name": "Ruby 2.0",
              "openshift.io/expose-services": "8080:http",
              "openshift.io/tags": "builder,ruby,ruby20"
            }
          },
          "Architecture": "amd64",
          "Size": 53950504
        },
        "dockerImageMetadataVersion": "1.0"
      }
    }

3.5.2.3. Image Pull Policy

Each container in a pod has a Docker image. Once you have created an image and pushed it to a registry, you can then refer to it in the pod.

When OpenShift creates containers, it uses the container’s imagePullPolicy to determine if the image should be pulled prior to starting the container. There are three possible values for imagePullPolicy:

- Always - always pull the image.

- IfNotPresent - only pull the image if it does not already exist on the node.

- Never - never pull the image.

If a container’s imagePullPolicy parameter is not specified, OpenShift sets it based on the image’s tag:

- If the tag is latest, OpenShift defaults imagePullPolicy to Always.

- Otherwise, OpenShift defaults imagePullPolicy to IfNotPresent.
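The defaulting rule and the three policy values can be expressed as a short Python sketch. The function names are hypothetical, only the name[:tag] form is handled, and an image reference without a tag is treated as latest, as described in the Docker Images section.

```python
def default_pull_policy(image):
    """Pick the default imagePullPolicy from the image's tag."""
    # Look for the tag only after the last slash, so that a registry
    # host:port prefix is not mistaken for a tag.
    last_segment = image.rsplit("/", 1)[-1]
    tag = last_segment.split(":", 1)[1] if ":" in last_segment else "latest"
    return "Always" if tag == "latest" else "IfNotPresent"


def should_pull(policy, present_on_node):
    """Decide whether to pull the image before starting the container."""
    if policy == "Always":
        return True
    if policy == "IfNotPresent":
        return not present_on_node
    if policy == "Never":
        return False
    raise ValueError(f"unknown imagePullPolicy: {policy!r}")


print(default_pull_policy("openshift/origin-docker-registry:v0.6.2"))
print(default_pull_policy("openshift/hello-openshift"))
```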

3.5.2.4. Importing Tag and Image Metadata

An image stream can be configured to import tag and image metadata from an image repository in an external Docker image registry. See Image Registry for more details.

3.5.2.5. Tag Tracking

An image stream can also be configured so that a tag "tracks" another one. For example, you can configure the latest tag to always refer to the current image for the tag "2.0":

    {
      "kind": "ImageStream",
      "apiVersion": "v1",
      "metadata": {
        "name": "ruby"
      },
      "spec": {
        "tags": [
          {
            "name": "latest",
            "from": {
              "kind": "ImageStreamTag",
              "name": "2.0"
            }
          }
        ]
      }
    }

You can also do the same using the oc tag command, which takes the source tag first and the destination tag second:

    $ oc tag ruby:2.0 ruby:latest

3.5.2.6. Tag Removal

You can stop tracking a tag by removing it. For example, you can stop tracking the latest tag you set above:

    $ oc tag -d ruby:latest

The above command removes the tag from the image stream spec, but not from the image stream status. The image stream spec is user-defined, whereas the image stream status reflects the information the system has from the specification. To remove a tag completely from an image stream:

    $ oc delete istag/ruby:latest

3.5.2.7. Importing Images from Insecure Registries

An image stream can be configured to import tag and image metadata from an image repository that uses a self-signed certificate, or from one that uses plain HTTP instead of HTTPS. To do that, add the openshift.io/image.insecureRepository annotation and set it to true. This setting bypasses certificate validation when connecting to the registry.

    kind: ImageStream
    apiVersion: v1
    metadata:
      name: ruby
      annotations:
        openshift.io/image.insecureRepository: "true"
    spec:
      dockerImageRepository: "my.repo.com:5000/myimage"

The above definition only affects importing tag and image metadata. For this image to be used in the cluster (that is, for docker pull to succeed), each node must have Docker configured with the --insecure-registry flag. See Installing Docker in Host Preparation for more information.

3.6. Deployments

3.6.1. Replication Controllers

A replication controller ensures that a specified number of replicas of a pod are running at all times. If pods exit or are deleted, the replication controller acts to instantiate more up to the desired number. Likewise, if there are more running than desired, it deletes as many as necessary to match the number.

The definition of a replication controller consists mainly of:

- The number of replicas desired (which can be adjusted at runtime).

- A pod definition for creating a replicated pod.

- A selector for identifying managed pods.

The selector is a set of labels that all of the pods managed by the replication controller must have. That set of labels is therefore included in the pod definition that the replication controller instantiates. The replication controller uses the selector to determine how many instances of the pod are already running, and adjusts the count as needed.

It is not the job of the replication controller to perform auto-scaling based on load or traffic, as it does not track either; rather, this would require its replica count to be adjusted by an external auto-scaler.
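The counting behavior described in this section can be sketched as a single reconciliation step in Python. This is a simplified model, not the Kubernetes implementation: the function name, the returned (action, count) tuple, and the pod dictionaries are hypothetical, and the replica count is simply an input that something else (a user or an external auto-scaler) sets.

```python
def reconcile(desired_replicas, selector, pods):
    """Compare matching pods against the desired count and report the action needed."""
    # A pod is managed if it carries every label in the selector.
    managed = [
        pod for pod in pods
        if all(pod["labels"].get(key) == value for key, value in selector.items())
    ]
    difference = desired_replicas - len(managed)
    if difference > 0:
        return ("create", difference)
    if difference < 0:
        return ("delete", -difference)
    return ("none", 0)


# Hypothetical cluster state: one matching pod and one unrelated pod.
pods = [
    {"name": "frontend-1-abcde", "labels": {"name": "frontend"}},
    {"name": "database-1-fghij", "labels": {"name": "database"}},
]

print(reconcile(3, {"name": "frontend"}, pods))
```

Pods that do not match the selector are ignored entirely, which is why the labels in the pod template must include those in the selector.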

Replication controllers are a core Kubernetes object, ReplicationController. The Kubernetes documentation has more details on replication controllers.

Here is an example ReplicationController definition with some omissions and callouts:

    apiVersion: v1
    kind: ReplicationController
    metadata:
      name: frontend-1
    spec:
      replicas: 1 # (1)
      selector: # (2)
        name: frontend
      template: # (3)
        metadata:
          labels: # (4)
            name: frontend # (5)
        spec:
          containers:
          - image: openshift/hello-openshift
            name: helloworld
            ports:
            - containerPort: 8080
              protocol: TCP
          restartPolicy: Always

- (1) The number of copies of the pod to run.

- (2) The label selector of the pod to run.

- (3) A template for the pod the controller creates.

- (4) Labels on the pod should include those from the label selector.

- (5) The maximum name length after expanding any parameters is 63 characters.

3.6.2. Deployments and Deployment Configurations

Building on replication controllers, OpenShift adds expanded support for the software development and deployment lifecycle with the concept of deployments. In the simplest case, a deployment just creates a new replication controller and lets it start up pods. However, OpenShift deployments also provide the ability to transition from an existing deployment of an image to a new one and also define hooks to be run before or after creating the replication controller.

The OpenShift DeploymentConfig object defines the following details of a deployment:

- The elements of a ReplicationController definition.

- Triggers for creating a new deployment automatically.

- The strategy for transitioning between deployments.

- Life cycle hooks.

Each time a deployment is triggered, whether manually or automatically, a deployer pod manages the deployment (including scaling down the old replication controller, scaling up the new one, and running hooks). The deployer pod remains for an indefinite amount of time after it completes the deployment in order to retain its logs of the deployment. When a deployment is superseded by another, the previous replication controller is retained to enable easy rollback if needed.

For detailed instructions on how to create and interact with deployments, refer to Deployments.

Here is an example DeploymentConfig definition with some omissions and callouts:

    apiVersion: v1
    kind: DeploymentConfig
    metadata:
      name: frontend
    spec:
      replicas: 5
      selector:
        name: frontend
      template: { ... }
      triggers:
      - type: ConfigChange  # (1)
      - imageChangeParams:
          automatic: true
          containerNames:
          - helloworld
          from:
            kind: ImageStreamTag
            name: hello-openshift:latest
        type: ImageChange  # (2)
      strategy:
        type: Rolling  # (3)

- (1) A ConfigChange trigger causes a new deployment to be created any time the replication controller template changes.

- (2) An ImageChange trigger causes a new deployment to be created each time a new version of the backing image is available in the named image stream.

- (3) The default Rolling strategy makes a downtime-free transition between deployments.
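As a conceptual sketch only, the two trigger types can be modelled as a small decision function in Python. The function `fired_triggers` and its arguments are hypothetical, and treating `automatic: false` as "do not fire" is an assumption of this sketch; it does not reproduce how OpenShift evaluates triggers internally.

```python
def fired_triggers(triggers, template_changed, updated_tags):
    """Return the types of the triggers that would start a new deployment."""
    fired = []
    for trigger in triggers:
        if trigger["type"] == "ConfigChange":
            # Fires whenever the replication controller template changed.
            if template_changed:
                fired.append("ConfigChange")
        elif trigger["type"] == "ImageChange":
            # Fires when the watched image stream tag received a new image.
            params = trigger["imageChangeParams"]
            if params["automatic"] and params["from"]["name"] in updated_tags:
                fired.append("ImageChange")
    return fired


triggers = [
    {"type": "ConfigChange"},
    {
        "type": "ImageChange",
        "imageChangeParams": {
            "automatic": True,
            "containerNames": ["helloworld"],
            "from": {"kind": "ImageStreamTag", "name": "hello-openshift:latest"},
        },
    },
]

print(fired_triggers(triggers, template_changed=True, updated_tags=set()))
# ['ConfigChange']
print(fired_triggers(triggers, template_changed=False, updated_tags={"hello-openshift:latest"}))
# ['ImageChange']
```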

3.7. Routes

3.7.1. Overview

An OpenShift route is a way to expose a service by giving it an externally-reachable hostname like www.example.com.

A defined route and the endpoints identified by its service can be consumed by a router to provide named connectivity that allows external clients to reach your applications. Each route consists of a route name (limited to 63 characters), service selector, and (optionally) security configuration.

3.7.2. Routers

An OpenShift administrator can deploy routers in an OpenShift cluster, which enable routes created by developers to be used by external clients. The routing layer in OpenShift is pluggable, and two available router plug-ins are provided and supported by default.

OpenShift routers provide external host name mapping and load balancing to services over protocols that pass distinguishing information directly to the router; the host name must be present in the protocol in order for the router to determine where to send it.

Router plug-ins assume they can bind to host ports 80 and 443, which allows external traffic to reach the host and then pass through the router. Routers also assume that networking is configured so that they can access all pods in the cluster.

Routers support the following protocols:

- HTTP

- HTTPS (with SNI)

- WebSockets

- TLS with SNI

WebSocket traffic uses the same route conventions and supports the same TLS termination types as other traffic.

A router uses the service selector to find the service and the endpoints backing the service. Service-provided load balancing is bypassed and replaced with the router’s own load balancing. Routers watch the cluster API and automatically update their own configuration according to any relevant changes in the API objects. Routers may be containerized or virtual. Custom routers can be deployed to communicate modifications of API objects to an external routing solution.

In order to reach a router in the first place, requests for host names must resolve via DNS to a router or set of routers. The suggested method is to define a cloud domain with a wildcard DNS entry pointing to a virtual IP backed by multiple router instances on designated nodes. Router VIP configuration is described in the Administration Guide. DNS for addresses outside the cloud domain would need to be configured individually. Other approaches may be feasible.

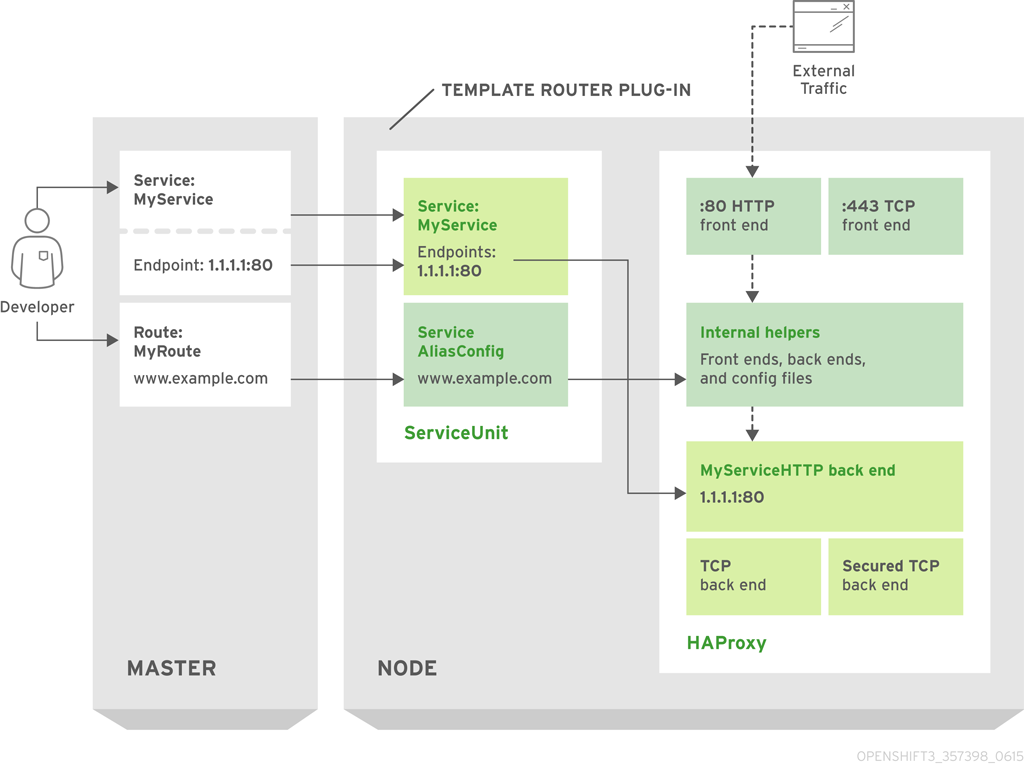

3.7.2.1. Template Routers

A template router is a type of router that provides certain infrastructure information to the underlying router implementation, such as:

- A wrapper that watches endpoints and routes.

- Endpoint and route data, which is saved into a consumable form.

- Passing the internal state to a configurable template and executing the template.

- Calling a reload script.
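The responsibilities listed above can be sketched as a small render-and-reload loop. This is a simplified Python illustration, not the actual plug-in: the template text, the `render_config` and `on_change` functions, and the `reload` callback are all hypothetical stand-ins, and the watch on endpoints and routes is reduced to a plain function call.

```python
from string import Template

# Hypothetical, heavily simplified stand-in for a router configuration template.
BACKEND_TEMPLATE = Template("backend $name\n$servers\n")


def render_config(state: dict[str, list[str]]) -> str:
    """Render the saved route and endpoint state into configuration text."""
    blocks = []
    for route_name in sorted(state):
        servers = "\n".join(
            f"  server srv{index} {address}"
            for index, address in enumerate(state[route_name])
        )
        blocks.append(BACKEND_TEMPLATE.substitute(name=route_name, servers=servers))
    return "".join(blocks)


def on_change(state: dict[str, list[str]], reload) -> str:
    """Called by the watcher whenever routes or endpoints change."""
    config = render_config(state)
    reload(config)  # stands in for calling the reload script
    return config


state = {"frontend": ["10.1.0.5:8080", "10.1.0.6:8080"]}
on_change(state, reload=print)
```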

3.7.3. Available Router Plug-ins

The following router plug-ins are provided and supported in OpenShift. Instructions on deploying these routers are available in Deploying a Router.

3.7.3.1. HAProxy Template Router

The HAProxy template router implementation is the reference implementation for a template router plug-in. It uses the openshift3/ose-haproxy-router repository to run an HAProxy instance alongside the template router plug-in.

The following diagram illustrates how data flows from the master through the plug-in and finally into an HAProxy configuration:

Figure 3.1. HAProxy Router Data Flow

Sticky Sessions

Implementing sticky sessions is up to the underlying router configuration. The default HAProxy template implements sticky sessions using the balance source directive which balances based on the source IP. In addition, the template router plug-in provides the service name and namespace to the underlying implementation. This can be used for more advanced configuration such as implementing stick-tables that synchronize between a set of peers.

Specific configuration for this router implementation is stored in the haproxy-config.template file located in the /var/lib/haproxy/conf directory of the router container.

The balance source directive does not distinguish between external client IP addresses; because of the NAT configuration, the originating IP address (HAProxy remote) is the same. Unless the HAProxy router is running with hostNetwork: true, all external clients will be routed to a single pod.
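The effect described above can be illustrated with a toy source-IP balancer in Python. This is a sketch under stated assumptions: `pick_pod` is a hypothetical function, and CRC32 is used only as a convenient stable hash, not as the hash HAProxy actually applies.

```python
import zlib


def pick_pod(source_ip: str, pods: list[str]) -> str:
    """Choose a pod from a stable hash of the source IP, so one IP always maps to one pod."""
    return pods[zlib.crc32(source_ip.encode("ascii")) % len(pods)]


pods = ["pod-a", "pod-b", "pod-c"]

# The same client address always reaches the same pod (a sticky session).
assert pick_pod("203.0.113.7", pods) == pick_pod("203.0.113.7", pods)

# Behind NAT, every external client appears with the same source address,
# so every request lands on a single pod.
natted_sources = ["10.1.0.1"] * 5
print({pick_pod(ip, pods) for ip in natted_sources})  # a set containing exactly one pod
```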

3.7.3.2. F5 Router

The F5 router plug-in is available starting in OpenShift Enterprise 3.0.2.

The F5 router plug-in integrates with an existing F5 BIG-IP® system in your environment. F5 BIG-IP® version 11.4 or newer is required in order to have the F5 iControl REST API. The F5 router supports unsecured, edge terminated, re-encryption terminated, and passthrough terminated routes matching on HTTP vhost and request path.

The F5 router has additional features over the F5 BIG-IP® support in OpenShift Enterprise 2. When comparing with the OpenShift routing-daemon used in earlier versions, the F5 router additionally supports:

- path-based routing (using policy rules),

- passthrough of encrypted connections (implemented using an iRule that parses the SNI protocol and uses a data group that is maintained by the F5 router for the servername lookup).

Passthrough routes are a special case: path-based routing is technically impossible with passthrough routes because F5 BIG-IP® itself does not see the HTTP request, so it cannot examine the path. The same restriction applies to the template router; it is a technical limitation of passthrough encryption, not a technical limitation of OpenShift.

Routing Traffic to Pods Through the SDN

Because F5 BIG-IP® is external to the OpenShift SDN, a cluster administrator must create a peer-to-peer tunnel between F5 BIG-IP® and a host that is on the SDN, typically an OpenShift node host. This ramp node can be configured as unschedulable for pods so that it will not be doing anything except act as a gateway for the F5 BIG-IP® host. It is also possible to configure multiple such hosts and use the OpenShift ipfailover feature for redundancy; the F5 BIG-IP® host would then need to be configured to use the ipfailover VIP for its tunnel’s remote endpoint.

F5 Integration Details

The operation of the F5 router is similar to that of the OpenShift routing-daemon used in earlier versions. Both use REST API calls to:

- create and delete pools,

- add endpoints to and delete them from those pools, and

- configure policy rules to route to pools based on vhost.

Both also use scp and ssh commands to upload custom TLS/SSL certificates to F5 BIG-IP®.

The F5 router configures pools and policy rules on virtual servers as follows:

- When a user creates or deletes a route on OpenShift, the router creates a pool on F5 BIG-IP® for the route (if no pool already exists) and adds a rule to, or deletes a rule from, the policy of the appropriate vserver: the HTTP vserver for non-TLS routes, or the HTTPS vserver for edge or re-encrypt routes. In the case of edge and re-encrypt routes, the router also uploads and configures the TLS certificate and key. The router supports host- and path-based routes.

  Note: Passthrough routes are a special case: to support those, it is necessary to write an iRule that parses the SNI ClientHello handshake record and looks up the servername in an F5 data-group. The router creates this iRule, associates the iRule with the vserver, and updates the F5 data-group as passthrough routes are created and deleted. Other than this implementation detail, passthrough routes work the same way as other routes.

- When a user creates a service on OpenShift, the router adds a pool to F5 BIG-IP® (if no pool already exists). As endpoints on that service are created and deleted, the router adds and removes corresponding pool members.

- When a user deletes the route and all endpoints associated with a particular pool, the router deletes that pool.

3.7.4. Route Host Names

In order for services to be exposed externally, an OpenShift route allows you to associate a service with an externally-reachable host name. This edge host name is then used to route traffic to the service.

When two routes claim the same host, the oldest route wins. If additional routes with different path fields are defined in the same namespace, those paths will be added. If multiple routes with the same path are used, the oldest takes priority.
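One possible reading of these rules is sketched below in Python. The `RouteClaim` type and the `admitted` function are hypothetical, and the assumption that the oldest claim fixes the owning namespace for a host is an interpretation of the text above rather than a description of the router's code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RouteClaim:
    name: str
    namespace: str
    host: str
    path: str
    created: int  # creation time; a smaller value means an older route


def admitted(claims: list[RouteClaim]) -> list[str]:
    """Return the names of the routes that win their host/path claim, oldest first."""
    host_owner: dict[str, str] = {}
    winners: dict[tuple[str, str], RouteClaim] = {}
    for claim in sorted(claims, key=lambda c: c.created):
        # The oldest claim on a host decides which namespace owns it.
        owner = host_owner.setdefault(claim.host, claim.namespace)
        if owner != claim.namespace:
            continue
        # Within that namespace, the oldest route for each path takes priority.
        winners.setdefault((claim.host, claim.path), claim)
    return [claim.name for claim in winners.values()]


claims = [
    RouteClaim("d", "ns1", "www.example.com", "/test", 4),  # loses: same path, newer than "c"
    RouteClaim("a", "ns1", "www.example.com", "", 1),       # oldest claim on the host
    RouteClaim("b", "ns2", "www.example.com", "", 2),       # loses: host already claimed
    RouteClaim("c", "ns1", "www.example.com", "/test", 3),  # added: new path, same namespace
]
print(admitted(claims))  # ['a', 'c']
```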

Example 3.4. A Route with a Specified Host:

Example 3.5. A Route Without a Host:

    apiVersion: v1
    kind: Route
    metadata:
      name: no-route-hostname
    spec:
      to:
        kind: Service
        name: service-name

If a host name is not provided as part of the route definition, then OpenShift automatically generates one for you. The generated host name is of the form:

<route-name>[-<namespace>].<suffix>

The following example shows the OpenShift-generated host name for the above configuration of a route without a host added to a namespace mynamespace:

Example 3.6. Generated Host Name

    no-route-hostname-mynamespace.router.default.svc.cluster.local

The generated host name suffix is the default routing subdomain router.default.svc.cluster.local.

A cluster administrator can also customize the suffix used as the default routing subdomain for their environment.
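The host name pattern `<route-name>[-<namespace>].<suffix>` can be expressed as a short Python function. This is only an illustration of the format; `generated_host` is a hypothetical name, and the suffix is passed in because an administrator can change it.

```python
from typing import Optional


def generated_host(route_name: str, suffix: str, namespace: Optional[str] = None) -> str:
    """Build a host name of the form <route-name>[-<namespace>].<suffix>."""
    prefix = f"{route_name}-{namespace}" if namespace else route_name
    return f"{prefix}.{suffix}"


print(generated_host("no-route-hostname", "router.default.svc.cluster.local", "mynamespace"))
# no-route-hostname-mynamespace.router.default.svc.cluster.local
```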

3.7.5. Route Types

Routes can be either secured or unsecured. Secure routes provide the ability to use several types of TLS termination to serve certificates to the client. Routers support edge, passthrough, and re-encryption termination.

Example 3.7. Unsecured Route Object YAML Definition

    apiVersion: v1
    kind: Route
    metadata:
      name: route-unsecured
    spec:
      host: www.example.com
      to:
        kind: Service
        name: service-name

Unsecured routes are the simplest to configure because they require no key or certificates, but secured routes keep connections private.

A secured route is one that specifies the TLS termination of the route. The available types of termination are described below.

3.7.6. Path Based Routes

Path based routes specify a path component that can be compared against a URL (which requires that the traffic for the route be HTTP based) such that multiple routes can be served using the same hostname, each with a different path. Routers should match routes based on the most specific path to the least; however, this depends on the router implementation. The following table shows example routes and their accessibility:

Table 3.1. Route Availability

| Route | When Compared to | Accessible |
|---|---|---|
| www.example.com/test | www.example.com/test | Yes |
| www.example.com/test | www.example.com | No |
| www.example.com/test and www.example.com | www.example.com/test | Yes |
| www.example.com/test and www.example.com | www.example.com | Yes |
| www.example.com | www.example.com/test | Yes (Matched by the host, not the route) |
| www.example.com | www.example.com | Yes |

Example 3.8. An Unsecured Route with a Path:

    apiVersion: v1
    kind: Route
    metadata:
      name: route-unsecured
    spec:
      host: www.example.com
      path: "/test"  # (1)
      to:
        kind: Service
        name: service-name

- (1) The path is the only added attribute for a path-based route.

Path-based routing is not available when using passthrough TLS, as the router does not terminate TLS in that case and cannot read the contents of the request.
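The behaviour shown in Table 3.1 can be reproduced with a small most-specific-match sketch in Python. It assumes simple prefix matching on the path, with an empty path standing for a host-only route; actual matching depends on the router implementation, and `match_route` is a hypothetical helper.

```python
def match_route(routes, host, path=""):
    """Return the most specific (host, path) route matching the request, or None."""
    candidates = [
        (route_host, route_path)
        for route_host, route_path in routes
        if route_host == host and path.startswith(route_path)
    ]
    # Prefer the longest (most specific) path among the candidates.
    return max(candidates, key=lambda route: len(route[1]), default=None)


host = "www.example.com"
with_path = [(host, "/test")]
host_only = [(host, "")]

print(match_route(with_path, host, "/test"))              # ('www.example.com', '/test')
print(match_route(with_path, host))                       # None
print(match_route(with_path + host_only, host, "/test"))  # ('www.example.com', '/test')
print(match_route(host_only, host, "/test"))              # ('www.example.com', '')
```

The last call corresponds to the table row marked "Matched by the host, not the route": the host-only route accepts the request even though no route declares the `/test` path.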

3.7.7. Secured Routes

Secured routes specify the TLS termination of the route and, optionally, provide a key and certificate(s).

TLS termination in OpenShift relies on SNI for serving custom certificates. Any non-SNI traffic received on port 443 is handled with TLS termination and a default certificate (which may not match the requested hostname, resulting in validation errors).
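The certificate choice described above amounts to a lookup with a fallback. The following Python sketch is illustrative only; `certificate_for` and the certificate labels are hypothetical, and a real router makes this choice during the TLS handshake.

```python
from typing import Optional


def certificate_for(sni_host: Optional[str], route_certs: dict[str, str], default_cert: str) -> str:
    """Pick the certificate to serve: the route's own if SNI names a known host, else the default."""
    if sni_host is None:
        # Non-SNI traffic: the router cannot tell which host was requested.
        return default_cert
    return route_certs.get(sni_host, default_cert)


route_certs = {"www.example.com": "cert-for-www.example.com"}

print(certificate_for("www.example.com", route_certs, "router-default-cert"))
# cert-for-www.example.com
print(certificate_for(None, route_certs, "router-default-cert"))
# router-default-cert
```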

Secured routes can use any of the following three types of secure TLS termination.

Edge Termination

With edge termination, TLS termination occurs at the router, prior to proxying traffic to its destination. TLS certificates are served by the front end of the router, so they must be configured into the route; otherwise, the router’s default certificate is used for TLS termination.

Example 3.9. A Secured Route Using Edge Termination

    apiVersion: v1
    kind: Route
    metadata:
      name: route-edge-secured  # (1)
    spec:
      host: www.example.com
      to:
        kind: Service
        name: service-name  # (2)
      tls:
        termination: edge  # (3)
        key: |-  # (4)
          -----BEGIN PRIVATE KEY-----
          [...]
          -----END PRIVATE KEY-----
        certificate: |-  # (5)
          -----BEGIN CERTIFICATE-----
          [...]
          -----END CERTIFICATE-----
        caCertificate: |-  # (6)
          -----BEGIN CERTIFICATE-----
          [...]
          -----END CERTIFICATE-----

- (1), (2) The name of the object, which is limited to 63 characters.

- (3) The termination field is edge for edge termination.

- (4) The key field is the contents of the PEM format key file.

- (5) The certificate field is the contents of the PEM format certificate file.

- (6) An optional CA certificate may be required to establish a certificate chain for validation.

Because TLS is terminated at the router, connections from the router to the endpoints over the internal network are not encrypted.

Passthrough Termination

With passthrough termination, encrypted traffic is sent straight to the destination without the router providing TLS termination. Therefore no key or certificate is required.

Example 3.10. A Secured Route Using Passthrough Termination

    apiVersion: v1
    kind: Route
    metadata:
      name: route-passthrough-secured
    spec:
      host: www.example.com
      to:
        kind: Service
        name: service-name
      tls:
        termination: passthrough

The destination pod is responsible for serving certificates for the traffic at the endpoint. This is currently the only method that can support requiring client certificates (also known as two-way authentication).

Re-encryption Termination

Re-encryption is a variation on edge termination where the router terminates TLS with a certificate, then re-encrypts its connection to the endpoint which may have a different certificate. Therefore the full path of the connection is encrypted, even over the internal network. The router uses health checks to determine the authenticity of the host.

Example 3.11. A Secured Route Using Re-Encrypt Termination

    apiVersion: v1
    kind: Route
    metadata:
      name: route-pt-secured  # (1)
    spec:
      host: www.example.com
      to:
        kind: Service
        name: service-name  # (2)
      tls:
        termination: reencrypt  # (3)
        key: [as in edge termination]
        certificate: [as in edge termination]
        caCertificate: [as in edge termination]
        destinationCACertificate: |-  # (4)
          -----BEGIN CERTIFICATE-----
          [...]
          -----END CERTIFICATE-----

- (1), (2) The name of the object, which is limited to 63 characters.

- (3) The termination field is set to reencrypt. Other fields are as in edge termination.

- (4) The destinationCACertificate field specifies a CA certificate to validate the endpoint certificate, securing the connection from the router to the destination. This field is required, but only for re-encryption.
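The field requirements of the three termination types can be summarized in a small checker. This Python sketch is not OpenShift's validation logic: the `TLS_FIELDS` table and `validate_tls` function are hypothetical, and reporting fields outside the table as "not used" is an assumption of the sketch.

```python
# Which tls fields each termination type requires or may carry, as read from the text.
TLS_FIELDS = {
    "edge": {"required": (), "optional": ("key", "certificate", "caCertificate")},
    "passthrough": {"required": (), "optional": ()},
    "reencrypt": {
        "required": ("destinationCACertificate",),
        "optional": ("key", "certificate", "caCertificate"),
    },
}


def validate_tls(tls: dict) -> list[str]:
    """Return a list of problems found in a route's tls section (empty when none)."""
    termination = tls.get("termination")
    if termination not in TLS_FIELDS:
        return [f"unknown termination: {termination!r}"]
    rules = TLS_FIELDS[termination]
    problems = [
        f"{field} is required for {termination} termination"
        for field in rules["required"]
        if field not in tls
    ]
    allowed = {"termination", *rules["required"], *rules["optional"]}
    problems += [
        f"{field} is not used with {termination} termination"
        for field in tls
        if field not in allowed
    ]
    return problems


print(validate_tls({"termination": "passthrough"}))  # []
print(validate_tls({"termination": "reencrypt"}))
# ['destinationCACertificate is required for reencrypt termination']
```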

3.8. Templates

3.8.1. Overview

A template describes a set of objects that can be parameterized and processed to produce a list of objects for creation by OpenShift. The objects to create can include anything that users have permission to create within a project, for example services, build configurations, and deployment configurations. A template may also define a set of labels to apply to every object defined in the template.

Example 3.12. A Simple Template Object Definition (YAML)

    apiVersion: v1
    kind: Template
    metadata:
      name: redis-template  # (1)
      annotations:
        description: "Description"  # (2)
        iconClass: "icon-redis"  # (3)
        tags: "database,nosql"  # (4)
    objects:  # (5)
    - apiVersion: v1
      kind: Pod
      metadata:
        name: redis-master
      spec:
        containers:
        - env:
          - name: REDIS_PASSWORD
            value: ${REDIS_PASSWORD}  # (6)
          image: dockerfile/redis
          name: master
          ports:
          - containerPort: 6379
            protocol: TCP
    parameters:  # (7)
    - description: Password used for Redis authentication
      from: '[A-Z0-9]{8}'  # (8)
      generate: expression
      name: REDIS_PASSWORD
    labels:  # (9)
      redis: master

- (1) The name of the template

- (2) Optional description for the template

- (3) The icon that will be shown in the UI for this template; the name of a CSS class defined in the web console source (search content for "openshift-logos-icon").

- (4) A list of arbitrary tags that this template will have in the UI

- (5) A list of objects the template will create (in this case, a single pod)

- (6) Parameter value that will be substituted during processing

- (7) A list of parameters for the template

- (8) An expression used to generate a random password if not specified

- (9) A list of labels to apply to all objects on create

A template describes a set of related object definitions to be created together, as well as a set of parameters for those objects. For example, an application might consist of a frontend web application backed by a database; each consists of a service object and deployment configuration object, and they share a set of credentials (parameters) for the frontend to authenticate to the backend. The template can be processed, either specifying parameters or allowing them to be automatically generated (for example, a unique DB password), in order to instantiate the list of objects in the template as a cohesive application.

Templates can be processed from a definition in a file or from an existing OpenShift API object. Cluster administrators can define standard templates in the API that are available for all users to process, while users can define their own templates within their own projects.

Administrators and developers can interact with templates using the CLI and web console.

3.8.2. Parameters

Templates allow you to define parameters which take on a value. That value is then substituted wherever the parameter is referenced. References can be defined in any text field in the objects list field.

Each parameter describes a variable and its value. During processing, the value can be set explicitly or generated by OpenShift.
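Parameter substitution can be pictured as a recursive walk over the objects list that replaces `${NAME}` references in every text field. The Python sketch below is a minimal illustration, not the template processor itself; `substitute` is a hypothetical helper, and leaving unknown references untouched is an assumption made here.

```python
import re

PARAM_REF = re.compile(r"\$\{([A-Za-z0-9_]+)\}")


def substitute(value, params: dict[str, str]):
    """Replace ${NAME} references in every string found inside a nested structure."""
    if isinstance(value, str):
        # Unknown parameter names are left as written (an assumption of this sketch).
        return PARAM_REF.sub(lambda m: params.get(m.group(1), m.group(0)), value)
    if isinstance(value, list):
        return [substitute(item, params) for item in value]
    if isinstance(value, dict):
        return {key: substitute(item, params) for key, item in value.items()}
    return value  # numbers, booleans, and None are returned unchanged


container = {
    "env": [{"name": "REDIS_PASSWORD", "value": "${REDIS_PASSWORD}"}],
    "ports": [{"containerPort": 6379}],
}
print(substitute(container, {"REDIS_PASSWORD": "S3CR3T"}))
# {'env': [{'name': 'REDIS_PASSWORD', 'value': 'S3CR3T'}], 'ports': [{'containerPort': 6379}]}
```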

An explicit value can be set as the parameter default using the value field:

    parameters:
      - name: USERNAME
        description: "The user name for Joe"
        value: joe

The generate field can be set to 'expression' to specify generated values. The from field should specify the pattern for generating the value using a pseudo regular expression syntax:

    parameters:
      - name: PASSWORD
        description: "The random user password"
        generate: expression
        from: "[a-zA-Z0-9]{12}"

In the example above, processing generates a random password 12 characters long, consisting of uppercase letters, lowercase letters, and digits.

The syntax available is not a full regular expression syntax. However, you can use \w, \d, and \a modifiers:

- [\w]{10} produces 10 alphabet characters, numbers, and underscores. This follows the PCRE standard and is equal to [a-zA-Z0-9_]{10}.

- [\d]{10} produces 10 numbers. This is equal to [0-9]{10}.

- [\a]{10} produces 10 alphabetical characters. This is equal to [a-zA-Z]{10}.
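To make the pseudo regular expression syntax concrete, the following Python sketch generates values for patterns of the form `[class]{count}`, including the `\w`, `\d`, and `\a` modifiers. It is a simplified stand-in written for illustration; `expand_class` and `generate` are hypothetical names, and the real generator may accept more or less than this.

```python
import re
import secrets
import string

SHORTHAND = {
    r"\w": string.ascii_letters + string.digits + "_",
    r"\d": string.digits,
    r"\a": string.ascii_letters,
}
TOKEN = re.compile(r"\[([^\]]+)\]\{(\d+)\}")


def expand_class(body: str) -> str:
    """Expand a character-class body such as 'a-zA-Z0-9' or '\\w' into its characters."""
    chars = []
    i = 0
    while i < len(body):
        if body[i] == "\\" and body[i:i + 2] in SHORTHAND:
            chars.extend(SHORTHAND[body[i:i + 2]])
            i += 2
        elif i + 2 < len(body) and body[i + 1] == "-":
            # A range such as a-z: include every character between the two ends.
            chars.extend(chr(code) for code in range(ord(body[i]), ord(body[i + 2]) + 1))
            i += 3
        else:
            chars.append(body[i])
            i += 1
    return "".join(dict.fromkeys(chars))  # drop duplicates, keep order


def generate(expression: str) -> str:
    """Replace each [class]{count} token with that many random characters from the class."""
    def replace(match: re.Match) -> str:
        alphabet = expand_class(match.group(1))
        return "".join(secrets.choice(alphabet) for _ in range(int(match.group(2))))
    return TOKEN.sub(replace, expression)


print(generate("[a-zA-Z0-9]{12}"))  # 12 random letters and digits
print(generate(r"[\d]{10}"))        # 10 random digits
```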