Nodes

OpenShift Dedicated Nodes

Abstract

Chapter 1. Overview of nodes

1.1. About nodes

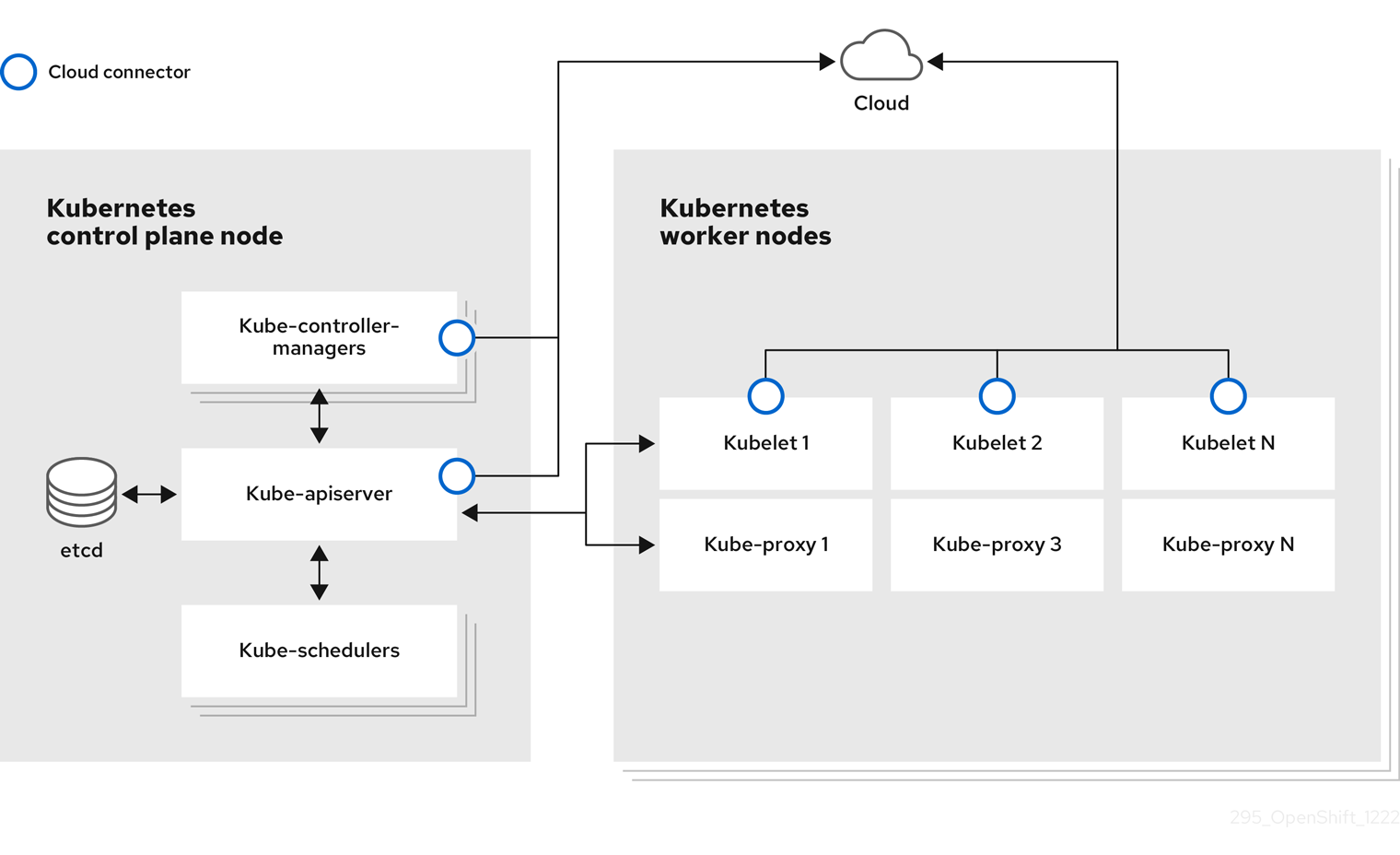

A node is a virtual or bare-metal machine in a Kubernetes cluster. Worker nodes host your application containers, grouped as pods. The control plane nodes run services that are required to control the Kubernetes cluster. In OpenShift Dedicated, the control plane nodes contain more than just the Kubernetes services for managing the OpenShift Dedicated cluster.

Having stable and healthy nodes in a cluster is fundamental to the smooth functioning of your hosted application. In OpenShift Dedicated, you can access, manage, and monitor a node through the Node object representing the node. Using the OpenShift CLI (oc) or the web console, you can perform the following operations on a node.

The following components of a node are responsible for maintaining the running of pods and providing the Kubernetes runtime environment.

- Container runtime

- The container runtime is responsible for running containers. Kubernetes offers several runtimes such as containerd, cri-o, rktlet, and Docker.

- Kubelet

- Kubelet runs on nodes and reads the container manifests. It ensures that the defined containers have started and are running. The kubelet process maintains the state of work and the node server. Kubelet manages network rules and port forwarding. The kubelet manages containers that are created by Kubernetes only.

- Kube-proxy

- Kube-proxy runs on every node in the cluster and maintains the network traffic between the Kubernetes resources. A Kube-proxy ensures that the networking environment is isolated and accessible.

- DNS

- Cluster DNS is a DNS server which serves DNS records for Kubernetes services. Containers started by Kubernetes automatically include this DNS server in their DNS searches.

Read operations

The read operations allow an administrator or a developer to get information about nodes in an OpenShift Dedicated cluster.

- List all the nodes in a cluster.

- Get information about a node, such as memory and CPU usage, health, status, and age.

- List pods running on a node.

Enhancement operations

OpenShift Dedicated allows you to do more than just access and manage nodes; as an administrator, you can perform the following tasks on nodes to make the cluster more efficient, application-friendly, and to provide a better environment for your developers.

- Manage node-level tuning for high-performance applications that require some level of kernel tuning by using the Node Tuning Operator.

- Run background tasks on nodes automatically with daemon sets. You can create and use daemon sets to create shared storage, run a logging pod on every node, or deploy a monitoring agent on all nodes.

1.2. About pods

A pod is one or more containers deployed together on a node. As a cluster administrator, you can define a pod, assign it to run on a healthy node that is ready for scheduling, and manage. A pod runs as long as the containers are running. You cannot change a pod once it is defined and is running. Some operations you can perform when working with pods are:

Read operations

As an administrator, you can get information about pods in a project through the following tasks:

- List pods associated with a project, including information such as the number of replicas and restarts, current status, and age.

- View pod usage statistics such as CPU, memory, and storage consumption.

Management operations

The following list of tasks provides an overview of how an administrator can manage pods in an OpenShift Dedicated cluster.

Control scheduling of pods using the advanced scheduling features available in OpenShift Dedicated:

- Node-to-pod binding rules such as pod affinity, node affinity, and anti-affinity.

- Node labels and selectors.

- Taints and tolerations.

- Pod topology spread constraints.

- Configure how pods behave after a restart using pod controllers and restart policies.

- Limit both egress and ingress traffic on a pod.

- Add and remove volumes to and from any object that has a pod template. A volume is a mounted file system available to all the containers in a pod. Container storage is ephemeral; you can use volumes to persist container data.

Enhancement operations

You can work with pods more easily and efficiently with the help of various tools and features available in OpenShift Dedicated. The following operations involve using those tools and features to better manage pods.

-

Secrets: Some applications need sensitive information, such as passwords and usernames. An administrator can use the

Secretobject to provide sensitive data to pods using theSecretobject.

1.3. About containers

A container is the basic unit of an OpenShift Dedicated application, which comprises the application code packaged along with its dependencies, libraries, and binaries. Containers provide consistency across environments and multiple deployment targets: physical servers, virtual machines (VMs), and private or public cloud.

Linux container technologies are lightweight mechanisms for isolating running processes and limiting access to only designated resources. As an administrator, You can perform various tasks on a Linux container, such as:

OpenShift Dedicated provides specialized containers called Init containers. Init containers run before application containers and can contain utilities or setup scripts not present in an application image. You can use an Init container to perform tasks before the rest of a pod is deployed.

Apart from performing specific tasks on nodes, pods, and containers, you can work with the overall OpenShift Dedicated cluster to keep the cluster efficient and the application pods highly available.

1.4. Glossary of common terms for OpenShift Dedicated nodes

This glossary defines common terms that are used in the node content.

- Container

- It is a lightweight and executable image that comprises software and all its dependencies. Containers virtualize the operating system, as a result, you can run containers anywhere from a data center to a public or private cloud to even a developer’s laptop.

- Daemon set

- Ensures that a replica of the pod runs on eligible nodes in an OpenShift Dedicated cluster.

- egress

- The process of data sharing externally through a network’s outbound traffic from a pod.

- garbage collection

- The process of cleaning up cluster resources, such as terminated containers and images that are not referenced by any running pods.

- Ingress

- Incoming traffic to a pod.

- Job

- A process that runs to completion. A job creates one or more pod objects and ensures that the specified pods are successfully completed.

- Labels

- You can use labels, which are key-value pairs, to organise and select subsets of objects, such as a pod.

- Node

- A worker machine in the OpenShift Dedicated cluster. A node can be either be a virtual machine (VM) or a physical machine.

- Node Tuning Operator

- You can use the Node Tuning Operator to manage node-level tuning by using the TuneD daemon. It ensures custom tuning specifications are passed to all containerized TuneD daemons running in the cluster in the format that the daemons understand. The daemons run on all nodes in the cluster, one per node.

- Self Node Remediation Operator

- The Operator runs on the cluster nodes and identifies and reboots nodes that are unhealthy.

- Pod

- One or more containers with shared resources, such as volume and IP addresses, running in your OpenShift Dedicated cluster. A pod is the smallest compute unit defined, deployed, and managed.

- Toleration

- Indicates that the pod is allowed (but not required) to be scheduled on nodes or node groups with matching taints. You can use tolerations to enable the scheduler to schedule pods with matching taints.

- Taint

- A core object that comprises a key,value, and effect. Taints and tolerations work together to ensure that pods are not scheduled on irrelevant nodes.

Chapter 2. Working with pods

2.1. Using pods

A pod is one or more containers deployed together on one host, and the smallest compute unit that can be defined, deployed, and managed.

2.1.1. Understanding pods

Pods are the rough equivalent of a machine instance (physical or virtual) to a Container. Each pod is allocated its own internal IP address, therefore owning its entire port space, and containers within pods can share their local storage and networking.

Pods have a lifecycle; they are defined, then they are assigned to run on a node, then they run until their container(s) exit or they are removed for some other reason. Pods, depending on policy and exit code, might be removed after exiting, or can be retained to enable access to the logs of their containers.

OpenShift Dedicated treats pods as largely immutable; changes cannot be made to a pod definition while it is running. OpenShift Dedicated implements changes by terminating an existing pod and recreating it with modified configuration, base image(s), or both. Pods are also treated as expendable, and do not maintain state when recreated. Therefore pods should usually be managed by higher-level controllers, rather than directly by users.

Bare pods that are not managed by a replication controller will be not rescheduled upon node disruption.

2.1.2. Example pod configurations

OpenShift Dedicated leverages the Kubernetes concept of a pod, which is one or more containers deployed together on one host, and the smallest compute unit that can be defined, deployed, and managed.

The following is an example definition of a pod. It demonstrates many features of pods, most of which are discussed in other topics and thus only briefly mentioned here:

Pod object definition (YAML)

kind: Pod

apiVersion: v1

metadata:

name: example

labels:

environment: production

app: abc 1

spec:

restartPolicy: Always 2

securityContext: 3

runAsNonRoot: true

seccompProfile:

type: RuntimeDefault

containers: 4

- name: abc

args:

- sleep

- "1000000"

volumeMounts: 5

- name: cache-volume

mountPath: /cache 6

image: registry.access.redhat.com/ubi7/ubi-init:latest 7

securityContext:

allowPrivilegeEscalation: false

runAsNonRoot: true

capabilities:

drop: ["ALL"]

resources:

limits:

memory: "100Mi"

cpu: "1"

requests:

memory: "100Mi"

cpu: "1"

volumes: 8

- name: cache-volume

emptyDir:

sizeLimit: 500Mi

- 1

- Pods can be "tagged" with one or more labels, which can then be used to select and manage groups of pods in a single operation. The labels are stored in key/value format in the

metadatahash. - 2

- The pod restart policy with possible values

Always,OnFailure, andNever. The default value isAlways. - 3

- OpenShift Dedicated defines a security context for containers which specifies whether they are allowed to run as privileged containers, run as a user of their choice, and more. The default context is very restrictive but administrators can modify this as needed.

- 4

containersspecifies an array of one or more container definitions.- 5

- The container specifies where external storage volumes are mounted within the container.

- 6

- Specify the volumes to provide for the pod. Volumes mount at the specified path. Do not mount to the container root,

/, or any path that is the same in the host and the container. This can corrupt your host system if the container is sufficiently privileged, such as the host/dev/ptsfiles. It is safe to mount the host by using/host. - 7

- Each container in the pod is instantiated from its own container image.

- 8

- The pod defines storage volumes that are available to its container(s) to use.

If you attach persistent volumes that have high file counts to pods, those pods can fail or can take a long time to start. For more information, see When using Persistent Volumes with high file counts in OpenShift, why do pods fail to start or take an excessive amount of time to achieve "Ready" state?.

This pod definition does not include attributes that are filled by OpenShift Dedicated automatically after the pod is created and its lifecycle begins. The Kubernetes pod documentation has details about the functionality and purpose of pods.

2.1.3. Additional resources

- For more information on pods and storage see Understanding persistent storage and Understanding ephemeral storage.

2.2. Viewing pods

As an administrator, you can view the pods in your cluster and to determine the health of those pods and the cluster as a whole.

2.2.1. About pods

OpenShift Dedicated leverages the Kubernetes concept of a pod, which is one or more containers deployed together on one host, and the smallest compute unit that can be defined, deployed, and managed. Pods are the rough equivalent of a machine instance (physical or virtual) to a container.

You can view a list of pods associated with a specific project or view usage statistics about pods.

2.2.2. Viewing pods in a project

You can view a list of pods associated with the current project, including the number of replica, the current status, number or restarts and the age of the pod.

Procedure

To view the pods in a project:

Change to the project:

$ oc project <project-name>

Run the following command:

$ oc get pods

For example:

$ oc get pods

Example output

NAME READY STATUS RESTARTS AGE console-698d866b78-bnshf 1/1 Running 2 165m console-698d866b78-m87pm 1/1 Running 2 165m

Add the

-o wideflags to view the pod IP address and the node where the pod is located.$ oc get pods -o wide

Example output

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE console-698d866b78-bnshf 1/1 Running 2 166m 10.128.0.24 ip-10-0-152-71.ec2.internal <none> console-698d866b78-m87pm 1/1 Running 2 166m 10.129.0.23 ip-10-0-173-237.ec2.internal <none>

2.2.3. Viewing pod usage statistics

You can display usage statistics about pods, which provide the runtime environments for containers. These usage statistics include CPU, memory, and storage consumption.

Prerequisites

-

You must have

cluster-readerpermission to view the usage statistics. - Metrics must be installed to view the usage statistics.

Procedure

To view the usage statistics:

Run the following command:

$ oc adm top pods

For example:

$ oc adm top pods -n openshift-console

Example output

NAME CPU(cores) MEMORY(bytes) console-7f58c69899-q8c8k 0m 22Mi console-7f58c69899-xhbgg 0m 25Mi downloads-594fcccf94-bcxk8 3m 18Mi downloads-594fcccf94-kv4p6 2m 15Mi

Run the following command to view the usage statistics for pods with labels:

$ oc adm top pod --selector=''

You must choose the selector (label query) to filter on. Supports

=,==, and!=.For example:

$ oc adm top pod --selector='name=my-pod'

2.2.4. Viewing resource logs

You can view the log for various resources in the OpenShift CLI (oc) and web console. Logs read from the tail, or end, of the log.

Prerequisites

-

Access to the OpenShift CLI (

oc).

Procedure (UI)

In the OpenShift Dedicated console, navigate to Workloads → Pods or navigate to the pod through the resource you want to investigate.

NoteSome resources, such as builds, do not have pods to query directly. In such instances, you can locate the Logs link on the Details page for the resource.

- Select a project from the drop-down menu.

- Click the name of the pod you want to investigate.

- Click Logs.

Procedure (CLI)

View the log for a specific pod:

$ oc logs -f <pod_name> -c <container_name>

where:

-f- Optional: Specifies that the output follows what is being written into the logs.

<pod_name>- Specifies the name of the pod.

<container_name>- Optional: Specifies the name of a container. When a pod has more than one container, you must specify the container name.

For example:

$ oc logs ruby-58cd97df55-mww7r

$ oc logs -f ruby-57f7f4855b-znl92 -c ruby

The contents of log files are printed out.

View the log for a specific resource:

$ oc logs <object_type>/<resource_name> 1- 1

- Specifies the resource type and name.

For example:

$ oc logs deployment/ruby

The contents of log files are printed out.

2.3. Configuring an OpenShift Dedicated cluster for pods

As an administrator, you can create and maintain an efficient cluster for pods.

By keeping your cluster efficient, you can provide a better environment for your developers using such tools as what a pod does when it exits, ensuring that the required number of pods is always running, when to restart pods designed to run only once, limit the bandwidth available to pods, and how to keep pods running during disruptions.

2.3.1. Configuring how pods behave after restart

A pod restart policy determines how OpenShift Dedicated responds when Containers in that pod exit. The policy applies to all Containers in that pod.

The possible values are:

-

Always- Tries restarting a successfully exited Container on the pod continuously, with an exponential back-off delay (10s, 20s, 40s) capped at 5 minutes. The default isAlways. -

OnFailure- Tries restarting a failed Container on the pod with an exponential back-off delay (10s, 20s, 40s) capped at 5 minutes. -

Never- Does not try to restart exited or failed Containers on the pod. Pods immediately fail and exit.

After the pod is bound to a node, the pod will never be bound to another node. This means that a controller is necessary in order for a pod to survive node failure:

| Condition | Controller Type | Restart Policy |

|---|---|---|

| Pods that are expected to terminate (such as batch computations) | Job |

|

| Pods that are expected to not terminate (such as web servers) | Replication controller |

|

| Pods that must run one-per-machine | Daemon set | Any |

If a Container on a pod fails and the restart policy is set to OnFailure, the pod stays on the node and the Container is restarted. If you do not want the Container to restart, use a restart policy of Never.

If an entire pod fails, OpenShift Dedicated starts a new pod. Developers must address the possibility that applications might be restarted in a new pod. In particular, applications must handle temporary files, locks, incomplete output, and so forth caused by previous runs.

Kubernetes architecture expects reliable endpoints from cloud providers. When a cloud provider is down, the kubelet prevents OpenShift Dedicated from restarting.

If the underlying cloud provider endpoints are not reliable, do not install a cluster using cloud provider integration. Install the cluster as if it was in a no-cloud environment. It is not recommended to toggle cloud provider integration on or off in an installed cluster.

For details on how OpenShift Dedicated uses restart policy with failed Containers, see the Example States in the Kubernetes documentation.

2.3.2. Limiting the bandwidth available to pods

You can apply quality-of-service traffic shaping to a pod and effectively limit its available bandwidth. Egress traffic (from the pod) is handled by policing, which simply drops packets in excess of the configured rate. Ingress traffic (to the pod) is handled by shaping queued packets to effectively handle data. The limits you place on a pod do not affect the bandwidth of other pods.

Procedure

To limit the bandwidth on a pod:

Write an object definition JSON file, and specify the data traffic speed using

kubernetes.io/ingress-bandwidthandkubernetes.io/egress-bandwidthannotations. For example, to limit both pod egress and ingress bandwidth to 10M/s:Limited

Podobject definition{ "kind": "Pod", "spec": { "containers": [ { "image": "openshift/hello-openshift", "name": "hello-openshift" } ] }, "apiVersion": "v1", "metadata": { "name": "iperf-slow", "annotations": { "kubernetes.io/ingress-bandwidth": "10M", "kubernetes.io/egress-bandwidth": "10M" } } }Create the pod using the object definition:

$ oc create -f <file_or_dir_path>

2.3.3. Understanding how to use pod disruption budgets to specify the number of pods that must be up

A pod disruption budget allows the specification of safety constraints on pods during operations, such as draining a node for maintenance.

PodDisruptionBudget is an API object that specifies the minimum number or percentage of replicas that must be up at a time. Setting these in projects can be helpful during node maintenance (such as scaling a cluster down or a cluster upgrade) and is only honored on voluntary evictions (not on node failures).

A PodDisruptionBudget object’s configuration consists of the following key parts:

- A label selector, which is a label query over a set of pods.

An availability level, which specifies the minimum number of pods that must be available simultaneously, either:

-

minAvailableis the number of pods must always be available, even during a disruption. -

maxUnavailableis the number of pods can be unavailable during a disruption.

-

Available refers to the number of pods that has condition Ready=True. Ready=True refers to the pod that is able to serve requests and should be added to the load balancing pools of all matching services.

A maxUnavailable of 0% or 0 or a minAvailable of 100% or equal to the number of replicas is permitted but can block nodes from being drained.

The default setting for maxUnavailable is 1 for all the machine config pools in OpenShift Dedicated. It is recommended to not change this value and update one control plane node at a time. Do not change this value to 3 for the control plane pool.

You can check for pod disruption budgets across all projects with the following:

$ oc get poddisruptionbudget --all-namespaces

Example output

NAMESPACE NAME MIN AVAILABLE MAX UNAVAILABLE ALLOWED DISRUPTIONS AGE openshift-apiserver openshift-apiserver-pdb N/A 1 1 121m openshift-cloud-controller-manager aws-cloud-controller-manager 1 N/A 1 125m openshift-cloud-credential-operator pod-identity-webhook 1 N/A 1 117m openshift-cluster-csi-drivers aws-ebs-csi-driver-controller-pdb N/A 1 1 121m openshift-cluster-storage-operator csi-snapshot-controller-pdb N/A 1 1 122m openshift-cluster-storage-operator csi-snapshot-webhook-pdb N/A 1 1 122m openshift-console console N/A 1 1 116m #...

The PodDisruptionBudget is considered healthy when there are at least minAvailable pods running in the system. Every pod above that limit can be evicted.

Depending on your pod priority and preemption settings, lower-priority pods might be removed despite their pod disruption budget requirements.

2.3.3.1. Specifying the number of pods that must be up with pod disruption budgets

You can use a PodDisruptionBudget object to specify the minimum number or percentage of replicas that must be up at a time.

Procedure

To configure a pod disruption budget:

Create a YAML file with the an object definition similar to the following:

apiVersion: policy/v1 1 kind: PodDisruptionBudget metadata: name: my-pdb spec: minAvailable: 2 2 selector: 3 matchLabels: name: my-pod

- 1

PodDisruptionBudgetis part of thepolicy/v1API group.- 2

- The minimum number of pods that must be available simultaneously. This can be either an integer or a string specifying a percentage, for example,

20%. - 3

- A label query over a set of resources. The result of

matchLabelsandmatchExpressionsare logically conjoined. Leave this parameter blank, for exampleselector {}, to select all pods in the project.

Or:

apiVersion: policy/v1 1 kind: PodDisruptionBudget metadata: name: my-pdb spec: maxUnavailable: 25% 2 selector: 3 matchLabels: name: my-pod

- 1

PodDisruptionBudgetis part of thepolicy/v1API group.- 2

- The maximum number of pods that can be unavailable simultaneously. This can be either an integer or a string specifying a percentage, for example,

20%. - 3

- A label query over a set of resources. The result of

matchLabelsandmatchExpressionsare logically conjoined. Leave this parameter blank, for exampleselector {}, to select all pods in the project.

Run the following command to add the object to project:

$ oc create -f </path/to/file> -n <project_name>

2.4. Providing sensitive data to pods by using secrets

Additional resources

Some applications need sensitive information, such as passwords and user names, that you do not want developers to have.

As an administrator, you can use Secret objects to provide this information without exposing that information in clear text.

2.4.1. Understanding secrets

The Secret object type provides a mechanism to hold sensitive information such as passwords, OpenShift Dedicated client configuration files, private source repository credentials, and so on. Secrets decouple sensitive content from the pods. You can mount secrets into containers using a volume plugin or the system can use secrets to perform actions on behalf of a pod.

Key properties include:

- Secret data can be referenced independently from its definition.

- Secret data volumes are backed by temporary file-storage facilities (tmpfs) and never come to rest on a node.

- Secret data can be shared within a namespace.

YAML Secret object definition

apiVersion: v1 kind: Secret metadata: name: test-secret namespace: my-namespace type: Opaque 1 data: 2 username: <username> 3 password: <password> stringData: 4 hostname: myapp.mydomain.com 5

- 1

- Indicates the structure of the secret’s key names and values.

- 2

- The allowable format for the keys in the

datafield must meet the guidelines in the DNS_SUBDOMAIN value in the Kubernetes identifiers glossary. - 3

- The value associated with keys in the

datamap must be base64 encoded. - 4

- Entries in the

stringDatamap are converted to base64 and the entry will then be moved to thedatamap automatically. This field is write-only; the value will only be returned via thedatafield. - 5

- The value associated with keys in the

stringDatamap is made up of plain text strings.

You must create a secret before creating the pods that depend on that secret.

When creating secrets:

- Create a secret object with secret data.

- Update the pod’s service account to allow the reference to the secret.

-

Create a pod, which consumes the secret as an environment variable or as a file (using a

secretvolume).

2.4.1.1. Types of secrets

The value in the type field indicates the structure of the secret’s key names and values. The type can be used to enforce the presence of user names and keys in the secret object. If you do not want validation, use the opaque type, which is the default.

Specify one of the following types to trigger minimal server-side validation to ensure the presence of specific key names in the secret data:

-

kubernetes.io/service-account-token. Uses a service account token. -

kubernetes.io/basic-auth. Use with Basic Authentication. -

kubernetes.io/ssh-auth. Use with SSH Key Authentication. -

kubernetes.io/tls. Use with TLS certificate authorities.

Specify type: Opaque if you do not want validation, which means the secret does not claim to conform to any convention for key names or values. An opaque secret, allows for unstructured key:value pairs that can contain arbitrary values.

You can specify other arbitrary types, such as example.com/my-secret-type. These types are not enforced server-side, but indicate that the creator of the secret intended to conform to the key/value requirements of that type.

For examples of different secret types, see the code samples in Using Secrets.

2.4.1.2. Secret data keys

Secret keys must be in a DNS subdomain.

2.4.1.3. Automatically generated secrets

By default, OpenShift Dedicated creates the following secrets for each service account:

- A dockercfg image pull secret

A service account token secret

NotePrior to OpenShift Dedicated 4.11, a second service account token secret was generated when a service account was created. This service account token secret was used to access the Kubernetes API.

Starting with OpenShift Dedicated 4.11, this second service account token secret is no longer created. This is because the

LegacyServiceAccountTokenNoAutoGenerationupstream Kubernetes feature gate was enabled, which stops the automatic generation of secret-based service account tokens to access the Kubernetes API.After upgrading to 4, any existing service account token secrets are not deleted and continue to function.

This service account token secret and docker configuration image pull secret are necessary to integrate the OpenShift image registry into the cluster’s user authentication and authorization system.

However, if you do not enable the ImageRegistry capability or if you disable the integrated OpenShift image registry in the Cluster Image Registry Operator’s configuration, these secrets are not generated for each service account.

Do not rely on these automatically generated secrets for your own use; they might be removed in a future OpenShift Dedicated release.

Workloads are automatically injected with a projected volume to obtain a bound service account token. If your workload needs an additional service account token, add an additional projected volume in your workload manifest. Bound service account tokens are more secure than service account token secrets for the following reasons:

- Bound service account tokens have a bounded lifetime.

- Bound service account tokens contain audiences.

- Bound service account tokens can be bound to pods or secrets and the bound tokens are invalidated when the bound object is removed.

For more information, see Configuring bound service account tokens using volume projection.

You can also manually create a service account token secret to obtain a token, if the security exposure of a non-expiring token in a readable API object is acceptable to you. For more information, see Creating a service account token secret.

Additional resources

- For information about creating a service account token secret, see Creating a service account token secret.

2.4.2. Understanding how to create secrets

As an administrator you must create a secret before developers can create the pods that depend on that secret.

When creating secrets:

Create a secret object that contains the data you want to keep secret. The specific data required for each secret type is descibed in the following sections.

Example YAML object that creates an opaque secret

apiVersion: v1 kind: Secret metadata: name: test-secret type: Opaque 1 data: 2 username: <username> password: <password> stringData: 3 hostname: myapp.mydomain.com secret.properties: | property1=valueA property2=valueB

Use either the

dataorstringdatafields, not both.Update the pod’s service account to reference the secret:

YAML of a service account that uses a secret

apiVersion: v1 kind: ServiceAccount ... secrets: - name: test-secret

Create a pod, which consumes the secret as an environment variable or as a file (using a

secretvolume):YAML of a pod populating files in a volume with secret data

apiVersion: v1 kind: Pod metadata: name: secret-example-pod spec: securityContext: runAsNonRoot: true seccompProfile: type: RuntimeDefault containers: - name: secret-test-container image: busybox command: [ "/bin/sh", "-c", "cat /etc/secret-volume/*" ] volumeMounts: 1 - name: secret-volume mountPath: /etc/secret-volume 2 readOnly: true 3 securityContext: allowPrivilegeEscalation: false capabilities: drop: [ALL] volumes: - name: secret-volume secret: secretName: test-secret 4 restartPolicy: Never- 1

- Add a

volumeMountsfield to each container that needs the secret. - 2

- Specifies an unused directory name where you would like the secret to appear. Each key in the secret data map becomes the filename under

mountPath. - 3

- Set to

true. If true, this instructs the driver to provide a read-only volume. - 4

- Specifies the name of the secret.

YAML of a pod populating environment variables with secret data

apiVersion: v1 kind: Pod metadata: name: secret-example-pod spec: securityContext: runAsNonRoot: true seccompProfile: type: RuntimeDefault containers: - name: secret-test-container image: busybox command: [ "/bin/sh", "-c", "export" ] env: - name: TEST_SECRET_USERNAME_ENV_VAR valueFrom: secretKeyRef: 1 name: test-secret key: username securityContext: allowPrivilegeEscalation: false capabilities: drop: [ALL] restartPolicy: Never- 1

- Specifies the environment variable that consumes the secret key.

YAML of a build config populating environment variables with secret data

apiVersion: build.openshift.io/v1 kind: BuildConfig metadata: name: secret-example-bc spec: strategy: sourceStrategy: env: - name: TEST_SECRET_USERNAME_ENV_VAR valueFrom: secretKeyRef: 1 name: test-secret key: username from: kind: ImageStreamTag namespace: openshift name: 'cli:latest'- 1

- Specifies the environment variable that consumes the secret key.

2.4.2.1. Secret creation restrictions

To use a secret, a pod needs to reference the secret. A secret can be used with a pod in three ways:

- To populate environment variables for containers.

- As files in a volume mounted on one or more of its containers.

- By kubelet when pulling images for the pod.

Volume type secrets write data into the container as a file using the volume mechanism. Image pull secrets use service accounts for the automatic injection of the secret into all pods in a namespace.

When a template contains a secret definition, the only way for the template to use the provided secret is to ensure that the secret volume sources are validated and that the specified object reference actually points to a Secret object. Therefore, a secret needs to be created before any pods that depend on it. The most effective way to ensure this is to have it get injected automatically through the use of a service account.

Secret API objects reside in a namespace. They can only be referenced by pods in that same namespace.

Individual secrets are limited to 1MB in size. This is to discourage the creation of large secrets that could exhaust apiserver and kubelet memory. However, creation of a number of smaller secrets could also exhaust memory.

2.4.2.2. Creating an opaque secret

As an administrator, you can create an opaque secret, which allows you to store unstructured key:value pairs that can contain arbitrary values.

Procedure

Create a

Secretobject in a YAML file on a control plane node.For example:

apiVersion: v1 kind: Secret metadata: name: mysecret type: Opaque 1 data: username: <username> password: <password>- 1

- Specifies an opaque secret.

Use the following command to create a

Secretobject:$ oc create -f <filename>.yaml

To use the secret in a pod:

- Update the pod’s service account to reference the secret, as shown in the "Understanding how to create secrets" section.

-

Create the pod, which consumes the secret as an environment variable or as a file (using a

secretvolume), as shown in the "Understanding how to create secrets" section.

Additional resources

- For more information on using secrets in pods, see Understanding how to create secrets.

2.4.2.3. Creating a service account token secret

As an administrator, you can create a service account token secret, which allows you to distribute a service account token to applications that must authenticate to the API.

It is recommended to obtain bound service account tokens using the TokenRequest API instead of using service account token secrets. The tokens obtained from the TokenRequest API are more secure than the tokens stored in secrets, because they have a bounded lifetime and are not readable by other API clients.

You should create a service account token secret only if you cannot use the TokenRequest API and if the security exposure of a non-expiring token in a readable API object is acceptable to you.

See the Additional resources section that follows for information on creating bound service account tokens.

Procedure

Create a

Secretobject in a YAML file on a control plane node:Example

secretobject:apiVersion: v1 kind: Secret metadata: name: secret-sa-sample annotations: kubernetes.io/service-account.name: "sa-name" 1 type: kubernetes.io/service-account-token 2Use the following command to create the

Secretobject:$ oc create -f <filename>.yaml

To use the secret in a pod:

- Update the pod’s service account to reference the secret, as shown in the "Understanding how to create secrets" section.

-

Create the pod, which consumes the secret as an environment variable or as a file (using a

secretvolume), as shown in the "Understanding how to create secrets" section.

Additional resources

- For more information on using secrets in pods, see Understanding how to create secrets.

2.4.2.4. Creating a basic authentication secret

As an administrator, you can create a basic authentication secret, which allows you to store the credentials needed for basic authentication. When using this secret type, the data parameter of the Secret object must contain the following keys encoded in the base64 format:

-

username: the user name for authentication -

password: the password or token for authentication

You can use the stringData parameter to use clear text content.

Procedure

Create a

Secretobject in a YAML file on a control plane node:Example

secretobjectapiVersion: v1 kind: Secret metadata: name: secret-basic-auth type: kubernetes.io/basic-auth 1 data: stringData: 2 username: admin password: <password>

Use the following command to create the

Secretobject:$ oc create -f <filename>.yaml

To use the secret in a pod:

- Update the pod’s service account to reference the secret, as shown in the "Understanding how to create secrets" section.

-

Create the pod, which consumes the secret as an environment variable or as a file (using a

secretvolume), as shown in the "Understanding how to create secrets" section.

Additional resources

- For more information on using secrets in pods, see Understanding how to create secrets.

2.4.2.5. Creating an SSH authentication secret

As an administrator, you can create an SSH authentication secret, which allows you to store data used for SSH authentication. When using this secret type, the data parameter of the Secret object must contain the SSH credential to use.

Procedure

Create a

Secretobject in a YAML file on a control plane node:Example

secretobject:apiVersion: v1 kind: Secret metadata: name: secret-ssh-auth type: kubernetes.io/ssh-auth 1 data: ssh-privatekey: | 2 MIIEpQIBAAKCAQEAulqb/Y ...

Use the following command to create the

Secretobject:$ oc create -f <filename>.yaml

To use the secret in a pod:

- Update the pod’s service account to reference the secret, as shown in the "Understanding how to create secrets" section.

-

Create the pod, which consumes the secret as an environment variable or as a file (using a

secretvolume), as shown in the "Understanding how to create secrets" section.

Additional resources

2.4.2.6. Creating a Docker configuration secret

As an administrator, you can create a Docker configuration secret, which allows you to store the credentials for accessing a container image registry.

-

kubernetes.io/dockercfg. Use this secret type to store your local Docker configuration file. Thedataparameter of thesecretobject must contain the contents of a.dockercfgfile encoded in the base64 format. -

kubernetes.io/dockerconfigjson. Use this secret type to store your local Docker configuration JSON file. Thedataparameter of thesecretobject must contain the contents of a.docker/config.jsonfile encoded in the base64 format.

Procedure

Create a

Secretobject in a YAML file on a control plane node.Example Docker configuration

secretobjectapiVersion: v1 kind: Secret metadata: name: secret-docker-cfg namespace: my-project type: kubernetes.io/dockerconfig 1 data: .dockerconfig:bm5ubm5ubm5ubm5ubm5ubm5ubm5ubmdnZ2dnZ2dnZ2dnZ2dnZ2dnZ2cgYXV0aCBrZXlzCg== 2

Example Docker configuration JSON

secretobjectapiVersion: v1 kind: Secret metadata: name: secret-docker-json namespace: my-project type: kubernetes.io/dockerconfig 1 data: .dockerconfigjson:bm5ubm5ubm5ubm5ubm5ubm5ubm5ubmdnZ2dnZ2dnZ2dnZ2dnZ2dnZ2cgYXV0aCBrZXlzCg== 2

Use the following command to create the

Secretobject$ oc create -f <filename>.yaml

To use the secret in a pod:

- Update the pod’s service account to reference the secret, as shown in the "Understanding how to create secrets" section.

-

Create the pod, which consumes the secret as an environment variable or as a file (using a

secretvolume), as shown in the "Understanding how to create secrets" section.

Additional resources

- For more information on using secrets in pods, see Understanding how to create secrets.

2.4.2.7. Creating a secret using the web console

You can create secrets using the web console.

Procedure

- Navigate to Workloads → Secrets.

Click Create → From YAML.

Edit the YAML manually to your specifications, or drag and drop a file into the YAML editor. For example:

apiVersion: v1 kind: Secret metadata: name: example namespace: <namespace> type: Opaque 1 data: username: <base64 encoded username> password: <base64 encoded password> stringData: 2 hostname: myapp.mydomain.com

- 1

- This example specifies an opaque secret; however, you may see other secret types such as service account token secret, basic authentication secret, SSH authentication secret, or a secret that uses Docker configuration.

- 2

- Entries in the

stringDatamap are converted to base64 and the entry will then be moved to thedatamap automatically. This field is write-only; the value will only be returned via thedatafield.

- Click Create.

Click Add Secret to workload.

- From the drop-down menu, select the workload to add.

- Click Save.

2.4.3. Understanding how to update secrets

When you modify the value of a secret, the value (used by an already running pod) will not dynamically change. To change a secret, you must delete the original pod and create a new pod (perhaps with an identical PodSpec).

Updating a secret follows the same workflow as deploying a new Container image. You can use the kubectl rolling-update command.

The resourceVersion value in a secret is not specified when it is referenced. Therefore, if a secret is updated at the same time as pods are starting, the version of the secret that is used for the pod is not defined.

Currently, it is not possible to check the resource version of a secret object that was used when a pod was created. It is planned that pods will report this information, so that a controller could restart ones using an old resourceVersion. In the interim, do not update the data of existing secrets, but create new ones with distinct names.

2.4.4. Creating and using secrets

As an administrator, you can create a service account token secret. This allows you to distribute a service account token to applications that must authenticate to the API.

Procedure

Create a service account in your namespace by running the following command:

$ oc create sa <service_account_name> -n <your_namespace>

Save the following YAML example to a file named

service-account-token-secret.yaml. The example includes aSecretobject configuration that you can use to generate a service account token:apiVersion: v1 kind: Secret metadata: name: <secret_name> 1 annotations: kubernetes.io/service-account.name: "sa-name" 2 type: kubernetes.io/service-account-token 3

Generate the service account token by applying the file:

$ oc apply -f service-account-token-secret.yaml

Get the service account token from the secret by running the following command:

$ oc get secret <sa_token_secret> -o jsonpath='{.data.token}' | base64 --decode 1Example output

ayJhbGciOiJSUzI1NiIsImtpZCI6IklOb2dtck1qZ3hCSWpoNnh5YnZhSE9QMkk3YnRZMVZoclFfQTZfRFp1YlUifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJkZWZhdWx0Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZWNyZXQubmFtZSI6ImJ1aWxkZXItdG9rZW4tdHZrbnIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiYnVpbGRlciIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjNmZGU2MGZmLTA1NGYtNDkyZi04YzhjLTNlZjE0NDk3MmFmNyIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDpkZWZhdWx0OmJ1aWxkZXIifQ.OmqFTDuMHC_lYvvEUrjr1x453hlEEHYcxS9VKSzmRkP1SiVZWPNPkTWlfNRp6bIUZD3U6aN3N7dMSN0eI5hu36xPgpKTdvuckKLTCnelMx6cxOdAbrcw1mCmOClNscwjS1KO1kzMtYnnq8rXHiMJELsNlhnRyyIXRTtNBsy4t64T3283s3SLsancyx0gy0ujx-Ch3uKAKdZi5iT-I8jnnQ-ds5THDs2h65RJhgglQEmSxpHrLGZFmyHAQI-_SjvmHZPXEc482x3SkaQHNLqpmrpJorNqh1M8ZHKzlujhZgVooMvJmWPXTb2vnvi3DGn2XI-hZxl1yD2yGH1RBpYUHA

- 1

- Replace <sa_token_secret> with the name of your service token secret.

Use your service account token to authenticate with the API of your cluster:

$ curl -X GET <openshift_cluster_api> --header "Authorization: Bearer <token>" 1 2

2.4.5. About using signed certificates with secrets

To secure communication to your service, you can configure OpenShift Dedicated to generate a signed serving certificate/key pair that you can add into a secret in a project.

A service serving certificate secret is intended to support complex middleware applications that need out-of-the-box certificates. It has the same settings as the server certificates generated by the administrator tooling for nodes and masters.

Service Pod spec configured for a service serving certificates secret.

apiVersion: v1

kind: Service

metadata:

name: registry

annotations:

service.beta.openshift.io/serving-cert-secret-name: registry-cert1

# ...

- 1

- Specify the name for the certificate

Other pods can trust cluster-created certificates (which are only signed for internal DNS names), by using the CA bundle in the /var/run/secrets/kubernetes.io/serviceaccount/service-ca.crt file that is automatically mounted in their pod.

The signature algorithm for this feature is x509.SHA256WithRSA. To manually rotate, delete the generated secret. A new certificate is created.

2.4.5.1. Generating signed certificates for use with secrets

To use a signed serving certificate/key pair with a pod, create or edit the service to add the service.beta.openshift.io/serving-cert-secret-name annotation, then add the secret to the pod.

Procedure

To create a service serving certificate secret:

-

Edit the

Podspec for your service. Add the

service.beta.openshift.io/serving-cert-secret-nameannotation with the name you want to use for your secret.kind: Service apiVersion: v1 metadata: name: my-service annotations: service.beta.openshift.io/serving-cert-secret-name: my-cert 1 spec: selector: app: MyApp ports: - protocol: TCP port: 80 targetPort: 9376The certificate and key are in PEM format, stored in

tls.crtandtls.keyrespectively.Create the service:

$ oc create -f <file-name>.yaml

View the secret to make sure it was created:

View a list of all secrets:

$ oc get secrets

Example output

NAME TYPE DATA AGE my-cert kubernetes.io/tls 2 9m

View details on your secret:

$ oc describe secret my-cert

Example output

Name: my-cert Namespace: openshift-console Labels: <none> Annotations: service.beta.openshift.io/expiry: 2023-03-08T23:22:40Z service.beta.openshift.io/originating-service-name: my-service service.beta.openshift.io/originating-service-uid: 640f0ec3-afc2-4380-bf31-a8c784846a11 service.beta.openshift.io/expiry: 2023-03-08T23:22:40Z Type: kubernetes.io/tls Data ==== tls.key: 1679 bytes tls.crt: 2595 bytes

Edit your

Podspec with that secret.apiVersion: v1 kind: Pod metadata: name: my-service-pod spec: securityContext: runAsNonRoot: true seccompProfile: type: RuntimeDefault containers: - name: mypod image: redis volumeMounts: - name: my-container mountPath: "/etc/my-path" securityContext: allowPrivilegeEscalation: false capabilities: drop: [ALL] volumes: - name: my-volume secret: secretName: my-cert items: - key: username path: my-group/my-username mode: 511When it is available, your pod will run. The certificate will be good for the internal service DNS name,

<service.name>.<service.namespace>.svc.The certificate/key pair is automatically replaced when it gets close to expiration. View the expiration date in the

service.beta.openshift.io/expiryannotation on the secret, which is in RFC3339 format.NoteIn most cases, the service DNS name

<service.name>.<service.namespace>.svcis not externally routable. The primary use of<service.name>.<service.namespace>.svcis for intracluster or intraservice communication, and with re-encrypt routes.

2.4.6. Troubleshooting secrets

If a service certificate generation fails with (service’s service.beta.openshift.io/serving-cert-generation-error annotation contains):

secret/ssl-key references serviceUID 62ad25ca-d703-11e6-9d6f-0e9c0057b608, which does not match 77b6dd80-d716-11e6-9d6f-0e9c0057b60

The service that generated the certificate no longer exists, or has a different serviceUID. You must force certificates regeneration by removing the old secret, and clearing the following annotations on the service service.beta.openshift.io/serving-cert-generation-error, service.beta.openshift.io/serving-cert-generation-error-num:

Delete the secret:

$ oc delete secret <secret_name>

Clear the annotations:

$ oc annotate service <service_name> service.beta.openshift.io/serving-cert-generation-error-

$ oc annotate service <service_name> service.beta.openshift.io/serving-cert-generation-error-num-

The command removing annotation has a - after the annotation name to be removed.

2.5. Creating and using config maps

The following sections define config maps and how to create and use them.

2.5.1. Understanding config maps

Many applications require configuration by using some combination of configuration files, command line arguments, and environment variables. In OpenShift Dedicated, these configuration artifacts are decoupled from image content to keep containerized applications portable.

The ConfigMap object provides mechanisms to inject containers with configuration data while keeping containers agnostic of OpenShift Dedicated. A config map can be used to store fine-grained information like individual properties or coarse-grained information like entire configuration files or JSON blobs.

The ConfigMap object holds key-value pairs of configuration data that can be consumed in pods or used to store configuration data for system components such as controllers. For example:

ConfigMap Object Definition

kind: ConfigMap apiVersion: v1 metadata: creationTimestamp: 2016-02-18T19:14:38Z name: example-config namespace: my-namespace data: 1 example.property.1: hello example.property.2: world example.property.file: |- property.1=value-1 property.2=value-2 property.3=value-3 binaryData: bar: L3Jvb3QvMTAw 2

You can use the binaryData field when you create a config map from a binary file, such as an image.

Configuration data can be consumed in pods in a variety of ways. A config map can be used to:

- Populate environment variable values in containers

- Set command-line arguments in a container

- Populate configuration files in a volume

Users and system components can store configuration data in a config map.

A config map is similar to a secret, but designed to more conveniently support working with strings that do not contain sensitive information.

Config map restrictions

A config map must be created before its contents can be consumed in pods.

Controllers can be written to tolerate missing configuration data. Consult individual components configured by using config maps on a case-by-case basis.

ConfigMap objects reside in a project.

They can only be referenced by pods in the same project.

The Kubelet only supports the use of a config map for pods it gets from the API server.

This includes any pods created by using the CLI, or indirectly from a replication controller. It does not include pods created by using the OpenShift Dedicated node’s --manifest-url flag, its --config flag, or its REST API because these are not common ways to create pods.

2.5.2. Creating a config map in the OpenShift Dedicated web console

You can create a config map in the OpenShift Dedicated web console.

Procedure

To create a config map as a cluster administrator:

-

In the Administrator perspective, select

Workloads→Config Maps. - At the top right side of the page, select Create Config Map.

- Enter the contents of your config map.

- Select Create.

-

In the Administrator perspective, select

To create a config map as a developer:

-

In the Developer perspective, select

Config Maps. - At the top right side of the page, select Create Config Map.

- Enter the contents of your config map.

- Select Create.

-

In the Developer perspective, select

2.5.3. Creating a config map by using the CLI

You can use the following command to create a config map from directories, specific files, or literal values.

Procedure

Create a config map:

$ oc create configmap <configmap_name> [options]

2.5.3.1. Creating a config map from a directory

You can create a config map from a directory by using the --from-file flag. This method allows you to use multiple files within a directory to create a config map.

Each file in the directory is used to populate a key in the config map, where the name of the key is the file name, and the value of the key is the content of the file.

For example, the following command creates a config map with the contents of the example-files directory:

$ oc create configmap game-config --from-file=example-files/

View the keys in the config map:

$ oc describe configmaps game-config

Example output

Name: game-config Namespace: default Labels: <none> Annotations: <none> Data game.properties: 158 bytes ui.properties: 83 bytes

You can see that the two keys in the map are created from the file names in the directory specified in the command. The content of those keys might be large, so the output of oc describe only shows the names of the keys and their sizes.

Prerequisite

You must have a directory with files that contain the data you want to populate a config map with.

The following procedure uses these example files:

game.propertiesandui.properties:$ cat example-files/game.properties

Example output

enemies=aliens lives=3 enemies.cheat=true enemies.cheat.level=noGoodRotten secret.code.passphrase=UUDDLRLRBABAS secret.code.allowed=true secret.code.lives=30

$ cat example-files/ui.properties

Example output

color.good=purple color.bad=yellow allow.textmode=true how.nice.to.look=fairlyNice

Procedure

Create a config map holding the content of each file in this directory by entering the following command:

$ oc create configmap game-config \ --from-file=example-files/

Verification

Enter the

oc getcommand for the object with the-ooption to see the values of the keys:$ oc get configmaps game-config -o yaml

Example output

apiVersion: v1 data: game.properties: |- enemies=aliens lives=3 enemies.cheat=true enemies.cheat.level=noGoodRotten secret.code.passphrase=UUDDLRLRBABAS secret.code.allowed=true secret.code.lives=30 ui.properties: | color.good=purple color.bad=yellow allow.textmode=true how.nice.to.look=fairlyNice kind: ConfigMap metadata: creationTimestamp: 2016-02-18T18:34:05Z name: game-config namespace: default resourceVersion: "407" selflink: /api/v1/namespaces/default/configmaps/game-config uid: 30944725-d66e-11e5-8cd0-68f728db1985

2.5.3.2. Creating a config map from a file

You can create a config map from a file by using the --from-file flag. You can pass the --from-file option multiple times to the CLI.

You can also specify the key to set in a config map for content imported from a file by passing a key=value expression to the --from-file option. For example:

$ oc create configmap game-config-3 --from-file=game-special-key=example-files/game.properties

If you create a config map from a file, you can include files containing non-UTF8 data that are placed in this field without corrupting the non-UTF8 data. OpenShift Dedicated detects binary files and transparently encodes the file as MIME. On the server, the MIME payload is decoded and stored without corrupting the data.

Prerequisite

You must have a directory with files that contain the data you want to populate a config map with.

The following procedure uses these example files:

game.propertiesandui.properties:$ cat example-files/game.properties

Example output

enemies=aliens lives=3 enemies.cheat=true enemies.cheat.level=noGoodRotten secret.code.passphrase=UUDDLRLRBABAS secret.code.allowed=true secret.code.lives=30

$ cat example-files/ui.properties

Example output

color.good=purple color.bad=yellow allow.textmode=true how.nice.to.look=fairlyNice

Procedure

Create a config map by specifying a specific file:

$ oc create configmap game-config-2 \ --from-file=example-files/game.properties \ --from-file=example-files/ui.propertiesCreate a config map by specifying a key-value pair:

$ oc create configmap game-config-3 \ --from-file=game-special-key=example-files/game.properties

Verification

Enter the

oc getcommand for the object with the-ooption to see the values of the keys from the file:$ oc get configmaps game-config-2 -o yaml

Example output

apiVersion: v1 data: game.properties: |- enemies=aliens lives=3 enemies.cheat=true enemies.cheat.level=noGoodRotten secret.code.passphrase=UUDDLRLRBABAS secret.code.allowed=true secret.code.lives=30 ui.properties: | color.good=purple color.bad=yellow allow.textmode=true how.nice.to.look=fairlyNice kind: ConfigMap metadata: creationTimestamp: 2016-02-18T18:52:05Z name: game-config-2 namespace: default resourceVersion: "516" selflink: /api/v1/namespaces/default/configmaps/game-config-2 uid: b4952dc3-d670-11e5-8cd0-68f728db1985Enter the

oc getcommand for the object with the-ooption to see the values of the keys from the key-value pair:$ oc get configmaps game-config-3 -o yaml

Example output

apiVersion: v1 data: game-special-key: |- 1 enemies=aliens lives=3 enemies.cheat=true enemies.cheat.level=noGoodRotten secret.code.passphrase=UUDDLRLRBABAS secret.code.allowed=true secret.code.lives=30 kind: ConfigMap metadata: creationTimestamp: 2016-02-18T18:54:22Z name: game-config-3 namespace: default resourceVersion: "530" selflink: /api/v1/namespaces/default/configmaps/game-config-3 uid: 05f8da22-d671-11e5-8cd0-68f728db1985- 1

- This is the key that you set in the preceding step.

2.5.3.3. Creating a config map from literal values

You can supply literal values for a config map.

The --from-literal option takes a key=value syntax, which allows literal values to be supplied directly on the command line.

Procedure

Create a config map by specifying a literal value:

$ oc create configmap special-config \ --from-literal=special.how=very \ --from-literal=special.type=charm

Verification

Enter the

oc getcommand for the object with the-ooption to see the values of the keys:$ oc get configmaps special-config -o yaml

Example output

apiVersion: v1 data: special.how: very special.type: charm kind: ConfigMap metadata: creationTimestamp: 2016-02-18T19:14:38Z name: special-config namespace: default resourceVersion: "651" selflink: /api/v1/namespaces/default/configmaps/special-config uid: dadce046-d673-11e5-8cd0-68f728db1985

2.5.4. Use cases: Consuming config maps in pods

The following sections describe some uses cases when consuming ConfigMap objects in pods.

2.5.4.1. Populating environment variables in containers by using config maps

You can use config maps to populate individual environment variables in containers or to populate environment variables in containers from all keys that form valid environment variable names.

As an example, consider the following config map:

ConfigMap with two environment variables

apiVersion: v1 kind: ConfigMap metadata: name: special-config 1 namespace: default 2 data: special.how: very 3 special.type: charm 4

ConfigMap with one environment variable

apiVersion: v1 kind: ConfigMap metadata: name: env-config 1 namespace: default data: log_level: INFO 2

Procedure

You can consume the keys of this

ConfigMapin a pod usingconfigMapKeyRefsections.Sample

Podspecification configured to inject specific environment variablesapiVersion: v1 kind: Pod metadata: name: dapi-test-pod spec: securityContext: runAsNonRoot: true seccompProfile: type: RuntimeDefault containers: - name: test-container image: gcr.io/google_containers/busybox command: [ "/bin/sh", "-c", "env" ] env: 1 - name: SPECIAL_LEVEL_KEY 2 valueFrom: configMapKeyRef: name: special-config 3 key: special.how 4 - name: SPECIAL_TYPE_KEY valueFrom: configMapKeyRef: name: special-config 5 key: special.type 6 optional: true 7 envFrom: 8 - configMapRef: name: env-config 9 securityContext: allowPrivilegeEscalation: false capabilities: drop: [ALL] restartPolicy: Never- 1

- Stanza to pull the specified environment variables from a

ConfigMap. - 2

- Name of a pod environment variable that you are injecting a key’s value into.

- 3 5

- Name of the

ConfigMapto pull specific environment variables from. - 4 6

- Environment variable to pull from the

ConfigMap. - 7

- Makes the environment variable optional. As optional, the pod will be started even if the specified

ConfigMapand keys do not exist. - 8

- Stanza to pull all environment variables from a

ConfigMap. - 9

- Name of the

ConfigMapto pull all environment variables from.

When this pod is run, the pod logs will include the following output:

SPECIAL_LEVEL_KEY=very log_level=INFO

SPECIAL_TYPE_KEY=charm is not listed in the example output because optional: true is set.

2.5.4.2. Setting command-line arguments for container commands with config maps

You can use a config map to set the value of the commands or arguments in a container by using the Kubernetes substitution syntax $(VAR_NAME).

As an example, consider the following config map:

apiVersion: v1 kind: ConfigMap metadata: name: special-config namespace: default data: special.how: very special.type: charm

Procedure

To inject values into a command in a container, you must consume the keys you want to use as environment variables. Then you can refer to them in a container’s command using the

$(VAR_NAME)syntax.Sample pod specification configured to inject specific environment variables

apiVersion: v1 kind: Pod metadata: name: dapi-test-pod spec: securityContext: runAsNonRoot: true seccompProfile: type: RuntimeDefault containers: - name: test-container image: gcr.io/google_containers/busybox command: [ "/bin/sh", "-c", "echo $(SPECIAL_LEVEL_KEY) $(SPECIAL_TYPE_KEY)" ] 1 env: - name: SPECIAL_LEVEL_KEY valueFrom: configMapKeyRef: name: special-config key: special.how - name: SPECIAL_TYPE_KEY valueFrom: configMapKeyRef: name: special-config key: special.type securityContext: allowPrivilegeEscalation: false capabilities: drop: [ALL] restartPolicy: Never- 1

- Inject the values into a command in a container using the keys you want to use as environment variables.

When this pod is run, the output from the echo command run in the test-container container is as follows:

very charm

2.5.4.3. Injecting content into a volume by using config maps

You can inject content into a volume by using config maps.

Example ConfigMap custom resource (CR)

apiVersion: v1 kind: ConfigMap metadata: name: special-config namespace: default data: special.how: very special.type: charm

Procedure

You have a couple different options for injecting content into a volume by using config maps.

The most basic way to inject content into a volume by using a config map is to populate the volume with files where the key is the file name and the content of the file is the value of the key:

apiVersion: v1 kind: Pod metadata: name: dapi-test-pod spec: securityContext: runAsNonRoot: true seccompProfile: type: RuntimeDefault containers: - name: test-container image: gcr.io/google_containers/busybox command: [ "/bin/sh", "-c", "cat", "/etc/config/special.how" ] volumeMounts: - name: config-volume mountPath: /etc/config securityContext: allowPrivilegeEscalation: false capabilities: drop: [ALL] volumes: - name: config-volume configMap: name: special-config 1 restartPolicy: Never- 1

- File containing key.

When this pod is run, the output of the cat command will be:

very

You can also control the paths within the volume where config map keys are projected:

apiVersion: v1 kind: Pod metadata: name: dapi-test-pod spec: securityContext: runAsNonRoot: true seccompProfile: type: RuntimeDefault containers: - name: test-container image: gcr.io/google_containers/busybox command: [ "/bin/sh", "-c", "cat", "/etc/config/path/to/special-key" ] volumeMounts: - name: config-volume mountPath: /etc/config securityContext: allowPrivilegeEscalation: false capabilities: drop: [ALL] volumes: - name: config-volume configMap: name: special-config items: - key: special.how path: path/to/special-key 1 restartPolicy: Never- 1

- Path to config map key.

When this pod is run, the output of the cat command will be:

very

2.6. Including pod priority in pod scheduling decisions

You can enable pod priority and preemption in your cluster. Pod priority indicates the importance of a pod relative to other pods and queues the pods based on that priority. pod preemption allows the cluster to evict, or preempt, lower-priority pods so that higher-priority pods can be scheduled if there is no available space on a suitable node pod priority also affects the scheduling order of pods and out-of-resource eviction ordering on the node.

To use priority and preemption, reference a priority class in the pod specification to apply that weight for scheduling.

2.6.1. Understanding pod priority

When you use the Pod Priority and Preemption feature, the scheduler orders pending pods by their priority, and a pending pod is placed ahead of other pending pods with lower priority in the scheduling queue. As a result, the higher priority pod might be scheduled sooner than pods with lower priority if its scheduling requirements are met. If a pod cannot be scheduled, scheduler continues to schedule other lower priority pods.

2.6.1.1. Pod priority classes

You can assign pods a priority class, which is a non-namespaced object that defines a mapping from a name to the integer value of the priority. The higher the value, the higher the priority.

A priority class object can take any 32-bit integer value smaller than or equal to 1000000000 (one billion). Reserve numbers larger than or equal to one billion for critical pods that must not be preempted or evicted. By default, OpenShift Dedicated has two reserved priority classes for critical system pods to have guaranteed scheduling.

$ oc get priorityclasses

Example output

NAME VALUE GLOBAL-DEFAULT AGE system-node-critical 2000001000 false 72m system-cluster-critical 2000000000 false 72m openshift-user-critical 1000000000 false 3d13h cluster-logging 1000000 false 29s

system-node-critical - This priority class has a value of 2000001000 and is used for all pods that should never be evicted from a node. Examples of pods that have this priority class are

sdn-ovs,sdn, and so forth. A number of critical components include thesystem-node-criticalpriority class by default, for example:- master-api

- master-controller

- master-etcd

- sdn

- sdn-ovs

- sync

system-cluster-critical - This priority class has a value of 2000000000 (two billion) and is used with pods that are important for the cluster. Pods with this priority class can be evicted from a node in certain circumstances. For example, pods configured with the

system-node-criticalpriority class can take priority. However, this priority class does ensure guaranteed scheduling. Examples of pods that can have this priority class are fluentd, add-on components like descheduler, and so forth. A number of critical components include thesystem-cluster-criticalpriority class by default, for example:- fluentd

- metrics-server

- descheduler

-

openshift-user-critical - You can use the

priorityClassNamefield with important pods that cannot bind their resource consumption and do not have predictable resource consumption behavior. Prometheus pods under theopenshift-monitoringandopenshift-user-workload-monitoringnamespaces use theopenshift-user-criticalpriorityClassName. Monitoring workloads usesystem-criticalas their firstpriorityClass, but this causes problems when monitoring uses excessive memory and the nodes cannot evict them. As a result, monitoring drops priority to give the scheduler flexibility, moving heavy workloads around to keep critical nodes operating. - cluster-logging - This priority is used by Fluentd to make sure Fluentd pods are scheduled to nodes over other apps.

2.6.1.2. Pod priority names

After you have one or more priority classes, you can create pods that specify a priority class name in a Pod spec. The priority admission controller uses the priority class name field to populate the integer value of the priority. If the named priority class is not found, the pod is rejected.

2.6.2. Understanding pod preemption

When a developer creates a pod, the pod goes into a queue. If the developer configured the pod for pod priority or preemption, the scheduler picks a pod from the queue and tries to schedule the pod on a node. If the scheduler cannot find space on an appropriate node that satisfies all the specified requirements of the pod, preemption logic is triggered for the pending pod.

When the scheduler preempts one or more pods on a node, the nominatedNodeName field of higher-priority Pod spec is set to the name of the node, along with the nodename field. The scheduler uses the nominatedNodeName field to keep track of the resources reserved for pods and also provides information to the user about preemptions in the clusters.

After the scheduler preempts a lower-priority pod, the scheduler honors the graceful termination period of the pod. If another node becomes available while scheduler is waiting for the lower-priority pod to terminate, the scheduler can schedule the higher-priority pod on that node. As a result, the nominatedNodeName field and nodeName field of the Pod spec might be different.

Also, if the scheduler preempts pods on a node and is waiting for termination, and a pod with a higher-priority pod than the pending pod needs to be scheduled, the scheduler can schedule the higher-priority pod instead. In such a case, the scheduler clears the nominatedNodeName of the pending pod, making the pod eligible for another node.

Preemption does not necessarily remove all lower-priority pods from a node. The scheduler can schedule a pending pod by removing a portion of the lower-priority pods.

The scheduler considers a node for pod preemption only if the pending pod can be scheduled on the node.

2.6.2.1. Non-preempting priority classes

Pods with the preemption policy set to Never are placed in the scheduling queue ahead of lower-priority pods, but they cannot preempt other pods. A non-preempting pod waiting to be scheduled stays in the scheduling queue until sufficient resources are free and it can be scheduled. Non-preempting pods, like other pods, are subject to scheduler back-off. This means that if the scheduler tries unsuccessfully to schedule these pods, they are retried with lower frequency, allowing other pods with lower priority to be scheduled before them.

Non-preempting pods can still be preempted by other, high-priority pods.

2.6.2.2. Pod preemption and other scheduler settings

If you enable pod priority and preemption, consider your other scheduler settings:

- Pod priority and pod disruption budget

- A pod disruption budget specifies the minimum number or percentage of replicas that must be up at a time. If you specify pod disruption budgets, OpenShift Dedicated respects them when preempting pods at a best effort level. The scheduler attempts to preempt pods without violating the pod disruption budget. If no such pods are found, lower-priority pods might be preempted despite their pod disruption budget requirements.

- Pod priority and pod affinity

- Pod affinity requires a new pod to be scheduled on the same node as other pods with the same label.

If a pending pod has inter-pod affinity with one or more of the lower-priority pods on a node, the scheduler cannot preempt the lower-priority pods without violating the affinity requirements. In this case, the scheduler looks for another node to schedule the pending pod. However, there is no guarantee that the scheduler can find an appropriate node and pending pod might not be scheduled.

To prevent this situation, carefully configure pod affinity with equal-priority pods.

2.6.2.3. Graceful termination of preempted pods

When preempting a pod, the scheduler waits for the pod graceful termination period to expire, allowing the pod to finish working and exit. If the pod does not exit after the period, the scheduler kills the pod. This graceful termination period creates a time gap between the point that the scheduler preempts the pod and the time when the pending pod can be scheduled on the node.

To minimize this gap, configure a small graceful termination period for lower-priority pods.

2.6.3. Configuring priority and preemption

You apply pod priority and preemption by creating a priority class object and associating pods to the priority by using the priorityClassName in your pod specs.

You cannot add a priority class directly to an existing scheduled pod.

Procedure

To configure your cluster to use priority and preemption:

Define a pod spec to include the name of a priority class by creating a YAML file similar to the following:

apiVersion: v1 kind: Pod metadata: name: nginx labels: env: test spec: containers: - name: nginx image: nginx imagePullPolicy: IfNotPresent priorityClassName: system-cluster-critical 1- 1

- Specify the priority class to use with this pod.

Create the pod:

$ oc create -f <file-name>.yaml

You can add the priority name directly to the pod configuration or to a pod template.

2.7. Placing pods on specific nodes using node selectors

A node selector specifies a map of key-value pairs. The rules are defined using custom labels on nodes and selectors specified in pods.

For the pod to be eligible to run on a node, the pod must have the indicated key-value pairs as the label on the node.

If you are using node affinity and node selectors in the same pod configuration, see the important considerations below.

2.7.1. Using node selectors to control pod placement

You can use node selectors on pods and labels on nodes to control where the pod is scheduled. With node selectors, OpenShift Dedicated schedules the pods on nodes that contain matching labels.

You add labels to a node, a compute machine set, or a machine config. Adding the label to the compute machine set ensures that if the node or machine goes down, new nodes have the label. Labels added to a node or machine config do not persist if the node or machine goes down.

To add node selectors to an existing pod, add a node selector to the controlling object for that pod, such as a ReplicaSet object, DaemonSet object, StatefulSet object, Deployment object, or DeploymentConfig object. Any existing pods under that controlling object are recreated on a node with a matching label. If you are creating a new pod, you can add the node selector directly to the pod spec. If the pod does not have a controlling object, you must delete the pod, edit the pod spec, and recreate the pod.

You cannot add a node selector directly to an existing scheduled pod.

Prerequisites

To add a node selector to existing pods, determine the controlling object for that pod. For example, the router-default-66d5cf9464-m2g75 pod is controlled by the router-default-66d5cf9464 replica set:

$ oc describe pod router-default-66d5cf9464-7pwkc

Example output

kind: Pod apiVersion: v1 metadata: # ... Name: router-default-66d5cf9464-7pwkc Namespace: openshift-ingress # ... Controlled By: ReplicaSet/router-default-66d5cf9464 # ...

The web console lists the controlling object under ownerReferences in the pod YAML:

apiVersion: v1

kind: Pod

metadata:

name: router-default-66d5cf9464-7pwkc

# ...

ownerReferences:

- apiVersion: apps/v1

kind: ReplicaSet

name: router-default-66d5cf9464

uid: d81dd094-da26-11e9-a48a-128e7edf0312

controller: true

blockOwnerDeletion: true

# ...Procedure