Installation Guide

Installing Red Hat Enterprise Virtualization

Andrew Burden

aburden@redhat.com

Steve Gordon

sgordon@redhat.com

Tim Hildred

thildred@redhat.com

Cheryn Tan

chetan@redhat.com

Abstract

Part I. Introduction

Chapter 1. Introduction

1.2. Red Hat Enterprise Virtualization Manager Requirements

1.2.1. Red Hat Enterprise Virtualization Hardware Requirements Overview

- one machine to act as the management server,

- one or more machines to act as virtualization hosts - at least two are required to support migration and power management,

- one or more machines to use as clients for accessing the Administration Portal.

- storage infrastructure provided by NFS, POSIX, iSCSI, SAN, or local storage.

1.2.2. Red Hat Enterprise Virtualization Manager Hardware Requirements

Minimum

- A dual core CPU.

- 4 GB of available system RAM that is not being consumed by existing processes.

- 25 GB of locally accessible, writeable, disk space.

- 1 Network Interface Card (NIC) with bandwidth of at least 1 Gbps.

Recommended

- A quad core CPU or multiple dual core CPUs.

- 16 GB of system RAM.

- 50 GB of locally accessible, writeable, disk space.

- 1 Network Interface Card (NIC) with bandwidth of at least 1 Gbps.

1.2.3. Operating System Requirements

Important

1.2.4. Red Hat Enterprise Virtualization Manager Client Requirements

- Mozilla Firefox 17, and later, on Red Hat Enterprise Linux is required to access both portals.

- Internet Explorer 8, and later, on Microsoft Windows is required to access the User Portal. Use the desktop version, not the touchscreen version of Internet Explorer 10.

- Internet Explorer 9, and later, on Microsoft Windows is required to access the Administration Portal. Use the desktop version, not the touchscreen version of Internet Explorer 10.

- Red Hat Enterprise Linux 5.8+ (i386, AMD64 and Intel 64)

- Red Hat Enterprise Linux 6.2+ (i386, AMD64 and Intel 64)

- Red Hat Enterprise Linux 6.5+ (i386, AMD64 and Intel 64)

- Windows XP

- Windows XP Embedded (XPe)

- Windows 7 (x86, AMD64 and Intel 64)

- Windows 8 (x86, AMD64 and Intel 64)

- Windows Embedded Standard 7

- Windows 2008/R2 (x86, AMD64 and Intel 64)

- Windows Embedded Standard 2009

- Red Hat Enterprise Virtualization Certified Linux-based thin clients

Note

yum.

1.2.5. Red Hat Enterprise Virtualization Manager Software Channels

Note

Certificate-based Red Hat Network

- The Red Hat Enterprise Linux Server entitlement provides Red Hat Enterprise Linux.

- The Red Hat Enterprise Virtualization entitlement provides Red Hat Enterprise Virtualization Manager.

- The Red Hat JBoss Enterprise Application Platform entitlement provides the supported release of the application platform on which the Manager runs.

Red Hat Network Classic

- The Red Hat Enterprise Linux Server (v. 6 for 64-bit x86_64) channel, also referred to as rhel-x86_64-server-6, provides Red Hat Enterprise Linux 6 Server. The Channel Entitlement Name for this channel is Red Hat Enterprise Linux Server (v. 6).

- The RHEL Server Supplementary (v. 6 64-bit x86_64) channel, also referred to as rhel-x86_64-server-supplementary-6, provides the virtio-win package. The virtio-win package provides the Windows VirtIO drivers for use in virtual machines. The Channel Entitlement Name for the supplementary channel is Red Hat Enterprise Linux Server Supplementary (v. 6).

- The Red Hat Enterprise Virtualization Manager (v3.4 x86_64) channel, also referred to as rhel-x86_64-server-6-rhevm-3.4, provides Red Hat Enterprise Virtualization Manager. The Channel Entitlement Name for this channel is Red Hat Enterprise Virtualization Manager (v3).

- The Red Hat JBoss EAP (v 6) for 6Server x86_64 channel, also referred to as jbappplatform-6-x86_64-server-6-rpm, provides the supported release of the application platform on which the Manager runs. The Channel Entitlement Name for this channel is Red Hat JBoss Enterprise Application Platform (v 4, zip format).

1.3. Hypervisor Requirements

1.3.1. Virtualization Host Hardware Requirements Overview

1.3.2. Virtualization Host CPU Requirements

- AMD Opteron G1

- AMD Opteron G2

- AMD Opteron G3

- AMD Opteron G4

- AMD Opteron G5

- Intel Conroe

- Intel Penryn

- Intel Nehalem

- Intel Westmere

- Intel Sandybridge

- Intel Haswell

No eXecute flag (NX) is also required. To check that your processor supports the required flags, and that they are enabled:

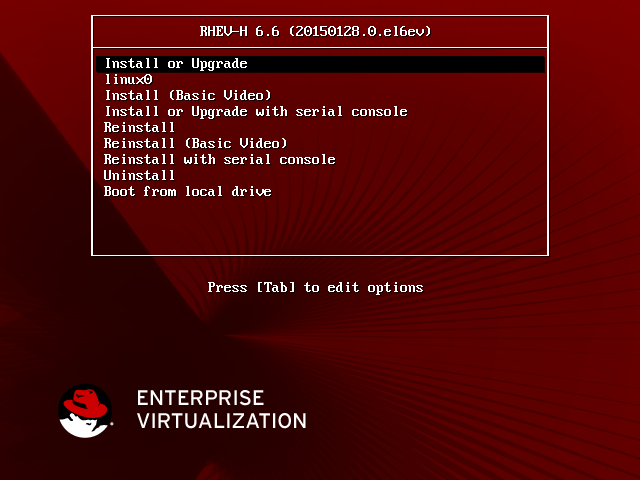

- At the Red Hat Enterprise Linux or Red Hat Enterprise Virtualization Hypervisor boot screen, press any key and select the Boot or Boot with serial console entry from the list.

- Press Tab to edit the kernel parameters for the selected option.

- Ensure there is a Space after the last kernel parameter listed, and append the rescue parameter.

- Press Enter to boot into rescue mode.

- At the prompt which appears, determine that your processor has the required extensions and that they are enabled by running this command:

# grep -E 'svm|vmx' /proc/cpuinfo | grep nx

If any output is shown, then the processor is hardware virtualization capable. If no output is shown, then it is still possible that your processor supports hardware virtualization. In some circumstances manufacturers disable the virtualization extensions in the BIOS. If you believe this to be the case, consult the system's BIOS and the motherboard manual provided by the manufacturer.

- As an additional check, verify that the kvm modules are loaded in the kernel:

# lsmod | grep kvm

If the output includes kvm_intel or kvm_amd then the kvm hardware virtualization modules are loaded and your system meets requirements.
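The flag check above can also be wrapped in a small helper for use in scripts. The following is a minimal sketch, not part of the product: the function name `has_virt_flags` is hypothetical, and the optional file argument exists only so the check can be tried against a saved copy of `/proc/cpuinfo`. Unlike the command above, it matches whole words, so it is slightly stricter.

```shell
# Succeeds when a cpuinfo-style file has a flags line that lists
# svm or vmx together with nx. Defaults to /proc/cpuinfo.
# has_virt_flags is a hypothetical helper name.
has_virt_flags() {
    cpuinfo=${1:-/proc/cpuinfo}
    grep -E '^flags' "$cpuinfo" | grep -Ew 'svm|vmx' | grep -qw 'nx'
}

# Example use:
#   if has_virt_flags; then
#       echo "virtualization extensions and NX are present"
#   else
#       echo "flags not found; check the BIOS settings"
#   fi
```

An exit status is returned instead of printed output so that the helper can be used directly in an `if` statement.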

1.3.3. Virtualization Host RAM Requirements

- guest operating system requirements,

- guest application requirements, and

- memory activity and usage of guests.

1.3.4. Virtualization Host Storage Requirements

- The root partitions require at least 512 MB of storage.

- The configuration partition requires at least 8 MB of storage.

- The recommended minimum size of the logging partition is 2048 MB.

- The data partition requires at least 256 MB of storage. Use of a smaller data partition may prevent future upgrades of the Hypervisor from the Red Hat Enterprise Virtualization Manager. By default all disk space remaining after allocation of swap space will be allocated to the data partition.

- The swap partition requires at least 8 MB of storage. The recommended size of the swap partition varies depending on both the system the Hypervisor is being installed upon and the anticipated level of overcommit for the environment. Overcommit allows the Red Hat Enterprise Virtualization environment to present more RAM to guests than is actually physically present. The default overcommit ratio is 0.5.

The recommended size of the swap partition can be determined by multiplying the amount of system RAM by the expected overcommit ratio, and adding:

- 2 GB of swap space for systems with 4 GB of RAM or less, or

- 4 GB of swap space for systems with between 4 GB and 16 GB of RAM, or

- 8 GB of swap space for systems with between 16 GB and 64 GB of RAM, or

- 16 GB of swap space for systems with between 64 GB and 256 GB of RAM.

Example 1.1. Calculating Swap Partition Size

For a system with 8 GB of RAM this means the formula for determining the amount of swap space to allocate is:

(8 GB x 0.5) + 4 GB = 8 GB
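The same calculation can be sketched as a shell function. This is an illustration only: the name `recommended_swap_gb` is hypothetical, the tier boundaries are assumed to be inclusive at their upper end (so exactly 16 GB of RAM adds 4 GB), and RAM sizes above 256 GB are rejected because the tiers listed above do not cover them.

```shell
# Print the recommended swap size in GB for a given amount of RAM (GB)
# and an overcommit ratio (defaults to 0.5).
# recommended_swap_gb is a hypothetical helper name.
recommended_swap_gb() {
    awk -v ram="$1" -v ratio="${2:-0.5}" 'BEGIN {
        if (ram <= 4)        extra = 2
        else if (ram <= 16)  extra = 4
        else if (ram <= 64)  extra = 8
        else if (ram <= 256) extra = 16
        else {
            print "RAM size is outside the documented tiers" > "/dev/stderr"
            exit 1
        }
        printf "%g\n", ram * ratio + extra
    }'
}

# Example use, matching Example 1.1:
#   recommended_swap_gb 8 0.5
```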

Important

0.5 is used for this calculation. For some systems the result of this calculation may be a swap partition that requires more free disk space than is available at installation. Where this is the case Hypervisor installation will fail.

storage_vol boot parameter.

Example 1.2. Manually Setting Swap Partition Size

storage_vol boot parameter is used to set a swap partition size of 4096 MB. Note that no sizes are specified for the other partitions, allowing the Hypervisor to use the default sizes.

storage_vol=:4096::::

Important

fakeraid devices. Where a fakeraid device is present it must be reconfigured such that it no longer runs in RAID mode.

- Access the RAID controller's BIOS and remove all logical drives from it.

- Change controller mode to be non-RAID. This may be referred to as compatibility or JBOD mode.

1.3.5. Virtualization Host PCI Device Requirements

1.4. User Authentication

1.4.1. About Directory Services

1.4.2. Directory Services Support in Red Hat Enterprise Virtualization

admin. This account is intended for use when initially configuring the environment, and for troubleshooting. To add other users to Red Hat Enterprise Virtualization you must attach a directory server to the Manager using the Domain Management Tool, engine-manage-domains.

user@domain. Attachment of more than one directory server to the Manager is also supported.

- Active Directory

- Identity Management (IdM)

- Red Hat Directory Server 9 (RHDS 9)

- OpenLDAP

- A valid pointer record (PTR) for the directory server's reverse look-up address.

- A valid service record (SRV) for LDAP over TCP port 389.

- A valid service record (SRV) for Kerberos over TCP port 88.

- A valid service record (SRV) for Kerberos over UDP port 88.
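One way to confirm that these service records exist is to query them with `dig`. The sketch below assumes the conventional SRV names `_ldap._tcp`, `_kerberos._tcp`, and `_kerberos._udp` under the directory domain. The function names and the `example.com` domain are hypothetical.

```shell
# Print the SRV record names expected for a directory domain.
# Assumes the conventional _service._protocol naming.
srv_record_names() {
    domain=$1
    printf '%s\n' "_ldap._tcp.$domain" "_kerberos._tcp.$domain" "_kerberos._udp.$domain"
}

# Query each name with dig and report those that return no answer.
check_srv_records() {
    for name in $(srv_record_names "$1"); do
        if [ -z "$(dig +short SRV "$name")" ]; then
            echo "missing SRV record: $name"
        fi
    done
}

# Example use (hypothetical domain):
#   check_srv_records example.com
```

The pointer record can be checked in a similar way with `dig +short -x` followed by the IP address of the directory server.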

engine-manage-domains.

- Active Directory - http://technet.microsoft.com/en-us/windowsserver/dd448614.

- Identity Management (IdM) - http://docs.redhat.com/docs/en-US/Red_Hat_Enterprise_Linux/6/html/Identity_Management_Guide/index.html

- Red Hat Directory Server (RHDS) - http://docs.redhat.com/docs/en-US/Red_Hat_Directory_Server/index.html

- OpenLDAP - http://www.openldap.org/doc/

Important

Important

Important

sysprep in the creation of Templates and Virtual Machines, then the Red Hat Enterprise Virtualization administrative user must be delegated control over the Domain to:

- Join a computer to the domain

- Modify the membership of a group

Note

- Configure the memberOf plug-in for RHDS to allow group membership. In particular ensure that the value of the memberofgroupattr attribute of the memberOf plug-in is set to uniqueMember. In OpenLDAP, the memberOf functionality is not called a "plugin". It is called an "overlay" and requires no configuration after installation.

Consult the Red Hat Directory Server 9.0 Plug-in Guide for more information on configuring the memberOf plug-in.

- Define the directory server as a service of the form ldap/hostname@REALMNAME in the Kerberos realm. Replace hostname with the fully qualified domain name associated with the directory server and REALMNAME with the fully qualified Kerberos realm name. The Kerberos realm name must be specified in capital letters.

- Generate a keytab file for the directory server in the Kerberos realm. The keytab file contains pairs of Kerberos principals and their associated encrypted keys. These keys allow the directory server to authenticate itself with the Kerberos realm.

Consult the documentation for your Kerberos principle for more information on generating a keytab file.

- Install the keytab file on the directory server. Then configure RHDS to recognize the keytab file and accept Kerberos authentication using GSSAPI.

Consult the Red Hat Directory Server 9.0 Administration Guide for more information on configuring RHDS to use an external keytab file.

- Test the configuration on the directory server by using the kinit command to authenticate as a user defined in the Kerberos realm. Once authenticated run the ldapsearch command against the directory server. Use the -Y GSSAPI parameters to ensure the use of Kerberos for authentication.

1.5. Firewalls

1.5.1. Red Hat Enterprise Virtualization Manager Firewall Requirements

The engine-setup script is able to configure the firewall automatically, but this overwrites any pre-existing firewall configuration.

The engine-setup command saves a list of the iptables rules required in the /usr/share/ovirt-engine/conf/iptables.example file.

80 and 443) listed here.

Table 1.1. Red Hat Enterprise Virtualization Manager Firewall Requirements

| Port(s) | Protocol | Source | Destination | Purpose |
|---|---|---|---|---|
| - | ICMP | | | When registering to the Red Hat Enterprise Virtualization Manager, virtualization hosts send an ICMP ping request to the Manager to confirm that it is online. |
| 22 | TCP | | | SSH (optional) |
| 80, 443 | TCP | | | Provides HTTP and HTTPS access to the Manager. |

Important

NFSv4

- TCP port 2049 for NFS.

NFSv3

- TCP and UDP port 2049 for NFS.

- TCP and UDP port 111 (rpcbind/sunrpc).

- TCP and UDP port specified with MOUNTD_PORT="port"

- TCP and UDP port specified with STATD_PORT="port"

- TCP port specified with LOCKD_TCPPORT="port"

- UDP port specified with LOCKD_UDPPORT="port"

The MOUNTD_PORT, STATD_PORT, LOCKD_TCPPORT, and LOCKD_UDPPORT ports are configured in the /etc/sysconfig/nfs file.
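To see which NFSv3 ports a given configuration implies, the settings can be read back from the file. The following is a minimal sketch under stated assumptions: the function name `nfsv3_ports` is hypothetical, and it sources the configuration file as shell code in a subshell, so it should only be pointed at a file you trust.

```shell
# Print the NFSv3 ports (port/protocol, one per line) implied by an
# /etc/sysconfig/nfs style file. nfsv3_ports is a hypothetical helper.
# The function body runs in a subshell so the sourced variables do not
# leak into the calling shell.
nfsv3_ports() (
    nfs_conf=${1:-/etc/sysconfig/nfs}
    unset MOUNTD_PORT STATD_PORT LOCKD_TCPPORT LOCKD_UDPPORT
    . "$nfs_conf" || exit 1
    # Fixed ports for NFS and rpcbind/sunrpc.
    printf '%s\n' 2049/tcp 2049/udp 111/tcp 111/udp
    if [ -n "${MOUNTD_PORT:-}" ]; then
        printf '%s\n' "$MOUNTD_PORT/tcp" "$MOUNTD_PORT/udp"
    fi
    if [ -n "${STATD_PORT:-}" ]; then
        printf '%s\n' "$STATD_PORT/tcp" "$STATD_PORT/udp"
    fi
    if [ -n "${LOCKD_TCPPORT:-}" ]; then
        printf '%s\n' "$LOCKD_TCPPORT/tcp"
    fi
    if [ -n "${LOCKD_UDPPORT:-}" ]; then
        printf '%s\n' "$LOCKD_UDPPORT/udp"
    fi
)

# Example use:
#   nfsv3_ports /etc/sysconfig/nfs
```

Settings that are absent or commented out in the file are skipped, so the output then lists only the fixed ports.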

1.5.2. Virtualization Host Firewall Requirements

Table 1.2. Virtualization Host Firewall Requirements

| Port(s) | Protocol | Source | Destination | Purpose |
|---|---|---|---|---|
| 22 | TCP | | | Secure Shell (SSH) access. |
| 161 | UDP | | | Simple network management protocol (SNMP). |
| 5900 - 6923 | TCP | | | Remote guest console access via VNC and SPICE. These ports must be open to facilitate client access to virtual machines. |
| 5989 | TCP, UDP | | | Used by Common Information Model Object Managers (CIMOM) to monitor virtual machines running on the virtualization host. To use a CIMOM to monitor the virtual machines in your virtualization environment, you must ensure that this port is open. |
| 16514 | TCP | | | Virtual machine migration using libvirt. |
| 49152 - 49216 | TCP | | | Virtual machine migration and fencing using VDSM. These ports must be open to facilitate both automated and manually initiated migration of virtual machines. |
| 54321 | TCP | | | VDSM communications with the Manager and other virtualization hosts. |

Example 1.3. Option Name: IPTablesConfig

*filter
:INPUT ACCEPT [0:0]
:FORWARD ACCEPT [0:0]
:OUTPUT ACCEPT [0:0]
-A INPUT -m state --state ESTABLISHED,RELATED -j ACCEPT
-A INPUT -p icmp -j ACCEPT
-A INPUT -i lo -j ACCEPT
# vdsm
-A INPUT -p tcp --dport 54321 -j ACCEPT
# libvirt tls
-A INPUT -p tcp --dport 16514 -j ACCEPT
# SSH
-A INPUT -p tcp --dport 22 -j ACCEPT
# guest consoles
-A INPUT -p tcp -m multiport --dports 5900:6923 -j ACCEPT
# migration
-A INPUT -p tcp -m multiport --dports 49152:49216 -j ACCEPT
# snmp
-A INPUT -p udp --dport 161 -j ACCEPT
# Reject any other input traffic
-A INPUT -j REJECT --reject-with icmp-host-prohibited
-A FORWARD -m physdev ! --physdev-is-bridged -j REJECT --reject-with icmp-host-prohibited
COMMIT
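When the rules are maintained by hand, it can help to check a saved rules file against the ports used in Example 1.3. The sketch below is illustrative only. The function name `missing_host_rules` is hypothetical, and it performs a simple text match on `ACCEPT` rules, so it does not evaluate rule order or chains.

```shell
# Print each protocol/port pair from Example 1.3 that has no matching
# ACCEPT rule in the given iptables rules file.
# missing_host_rules is a hypothetical helper name.
missing_host_rules() {
    rules_file=$1
    for spec in "tcp 22" "udp 161" "tcp 5900:6923" "tcp 16514" "tcp 49152:49216" "tcp 54321"; do
        proto=${spec% *}
        ports=${spec#* }
        # Matches both --dport and the multiport --dports form.
        if ! grep -Eq -- "-p $proto .*--dports? $ports -j ACCEPT" "$rules_file"; then
            echo "$proto/$ports"
        fi
    done
}

# Example use:
#   missing_host_rules /etc/sysconfig/iptables
```

Port 5989 from Table 1.2 is not included because Example 1.3 does not open it; add it to the list if a CIMOM is in use.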

1.5.3. Directory Server Firewall Requirements

Table 1.3. Host Firewall Requirements

| Port(s) | Protocol | Source | Destination | Purpose |
|---|---|---|---|---|
| 88, 464 | TCP, UDP | | | Kerberos authentication. |
| 389, 636 | TCP | | | Lightweight Directory Access Protocol (LDAP) and LDAP over SSL. |

1.5.4. Database Server Firewall Requirements

Table 1.4. Host Firewall Requirements

| Port(s) | Protocol | Source | Destination | Purpose |
|---|---|---|---|---|
| 5432 | TCP, UDP | | | Default port for PostgreSQL database connections. |

1.6. System Accounts

1.6.1. Red Hat Enterprise Virtualization Manager User Accounts

- The vdsm user (UID 36). Required for support tools that mount and access NFS storage domains.

- The ovirt user (UID 108). Owner of the ovirt-engine Red Hat JBoss Enterprise Application Platform instance.

1.6.2. Red Hat Enterprise Virtualization Manager Groups

- The kvm group (GID 36). Group members include:

  - The vdsm user.

- The ovirt group (GID 108). Group members include:

  - The ovirt user.

1.6.3. Virtualization Host User Accounts

- The vdsm user (UID 36).

- The qemu user (UID 107).

- The sanlock user (UID 179).

admin user (UID 500). This admin user is not created on Red Hat Enterprise Linux virtualization hosts. The admin user is created with the required permissions to run commands as the root user using the sudo command. The vdsm user which is present on both types of virtualization hosts is also given access to the sudo command.

Important

vdsm user however is fixed to a UID of 36 and the kvm group is fixed to a GID of 36.

36 or GID 36 is already used by another account on the system then a conflict will arise during installation of the vdsm and qemu-kvm-rhev packages.
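Before installing the packages, the account databases can be checked for such a conflict. The following is a minimal sketch: the function name `id_conflicts` is hypothetical, and it reads a passwd-format or group-format file directly, so accounts that exist only in a remote directory service are not detected.

```shell
# Print the names of accounts in a passwd- or group-format file that
# use the given numeric ID but are not the expected account.
# id_conflicts is a hypothetical helper name.
id_conflicts() {
    db_file=$1
    wanted_id=$2
    expected_name=$3
    # In both /etc/passwd and /etc/group the numeric ID is the third field.
    awk -F: -v id="$wanted_id" -v name="$expected_name" \
        '$3 == id && $1 != name { print $1 }' "$db_file"
}

# Example use:
#   id_conflicts /etc/passwd 36 vdsm
#   id_conflicts /etc/group 36 kvm
```

Empty output means that no other local account holds the ID.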

1.6.4. Virtualization Host Groups

- The kvm group (GID 36). Group members include:

  - The qemu user.

  - The sanlock user.

- The qemu group (GID 107). Group members include:

  - The vdsm user.

  - The sanlock user.

Important

vdsm user however is fixed to a UID of 36 and the kvm group is fixed to a GID of 36.

36 or GID 36 is already used by another account on the system then a conflict will arise during installation of the vdsm and qemu-kvm-rhev packages.

Part II. Installing Red Hat Enterprise Virtualization

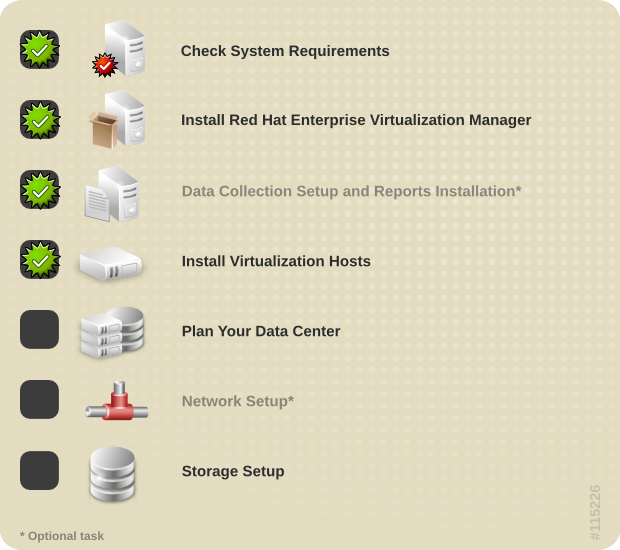

Chapter 2. Installing Red Hat Enterprise Virtualization

2.2. Installing the Red Hat Enterprise Virtualization Manager

The Red Hat Enterprise Virtualization Manager can be installed under one of two arrangements - a standard setup in which the Manager is installed on an independent physical machine or virtual machine, or a self-hosted engine setup in which the Manager runs on a virtual machine that the Manager itself controls.

Important

Before installing the Red Hat Enterprise Virtualization Manager, you must ensure that you meet all the prerequisites. To complete installation of the Red Hat Enterprise Virtualization Manager successfully, you must also be able to determine:

- The ports to be used for HTTP and HTTPS communication. The default ports are 80 and 443 respectively.

- The fully qualified domain name (FQDN) of the system on which the Manager is to be installed.

- The password you use to secure the Red Hat Enterprise Virtualization administration account.

- The location of the database server to be used. You can use the setup script to install and configure a local database server or use an existing remote database server. To use a remote database server you must know:

  - The host name of the system on which the remote database server exists.

  - The port on which the remote database server is listening.

  - That the uuid-ossp extension has been loaded by the remote database server.

  You must also know the user name and password of a user that is known to the remote database server. The user must have permission to create databases in PostgreSQL.

- The organization name to use when creating the Manager's security certificates.

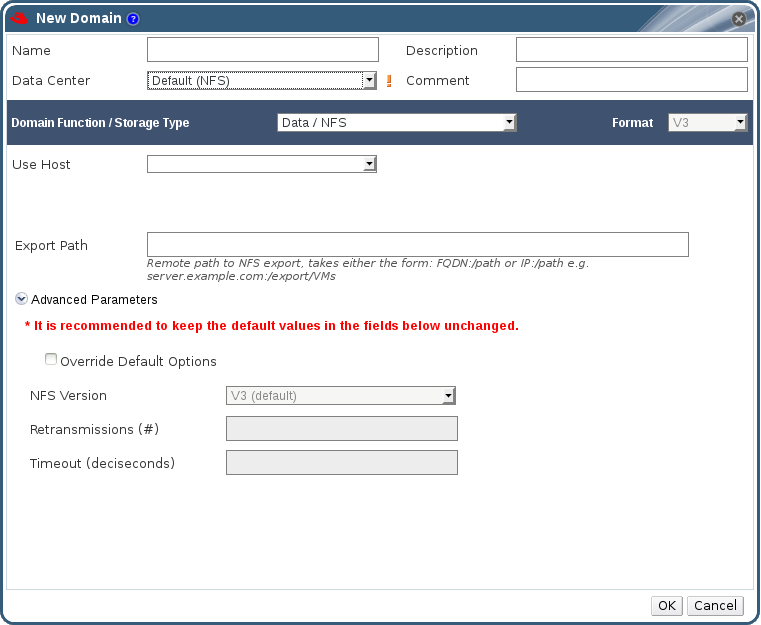

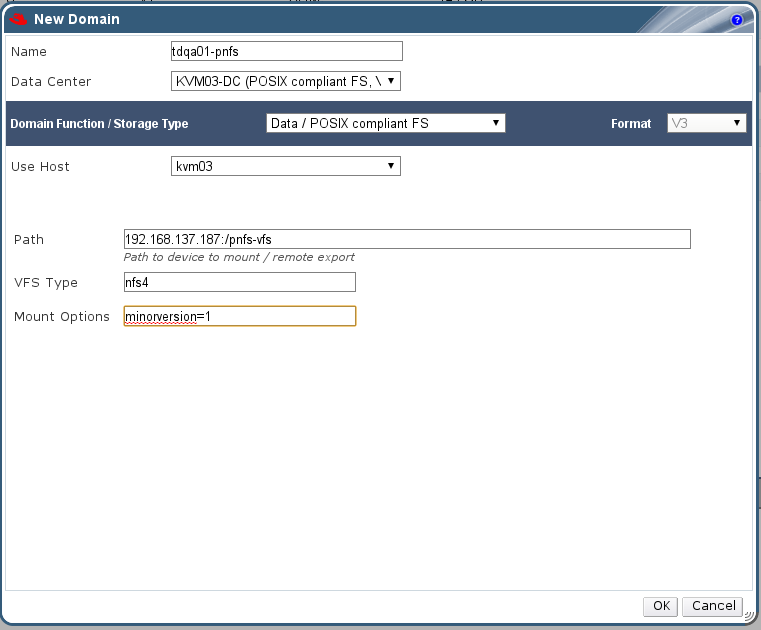

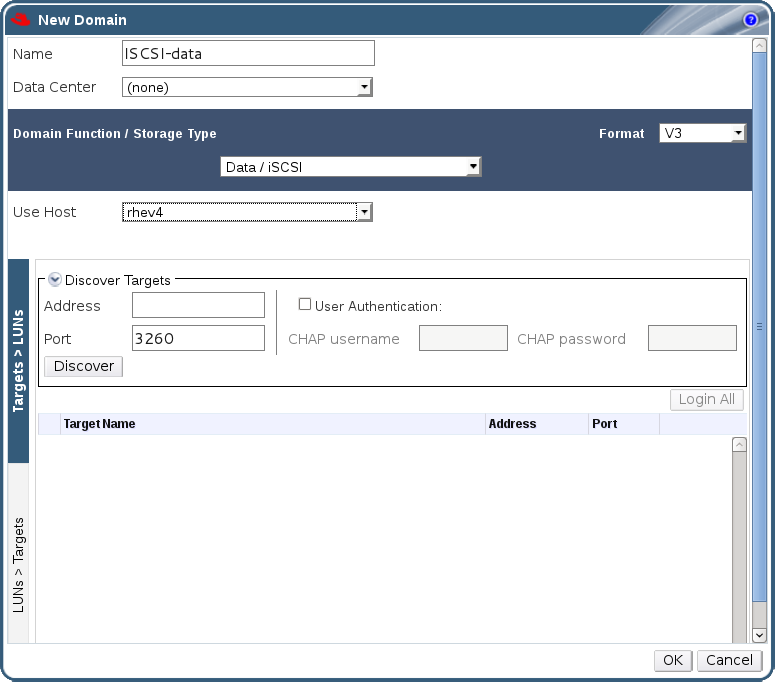

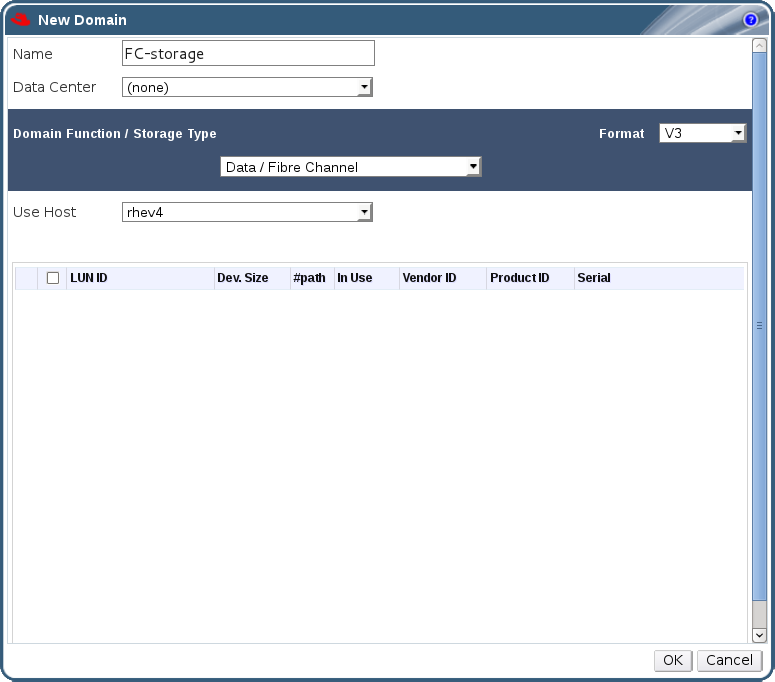

- The storage type to be used for the initial data center attached to the Manager. The default is NFS.

- The path to use for the ISO share, if the Manager is being configured to provide one. The display name, which will be used to label the domain in the Red Hat Enterprise Virtualization Manager also needs to be provided.

- The firewall rules, if any, present on the system that need to be integrated with the rules required for the Manager to function.

Before installation is completed the values selected are displayed for confirmation. Once the values have been confirmed they are applied and the Red Hat Enterprise Virtualization Manager is ready for use.

Example 2.1. Completed Installation

--== CONFIGURATION PREVIEW ==--

Engine database name : engine

Engine database secured connection : False

Engine database host : localhost

Engine database user name : engine

Engine database host name validation : False

Engine database port : 5432

NFS setup : True

PKI organization : Your Org

Application mode : both

Firewall manager : iptables

Update Firewall : True

Configure WebSocket Proxy : True

Host FQDN : Your Manager's FQDN

NFS export ACL : 0.0.0.0/0.0.0.0(rw)

NFS mount point : /var/lib/exports/iso

Datacenter storage type : nfs

Configure local Engine database : True

Set application as default page : True

Configure Apache SSL : True

Please confirm installation settings (OK, Cancel) [OK]:

Note

engine-setup with an answer file. An answer file contains answers to the questions asked by the setup command.

- To create an answer file, use the --generate-answer parameter to specify a path and file name with which to create the answer file. When this option is specified, the engine-setup command records your answers to the questions in the setup process to the answer file.

# engine-setup --generate-answer=[ANSWER_FILE]

- To use an answer file for a new installation, use the --config-append parameter to specify the path and file name of the answer file to be used. The engine-setup command will use the answers stored in the file to complete the installation.

# engine-setup --config-append=[ANSWER_FILE]

engine-setup --help for a full list of parameters.

Note

2.3. Subscribing to the Required Channels

2.3.1. Subscribing to the Red Hat Enterprise Virtualization Manager Channels using Subscription Manager

Before you can install the Red Hat Enterprise Virtualization Manager, you must register the system on which the Red Hat Enterprise Virtualization Manager will be installed with the Red Hat Network and subscribe to the required channels.

Procedure 2.1. Subscribing to the Red Hat Enterprise Virtualization Manager Channels using Subscription Manager

Register the System with Subscription Manager

Run the following command and enter your Red Hat Network user name and password to register the system with the Red Hat Network:

# subscription-manager register

Identify Available Entitlement Pools

Run the following commands to find entitlement pools containing the channels required to install the Red Hat Enterprise Virtualization Manager:

# subscription-manager list --available | grep -A8 "Red Hat Enterprise Linux Server"
# subscription-manager list --available | grep -A8 "Red Hat Enterprise Virtualization"

Attach Entitlement Pools to the System

Use the pool identifiers located in the previous step to attach the Red Hat Enterprise Linux Server and Red Hat Enterprise Virtualization entitlements to the system. Run the following command to attach the entitlements:

# subscription-manager attach --pool=[POOLID]

Enable the Required Channels

Run the following commands to enable the channels required to install Red Hat Enterprise Virtualization:

# yum-config-manager --enable rhel-6-server-rpms
# yum-config-manager --enable rhel-6-server-supplementary-rpms
# yum-config-manager --enable rhel-6-server-rhevm-3.4-rpms
# yum-config-manager --enable jb-eap-6-for-rhel-6-server-rpms
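The four commands above can also be run from a loop, which stops at the first failure. This is a sketch only. The function names `required_repos` and `enable_required_repos` are hypothetical, and the repository names are those listed in this procedure.

```shell
# Print the repositories named in this procedure, one per line.
# required_repos and enable_required_repos are hypothetical helper names.
required_repos() {
    printf '%s\n' \
        rhel-6-server-rpms \
        rhel-6-server-supplementary-rpms \
        rhel-6-server-rhevm-3.4-rpms \
        jb-eap-6-for-rhel-6-server-rpms
}

# Enable each repository in turn, stopping at the first failure.
enable_required_repos() {
    for repo in $(required_repos); do
        yum-config-manager --enable "$repo" || return 1
    done
}

# Example use, as root:
#   enable_required_repos
```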

You have registered the system with Red Hat Network and subscribed to the channels required to install the Red Hat Enterprise Virtualization Manager.

2.3.2. Subscribing to the Red Hat Enterprise Virtualization Manager Channels Using RHN Classic

Note

To install Red Hat Enterprise Virtualization Manager you must first register the target system to Red Hat Network and subscribe to the required software channels.

Procedure 2.2. Subscribing to the Red Hat Enterprise Virtualization Manager Channels using RHN Classic

- Run the rhn_register command to register the system with Red Hat Network. To complete registration successfully you must supply your Red Hat Network user name and password. Follow the on-screen prompts to complete registration of the system.

# rhn_register

Subscribe to Required Channels

You must subscribe the system to the required channels using either the web interface to Red Hat Network or the command line rhn-channel command.

Using the rhn-channel Command

Run the rhn-channel command to subscribe the system to each of the required channels. Run the following commands:

# rhn-channel --add --channel=rhel-x86_64-server-6
# rhn-channel --add --channel=rhel-x86_64-server-supplementary-6
# rhn-channel --add --channel=rhel-x86_64-server-6-rhevm-3.4
# rhn-channel --add --channel=jbappplatform-6-x86_64-server-6-rpm

Important

If you are not the administrator for the machine as defined in Red Hat Network, or the machine is not registered to Red Hat Network, then use of the rhn-channel command results in an error:

Error communicating with server. The message was:
Error Class Code: 37
Error Class Info: You are not allowed to perform administrative tasks on this system.
Explanation: An error has occurred while processing your request. If this problem persists please enter a bug report at bugzilla.redhat.com. If you choose to submit the bug report, please be sure to include details of what you were trying to do when this error occurred and details on how to reproduce this problem.

If you encounter this error when using rhn-channel, you must use the web user interface to add the channel.

Using the Web Interface to Red Hat Network

To add a channel subscription to a system from the web interface:

- Log on to Red Hat Network (http://rhn.redhat.com).

- Move the mouse cursor over the Subscriptions link at the top of the screen, and then click the Registered Systems link in the menu that appears.

- Select the system to which you are adding channels from the list presented on the screen, by clicking the name of the system.

- Click Alter Channel Subscriptions in the Subscribed Channels section of the screen.

- Select the channels to be added from the list presented on the screen. Red Hat Enterprise Virtualization Manager requires:

- The Red Hat Enterprise Linux Server (v. 6 for 64-bit x86_64) channel. This channel is located under the Release Channels for Red Hat Enterprise Linux 6 for x86_64 expandable menu.

- The RHEL Server Supplementary (v. 6 64-bit x86_64) channel. This channel is located under the Release Channels for Red Hat Enterprise Linux 6 for x86_64 expandable menu.

- The Red Hat Enterprise Virtualization Manager (v.3.4 x86_64) channel. This channel is located under the Additional Services Channels for Red Hat Enterprise Linux 6 for x86_64 expandable menu.

- The Red Hat JBoss EAP (v 6) for 6Server x86_64 channel. This channel is located under the Additional Services Channels for Red Hat Enterprise Linux 6 for x86_64 expandable menu.

- Click the Change Subscription button to finalize the change.

The system is now registered with Red Hat Network and subscribed to the channels required for Red Hat Enterprise Virtualization Manager installation.

2.4. Installing the Red Hat Enterprise Virtualization Manager

2.4.1. Configuring an Offline Repository for Red Hat Enterprise Virtualization Manager Installation

Install Red Hat Enterprise Linux

Install Red Hat Enterprise Linux 6 Server on a system that has access to Red Hat Network. This system downloads all required packages, and distributes them to your offline system(s).

Important

Ensure that the system used has a large amount of free disk space available. This procedure downloads a large number of packages, and requires up to 1.5 GB of free disk space.

Register Red Hat Enterprise Linux

Register the system with Red Hat Network (RHN) using either Subscription Manager or RHN Classic.

Subscription Manager

Use the subscription-manager command as root with the register parameter.

# subscription-manager register

RHN Classic

Use the rhn_register command as root.

# rhn_register

Add required channel subscriptions

Subscribe the system for all channels listed in the Red Hat Enterprise Virtualization - Installation Guide.

Subscription Manager

RHN Classic

Configure File Transfer Protocol (FTP) access

Servers that are not connected to the Internet can access the software repository using File Transfer Protocol (FTP). To create the FTP repository you must install and configure vsftpd, while logged in to the system as the root user:

Install vsftpd

Install the vsftpd package.

# yum install vsftpd

Start vsftpd

Start the vsftpd daemon.

# chkconfig vsftpd on
# service vsftpd start

Create sub-directory

Create a sub-directory inside the /var/ftp/pub/ directory. This is where the downloaded packages will be made available.

# mkdir /var/ftp/pub/rhevrepo

Download packages

Once the FTP server has been configured, you must use the reposync command to download the packages to be shared. It downloads all packages from all configured software repositories. This includes repositories for all Red Hat Network channels the system is subscribed to, and any locally configured repositories.

- As the root user, change into the /var/ftp/pub/rhevrepo directory.

# cd /var/ftp/pub/rhevrepo

- Run the reposync command.

# reposync --plugins .

Create local repository metadata

Use the createrepo command to create repository metadata for each of the sub-directories where packages were downloaded under /var/ftp/pub/rhevrepo.

# for DIR in `find /var/ftp/pub/rhevrepo -maxdepth 1 -mindepth 1 -type d`; do createrepo $DIR; done;

Create repository configuration files

Create a yum configuration file, and copy it to the /etc/yum.repos.d/ directory on client systems that you want to connect to this software repository. Ensure that the system hosting the repository is connected to the same network as the client systems where the packages are to be installed.

The configuration file can be created manually, or using a script. If using a script, then before running it you must replace ADDRESS in the baseurl with the IP address or Fully Qualified Domain Name (FQDN) of the system hosting the repository. The script must be run on this system and then distributed to the client machines. For example:

#!/bin/sh

REPOFILE="/etc/yum.repos.d/rhev.repo"

# Start from an empty file so that every repository section is appended
# and earlier sections are not overwritten on later loop iterations.
: > "$REPOFILE"

for DIR in `find /var/ftp/pub/rhevrepo -maxdepth 1 -mindepth 1 -type d`; do
    NAME=`basename "$DIR"`
    echo "[$NAME]" >> "$REPOFILE"
    echo "name=$NAME" >> "$REPOFILE"
    echo "baseurl=ftp://ADDRESS/pub/rhevrepo/$NAME" >> "$REPOFILE"
    echo "enabled=1" >> "$REPOFILE"
    echo "gpgcheck=0" >> "$REPOFILE"
    echo "" >> "$REPOFILE"
done

Copy the repository configuration file to client system

Copy the repository configuration file to the /etc/yum.repos.d/ directory on every system that you want to connect to this software repository. For example: Red Hat Enterprise Virtualization Manager system(s), all Red Hat Enterprise Linux virtualization hosts, and all Red Hat Enterprise Linux virtual machines.

Note

- Recursively copy the /var/ftp/pub/rhevrepo directory, and all its contents, to the removable media.

- Modify the /etc/yum.repos.d/rhev.repo file, replacing the baseurl values with the path to which the removable media will be mounted on the client systems. For example file:///media/disk/rhevrepo/.

Note

--newest-only parameter to the reposync command ensures that it only retrieves the newest version of each available package. Once the repository is updated you must ensure it is available to each of your client systems and then run yum update on it.

2.4.2. Installing the Red Hat Enterprise Virtualization Manager Packages

Before you can configure and use the Red Hat Enterprise Virtualization Manager, you must install the rhevm package and dependencies.

Procedure 2.3. Installing the Red Hat Enterprise Virtualization Manager Packages

- To ensure all packages are up to date, run the following command on the machine where you are installing the Red Hat Enterprise Virtualization Manager:

# yum update

- Run the following command to install the rhevm package and dependencies.

# yum install rhevm

Note

The rhevm-doc package is installed as a dependency of the rhevm package, and provides a local copy of the Red Hat Enterprise Virtualization documentation suite. This documentation is also used to provide context sensitive help links from the Administration and User Portals. You can run the following command to search for translated versions of the documentation:

# yum search rhevm-doc

You have installed the rhevm package and dependencies.

2.4.3. Configuring the Red Hat Enterprise Virtualization Manager

engine-setup command. This command asks you a series of questions and, after you provide the required values for all questions, applies that configuration and starts the ovirt-engine service.

Note

engine-setup command guides you through several distinct configuration stages, each comprising several steps that require user input. Suggested configuration defaults are provided in square brackets; if the suggested value is acceptable for a given step, press Enter to accept that value.

Procedure 2.4. Configuring the Red Hat Enterprise Virtualization Manager

Packages

The engine-setup command checks to see if it is performing an upgrade or an installation, and whether any updates are available for the packages linked to the Manager. No user input is required at this stage.

[ INFO ] Checking for product updates...
[ INFO ] No product updates found

Network Configuration

A reverse lookup is performed on the host name of the machine on which the Red Hat Enterprise Virtualization Manager is being installed. The host name is detected automatically, but you can correct this host name if it is incorrect or if you are using virtual hosts. There must be forward and reverse lookup records for the provided host name in DNS, especially if you will also install the reports server.

Host fully qualified DNS name of this server [autodetected host name]:

The engine-setup command checks your firewall configuration and offers to modify that configuration for you to open the ports used by the Manager for external communication, such as TCP ports 80 and 443. If you do not allow the engine-setup command to modify your firewall configuration, then you must manually open the ports used by the Red Hat Enterprise Virtualization Manager.

Do you want Setup to configure the firewall? (Yes, No) [Yes]:
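If you answer No to the firewall prompt, the ports must be opened by hand. The following sketch only prints candidate iptables rules so that they can be reviewed first. Ports 80 and 443 are the two named in the text; the Manager may need other ports that this excerpt does not list, and the function name is an assumption made for this example.

```shell
#!/bin/sh
# Print (do not apply) one iptables rule per TCP port given as an argument.
print_accept_rules() {
    for port in "$@"; do
        printf 'iptables -I INPUT -p tcp --dport %s -j ACCEPT\n' "$port"
    done
}

# Ports 80 and 443 are the examples named in the text above.
print_accept_rules 80 443
```

After reviewing the output, run the printed commands as root. On Red Hat Enterprise Linux 6, service iptables save makes the rules persistent across reboots.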

Database Configuration

You can use either a local or remote PostgreSQL database. The engine-setup command can configure your database automatically (including adding a user and a database), or it can use values that you supply.

Where is the database located? (Local, Remote) [Local]:
Setup can configure the local postgresql server automatically for the engine to run. This may conflict with existing applications.
Would you like Setup to automatically configure postgresql and create Engine database, or prefer to perform that manually? (Automatic, Manual) [Automatic]:

oVirt Engine Configuration

Select Gluster, Virt, or Both. Both offers the greatest flexibility.

Application mode (Both, Virt, Gluster) [Both]:

Set a password for the automatically created administrative user of the Red Hat Enterprise Virtualization Manager:

Engine admin password:
Confirm engine admin password:

PKI Configuration

The Manager uses certificates to communicate securely with its hosts. You provide the organization name for the certificate. This certificate can also optionally be used to secure HTTPS communications with the Manager.

Organization name for certificate [autodetected domain-based name]:

Apache Configuration

By default, external SSL (HTTPS) communication with the Manager is secured with the self-signed certificate created in the PKI configuration stage to securely communicate with hosts. Another certificate may be chosen for external HTTPS connections, without affecting how the Manager communicates with hosts.

Setup can configure apache to use SSL using a certificate issued from the internal CA.
Do you wish Setup to configure that, or prefer to perform that manually? (Automatic, Manual) [Automatic]:

The Red Hat Enterprise Virtualization Manager uses the Apache web server to present a landing page to users. The engine-setup command can make the landing page of the Manager the default page presented by Apache.

Setup can configure the default page of the web server to present the application home page. This may conflict with existing applications.
Do you wish to set the application as the default web page of the server? (Yes, No) [Yes]:

System Configuration

The engine-setup command can create an NFS share on the Manager to use as an ISO storage domain. Hosting the ISO domain locally to the Manager simplifies keeping some elements of your environment up to date.

Configure an NFS share on this server to be used as an ISO Domain? (Yes, No) [Yes]:
Local ISO domain path [/var/lib/exports/iso]:
Local ISO domain ACL [0.0.0.0/0.0.0.0(rw)]:
Local ISO domain name [ISO_DOMAIN]:

Websocket Proxy Server Configuration

The engine-setup command can optionally configure a websocket proxy server for allowing users to connect to virtual machines via the noVNC or HTML 5 consoles.

Configure WebSocket Proxy on this machine? (Yes, No) [Yes]:

Miscellaneous Configuration

You can use the engine-setup command to allow a proxy server to broker transactions from the Red Hat Access plug-in.

Would you like transactions from the Red Hat Access Plugin sent from the RHEV Manager to be brokered through a proxy server? (Yes, No) [No]:

[ INFO ] Stage: Setup validation

Configuration Preview

Check the configuration preview to confirm the values you entered before they are applied. If you choose to proceed, engine-setup configures the Red Hat Enterprise Virtualization Manager using those values.

Engine database name : engine
Engine database secured connection : False
Engine database host : localhost
Engine database user name : engine
Engine database host name validation : False
Engine database port : 5432
NFS setup : True
PKI organization : Your Org
Application mode : both
Firewall manager : iptables
Update Firewall : True
Configure WebSocket Proxy : True
Host FQDN : Your Manager's FQDN
NFS export ACL : 0.0.0.0/0.0.0.0(rw)
NFS mount point : /var/lib/exports/iso
Datacenter storage type : nfs
Configure local Engine database : True
Set application as default page : True
Configure Apache SSL : True
Please confirm installation settings (OK, Cancel) [OK]:

When your environment has been configured, the engine-setup command displays details about how to access your environment and related security details.

Clean Up and Termination

The engine-setup command cleans up any temporary files created during the configuration process, and outputs the location of the log file for the Red Hat Enterprise Virtualization Manager configuration process.

[ INFO ] Stage: Clean up
Log file is located at /var/log/ovirt-engine/setup/ovirt-engine-setup-installation-date.log
[ INFO ] Stage: Pre-termination
[ INFO ] Stage: Termination
[ INFO ] Execution of setup completed successfully

The Red Hat Enterprise Virtualization Manager has been configured and is running on your server. You can log in to the Administration Portal as the admin@internal user to continue configuring the Manager. Furthermore, the engine-setup command saves your answers to a file that can be used to reconfigure the Manager using the same values.

2.4.4. Preparing a PostgreSQL Database for Use with Red Hat Enterprise Virtualization Manager

You can manually configure a database server to host the database used by the Red Hat Enterprise Virtualization Manager. The database can be hosted either locally on the machine on which the Red Hat Enterprise Virtualization Manager is installed, or remotely on another machine.

Important

Prepare the database before you run the engine-setup command.

Procedure 2.5. Preparing a PostgreSQL Database for use with Red Hat Enterprise Virtualization Manager

- Run the following commands to initialize the PostgreSQL database, start the postgresql service and ensure this service starts on boot:

# service postgresql initdb
# service postgresql start
# chkconfig postgresql on

- Create a user for the Red Hat Enterprise Virtualization Manager to use when it writes to and reads from the database, and a database in which to store data about the Red Hat Enterprise Virtualization environment. You must perform this step on both local and remote databases. Use the psql terminal as the postgres user.

# su - postgres
$ psql
postgres=# create role [user name] with login encrypted password '[password]';
postgres=# create database [database name] owner [user name] template template0 encoding 'UTF8' lc_collate 'en_US.UTF-8' lc_ctype 'en_US.UTF-8';

- Run the following commands to connect to the new database and add the plpgsql language:

postgres=# \c [database name]
CREATE LANGUAGE plpgsql;

- Ensure the database can be accessed remotely by enabling client authentication. Edit the /var/lib/pgsql/data/pg_hba.conf file, and add the following in accordance with the location of the database:

  - For local databases, add the two following lines immediately underneath the line starting with Local at the bottom of the file:

host [database name] [user name] 0.0.0.0/0 md5
host [database name] [user name] ::0/0 md5

  - For remote databases, add the following line immediately underneath the line starting with Local at the bottom of the file, replacing X.X.X.X with the IP address of the Manager:

host [database name] [user name] X.X.X.X/32 md5

- Allow TCP/IP connections to the database. You must perform this step for remote databases. Edit the /var/lib/pgsql/data/postgresql.conf file and add the following line:

listen_addresses='*'

This example configures the postgresql service to listen for connections on all interfaces. You can specify an interface by giving its IP address.

- Restart the postgresql service. This step is required on both local and remote manually configured database servers.

# service postgresql restart

You have manually configured a PostgreSQL database to use with the Red Hat Enterprise Virtualization Manager.
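The pg_hba.conf entries from the procedure above can be generated rather than typed by hand. The following is a minimal sketch: the function name is an assumption, engine is the default database name and user offered by engine-setup, and 192.0.2.10 is a documentation address standing in for the IP address of the Manager.

```shell
#!/bin/sh
# Print the pg_hba.conf entries described in the procedure above.
# $1: database name
# $2: database user
# $3: "local" for a database on the Manager machine, or the IPv4
#     address of the Manager when the database server is remote
pg_hba_lines() {
    db=$1
    user=$2
    where=$3
    if [ "$where" = "local" ]; then
        # Local database: one IPv4 entry and one IPv6 entry
        printf 'host %s %s 0.0.0.0/0 md5\n' "$db" "$user"
        printf 'host %s %s ::0/0 md5\n' "$db" "$user"
    else
        # Remote database: allow only the Manager's address
        printf 'host %s %s %s/32 md5\n' "$db" "$user" "$where"
    fi
}

pg_hba_lines engine engine local
pg_hba_lines engine engine 192.0.2.10
```

The function only prints the lines. Placing them in pg_hba.conf underneath the correct line, and restarting the postgresql service afterwards, remain manual steps.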

2.4.5. Configuring the Manager to Use a Manually Configured Local or Remote PostgreSQL Database

During the database configuration stage of configuring the Red Hat Enterprise Virtualization Manager using the engine-setup script, you can choose to use a manually configured database. You can select to use a locally or remotely installed PostgreSQL database.

Procedure 2.6. Configuring the Manager to use a Manually Configured Local or Remote PostgreSQL Database

- During configuration of the Red Hat Enterprise Virtualization Manager, the engine-setup command prompts you to decide where your database is located:

Where is the database located? (Local, Remote) [Local]:

The steps involved in manually configuring the Red Hat Enterprise Virtualization Manager to use local or remotely hosted databases are the same. However, to use a remotely hosted database you must provide the host name of the remote database server and the port on which it is listening.

- When prompted, enter Manual to manually configure the database:

Would you like Setup to automatically configure postgresql, or prefer to perform that manually? (Automatic, Manual) [Automatic]: Manual

- If you are using a remotely hosted database, supply the engine-setup command with the host name of your database server and the port on which it is listening:

Database host [localhost]:
Database port [5432]:

- For both local and remotely hosted databases, you must select whether or not your database uses a secured connection. You must also enter the name of the database you configured, the user the Manager can use to access the database, and the password of that user.

Database secured connection (Yes, No) [No]:
Database name [engine]:
Database user [engine]:
Database password:

Note

Using a secured connection to your database requires you to also have manually configured secured database connections.

You have configured the Red Hat Enterprise Virtualization Manager to use a manually configured database. The engine-setup command continues with the rest of your environment configuration.

2.4.6. Connecting to the Administration Portal

Access the Administration Portal using a web browser.

Procedure 2.7. Connecting to the Administration Portal

- Open a supported web browser.

- Navigate to https://[your-manager-fqdn]/ovirt-engine, replacing [your-manager-fqdn] with the fully qualified domain name that you provided during installation, to open the login screen.

Important

The first time that you connect to the Administration Portal, you are prompted to trust the certificate being used to secure communications between your browser and the web server.

- Enter your User Name and Password. If you are logging in for the first time, use the user name admin in conjunction with the administrator password that you specified during installation.

- Select the domain against which to authenticate from the Domain drop-down list. If you are logging in using the internal admin user name, select the internal domain.

- You can view the Administration Portal in multiple languages. The default selection will be chosen based on the locale settings of your web browser. If you would like to view the Administration Portal in a language other than the default, select your preferred language from the list.

- Click Login.

You have logged into the Administration Portal.

2.4.7. Removing the Red Hat Enterprise Virtualization Manager

You can use the engine-cleanup command to remove the files associated with the Red Hat Enterprise Virtualization Manager.

Procedure 2.8. Removing Red Hat Enterprise Virtualization Manager

- Run the following command on the machine on which the Red Hat Enterprise Virtualization Manager is installed:

# engine-cleanup

- You are prompted to confirm removal of all Red Hat Enterprise Virtualization Manager components. These components include PKI keys, the locally hosted ISO domain file system layout, PKI configuration, the local NFS exports configuration, and the engine database content.

Do you want to remove all components? (Yes, No) [Yes]:

Note

A backup of the Engine database and a compressed archive of the PKI keys and configuration are always automatically created. These files are saved under /var/lib/ovirt-engine/backups/, and include the date and engine- and engine-pki- in their file names, respectively.

- You are given another opportunity to change your mind and cancel the removal of the Red Hat Enterprise Virtualization Manager. If you choose to proceed, the ovirt-engine service is stopped, and your environment's configuration is removed in accordance with the options you selected.

During execution engine service will be stopped (OK, Cancel) [OK]:
ovirt-engine is about to be removed, data will be lost (OK, Cancel) [Cancel]:OK

The configuration files of your environment have been removed according to your selections when you ran engine-cleanup.

--== SUMMARY ==--

A backup of the database is available at /var/lib/ovirt-engine/backups/engine-date-and-extra-characters.sql

Engine setup successfully cleaned up

A backup of PKI configuration and keys is available at /var/lib/ovirt-engine/backups/engine-pki-date-and-extra-characters.tar.gz

--== END OF SUMMARY ==--

[ INFO ] Generating answer file '/var/lib/ovirt-engine/setup/answers/20130827181911-cleanup.conf'

[ INFO ] Stage: Clean up

Log file is located at /var/log/ovirt-engine/setup/ovirt-engine-remove-date.log

[ INFO ] Stage: Pre-termination

[ INFO ] Stage: Termination

[ INFO ] Execution of cleanup completed successfully

- Remove the Red Hat Enterprise Virtualization packages using the yum command.

# yum remove rhevm* vdsm-bootstrap

2.5. SPICE Client

2.5.1. SPICE Features

- SPICE-HTML5 support (Technology Preview), BZ#974060

  Initial support for the SPICE-HTML5 console client is now offered as a technology preview. This feature allows users to connect to a SPICE console from their browser using the SPICE-HTML5 client. The requirements for enabling SPICE-HTML5 are the same as those of the noVNC console, as follows:

  On the guest:

  - The WebSocket proxy must be set up and running in the environment.

  - The engine must be aware of the WebSocket proxy - use engine-config to set the WebSocketProxy key.

  On the client:

  - The client must have a browser with WebSocket and postMessage support.

  - If SSL is enabled, the engine's certificate authority must be imported in the client browser.

Table 2.1.

| Client Operating System | WAN Optimizations | Dynamic Console Resizing | SPICE Proxy Support | Full High Definition Display | Multiple Monitor Support |
|---|---|---|---|---|---|
| RHEL 5.8+ | No | No | No | Yes | Yes |
| RHEL 6.2 - 6.4 | No | No | No | Yes | Yes |
| RHEL 6.5+ | Yes | Yes | Yes | Yes | Yes |
| Windows XP (All versions) | Yes | Yes | Yes | Yes | Yes |
| Windows 7 (All versions) | Yes | Yes | Yes | Yes | Yes |
| Windows 8 (All versions) | Yes | Yes | Yes | Yes | Yes |
| Windows Server 2008 | Yes | Yes | Yes | Yes | Yes |
| Windows Server 2012 | Yes | Yes | Yes | Yes | Yes |

Chapter 3. The Self-Hosted Engine

3.1. About the Self-Hosted Engine

3.2. Limitations of the Self-Hosted Engine

- An NFS storage domain is required for the configuration. NFS is the only supported file system for the self-hosted engine.

- The host of the self-hosted engine and all attached hosts must use Red Hat Enterprise Linux 6.5 or 6.6. Red Hat Enterprise Virtualization Hypervisors are not supported.

3.3. Installing the Self-Hosted Engine

Install a Red Hat Enterprise Virtualization environment that takes advantage of the self-hosted engine feature, in which the engine is installed on a virtual machine within the environment itself.

Subscription Manager repositories:

- rhel-6-server-rpms
- rhel-6-server-supplementary-rpms
- rhel-6-server-rhevm-3.4-rpms
- jb-eap-6-for-rhel-6-server-rpms
- rhel-6-server-rhev-mgmt-agent-rpms

RHN Classic channels:

- rhel-x86_64-server-6
- rhel-x86_64-server-supplementary-6
- rhel-x86_64-server-6-rhevm-3.4
- jbappplatform-6-x86_64-server-6-rpm
- rhel-x86_64-rhev-mgmt-agent-6

Important

rhel-6-server-rhev-mgmt-agent-rpms in Subscription Manager and rhel-x86_64-rhev-mgmt-agent-6 in RHN Classic.

Perform all steps in this procedure as the root user.

Procedure 3.1. Installing the Self-Hosted Engine

- Run the following command to ensure that the most up-to-date versions of all installed packages are in use:

# yum upgrade

- Run the following command to install the ovirt-hosted-engine-setup package and dependencies:

# yum install ovirt-hosted-engine-setup

You have installed the ovirt-hosted-engine-setup package and are ready to configure the self-hosted engine.

3.4. Configuring the Self-Hosted Engine

When package installation is complete, the Red Hat Enterprise Virtualization Manager must be configured. The hosted-engine deployment script is provided to assist with this task. The script asks you a series of questions, and configures your environment based on your answers. When the required values have been provided, the updated configuration is applied and the Red Hat Enterprise Virtualization Manager services are started.

The hosted-engine deployment script guides you through several distinct configuration stages. The script suggests possible configuration defaults in square brackets. Where these default values are acceptable, no additional input is required.

Host-HE1.example.com in this procedure.

hosted-engine deployment script to access this virtual machine multiple times to install an operating system and to configure the engine.

Perform all steps in this procedure as the root user for the specified machine.

Procedure 3.2. Configuring the Self-Hosted Engine

Initiating Hosted Engine Deployment

Begin configuration of the self-hosted environment by deploying the hosted-engine customization script on Host-HE1. To abort deployment at any time, press CTRL+D.

# hosted-engine --deploy

Configuring Storage

Select the version of NFS and specify the full address, using either the FQDN or IP address, and path name of the shared storage domain. Choose the storage domain and storage data center names to be used in the environment.

During customization use CTRL-D to abort.
Please specify the storage you would like to use (nfs3, nfs4)[nfs3]:
Please specify the full shared storage connection path to use (example: host:/path): storage.example.com:/hosted_engine/nfs
[ INFO ] Installing on first host
Please provide storage domain name. [hosted_storage]:
Local storage datacenter name is an internal name and currently will not be shown in engine's admin UI.
Please enter local datacenter name [hosted_datacenter]:
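The storage prompt expects the connection path in host:/path form. As a hedged illustration, the following sketch checks that shape before you type the value into the script. The function name is an assumption made for this example, and the check is purely syntactic: it does not contact the storage server.

```shell
#!/bin/sh
# Return 0 when $1 has the host:/path form, non-zero otherwise.
valid_storage_path() {
    case $1 in
        *:/*) ;;           # needs a ":" followed by an absolute path
        *) return 1 ;;
    esac
    host=${1%%:/*}         # text before the first ":/"
    [ -n "$host" ]         # the host part must not be empty
}

if valid_storage_path "storage.example.com:/hosted_engine/nfs"; then
    echo "path looks well formed"
fi
```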

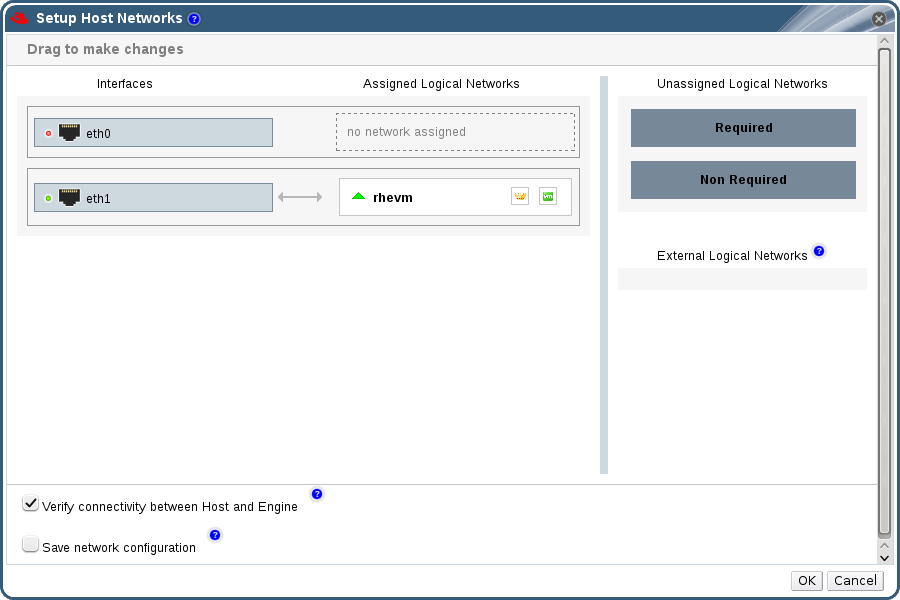

Configuring the Network

The script detects possible network interface controllers (NICs) to use as a management bridge for the environment. It then checks your firewall configuration and offers to modify it for console (SPICE or VNC) access to HostedEngine-VM. Provide a pingable gateway IP address, to be used by the ovirt-ha-agent to help determine a host's suitability for running HostedEngine-VM.

Please indicate a nic to set rhevm bridge on: (eth1, eth0) [eth1]:
iptables was detected on your computer, do you wish setup to configure it? (Yes, No)[Yes]:
Please indicate a pingable gateway IP address [X.X.X.X]:

Configuring the Virtual Machine

The script creates a virtual machine to be configured as the Red Hat Enterprise Virtualization Manager; this hosted engine is referred to in this procedure as HostedEngine-VM. Specify the boot device and, if applicable, the path name of the installation media, the CPU type, the number of virtual CPUs, and the disk size. Specify a MAC address for the HostedEngine-VM, or accept a randomly generated one. The MAC address can be used to update your DHCP server prior to installing the operating system on the virtual machine. Specify memory size and console connection type for the creation of HostedEngine-VM.

Please specify the device to boot the VM from (cdrom, disk, pxe) [cdrom]:
The following CPU types are supported by this host:
- model_Penryn: Intel Penryn Family
- model_Conroe: Intel Conroe Family
Please specify the CPU type to be used by the VM [model_Penryn]:
Please specify the number of virtual CPUs for the VM [Defaults to minimum requirement: 2]:
Please specify the disk size of the VM in GB [Defaults to minimum requirement: 25]:
You may specify a MAC address for the VM or accept a randomly generated default [00:16:3e:77:b2:a4]:
Please specify the memory size of the VM in MB [Defaults to minimum requirement: 4096]:
Please specify the console type you would like to use to connect to the VM (vnc, spice) [vnc]:

Configuring the Hosted Engine

Specify the name for Host-HE1 to be identified in the Red Hat Enterprise Virtualization environment, and the password for the admin@internal user to access the Administration Portal. Provide the FQDN for HostedEngine-VM; this procedure uses the FQDN HostedEngine-VM.example.com. Finally, provide the name and TCP port number of the SMTP server, the email address used to send email notifications, and a comma-separated list of email addresses to receive these notifications.

Enter the name which will be used to identify this host inside the Administrator Portal [hosted_engine_1]: Host-HE1
Enter 'admin@internal' user password that will be used for accessing the Administrator Portal:
Confirm 'admin@internal' user password:
Please provide the FQDN for the engine you would like to use. This needs to match the FQDN that you will use for the engine installation within the VM: HostedEngine-VM.example.com
Please provide the name of the SMTP server through which we will send notifications [localhost]:
Please provide the TCP port number of the SMTP server [25]:
Please provide the email address from which notifications will be sent [root@localhost]:
Please provide a comma-separated list of email addresses which will get notifications [root@localhost]:

Configuration Preview

Before proceeding, the hosted-engine script displays the configuration values you have entered, and prompts for confirmation to proceed with these values.

Bridge interface : eth1
Engine FQDN : HostedEngine-VM.example.com
Bridge name : rhevm
SSH daemon port : 22
Firewall manager : iptables
Gateway address : X.X.X.X
Host name for web application : Host-HE1
Host ID : 1
Image size GB : 25
Storage connection : storage.example.com:/hosted_engine/nfs
Console type : vnc
Memory size MB : 4096
MAC address : 00:16:3e:77:b2:a4
Boot type : pxe
Number of CPUs : 2
CPU Type : model_Penryn
Please confirm installation settings (Yes, No)[No]:

Creating HostedEngine-VM

The script creates a virtual machine to be HostedEngine-VM and provides connection details. You must install an operating system on HostedEngine-VM before the hosted-engine script can proceed on Host-HE1.

[ INFO ] Generating answer file '/etc/ovirt-hosted-engine/answers.conf'
[ INFO ] Stage: Transaction setup
[ INFO ] Stage: Misc configuration
[ INFO ] Stage: Package installation
[ INFO ] Stage: Misc configuration
[ INFO ] Configuring libvirt
[ INFO ] Generating VDSM certificates
[ INFO ] Configuring VDSM
[ INFO ] Starting vdsmd
[ INFO ] Waiting for VDSM hardware info
[ INFO ] Creating Storage Domain
[ INFO ] Creating Storage Pool
[ INFO ] Connecting Storage Pool
[ INFO ] Verifying sanlock lockspace initialization
[ INFO ] Initializing sanlock lockspace
[ INFO ] Initializing sanlock metadata
[ INFO ] Creating VM Image
[ INFO ] Disconnecting Storage Pool
[ INFO ] Start monitoring domain
[ INFO ] Configuring VM
[ INFO ] Updating hosted-engine configuration
[ INFO ] Stage: Transaction commit
[ INFO ] Stage: Closing up
[ INFO ] Creating VM
You can now connect to the VM with the following command:
/usr/bin/remote-viewer vnc://localhost:5900
Use temporary password "3042QHpX" to connect to vnc console.
Please note that in order to use remote-viewer you need to be able to run graphical applications.
This means that if you are using ssh you have to supply the -Y flag (enables trusted X11 forwarding).
Otherwise you can run the command from a terminal in your preferred desktop environment.
If you cannot run graphical applications you can connect to the graphic console from another host or connect to the console using the following command:
virsh -c qemu+tls://Test/system console HostedEngine
If you need to reboot the VM you will need to start it manually using the command:
hosted-engine --vm-start
You can then set a temporary password using the command:
hosted-engine --add-console-password
The VM has been started. Install the OS and shut down or reboot it.
To continue please make a selection:
(1) Continue setup - VM installation is complete
(2) Reboot the VM and restart installation
(3) Abort setup
(1, 2, 3)[1]:

Using the naming convention of this procedure, connect to the virtual machine using VNC with the following command:

/usr/bin/remote-viewer vnc://Host-HE1.example.com:5900

Installing the Virtual Machine Operating System

Connect to HostedEngine-VM, the virtual machine created by the hosted-engine script, and install a Red Hat Enterprise Linux 6.5 or 6.6 operating system. Ensure the machine is rebooted once installation has completed.

Synchronizing the Host and the Virtual Machine

Return to Host-HE1 and continue the hosted-engine deployment script by selecting option 1:

(1) Continue setup - VM installation is complete

Waiting for VM to shut down...
[ INFO ] Creating VM
You can now connect to the VM with the following command:
/usr/bin/remote-viewer vnc://localhost:5900
Use temporary password "3042QHpX" to connect to vnc console.
Please note that in order to use remote-viewer you need to be able to run graphical applications.
This means that if you are using ssh you have to supply the -Y flag (enables trusted X11 forwarding).
Otherwise you can run the command from a terminal in your preferred desktop environment.
If you cannot run graphical applications you can connect to the graphic console from another host or connect to the console using the following command:
virsh -c qemu+tls://Test/system console HostedEngine
If you need to reboot the VM you will need to start it manually using the command:
hosted-engine --vm-start
You can then set a temporary password using the command:
hosted-engine --add-console-password
Please install and setup the engine in the VM.
You may also be interested in subscribing to "agent" RHN/Satellite channel and installing rhevm-guest-agent-common package in the VM.
To continue make a selection from the options below:
(1) Continue setup - engine installation is complete
(2) Power off and restart the VM
(3) Abort setup

Installing the Manager

Connect to HostedEngine-VM, subscribe to the appropriate Red Hat Enterprise Virtualization Manager channels, ensure that the most up-to-date versions of all installed packages are in use, and install the rhevm packages.

# yum upgrade

# yum install rhevm

Configuring the Manager

Configure the engine on HostedEngine-VM:

# engine-setup

Synchronizing the Host and the Manager

Return to Host-HE1 and continue the hosted-engine deployment script by selecting option 1:

(1) Continue setup - engine installation is complete

[ INFO ] Engine replied: DB Up!Welcome to Health Status!
[ INFO ] Waiting for the host to become operational in the engine. This may take several minutes...
[ INFO ] Still waiting for VDSM host to become operational...
[ INFO ] The VDSM Host is now operational
Please shutdown the VM allowing the system to launch it as a monitored service.
The system will wait until the VM is down.

Shutting Down HostedEngine-VM

Shut down HostedEngine-VM.

# shutdown now

Setup Confirmation

Return to Host-HE1 to confirm it has detected that HostedEngine-VM is down.

[ INFO ] Enabling and starting HA services
Hosted Engine successfully set up
[ INFO ] Stage: Clean up
[ INFO ] Stage: Pre-termination
[ INFO ] Stage: Termination

When the hosted-engine deployment script completes successfully, the Red Hat Enterprise Virtualization Manager is configured and running on your server. In contrast to a bare-metal Manager installation, the hosted engine Manager has already configured the data center, cluster, host (Host-HE1), storage domain, and virtual machine of the hosted engine (HostedEngine-VM). You can log in as the admin@internal user to continue configuring the Manager and add further resources.

engine-manage-domains command.

The ovirt-hosted-engine-setup script also saves the answers you gave during configuration to a file, to help with disaster recovery. If a destination is not specified using the --generate-answer=<file> argument, the answer file is generated at /etc/ovirt-hosted-engine/answers.conf.

3.5. Installing Additional Hosts to a Self-Hosted Environment

Adding further nodes to a self-hosted environment is very similar to deploying the original host, but the process is much shorter because the script detects the existing environment.

Perform all steps in this procedure as the root user.

Procedure 3.3. Adding the host

- Install the ovirt-hosted-engine-setup package.

# yum install ovirt-hosted-engine-setup

- Configure the host with the deployment command.

# hosted-engine --deploy

Configuring Storage

Specify the storage type and the full address, using either the Fully Qualified Domain Name (FQDN) or IP address, and path name of the shared storage domain used in the self-hosted environment.

Please specify the storage you would like to use (nfs3, nfs4)[nfs3]:
Please specify the full shared storage connection path to use (example: host:/path): storage.example.com:/hosted_engine/nfs

Detecting the Self-Hosted Engine

The hosted-engine script detects that the shared storage is being used and asks if this is an additional host setup. You are then prompted for the host ID, which must be an integer not already assigned to an additional host in the environment.

The specified storage location already contains a data domain. Is this an additional host setup (Yes, No)[Yes]?
[ INFO ] Installing on additional host
Please specify the Host ID [Must be integer, default: 2]:

Configuring the System

The hosted-engine script uses the answer file generated by the original hosted-engine setup. To achieve this, the script requires the FQDN or IP address and the password of the root user of that host so as to access and secure-copy the answer file to the additional host.

[WARNING] A configuration file must be supplied to deploy Hosted Engine on an additional host.
The answer file may be fetched from the first host using scp.
If you do not want to download it automatically you can abort the setup answering no to the following question.
Do you want to scp the answer file from the first host? (Yes, No)[Yes]:
Please provide the FQDN or IP of the first host:
Enter 'root' user password for host Host-HE1.example.com:
[ INFO ] Answer file successfully downloaded

Configuring the Hosted Engine

Specify the name for the additional host to be identified in the Red Hat Enterprise Virtualization environment, and the password for the admin@internal user.

Enter the name which will be used to identify this host inside the Administrator Portal [hosted_engine_2]:
Enter 'admin@internal' user password that will be used for accessing the Administrator Portal:
Confirm 'admin@internal' user password:

Configuration Preview

Before proceeding, the hosted-engine script displays the configuration values you have entered, and prompts for confirmation to proceed with these values.

Bridge interface : eth1
Engine FQDN : HostedEngine-VM.example.com
Bridge name : rhevm
SSH daemon port : 22
Firewall manager : iptables
Gateway address : X.X.X.X
Host name for web application : hosted_engine_2
Host ID : 2
Image size GB : 25
Storage connection : storage.example.com:/hosted_engine/nfs
Console type : vnc
Memory size MB : 4096
MAC address : 00:16:3e:05:95:50
Boot type : disk
Number of CPUs : 2
CPU Type : model_Penryn
Please confirm installation settings (Yes, No)[Yes]:

After confirmation, the script completes installation of the host and adds it to the environment.

3.6. Maintaining the Self-Hosted Engine

- global - All high-availability agents in the cluster are disabled from monitoring the state of the engine virtual machine. The global maintenance mode must be applied for any setup or upgrade operations that require the engine to be stopped. Examples of this include upgrading to a later version of Red Hat Enterprise Virtualization, and installation of the rhevm-dwh and rhevm-reports packages necessary for the Reports Portal.

- local - The high-availability agent on the host issuing the command is disabled from monitoring the state of the engine virtual machine. The host is exempt from hosting the engine virtual machine while in local maintenance mode; if hosting the engine virtual machine when placed into this mode, the engine will be migrated to another host, provided there is a suitable contender. The local maintenance mode is recommended when applying system changes or updates to the host.

- none - Disables maintenance mode, ensuring that the high-availability agents are operating.

# hosted-engine --set-maintenance --mode=mode

Run this command as the root user.
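Because the command accepts only the three modes listed above, a small wrapper can reject anything else before it reaches the host. This is a sketch only: the function name set_ha_maintenance and the DRY_RUN variable are hypothetical conveniences, not part of the product.

```shell
# Hypothetical wrapper around the documented command. It rejects any mode
# other than global, local, or none. With DRY_RUN unset or set to 1 it only
# prints the command; set DRY_RUN=0 to actually run it (as root).
set_ha_maintenance() {
    mode=$1
    case $mode in
        global|local|none) ;;
        *) echo "unknown maintenance mode: $mode" >&2; return 1 ;;
    esac
    if [ "${DRY_RUN:-1}" = 1 ]; then
        echo "hosted-engine --set-maintenance --mode=$mode"
    else
        hosted-engine --set-maintenance --mode="$mode"
    fi
}

# Prints the command that would place the cluster into global maintenance.
set_ha_maintenance global
```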

3.7. Upgrading the Self-Hosted Engine

Upgrade your Red Hat Enterprise Virtualization hosted-engine environment from version 3.3 to 3.4.

Run all commands in this procedure as the root user.

Procedure 3.4. Upgrading the Self-Hosted Engine

- Log into either host and set the maintenance mode to global to disable the high-availability agents.

# hosted-engine --set-maintenance --mode=global

- Access the Red Hat Enterprise Virtualization Manager Administration Portal. Select Host A and put it into maintenance mode by clicking the Maintenance button.

Important

The host that you put into maintenance mode and upgrade must not be the host currently hosting the Manager virtual machine.

- Log into and update Host A.

# yum update

- Restart VDSM on Host A.

# service vdsmd restart

- Restart ovirt-ha-broker and ovirt-ha-agent on Host A.

# service ovirt-ha-broker restart

# service ovirt-ha-agent restart

- Log into either host and turn off the hosted-engine maintenance mode so that the Manager virtual machine can migrate to the other host.

# hosted-engine --set-maintenance --mode=none

- Access the Red Hat Enterprise Virtualization Manager Administration Portal. Select Host A and activate it by clicking the Activate button.

- Log into Host B and set the maintenance mode to global to disable the high-availability agents.

# hosted-engine --set-maintenance --mode=global

- Update Host B.

# yum update

- Restart VDSM on Host B.

# service vdsmd restart

- Restart ovirt-ha-broker and ovirt-ha-agent on Host B.

# service ovirt-ha-broker restart

# service ovirt-ha-agent restart

- Turn off the hosted-engine maintenance mode on Host B.

# hosted-engine --set-maintenance --mode=none

- Access the Red Hat Enterprise Virtualization Manager Administration Portal. Select Host B and activate it by clicking the Activate button.

- Log into the Manager virtual machine and update the engine as per the instructions in Section 5.2.4, “Upgrading to Red Hat Enterprise Virtualization Manager 3.4”.

- Access the Red Hat Enterprise Virtualization Manager Administration Portal.

- Select the Default cluster and click Edit to open the Edit Cluster window.

- Use the Compatibility Version drop-down menu to select 3.4. Click OK to save the change and close the window.

You have upgraded both the hosts and the Manager in your hosted-engine setup to Red Hat Enterprise Virtualization 3.4.

3.8. Upgrading Additional Hosts in a Self-Hosted Environment

It is recommended that all hosts in your self-hosted environment are upgraded at the same time. This prevents version 3.3 hosts from going into a Non Operational state. If this is not practical in your environment, follow this procedure to upgrade any additional hosts.

Run all commands in this procedure as the root user.

Procedure 3.5. Upgrading Additional Hosts

- Log into the host and set the maintenance mode to local.

# hosted-engine --set-maintenance --mode=local

- Access the Red Hat Enterprise Virtualization Manager Administration Portal. Select the host and put it into maintenance mode by clicking the Maintenance button.

- Log into and update the host.

# yum update

- Restart VDSM on the host.

# service vdsmd restart

- Restart ovirt-ha-broker and ovirt-ha-agent on the host.

# service ovirt-ha-broker restart

# service ovirt-ha-agent restart

- Turn off the hosted-engine maintenance mode on the host.

# hosted-engine --set-maintenance --mode=none

- Access the Red Hat Enterprise Virtualization Manager Administration Portal. Select the host and activate it by clicking the Activate button.

You have updated an additional host in your self-hosted environment to Red Hat Enterprise Virtualization 3.4.
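The host-side commands in Procedure 3.5 can be collected into one sequence so that none is skipped. The sketch below is an illustration under stated assumptions: the run and manual helpers, the upgrade_additional_host function, and the DRY_RUN variable are all hypothetical. By default it only prints each command; the Administration Portal steps remain manual.

```shell
# Hypothetical helper: print a command, and execute it only when DRY_RUN=0.
run() {
    echo "+ $*"
    if [ "${DRY_RUN:-1}" = 0 ]; then
        "$@"
    fi
}

# Hypothetical helper: show a manual step, and wait for Enter when DRY_RUN=0.
manual() {
    echo "MANUAL: $*"
    if [ "${DRY_RUN:-1}" = 0 ]; then
        printf 'Press Enter when done: '
        read -r reply
    fi
}

# The commands from Procedure 3.5, in the documented order. Stops at the
# first command that fails.
upgrade_additional_host() {
    run hosted-engine --set-maintenance --mode=local || return 1
    manual "Administration Portal: select the host and click Maintenance"
    run yum update || return 1
    run service vdsmd restart || return 1
    run service ovirt-ha-broker restart || return 1
    run service ovirt-ha-agent restart || return 1
    run hosted-engine --set-maintenance --mode=none || return 1
    manual "Administration Portal: select the host and click Activate"
}

upgrade_additional_host
```

Review the printed sequence first; run it with DRY_RUN=0 as the root user only once it matches the procedure for your environment.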

3.9. Backing up and Restoring a Self-Hosted Environment

Backing up and restoring a self-hosted environment uses the engine-backup tool, which only allows you to back up the Red Hat Enterprise Virtualization Manager virtual machine, not the host that contains the Manager virtual machine.

- Back up the original Red Hat Enterprise Virtualization Manager configuration settings and database content.

- Create a freshly installed Red Hat Enterprise Linux host and run the hosted-engine deployment script.

- Restore the Red Hat Enterprise Virtualization Manager configuration settings and database content in the new Manager virtual machine.

- Remove hosted-engine hosts in a Non Operational state and re-install them into the restored self-hosted engine environment.

Prerequisites

- To restore a self-hosted engine environment, you must prepare a freshly installed Red Hat Enterprise Linux system on a physical host.

- The operating system version of the new host and Manager must be the same as that of the original host and Manager.

- You must have entitlements to subscribe your new environment. For a list of the required repositories, see Subscribing to the Required Entitlements.

- The fully qualified domain name of the new Manager must be the same as that of the original Manager. Forward and reverse lookup records must both be set in DNS.

- The new Manager database must have the same database user name as the original Manager database.
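The forward and reverse lookup prerequisite can be spot-checked from the new host. The following sketch assumes the getent utility is available; it consults the system resolver, including /etc/hosts, so it only approximates a pure DNS test. The function name check_manager_dns is hypothetical.

```shell
# Hypothetical check: resolve the Manager FQDN to an address, resolve that
# address back to a name, and compare the two names case-insensitively.
check_manager_dns() {
    fqdn=$(printf '%s' "$1" | tr '[:upper:]' '[:lower:]')
    addr=$(getent hosts "$fqdn" | awk 'NR == 1 { print $1 }')
    if [ -z "$addr" ]; then
        echo "no forward record for $fqdn" >&2
        return 1
    fi
    name=$(getent hosts "$addr" | awk 'NR == 1 { print $2 }' | tr '[:upper:]' '[:lower:]')
    if [ "$name" != "$fqdn" ]; then
        echo "reverse lookup of $addr returned '$name', expected $fqdn" >&2
        return 1
    fi
    echo "$fqdn <-> $addr"
}
```

For example, check_manager_dns Manager.example.com prints the name and address pair when both lookups agree, and returns a non-zero status otherwise.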

3.9.1. Backing up the Self-Hosted Engine Manager Virtual Machine

The backup is created with the engine-backup tool and can be performed without interrupting the ovirt-engine service. The engine-backup tool only allows you to back up the Red Hat Enterprise Virtualization Manager virtual machine, but not the host that contains the Manager virtual machine.

Procedure 3.6. Backing up the Original Red Hat Enterprise Virtualization Manager

Preparing the Failover Host

A failover host, one of the hosted-engine hosts in the environment, must be placed into maintenance mode so that it has no virtual load at the time of the backup. This host can then later be used to deploy the restored self-hosted engine environment. Any of the hosted-engine hosts can be used as the failover host for this backup scenario; however, the restore process is more straightforward if Host 1 is used. The default name for the Host 1 host is hosted_engine_1; this was set when the hosted-engine deployment script was initially run.

- Log in to one of the hosted-engine hosts.

- Confirm that the hosted_engine_1 host is Host 1:

# hosted-engine --vm-status

- Log in to the Administration Portal.

- Select the Hosts tab.

- Select the hosted_engine_1 host in the results list, and click Maintenance.

- Click OK.

Disabling the High-Availability Agents

Disable the high-availability agents on the hosted-engine hosts to prevent migration of the Red Hat Enterprise Virtualization Manager virtual machine during the backup process. Connect to any of the hosted-engine hosts and place the high-availability agents on all hosts into global maintenance mode.

# hosted-engine --set-maintenance --mode=global

Creating a Backup of the Manager

On the Manager virtual machine, back up the configuration settings and database content, replacing [EngineBackupFile] with the file name for the backup file, and [LogFILE] with the file name for the backup log.

# engine-backup --mode=backup --file=[EngineBackupFile] --log=[LogFILE]

Copying the Backup Files to an External Server

Secure copy the backup files to an external server. In the following example, [Storage.example.com] is the fully qualified domain name of a network storage server that will store the backup until it is needed, and /backup/ is any designated folder or path. This step is not mandatory, but the backup files must be accessible to restore the configuration settings and database content.

# scp -p [EngineBackupFiles] [Storage.example.com:/backup/EngineBackupFiles]
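To keep the backup file and its log paired, the two commands can be generated from one set of names. The sketch below only prints the commands, which would then be run on the Manager virtual machine. The function name backup_commands and the date-stamped naming scheme are hypothetical; the storage server and /backup/ path are the placeholders from the example.

```shell
# Hypothetical helper: build matching backup and log file names from a
# directory and a stamp, then print the engine-backup and scp commands.
backup_commands() {
    dir=$1      # directory on the Manager virtual machine
    stamp=$2    # any label, for example a date
    dest=$3     # scp destination, host:/path/
    file="$dir/engine-$stamp.backup"
    log="$dir/engine-$stamp.log"
    echo "engine-backup --mode=backup --file=$file --log=$log"
    echo "scp -p $file $log $dest"
}

backup_commands /root "$(date +%Y%m%d)" Storage.example.com:/backup/
```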

Enabling the High-Availability Agents

Connect to any of the hosted-engine hosts and turn off global maintenance mode. This enables the high-availability agents.

# hosted-engine --set-maintenance --mode=none

Activating the Failover Host

Bring the hosted_engine_1 host out of maintenance mode.

- Log in to the Administration Portal.

- Select the Hosts tab.

- Select hosted_engine_1 from the results list.

- Click Activate.

3.9.2. Creating a New Self-Hosted Engine Environment to be Used as the Restored Environment

Host 1, used in Section 3.9.1, “Backing up the Self-Hosted Engine Manager Virtual Machine”, uses the default hostname of hosted_engine_1, which is also used in this procedure. Due to the nature of the restore process for the self-hosted engine, this failover host must be removed before the final synchronization of the restored engine can take place, and this can only be achieved if the host had no virtual load when the backup was taken. You can also restore the backup on separate hardware that was not used in the backed-up environment, in which case this is not a concern.

Procedure 3.7. Creating a New Self-Hosted Environment to be Used as the Restored Environment

Updating DNS

Update your DNS so that the fully qualified domain name of the Red Hat Enterprise Virtualization environment correlates to the IP address of the new Manager. In this procedure, the fully qualified domain name is set as Manager.example.com. The fully qualified domain name provided for the engine must be identical to that given in the engine setup of the original engine that was backed up.

Initiating Hosted Engine Deployment

On the newly installed Red Hat Enterprise Linux host, run the hosted-engine deployment script. To escape the script at any time, use the CTRL+D keyboard combination to abort deployment.

# hosted-engine --deploy

If running the hosted-engine deployment script over a network, it is recommended to use the screen window manager to avoid losing the session in case of network or terminal disruption. Install the screen package first if not installed.

# screen hosted-engine --deploy

Configuring Storage

Select the type of storage to use.

During customization use CTRL-D to abort.
Please specify the storage you would like to use (iscsi, nfs3, nfs4)[nfs3]:

Choose the storage domain and storage data center names to be used in the environment.

- For NFS storage types, specify the full address, using either the fully qualified domain name or IP address, and path name of the shared storage domain.

Please specify the full shared storage connection path to use (example: host:/path): storage.example.com:/hosted_engine/nfs

- For iSCSI, specify the iSCSI portal IP address, port, user name and password, and select a target name from the auto-detected list:

Please specify the iSCSI portal IP address:
Please specify the iSCSI portal port [3260]:
Please specify the iSCSI portal user:
Please specify the iSCSI portal password:
Please specify the target name (auto-detected values) [default]:
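For NFS, the connection path follows the host:/path form shown in the prompt. The sketch below checks only that shape before you type the value in. It does not confirm that the export exists or is reachable, and it does not handle IPv6 literals. The function name valid_nfs_path is hypothetical.

```shell
# Hypothetical format check for an NFS connection path of the form host:/path.
valid_nfs_path() {
    case $1 in
        *' '*) return 1 ;;     # reject embedded spaces
        ?*:/*) return 0 ;;     # non-empty host, a colon, then an absolute path
        *) return 1 ;;
    esac
}

valid_nfs_path storage.example.com:/hosted_engine/nfs && echo "path format looks valid"
```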

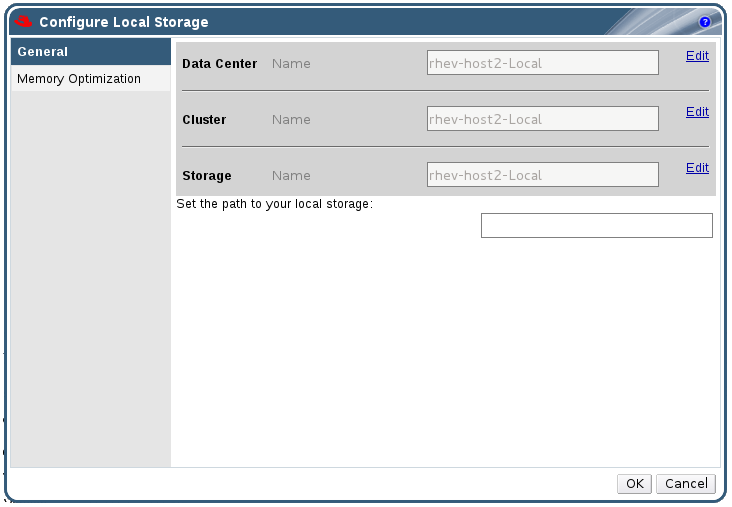

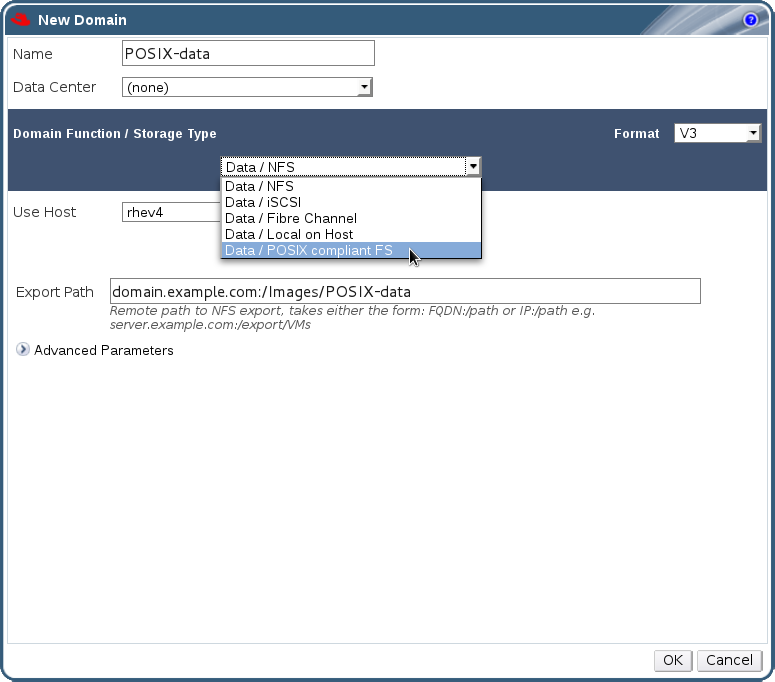

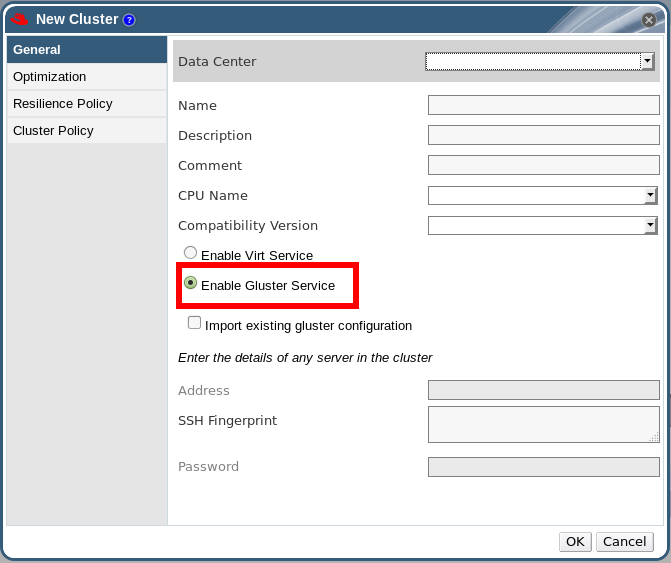

[ INFO ] Installing on first host
Please provide storage domain name. [hosted_storage]:
Local storage datacenter name is an internal name and currently will not be shown in engine's admin UI.
Please enter local datacenter name [hosted_datacenter]: