Chapter 6. CPU

This chapter outlines CPU hardware details and configuration options that affect application performance in Red Hat Enterprise Linux 7. Section 6.1, “Considerations” discusses the CPU-related factors that affect performance. Section 6.2, “Monitoring and Diagnosing Performance Problems” explains how to use Red Hat Enterprise Linux 7 tools to diagnose performance problems related to CPU hardware or configuration details. Section 6.3, “Configuration Suggestions” discusses the tools and strategies you can use to solve CPU-related performance problems in Red Hat Enterprise Linux 7.

6.1. Considerations

Read this section to gain an understanding of how system and application performance is affected by the following factors:

- How processors are connected to each other and to related resources like memory.

- How processors schedule threads for execution.

- How processors handle interrupts in Red Hat Enterprise Linux 7.

6.1.1. System Topology

In modern computing, the idea of a central processing unit is a misleading one, as most modern systems have multiple processors. How these processors are connected to each other and to other system resources — the topology of the system — can greatly affect system and application performance, and the tuning considerations for a system.

There are two primary types of topology used in modern computing:

- Symmetric Multi-Processor (SMP) topology

  SMP topology allows all processors to access memory in the same amount of time. However, because shared and equal memory access inherently forces serialized memory accesses from all the CPUs, SMP system scaling constraints are now generally viewed as unacceptable. For this reason, practically all modern server systems are NUMA machines.

- Non-Uniform Memory Access (NUMA) topology

  NUMA topology was developed more recently than SMP topology. In a NUMA system, multiple processors are physically grouped on a socket. Each socket has a dedicated area of memory, and processors that have local access to that memory are referred to collectively as a node.

  Processors on the same node have high speed access to that node's memory bank, and slower access to memory banks not on their node. Therefore, there is a performance penalty to accessing non-local memory.

  Given this performance penalty, performance sensitive applications on a system with NUMA topology should access memory that is on the same node as the processor executing the application, and should avoid accessing remote memory wherever possible.

  When tuning application performance on a system with NUMA topology, it is therefore important to consider where the application is being executed, and which memory bank is closest to the point of execution.

  In a system with NUMA topology, the /sys file system contains information about how processors, memory, and peripheral devices are connected. The /sys/devices/system/cpu directory contains details about how processors in the system are connected to each other. The /sys/devices/system/node directory contains information about NUMA nodes in the system, and the relative distances between those nodes.
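
As an illustration of the /sys layout described above, the following Python sketch maps each NUMA node to its CPUs by reading the cpulist file in every node directory. The function names are hypothetical and chosen for this example. It assumes a Linux system that exposes /sys/devices/system/node, and returns an empty mapping otherwise.

```python
from pathlib import Path


def parse_cpulist(text):
    """Expand a sysfs CPU list such as '0-3,8' into [0, 1, 2, 3, 8]."""
    cpus = []
    for part in text.strip().split(","):
        if not part:
            continue  # an empty list, for example a node without CPUs
        if "-" in part:
            first, last = part.split("-")
            cpus.extend(range(int(first), int(last) + 1))
        else:
            cpus.append(int(part))
    return cpus


def node_cpus(sysfs_root="/sys/devices/system/node"):
    """Return {node_id: [cpu, ...]} for every nodeN directory found."""
    mapping = {}
    for node_dir in sorted(Path(sysfs_root).glob("node[0-9]*")):
        node_id = int(node_dir.name[len("node"):])
        mapping[node_id] = parse_cpulist((node_dir / "cpulist").read_text())
    return mapping


if __name__ == "__main__":
    for node_id, cpus in sorted(node_cpus().items()):
        print(f"node {node_id}: {cpus}")
```

The parsing is kept separate from the file access so that it can be checked against a sample string without NUMA hardware.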

6.1.1.1. Determining System Topology

There are a number of commands that can help you understand the topology of your system. The numactl --hardware command gives an overview of your system's topology.

    $ numactl --hardware
    available: 4 nodes (0-3)
    node 0 cpus: 0 4 8 12 16 20 24 28 32 36
    node 0 size: 65415 MB
    node 0 free: 43971 MB
    node 1 cpus: 2 6 10 14 18 22 26 30 34 38
    node 1 size: 65536 MB
    node 1 free: 44321 MB
    node 2 cpus: 1 5 9 13 17 21 25 29 33 37
    node 2 size: 65536 MB
    node 2 free: 44304 MB
    node 3 cpus: 3 7 11 15 19 23 27 31 35 39
    node 3 size: 65536 MB
    node 3 free: 44329 MB
    node distances:
    node   0   1   2   3
      0:  10  21  21  21
      1:  21  10  21  21
      2:  21  21  10  21
      3:  21  21  21  10
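
The node distances table at the end of this output can be read programmatically. The following Python sketch parses the table from the sample output above and compares the local and remote distance for node 0. The function name is hypothetical. The distances are relative values, with 10 conventionally denoting local access, so the ratio is best treated as a rough indication of the remote access penalty rather than a measured latency.

```python
def parse_node_distances(output):
    """Extract the node distance matrix from `numactl --hardware` output."""
    lines = output.splitlines()
    start = next(i for i, line in enumerate(lines)
                 if line.strip() == "node distances:")
    matrix = {}
    for line in lines[start + 2:]:  # skip the "node 0 1 2 3" header row
        label, sep, values = line.partition(":")
        if not sep:
            break
        matrix[int(label)] = [int(v) for v in values.split()]
    return matrix


# Distance table copied from the sample output in this section.
SAMPLE = """\
node distances:
node   0   1   2   3
  0:  10  21  21  21
  1:  21  10  21  21
  2:  21  21  10  21
  3:  21  21  21  10
"""

distances = parse_node_distances(SAMPLE)
local = distances[0][0]
remote = max(distances[0])
print(f"node 0: local={local}, farthest remote={remote}, "
      f"ratio={remote / local:.1f}")
# prints: node 0: local=10, farthest remote=21, ratio=2.1
```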

The lscpu command, provided by the util-linux package, gathers information about the CPU architecture, such as the number of CPUs, threads, cores, sockets, and NUMA nodes.

    $ lscpu
    Architecture:          x86_64
    CPU op-mode(s):        32-bit, 64-bit
    Byte Order:            Little Endian
    CPU(s):                40
    On-line CPU(s) list:   0-39
    Thread(s) per core:    1
    Core(s) per socket:    10
    Socket(s):             4
    NUMA node(s):          4
    Vendor ID:             GenuineIntel
    CPU family:            6
    Model:                 47
    Model name:            Intel(R) Xeon(R) CPU E7- 4870 @ 2.40GHz
    Stepping:              2
    CPU MHz:               2394.204
    BogoMIPS:              4787.85
    Virtualization:        VT-x
    L1d cache:             32K
    L1i cache:             32K
    L2 cache:              256K
    L3 cache:              30720K
    NUMA node0 CPU(s):     0,4,8,12,16,20,24,28,32,36
    NUMA node1 CPU(s):     2,6,10,14,18,22,26,30,34,38
    NUMA node2 CPU(s):     1,5,9,13,17,21,25,29,33,37
    NUMA node3 CPU(s):     3,7,11,15,19,23,27,31,35,39
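
The topology fields in the lscpu output are related: the number of logical CPUs is the product of threads per core, cores per socket, and sockets. The following Python sketch, with a hypothetical helper name, parses a few fields copied from the sample output above and checks that relationship. It assumes all CPUs are online and that every socket has the same layout.

```python
def parse_lscpu(output):
    """Turn 'Key: value' lines from lscpu into a dictionary of strings."""
    fields = {}
    for line in output.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            fields[key.strip()] = value.strip()
    return fields


# Fields copied from the sample lscpu output in this section.
SAMPLE = """\
CPU(s):                40
Thread(s) per core:    1
Core(s) per socket:    10
Socket(s):             4
NUMA node(s):          4
"""

fields = parse_lscpu(SAMPLE)
logical_cpus = (int(fields["Thread(s) per core"])
                * int(fields["Core(s) per socket"])
                * int(fields["Socket(s)"]))
print(logical_cpus == int(fields["CPU(s)"]))  # 1 * 10 * 4 == 40
```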

The

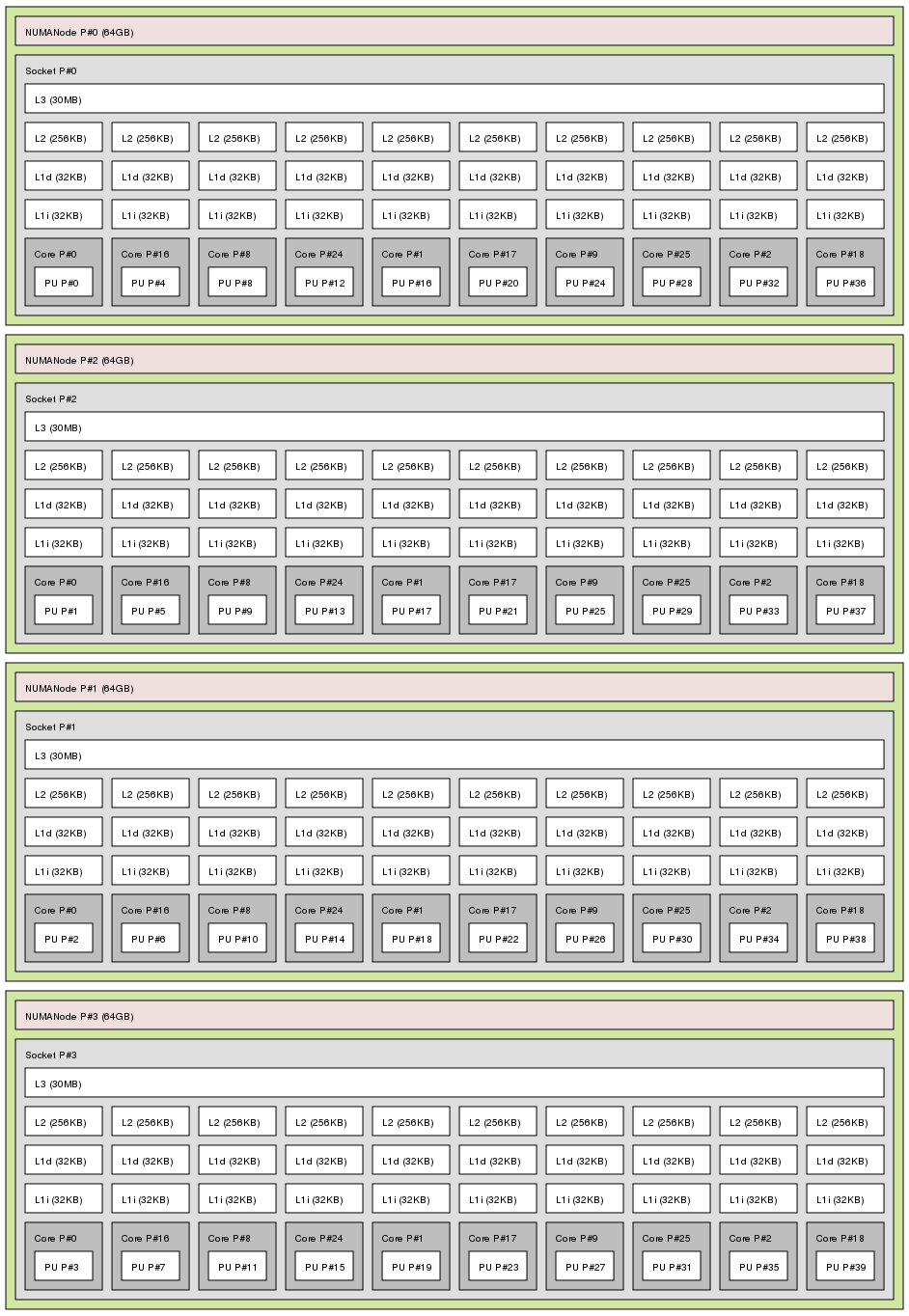

lstopo command, provided by the hwloc package, creates a graphical representation of your system. The lstopo-no-graphics command provides detailed textual output.

Output of lstopo command

6.1.2. Scheduling

In Red Hat Enterprise Linux, the smallest unit of process execution is called a thread. The system scheduler determines which processor runs a thread, and for how long the thread runs. However, because the scheduler's primary concern is to keep the system busy, it may not schedule threads optimally for application performance.

For example, say an application on a NUMA system is running on Node A when a processor on Node B becomes available. To keep the processor on Node B busy, the scheduler moves one of the application's threads to Node B. However, the application thread still requires access to memory on Node A. Because the thread is now running on Node B, and Node A memory is no longer local to the thread, it will take longer to access. It may take longer for the thread to finish running on Node B than it would have taken to wait for a processor on Node A to become available, and to execute the thread on the original node with local memory access.

Performance-sensitive applications often benefit from the designer or administrator explicitly determining where threads run. For details about how to ensure threads are scheduled appropriately for the needs of performance-sensitive applications, see Section 6.3.6, “Tuning Scheduling Policy”.
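
One way an application can influence where its threads run is to restrict its own CPU affinity. The following Python sketch uses os.sched_getaffinity and os.sched_setaffinity, which are available on Linux only. The pin_to_cpus helper is hypothetical, and this is not the method described in Section 6.3.6. Note that CPU affinity by itself does not move memory that has already been allocated on another node.

```python
import os


def pin_to_cpus(cpus, pid=0):
    """Restrict a process (0 = the caller) to the given CPUs.

    Returns the affinity mask that is in effect afterwards.
    """
    allowed = os.sched_getaffinity(pid)
    target = set(cpus) & allowed  # never request CPUs we may not use
    if not target:
        raise ValueError("none of the requested CPUs are available")
    os.sched_setaffinity(pid, target)
    return os.sched_getaffinity(pid)


if __name__ == "__main__":
    # Hypothetical choice: stay on the lowest-numbered CPU we may use.
    # In practice, pass the CPUs of one NUMA node as reported by
    # numactl --hardware.
    first_cpu = min(os.sched_getaffinity(0))
    print(pin_to_cpus({first_cpu}))
```

Pinning all threads of an application to the CPUs of a single node keeps the scheduler from migrating them to a node whose memory is remote.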

6.1.2.1. Kernel Ticks

In previous versions of Red Hat Enterprise Linux, the Linux kernel interrupted each CPU on a regular basis to check what work needed to be done. It used the results to make decisions about process scheduling and load balancing. This regular interruption was known as a kernel tick.

This tick occurred regardless of whether there was work for the core to do. This meant that even idle cores were forced into higher power states on a regular basis (up to 1000 times per second) to respond to the interrupts. This prevented the system from effectively using deep sleep states included in recent generations of x86 processors.

In Red Hat Enterprise Linux 6 and 7, by default, the kernel no longer interrupts idle CPUs, which tend to be in low power states. This behavior is known as the tickless kernel. Where one or fewer tasks are running, periodic interrupts have been replaced with on-demand interrupts, allowing CPUs to remain in an idle or low power state for longer, and reducing power usage.

Red Hat Enterprise Linux 7 offers a dynamic tickless option (nohz_full) to further improve determinism by reducing kernel interference with user-space tasks. This option can be enabled on specified cores with the nohz_full kernel parameter. When this option is enabled on a core, all timekeeping activities are moved to non-latency-sensitive cores. This can be useful for high performance computing and realtime computing workloads where user-space tasks are particularly sensitive to microsecond-level latencies associated with the kernel timer tick.

For details on how to enable the dynamic tickless behavior in Red Hat Enterprise Linux 7, see Section 6.3.1, “Configuring Kernel Tick Time”.
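
To check whether the nohz_full parameter was passed to the running kernel, you can inspect the kernel command line, which Linux exposes in /proc/cmdline. The following Python sketch extracts the CPU list from a command line string. The function name and the sample command line are hypothetical and only illustrate the form of the parameter.

```python
def nohz_full_cpus(cmdline):
    """Return the CPU list given to nohz_full=, or None if it is absent."""
    for token in cmdline.split():
        name, sep, value = token.partition("=")
        if name == "nohz_full" and sep:
            return value
    return None


# Hypothetical command line; on a live system read /proc/cmdline instead.
SAMPLE_CMDLINE = "BOOT_IMAGE=/vmlinuz root=/dev/sda1 ro nohz_full=1-15"

print(nohz_full_cpus(SAMPLE_CMDLINE))   # prints: 1-15
print(nohz_full_cpus("root=/dev/sda1 ro"))  # prints: None
```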

6.1.3. Interrupt Request (IRQ) Handling

An interrupt request or IRQ is a signal for immediate attention sent from a piece of hardware to a processor. Each device in a system is assigned one or more IRQ numbers to allow it to send unique interrupts. When interrupts are enabled, a processor that receives an interrupt request will immediately pause execution of the current application thread in order to address the interrupt request.

Because they halt normal operation, high interrupt rates can severely degrade system performance. It is possible to reduce the amount of time taken by interrupts by configuring interrupt affinity or by sending a number of lower-priority interrupts in a batch (coalescing a number of interrupts).
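
Before changing interrupt affinity, it can help to see how interrupts are currently distributed across CPUs. On Linux, /proc/interrupts lists a counter per CPU for each IRQ. The following Python sketch sums those counters per CPU. The function name is hypothetical and the sample text is made up for illustration, not taken from a real system.

```python
def interrupts_per_cpu(text):
    """Sum the per-CPU counters in /proc/interrupts-style text."""
    lines = text.splitlines()
    ncpus = len(lines[0].split())  # header row: CPU0 CPU1 ...
    totals = [0] * ncpus
    for line in lines[1:]:
        _label, sep, rest = line.partition(":")
        counts = rest.split()[:ncpus]
        # Skip rows that do not carry one numeric counter per CPU,
        # such as the single-value ERR row on a multi-CPU system.
        if (not sep or len(counts) < ncpus
                or not all(c.isdigit() for c in counts)):
            continue
        for cpu, count in enumerate(counts):
            totals[cpu] += int(count)
    return totals


# Made-up sample in the layout of /proc/interrupts.
SAMPLE = """\
           CPU0       CPU1
  0:         45          0   IO-APIC   2-edge      timer
 24:     100000       2000   PCI-MSI 524288-edge      eth0
ERR:          0
"""

print(interrupts_per_cpu(SAMPLE))  # prints: [100045, 2000]
```

A large imbalance between CPUs, as in this sample, suggests that one CPU is handling most interrupts and may be a candidate for affinity tuning.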

For more information about tuning interrupt requests, see Section 6.3.7, “Setting Interrupt Affinity on AMD64 and Intel 64” or Section 6.3.8, “Configuring CPU, Thread, and Interrupt Affinity with Tuna”. For information specific to network interrupts, see Chapter 9, Networking.