Red Hat Training

A Red Hat training course is available for Red Hat Enterprise Linux

Virtualization Deployment and Administration Guide

Installing, configuring, and managing virtual machines on a RHEL physical machine

Yehuda Zimmerman

Laura Novich

Dayle Parker

Scott Radvan

Tahlia Richardson

Abstract

Important

Part I. Deployment

Chapter 1. System Requirements

1.1. Host System Requirements

Minimum host system requirements

- 6 GB free disk space.

- 2 GB RAM.

Recommended system requirements

- One core or thread for each virtualized CPU and one for the host.

- 2 GB of RAM, plus additional RAM for virtual machines.

- 6 GB disk space for the host, plus the required disk space for the virtual machine(s).Most guest operating systems require at least 6 GB of disk space. Additional storage space for each guest depends on their workload.

Swap space

Swap space in Linux is used when the amount of physical memory (RAM) is full. If the system needs more memory resources and the RAM is full, inactive pages in memory are moved to the swap space. While swap space can help machines with a small amount of RAM, it should not be considered a replacement for more RAM. Swap space is located on hard drives, which have a slower access time than physical memory. The size of your swap partition can be calculated from the physical RAM of the host. The Red Hat Customer Portal contains an article on safely and efficiently determining the size of the swap partition: https://access.redhat.com/site/solutions/15244.- When using raw image files, the total disk space required is equal to or greater than the sum of the space required by the image files, the 6 GB of space required by the host operating system, and the swap space for the guest.

Equation 1.1. Calculating required space for guest virtual machines using raw images

total for raw format = images + hostspace + swapFor qcow images, you must also calculate the expected maximum storage requirements of the guest(total for qcow format), as qcow and qcow2 images are able to grow as required. To allow for this expansion, first multiply the expected maximum storage requirements of the guest(expected maximum guest storage)by 1.01, and add to this the space required by the host(host), and the necessary swap space(swap).Equation 1.2. Calculating required space for guest virtual machines using qcow images

total for qcow format = (expected maximum guest storage * 1.01) + host + swap

1.2. KVM Hypervisor Requirements

- an Intel processor with the Intel VT-x and Intel 64 virtualization extensions for x86-based systems; or

- an AMD processor with the AMD-V and the AMD64 virtualization extensions.

Procedure 1.1. Verifying virtualization extensions

Verify the CPU virtualization extensions are available

enter the following command to verify the CPU virtualization extensions are available:$

grep -E 'svm|vmx' /proc/cpuinfoAnalyze the output

- The following example output contains a

vmxentry, indicating an Intel processor with the Intel VT-x extension:flags : fpu tsc msr pae mce cx8

vmxapic mtrr mca cmov pat pse36 clflush dts acpi mmx fxsr sse sse2 ss ht tm syscall lm constant_tsc pni monitor ds_cpl vmx est tm2 cx16 xtpr lahf_lm - The following example output contains an

svmentry, indicating an AMD processor with the AMD-V extensions:flags : fpu tsc msr pae mce cx8 apic mtrr mca cmov pat pse36 clflush mmx fxsr sse sse2 ht syscall nx mmxext

svmfxsr_opt lm 3dnowext 3dnow pni cx16 lahf_lm cmp_legacy svm cr8legacy ts fid vid ttp tm stc

If thegrep -E 'svm|vmx' /proc/cpuinfocommand returns any output, the processor contains the hardware virtualization extensions. In some circumstances, manufacturers disable the virtualization extensions in the BIOS. If the extensions do not appear, or full virtualization does not work, see Procedure A.3, “Enabling virtualization extensions in BIOS” for instructions on enabling the extensions in your BIOS configuration utility.Ensure the KVM kernel modules are loaded

As an additional check, verify that thekvmmodules are loaded in the kernel with the following command:#

lsmod | grep kvmIf the output includeskvm_intelorkvm_amd, thekvmhardware virtualization modules are loaded.

Note

virsh utility (provided by the libvirt-client package) can output a full list of your system's virtualization capabilities with the following command:

# virsh capabilities1.3. KVM Guest Virtual Machine Compatibility

- For host systems: https://access.redhat.com/articles/rhel-limits

- For the KVM hypervisor: https://access.redhat.com/articles/rhel-kvm-limits

Note

1.4. Supported Guest CPU Models

1.4.1. Listing the Guest CPU Models

virsh cpu-models architecture command. For example:

$ virsh cpu-models x86_64

486

pentium

pentium2

pentium3

pentiumpro

coreduo

n270

core2duo

qemu32

kvm32

cpu64-rhel5

cpu64-rhel6

kvm64

qemu64

Conroe

Penryn

Nehalem

Westmere

SandyBridge

Haswell

athlon

phenom

Opteron_G1

Opteron_G2

Opteron_G3

Opteron_G4

Opteron_G5

$ virsh cpu-models ppc64

POWER7

POWER7_v2.1

POWER7_v2.3

POWER7+_v2.1

POWER8_v1.0

cpu_map.xml file, located in /usr/share/libvirt/:

# cat /usr/share/libvirt/cpu_map.xml<cpu> section of the domain XML file. See Section 23.12, “CPU Models and Topology” for more information.

Chapter 2. Installing the Virtualization Packages

yum command and the Subscription Manager application.

kvm kernel module.

2.1. Installing Virtualization Packages During a Red Hat Enterprise Linux Installation

Note

Important

Procedure 2.1. Installing virtualization packages

Select software

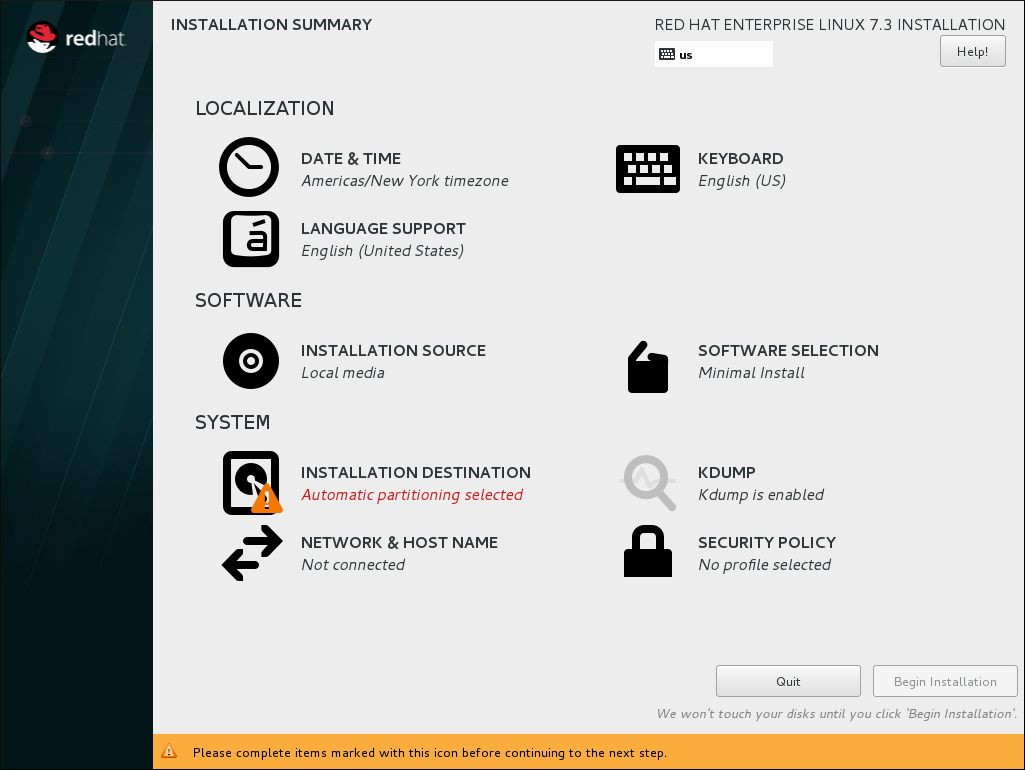

Follow the installation procedure until the Installation Summary screen.

Figure 2.1. The Installation Summary screen

In the Installation Summary screen, click Software Selection. The Software Selection screen opens.Select the server type and package groups

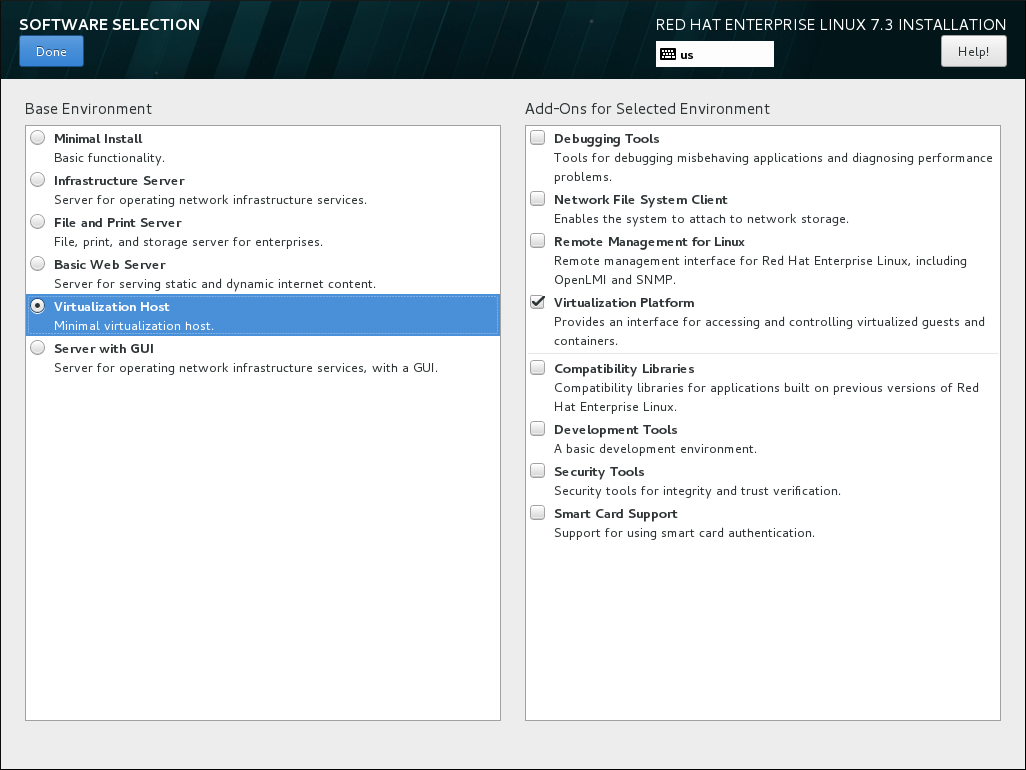

You can install Red Hat Enterprise Linux 7 with only the basic virtualization packages or with packages that allow management of guests through a graphical user interface. Do one of the following:- Install a minimal virtualization hostSelect the Virtualization Host radio button in the Base Environment pane and the Virtualization Platform check box in the Add-Ons for Selected Environment pane. This installs a basic virtualization environment which can be run with

virshor remotely over the network.

Figure 2.2. Virtualization Host selected in the Software Selection screen

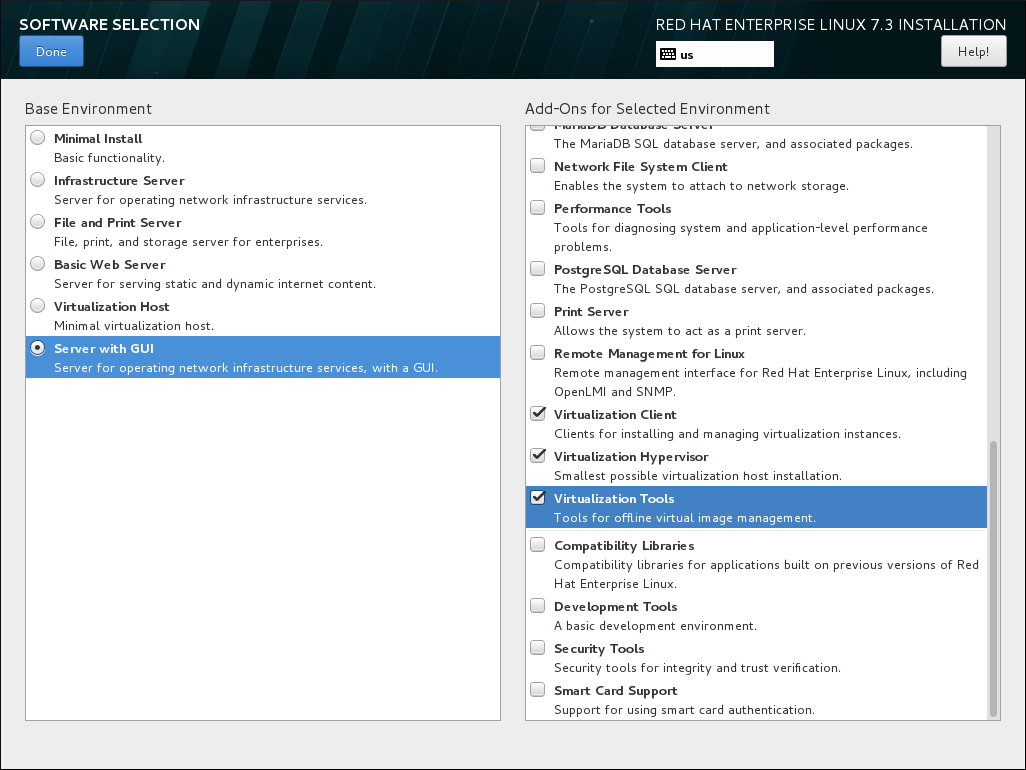

- Install a virtualization host with a graphical user interfaceSelect the Server with GUI radio button in the Base Environment pane and the Virtualization Client, Virtualization Hypervisor, and Virtualization Tools check boxes in the Add-Ons for Selected Environment pane. This installs a virtualization environment along with graphical tools for installing and managing guest virtual machines.

Figure 2.3. Server with GUI selected in the software selection screen

Finalize installation

Click Done and continue with the installation.

Important

2.1.1. Installing KVM Packages with Kickstart Files

%packages section of your Kickstart file:

@virtualization-hypervisor @virtualization-client @virtualization-platform @virtualization-tools

2.2. Installing Virtualization Packages on an Existing Red Hat Enterprise Linux System

subscription-manager register command and follow the prompts. Alternatively, run the Red Hat Subscription Manager application from Applications → System Tools on the desktop to register.

2.2.1. Installing Virtualization Packages Manually

- qemu-kvm: This package provides the user-level KVM emulator and facilitates communication between hosts and guest virtual machines.

- qemu-img: This package provides disk management for guest virtual machines.

Note

The qemu-img package is installed as a dependency of the qemu-kvm package. - libvirt: This package provides the server and host-side libraries for interacting with hypervisors and host systems, and the

libvirtddaemon that handles the library calls, manages virtual machines, and controls the hypervisor.

# yum install qemu-kvm libvirt- virt-install: This package provides the

virt-installcommand for creating virtual machines from the command line. - libvirt-python: This package contains a module that permits applications written in the Python programming language to use the interface supplied by the libvirt API.

- virt-manager: This package provides the virt-manager tool, also known as Virtual Machine Manager. This is a graphical tool for administering virtual machines. It uses the libvirt-client library as the management API.

- libvirt-client: This package provides the client-side APIs and libraries for accessing libvirt servers. The libvirt-client package includes the

virshcommand-line tool to manage and control virtual machines and hypervisors from the command line or a special virtualization shell.

# yum install virt-install libvirt-python virt-manager virt-install libvirt-client2.2.2. Installing Virtualization Package Groups

yum grouplist hidden commad.

Table 2.1. Virtualization Package Groups

| Package Group | Description | Mandatory Packages | Optional Packages |

|---|---|---|---|

Virtualization Hypervisor | Smallest possible virtualization host installation | libvirt, qemu-kvm, qemu-img | qemu-kvm-tools |

Virtualization Client | Clients for installing and managing virtualization instances | gnome-boxes, virt-install, virt-manager, virt-viewer, qemu-img | virt-top, libguestfs-tools, libguestfs-tools-c |

Virtualization Platform | Provides an interface for accessing and controlling virtual machines and containers | libvirt, libvirt-client, virt-who, qemu-img | fence-virtd-libvirt, fence-virtd-multicast, fence-virtd-serial, libvirt-cim, libvirt-java, libvirt-snmp, perl-Sys-Virt |

Virtualization Tools | Tools for offline virtual image management | libguestfs, qemu-img | libguestfs-java, libguestfs-tools, libguestfs-tools-c |

yum group install package_group command. For example, to install the Virtualization Tools package group with all the package types, run:

# yum group install "Virtualization Tools" --setopt=group_package_types=mandatory,default,optionalChapter 3. Creating a Virtual Machine

virt-install command-line utility by a list of parameters or with a script. Both methods are covered by this chapter.

3.1. Guest Virtual Machine Deployment Considerations

- Performance

- Guest virtual machines should be deployed and configured based on their intended tasks. Some guest systems (for instance, guests running a database server) may require special performance considerations. Guests may require more assigned CPUs or memory based on their role and projected system load.

- Input/Output requirements and types of Input/Output

- Some guest virtual machines may have a particularly high I/O requirement or may require further considerations or projections based on the type of I/O (for instance, typical disk block size access, or the amount of clients).

- Storage

- Some guest virtual machines may require higher priority access to storage or faster disk types, or may require exclusive access to areas of storage. The amount of storage used by guests should also be regularly monitored and taken into account when deploying and maintaining storage. Make sure to read all the considerations outlined in Red Hat Enterprise Linux 7 Virtualization Security Guide. It is also important to understand that your physical storage may limit your options in your virtual storage.

- Networking and network infrastructure

- Depending upon your environment, some guest virtual machines could require faster network links than other guests. Bandwidth or latency are often factors when deploying and maintaining guests, especially as requirements or load changes.

- Request requirements

- SCSI requests can only be issued to guest virtual machines on virtio drives if the virtio drives are backed by whole disks, and the disk device parameter is set to

lunin the domain XML file, as shown in the following example:<devices> <emulator>/usr/libexec/qemu-kvm</emulator> <disk type='block' device='lun'>

3.2. Creating Guests with virt-install

virt-install command to create virtual machines and install operating system on those virtual machines from the command line. virt-install can be used either interactively or as part of a script to automate the creation of virtual machines. If you are using an interactive graphical installation, you must have virt-viewer installed before you run virt-install. In addition, you can start an unattended installation of virtual machine operating systems using virt-install with kickstart files.

Note

virt-install commands to complete successfully.

virt-install utility uses a number of command-line options. However, most virt-install options are not required.

--name- The name of the virtual machine.

--memory- The amount of memory (RAM) to allocate to the guest, in MiB.

- Guest storage

- Use one of the following guest storage options:

--diskThe storage configuration details for the virtual machine. If you use the--disk noneoption, the virtual machine is created with no disk space.--filesystemThe path to the file system for the virtual machine guest.

- Installation method

- Use one of the following installation methods:

--locationThe location of the installation media.--cdromThe file or device used as a virtual CD-ROM device. It can be path to an ISO image, or a URL from which to fetch or access a minimal boot ISO image. However, it can not be a physical host CD-ROM or DVD-ROM device.--pxeUses the PXE boot protocol to load the initial ramdisk and kernel for starting the guest installation process.--importSkips the OS installation process and builds a guest around an existing disk image. The device used for booting is the first device specified by thediskorfilesystemoption.--bootThe post-install VM boot configuration. This option allows specifying a boot device order, permanently booting off kernel and initrd with optional kernel arguments and enabling a BIOS boot menu.

# virt-install --help# virt install --option=?virt-install man page also documents each command option, important variables, and examples.

virt-install, you may also need to use qemu-img to configure storage options. For instructions on using qemu-img, see Chapter 14, Using qemu-img.

3.2.1. Installing a virtual machine from an ISO image

#virt-install \--name guest1-rhel7 \--memory 2048 \--vcpus 2 \--disk size=8 \--cdrom /path/to/rhel7.iso \--os-variant rhel7

--cdrom /path/to/rhel7.iso option specifies that the virtual machine will be installed from the CD or DVD image at the specified location.

3.2.2. Importing a virtual machine image

#virt-install \--name guest1-rhel7 \--memory 2048 \--vcpus 2 \--disk /path/to/imported/disk.qcow \--import \--os-variant rhel7

--import option specifies that the virtual machine will be imported from the virtual disk image specified by the --disk /path/to/imported/disk.qcow option.

3.2.3. Installing a virtual machine from the network

#virt-install \--name guest1-rhel7 \--memory 2048 \--vcpus 2 \--disk size=8 \--location http://example.com/path/to/os \--os-variant rhel7

--location http://example.com/path/to/os option specifies that the installation tree is at the specified network location.

3.2.4. Installing a virtual machine using PXE

--network option specifying a bridged network and the --pxe option must be specified.

#virt-install \--name guest1-rhel7 \--memory 2048 \--vcpus 2 \--disk size=8 \--network=bridge:br0 \--pxe \--os-variant rhel7

3.2.5. Installing a virtual machine with Kickstart

#virt-install \--name guest1-rhel7 \--memory 2048 \--vcpus 2 \--disk size=8 \--location http://example.com/path/to/os \--os-variant rhel7 \--initrd-inject /path/to/ks.cfg \--extra-args="ks=file:/ks.cfg console=tty0 console=ttyS0,115200n8"

initrd-inject and the extra-args options specify that the virtual machine will be installed using a Kickstarter file.

3.2.6. Configuring the guest virtual machine network during guest creation

Default network with NAT

libvirtd's network address translation (NAT) virtual network switch. For more information about NAT, see Section 6.1, “Network Address Translation (NAT) with libvirt”.

virt-install:

--network defaultNote

network option is specified, the guest virtual machine is configured with a default network with NAT.

Bridged network with DHCP

--network br0Note

virt-install. For details on creating a network bridge, see Section 6.4.1, “Configuring Bridged Networking on a Red Hat Enterprise Linux 7 Host”.

Bridged network with a static IP address

--network br0 \--extra-args "ip=192.168.1.2::192.168.1.1:255.255.255.0:test.example.com:eth0:none"

No network

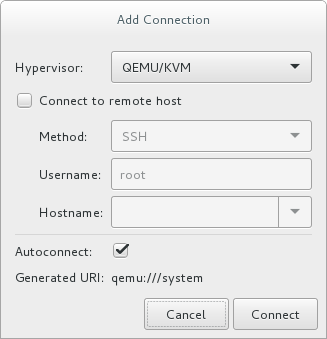

--network=none3.3. Creating Guests with virt-manager

virt-manager, is a graphical tool for creating and managing guest virtual machines.

3.3.1. virt-manager installation overview

- Choosing the hypervisor and installation type

- Locating and configuring the installation media

- Configuring memory and CPU options

- Configuring the virtual machine's storage

- Configuring virtual machine name, networking, architecture, and other hardware settings

virt-manager can access the installation media (whether locally or over the network) before you continue.

3.3.2. Creating a Red Hat Enterprise Linux 7 Guest with virt-manager

Note

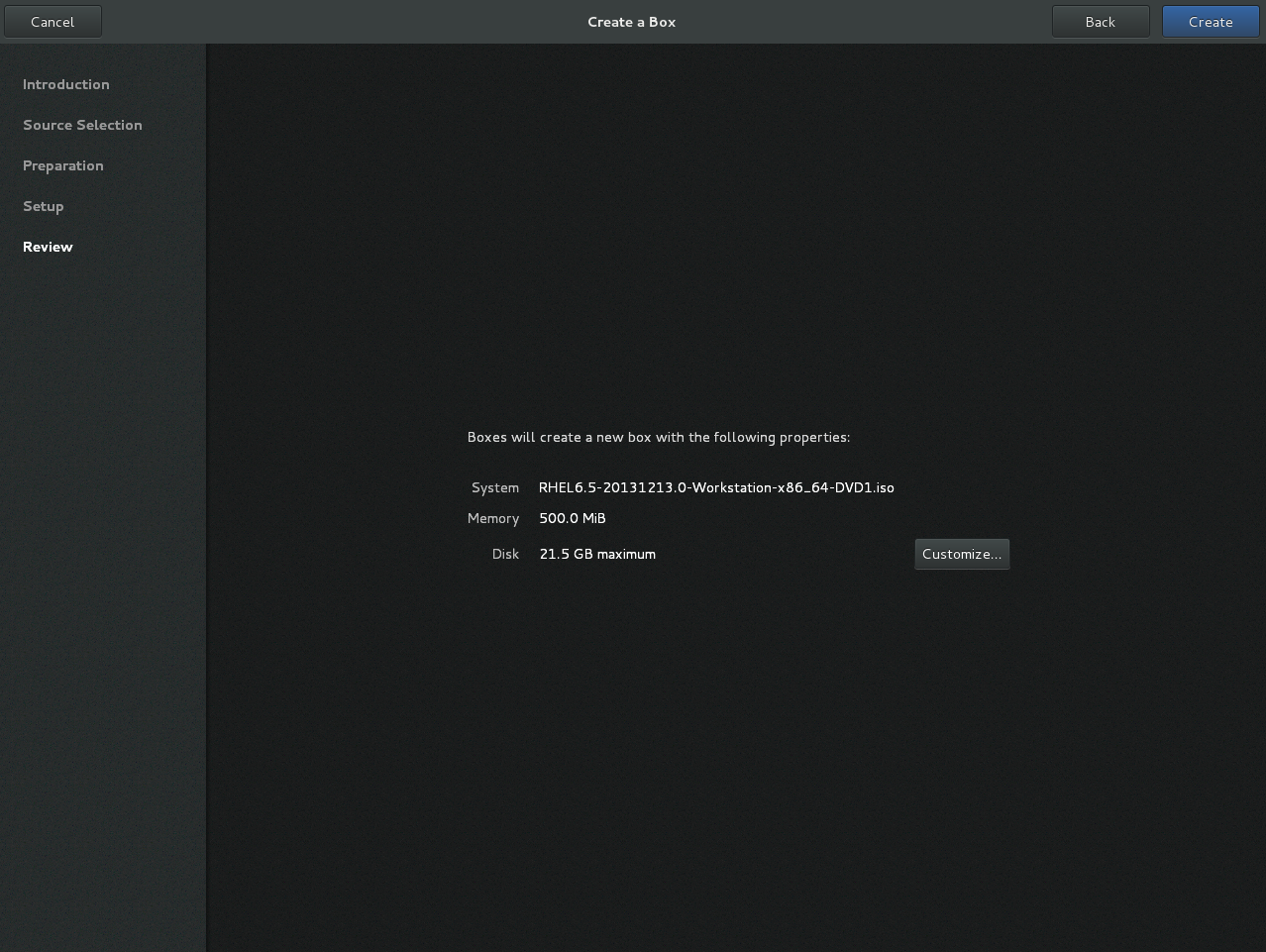

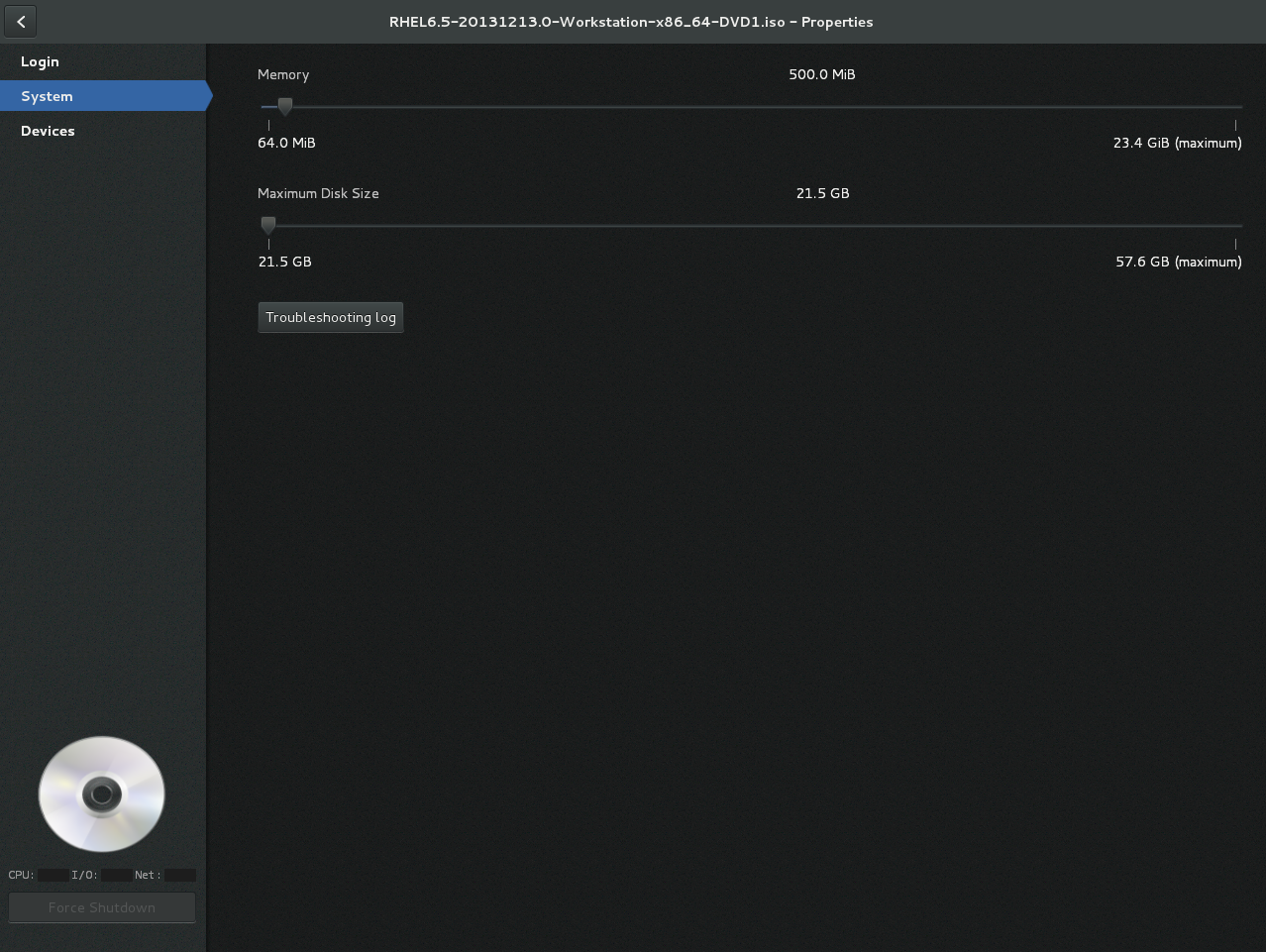

Procedure 3.1. Creating a Red Hat Enterprise Linux 7 guest virtual machine with virt-manager using local installation media

Optional: Preparation

Prepare the storage environment for the virtual machine. For more information on preparing storage, see Chapter 13, Managing Storage for Virtual Machines.Important

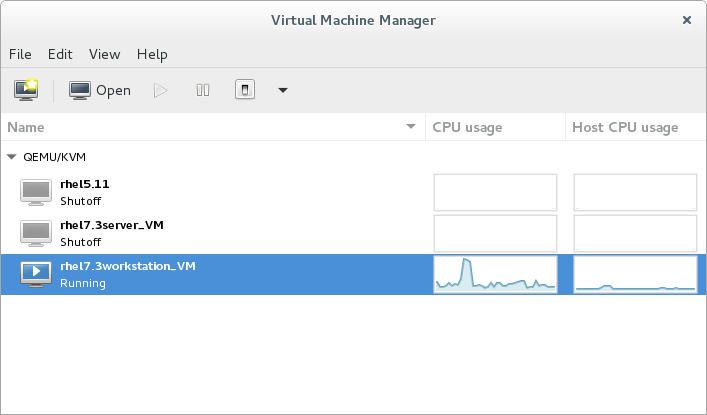

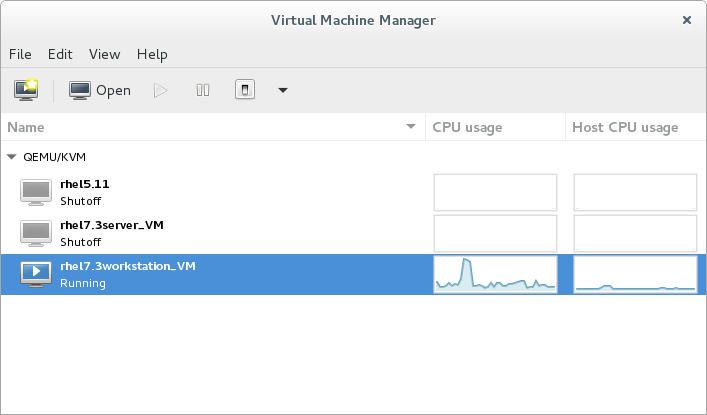

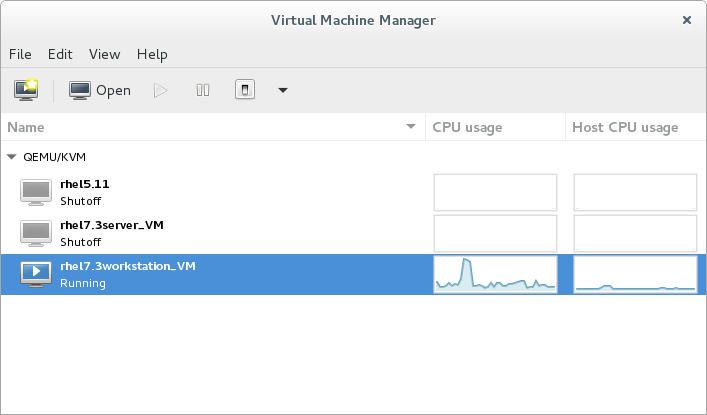

Various storage types may be used for storing guest virtual machines. However, for a virtual machine to be able to use migration features, the virtual machine must be created on networked storage.Red Hat Enterprise Linux 7 requires at least 1 GB of storage space. However, Red Hat recommends at least 5 GB of storage space for a Red Hat Enterprise Linux 7 installation and for the procedures in this guide.Open virt-manager and start the wizard

Open virt-manager by executing thevirt-managercommand as root or opening Applications → System Tools → Virtual Machine Manager.

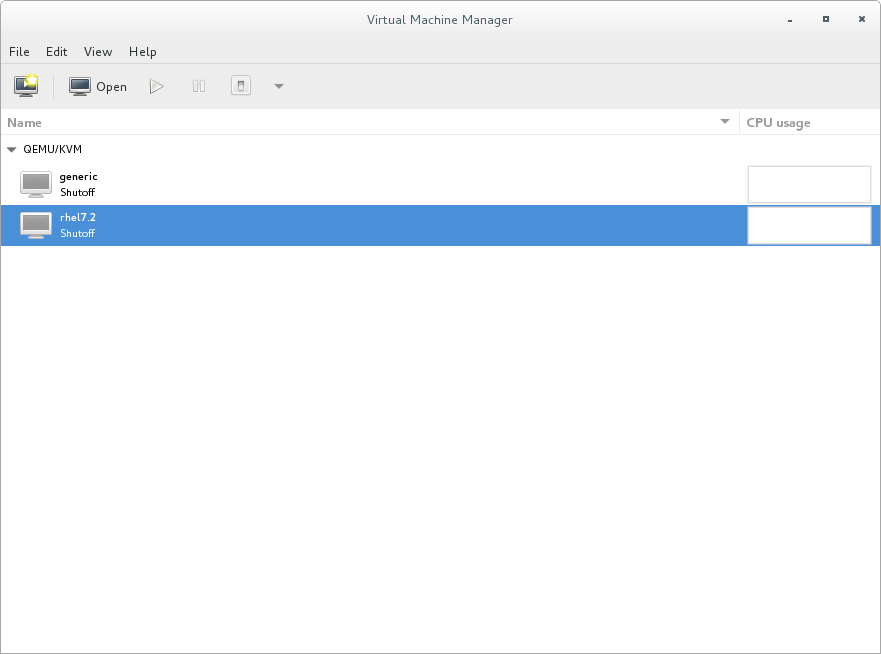

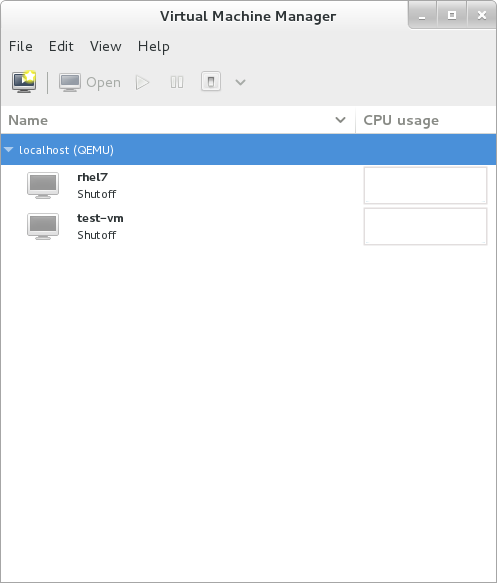

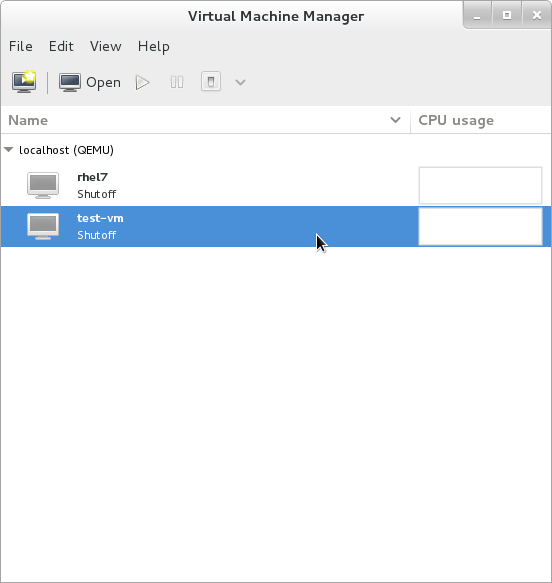

Figure 3.1. The Virtual Machine Manager window

Optionally, open a remote hypervisor by selecting the hypervisor and clicking the Connect button.Click to start the new virtualized guest wizard.

The New VM window opens.

to start the new virtualized guest wizard.

The New VM window opens.Specify installation type

Select the installation type:- Local install media (ISO image or CDROM)

- This method uses an image of an installation disk (for example,

.iso). However, using a host CD-ROM or a DVD-ROM device is not possible. - Network Install (HTTP, FTP, or NFS)

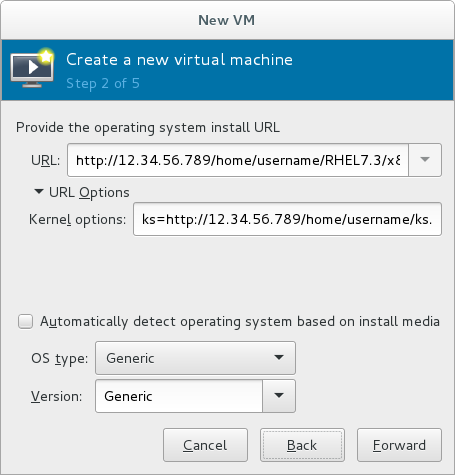

- This method involves the use of a mirrored Red Hat Enterprise Linux or Fedora installation tree to install a guest. The installation tree must be accessible through either HTTP, FTP, or NFS.If you select Network Install, provide the installation URL and also Kernel options, if required.

- Network Boot (PXE)

- This method uses a Preboot eXecution Environment (PXE) server to install the guest virtual machine. Setting up a PXE server is covered in the Red Hat Enterprise Linux 7 Installation Guide. To install using network boot, the guest must have a routable IP address or shared network device.If you select Network Boot, continue to STEP 5. After all steps are completed, a DHCP request is sent and if a valid PXE server is found the guest virtual machine's installation processes will start.

- Import existing disk image

- This method allows you to create a new guest virtual machine and import a disk image (containing a pre-installed, bootable operating system) to it.

Figure 3.2. Virtual machine installation method

Click Forward to continue.Select the installation source

- If you selected Local install media (ISO image or CDROM), specify your intended local installation media.

Figure 3.3. Local ISO image installation

Warning

Even though the option is currently present in the GUI, installing from a physical CD-ROM or DVD device on the host is not possible. Therefore, selecting the Use CDROM or DVD option will cause the VM installation to fail. For details, see the Red Hat Knowledge Base.To install from an ISO image, select Use ISO image and click the Browse... button to open the Locate media volume window.Select the installation image you wish to use, and click Choose Volume.If no images are displayed in the Locate media volume window, click the Browse Local button to browse the host machine for the installation image or DVD drive containing the installation disk. Select the installation image or DVD drive containing the installation disk and click Open; the volume is selected for use and you are returned to the Create a new virtual machine wizard.Important

For ISO image files and guest storage images, the recommended location to use is/var/lib/libvirt/images/. Any other location may require additional configuration by SELinux. See the Red Hat Enterprise Linux Virtualization Security Guide or the Red Hat Enterprise Linux SELinux User's and Administrator's Guide for more details on configuring SELinux. - If you selected

Network Install, input the URL of the installation source and also the required Kernel options, if any. The URL must point to the root directory of an installation tree, which must be accessible through either HTTP, FTP, or NFS.To perform a kickstart installation, specify the URL of a kickstart file in Kernel options, starting withks=

Figure 3.4. Network kickstart installation

Note

For a complete list of kernel options, see the Red Hat Enterprise Linux 7 Installation Guide.

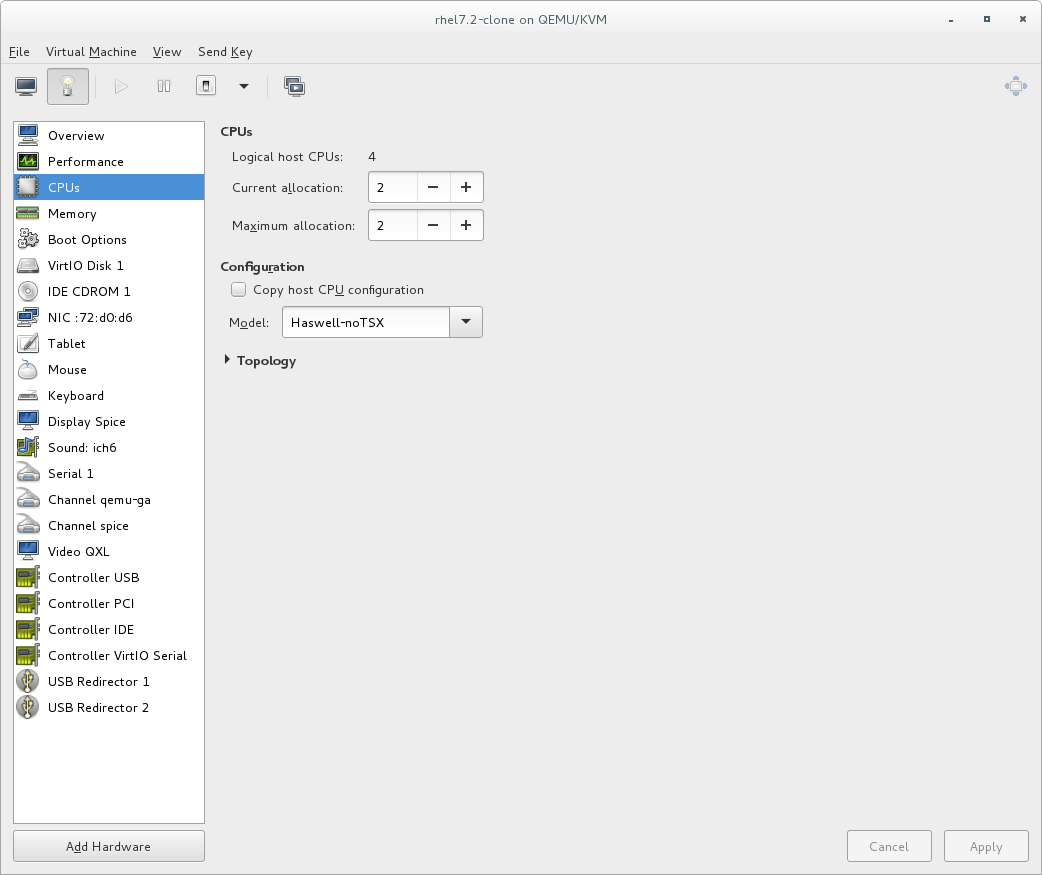

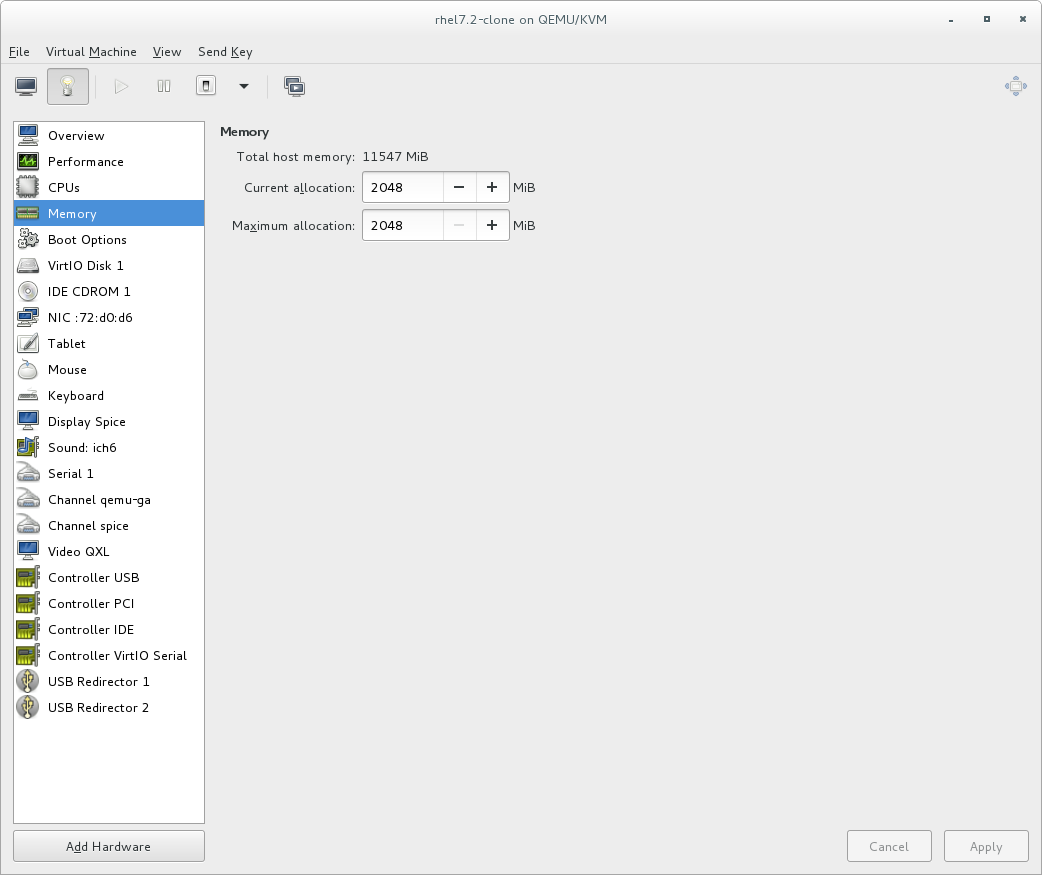

Next, configure the OS type and Version of the installation. Ensure that you select the appropriate operating system type for your virtual machine. This can be specified manually or by selecting the Automatically detect operating system based on install media check box.Click Forward to continue.Configure memory (RAM) and virtual CPUs

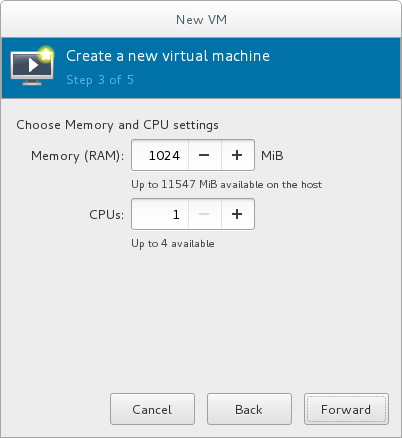

Specify the number of CPUs and amount of memory (RAM) to allocate to the virtual machine. The wizard shows the number of CPUs and amount of memory you can allocate; these values affect the host's and guest's performance.Virtual machines require sufficient physical memory (RAM) to run efficiently and effectively. Red Hat supports a minimum of 512MB of RAM for a virtual machine. Red Hat recommends at least 1024MB of RAM for each logical core.Assign sufficient virtual CPUs for the virtual machine. If the virtual machine runs a multi-threaded application, assign the number of virtual CPUs the guest virtual machine will require to run efficiently.You cannot assign more virtual CPUs than there are physical processors (or hyper-threads) available on the host system. The number of virtual CPUs available is noted in the Up to X available field.

Figure 3.5. Configuring Memory and CPU

After you have configured the memory and CPU settings, click Forward to continue.Note

Memory and virtual CPUs can be overcommitted. For more information on overcommitting, see Chapter 7, Overcommitting with KVM.Configure storage

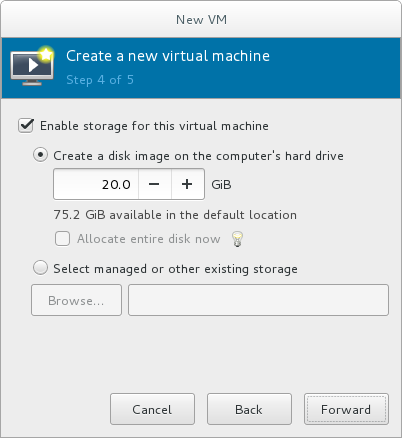

Enable and assign sufficient space for your virtual machine and any applications it requires. Assign at least 5 GB for a desktop installation or at least 1 GB for a minimal installation.

Figure 3.6. Configuring virtual storage

Note

Live and offline migrations require virtual machines to be installed on shared network storage. For information on setting up shared storage for virtual machines, see Section 15.4, “Shared Storage Example: NFS for a Simple Migration”.With the default local storage

Select the Create a disk image on the computer's hard drive radio button to create a file-based image in the default storage pool, the/var/lib/libvirt/images/directory. Enter the size of the disk image to be created. If the Allocate entire disk now check box is selected, a disk image of the size specified will be created immediately. If not, the disk image will grow as it becomes filled.Note

Although the storage pool is a virtual container it is limited by two factors: maximum size allowed to it by qemu-kvm and the size of the disk on the host physical machine. Storage pools may not exceed the size of the disk on the host physical machine. The maximum sizes are as follows:- virtio-blk = 2^63 bytes or 8 Exabytes(using raw files or disk)

- Ext4 = ~ 16 TB (using 4 KB block size)

- XFS = ~8 Exabytes

- qcow2 and host file systems keep their own metadata and scalability should be evaluated/tuned when trying very large image sizes. Using raw disks means fewer layers that could affect scalability or max size.

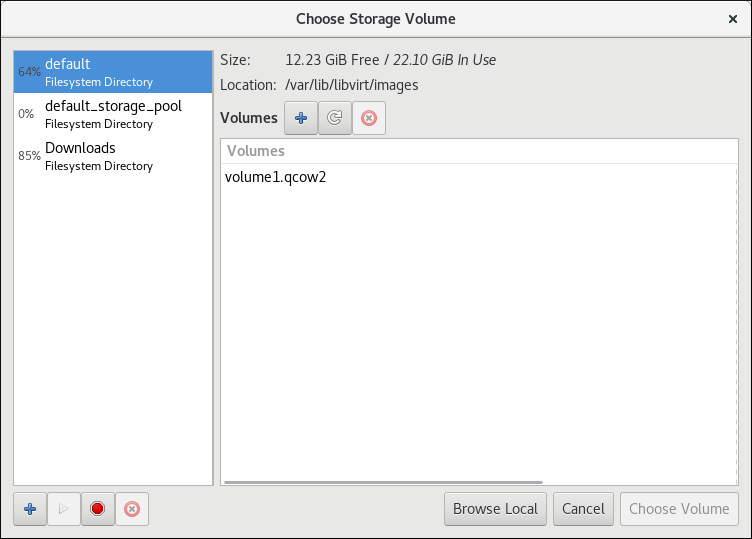

Click Forward to create a disk image on the local hard drive. Alternatively, select Select managed or other existing storage, then select Browse to configure managed storage.With a storage pool

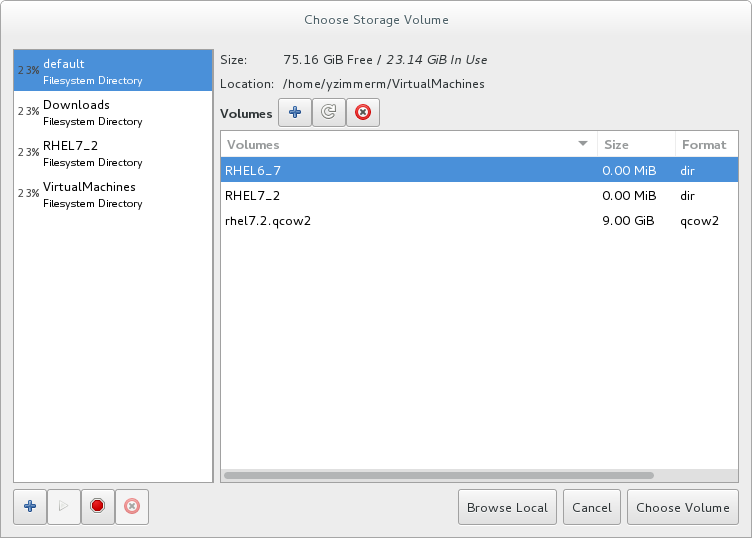

If you select Select managed or other existing storage to use a storage pool, click Browse to open the Locate or create storage volume window.

Figure 3.7. The Choose Storage Volume window

- Select a storage pool from the Storage Pools list.

- Optional: Click

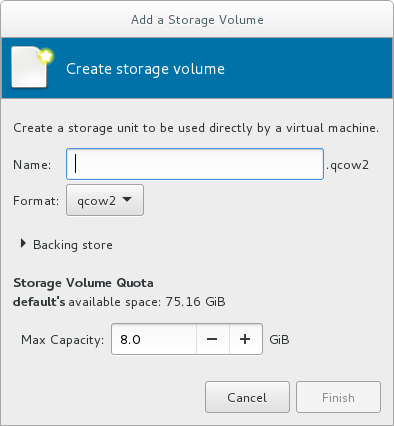

to create a new storage volume. The Add a Storage Volume screen will appear. Enter the name of the new storage volume.

Choose a format option from the Format drop-down menu. Format options include raw, qcow2, and qed. Adjust other fields as needed. Note that the qcow2 version used here is version 3. To change the qcow version see Section 23.19.2, “Setting Target Elements”

to create a new storage volume. The Add a Storage Volume screen will appear. Enter the name of the new storage volume.

Choose a format option from the Format drop-down menu. Format options include raw, qcow2, and qed. Adjust other fields as needed. Note that the qcow2 version used here is version 3. To change the qcow version see Section 23.19.2, “Setting Target Elements”

Figure 3.8. The Add a Storage Volume window

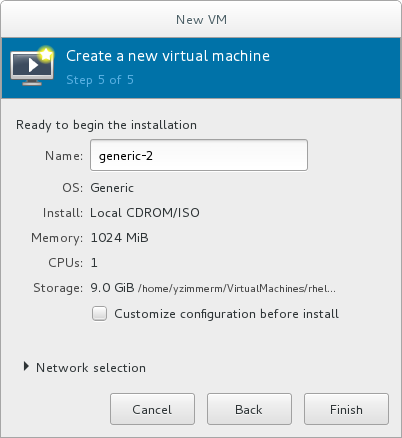

Select the new volume and click Choose volume. Next, click Finish to return to the New VM wizard. Click Forward to continue.Name and final configuration

Name the virtual machine. Virtual machine names can contain letters, numbers and the following characters: underscores (_), periods (.), and hyphens (-). Virtual machine names must be unique for migration and cannot consist only of numbers.By default, the virtual machine will be created with network address translation (NAT) for a network called 'default' . To change the network selection, clickNetwork selectionand select a host device and source mode.Verify the settings of the virtual machine and click Finish when you are satisfied; this will create the virtual machine with specified networking settings, virtualization type, and architecture.

Figure 3.9. Verifying the configuration

Or, to further configure the virtual machine's hardware, check the Customize configuration before install check box to change the guest's storage or network devices, to use the paravirtualized (virtio) drivers or to add additional devices. This opens another wizard that will allow you to add, remove, and configure the virtual machine's hardware settings.Note

Red Hat Enterprise Linux 4 or Red Hat Enterprise Linux 5 guest virtual machines cannot be installed using graphical mode. As such, you must select "Cirrus" instead of "QXL" as a video card.After configuring the virtual machine's hardware, click Apply.virt-managerwill then create the virtual machine with your specified hardware settings.Warning

When installing a Red Hat Enterprise Linux 7 guest virtual machine from a remote medium but without a configured TCP/IP connection, the installation fails. However, when installing a guest virtual machine of Red Hat Enterprise Linux 5 or 6 in such circumstances, the installer opens a "Configure TCP/IP" interface.For further information about this difference, see the related knowledgebase article.Click Finish to continue into the Red Hat Enterprise Linux installation sequence. For more information on installing Red Hat Enterprise Linux 7, see the Red Hat Enterprise Linux 7 Installation Guide.

3.4. Comparison of virt-install and virt-manager Installation options

virt-install and virt-manager installation options for when installing a virtual machine.

virt-install options are not required. The minimum requirements are --name, --memory, guest storage (--disk, --filesystem or --disk none), and an install method (--location, --cdrom, --pxe, --import, or boot). These options are further specified with arguments; to see a complete list of command options and related arguments, enter the following command:

# virt-install --helpTable 3.1. virt-install and virt-manager configuration comparison for guest installations

| Configuration on virtual machine | virt-install option | virt-manager installation wizard label and step number |

|---|---|---|

| Virtual machine name | --name, -n | Name (step 5) |

| RAM to allocate (MiB) | --ram, -r | Memory (RAM) (step 3) |

| Storage - specify storage media | --disk | Enable storage for this virtual machine → Create a disk image on the computer's hard drive, or Select managed or other existing storage (step 4) |

| Storage - export a host directory to the guest | --filesystem | Enable storage for this virtual machine → Select managed or other existing storage (step 4) |

| Storage - configure no local disk storage on the guest | --nodisks | Deselect the Enable storage for this virtual machine check box (step 4) |

| Installation media location (local install) | --file | Local install media → Locate your install media (steps 1-2) |

| Installation using a distribution tree (network install) | --location | Network install → URL (steps 1-2) |

| Install guest with PXE | --pxe | Network boot (step 1) |

| Number of vCPUs | --vcpus | CPUs (step 3) |

| Host network | --network | Advanced options drop-down menu (step 5) |

| Operating system variant/version | --os-variant | Version (step 2) |

| Graphical display method | --graphics, --nographics | * virt-manager provides GUI installation only |

Chapter 4. Cloning Virtual Machines

- Clones are instances of a single virtual machine. Clones can be used to set up a network of identical virtual machines, and they can also be distributed to other destinations.

- Templates are instances of a virtual machine that are designed to be used as a source for cloning. You can create multiple clones from a template and make minor modifications to each clone. This is useful in seeing the effects of these changes on the system.

- Platform level information and configurations include anything assigned to the virtual machine by the virtualization solution. Examples include the number of Network Interface Cards (NICs) and their MAC addresses.

- Guest operating system level information and configurations include anything configured within the virtual machine. Examples include SSH keys.

- Application level information and configurations include anything configured by an application installed on the virtual machine. Examples include activation codes and registration information.

Note

This chapter does not include information about removing the application level, because the information and approach is specific to each application.

Note

4.1. Preparing Virtual Machines for Cloning

Procedure 4.1. Preparing a virtual machine for cloning

Setup the virtual machine

- Build the virtual machine that is to be used for the clone or template.

- Install any software needed on the clone.

- Configure any non-unique settings for the operating system.

- Configure any non-unique application settings.

Remove the network configuration

- Remove any persistent udev rules using the following command:

# rm -f /etc/udev/rules.d/70-persistent-net.rules

Note

If udev rules are not removed, the name of the first NIC may be eth1 instead of eth0. - Remove unique network details from ifcfg scripts by making the following edits to

/etc/sysconfig/network-scripts/ifcfg-eth[x]:- Remove the HWADDR and Static lines

Note

If the HWADDR does not match the new guest's MAC address, the ifcfg will be ignored. Therefore, it is important to remove the HWADDR from the file.DEVICE=eth[x] BOOTPROTO=none ONBOOT=yes #NETWORK=10.0.1.0 <- REMOVE #NETMASK=255.255.255.0 <- REMOVE #IPADDR=10.0.1.20 <- REMOVE #HWADDR=xx:xx:xx:xx:xx <- REMOVE #USERCTL=no <- REMOVE # Remove any other *unique* or non-desired settings, such as UUID.

- Ensure that a DHCP configuration remains that does not include a HWADDR or any unique information.

DEVICE=eth[x] BOOTPROTO=dhcp ONBOOT=yes

- Ensure that the file includes the following lines:

DEVICE=eth[x] ONBOOT=yes

- If the following files exist, ensure that they contain the same content:

/etc/sysconfig/networking/devices/ifcfg-eth[x]/etc/sysconfig/networking/profiles/default/ifcfg-eth[x]

Note

If NetworkManager or any special settings were used with the virtual machine, ensure that any additional unique information is removed from the ifcfg scripts.

Remove registration details

- Remove registration details using one of the following:

- For Red Hat Network (RHN) registered guest virtual machines, use the following command:

#

rm /etc/sysconfig/rhn/systemid - For Red Hat Subscription Manager (RHSM) registered guest virtual machines:

- If the original virtual machine will not be used, use the following commands:

#

subscription-manager unsubscribe --all#subscription-manager unregister#subscription-manager clean - If the original virtual machine will be used, run only the following command:

#

subscription-manager cleanThe original RHSM profile remains in the Portal. To reactivate your RHSM registration on the virtual machine after it is cloned, do the following:- Obtain your customer identity code:

#

subscription-manager identitysubscription-manager identity: 71rd64fx-6216-4409-bf3a-e4b7c7bd8ac9 - Register the virtual machine using the obtained ID code:

#

subscription-manager register --consumerid=71rd64fx-6216-4409-bf3a-e4b7c7bd8ac9

Removing other unique details

- Remove any sshd public/private key pairs using the following command:

#

rm -rf /etc/ssh/ssh_host_*Note

Removing ssh keys prevents problems with ssh clients not trusting these hosts. - Remove any other application-specific identifiers or configurations that may cause conflicts if running on multiple machines.

Configure the virtual machine to run configuration wizards on the next boot

- Configure the virtual machine to run the relevant configuration wizards the next time it is booted by doing one of the following:

- For Red Hat Enterprise Linux 6 and below, create an empty file on the root file system called .unconfigured using the following command:

#

touch /.unconfigured - For Red Hat Enterprise Linux 7, enable the first boot and initial-setup wizards by running the following commands:

#

sed -ie 's/RUN_FIRSTBOOT=NO/RUN_FIRSTBOOT=YES/' /etc/sysconfig/firstboot#systemctl enable firstboot-graphical#systemctl enable initial-setup-graphical

Note

The wizards that run on the next boot depend on the configurations that have been removed from the virtual machine. In addition, on the first boot of the clone, it is recommended that you change the hostname.

4.2. Cloning a Virtual Machine

virt-clone or virt-manager.

4.2.1. Cloning Guests with virt-clone

virt-clone to clone virtual machines from the command line.

virt-clone to complete successfully.

virt-clone command provides a number of options that can be passed on the command line. These include general options, storage configuration options, networking configuration options, and miscellaneous options. Only the --original is required. To see a complete list of options, enter the following command:

# virt-clone --helpvirt-clone man page also documents each command option, important variables, and examples.

Example 4.1. Using virt-clone to clone a guest

# virt-clone --original demo --auto-cloneExample 4.2. Using virt-clone to clone a guest

# virt-clone --connect qemu:///system --original demo --name newdemo --file /var/lib/libvirt/images/newdemo.img --file /var/lib/libvirt/images/newdata.img4.2.2. Cloning Guests with virt-manager

Procedure 4.2. Cloning a Virtual Machine with virt-manager

Open virt-manager

Start virt-manager. Launch the Virtual Machine Manager application from the Applications menu and System Tools submenu. Alternatively, run thevirt-managercommand as root.Select the guest virtual machine you want to clone from the list of guest virtual machines in Virtual Machine Manager.Right-click the guest virtual machine you want to clone and select Clone. The Clone Virtual Machine window opens.

Figure 4.1. Clone Virtual Machine window

Configure the clone

- To change the name of the clone, enter a new name for the clone.

- To change the networking configuration, click Details.Enter a new MAC address for the clone.Click OK.

Figure 4.2. Change MAC Address window

- For each disk in the cloned guest virtual machine, select one of the following options:

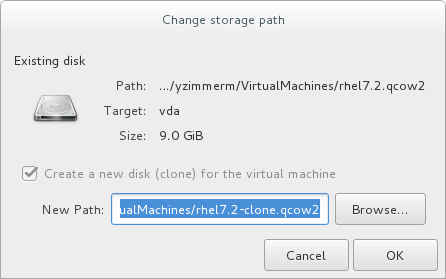

Clone this disk- The disk will be cloned for the cloned guest virtual machineShare disk with guest virtual machine name- The disk will be shared by the guest virtual machine that will be cloned and its cloneDetails- Opens the Change storage path window, which enables selecting a new path for the disk

Figure 4.3. Change

storage pathwindow

Clone the guest virtual machine

Click Clone.

Chapter 5. KVM Paravirtualized (virtio) Drivers

virtio package. The virtio package supports block (storage) devices and network interface controllers.

Note

5.1. Using KVM virtio Drivers for Existing Storage Devices

virtio driver instead of the virtualized IDE driver. The example shown in this section edits libvirt configuration files. Note that the guest virtual machine does not need to be shut down to perform these steps, however the change will not be applied until the guest is completely shut down and rebooted.

Procedure 5.1. Using KVM virtio drivers for existing devices

- Ensure that you have installed the appropriate driver (

viostor), before continuing with this procedure. - Run the

virsh edit guestnamecommand as root to edit the XML configuration file for your device. For example,virsh edit guest1. The configuration files are located in the/etc/libvirt/qemu/directory. - Below is a file-based block device using the virtualized IDE driver. This is a typical entry for a virtual machine not using the virtio drivers.

<disk type='file' device='disk'> ... <source file='/var/lib/libvirt/images/disk1.img'/> <target dev='hda' bus='ide'/> <address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x0'/> </disk>

- Change the entry to use the virtio device by modifying the bus= entry to

virtio. Note that if the disk was previously IDE, it has a target similar tohda,hdb, orhdc. When changing to bus=virtio the target needs to be changed tovda,vdb, orvdcaccordingly.<disk type='file' device='disk'> ... <source file='/var/lib/libvirt/images/disk1.img'/> <target dev='vda' bus='virtio'/> <address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x0'/> </disk>

- Remove the address tag inside the disk tags. This must be done for this procedure to work. Libvirt will regenerate the address tag appropriately the next time the virtual machine is started.

virt-manager, virsh attach-disk or virsh attach-interface can add a new device using the virtio drivers.

5.2. Using KVM virtio Drivers for New Storage Devices

virt-manager.

virsh attach-disk or virsh attach-interface commands can be used to attach devices using the virtio drivers.

Important

Procedure 5.2. Adding a storage device using the virtio storage driver

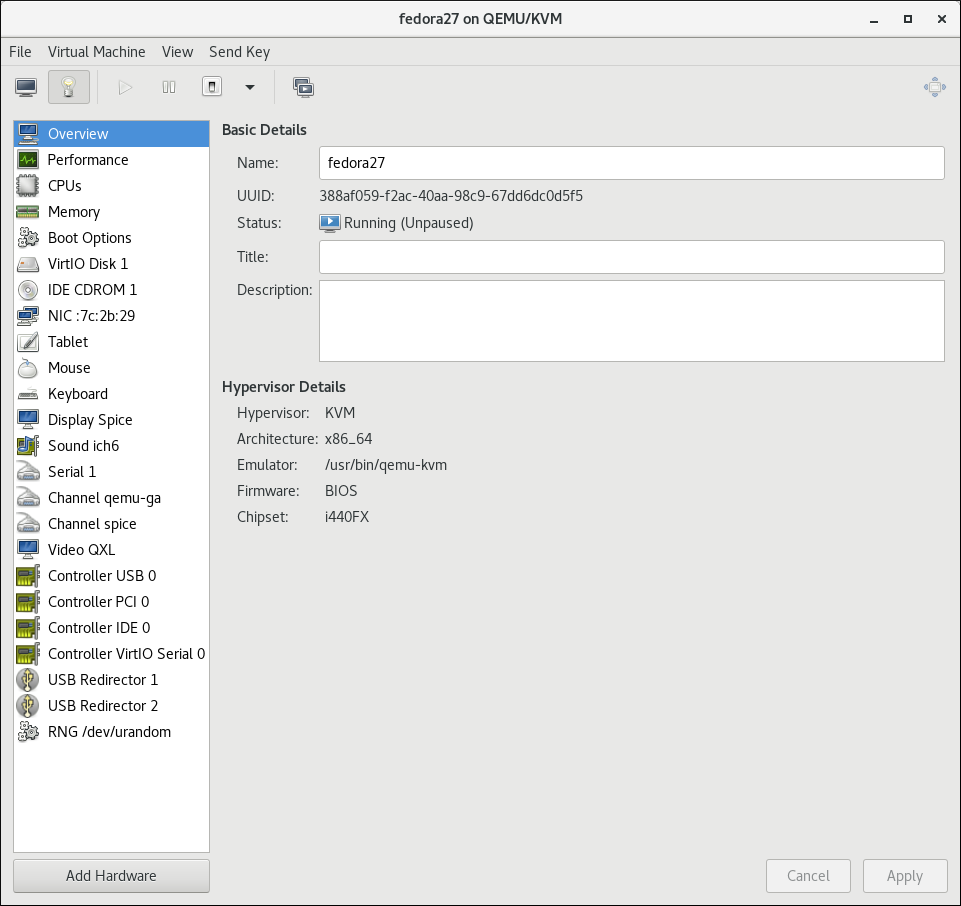

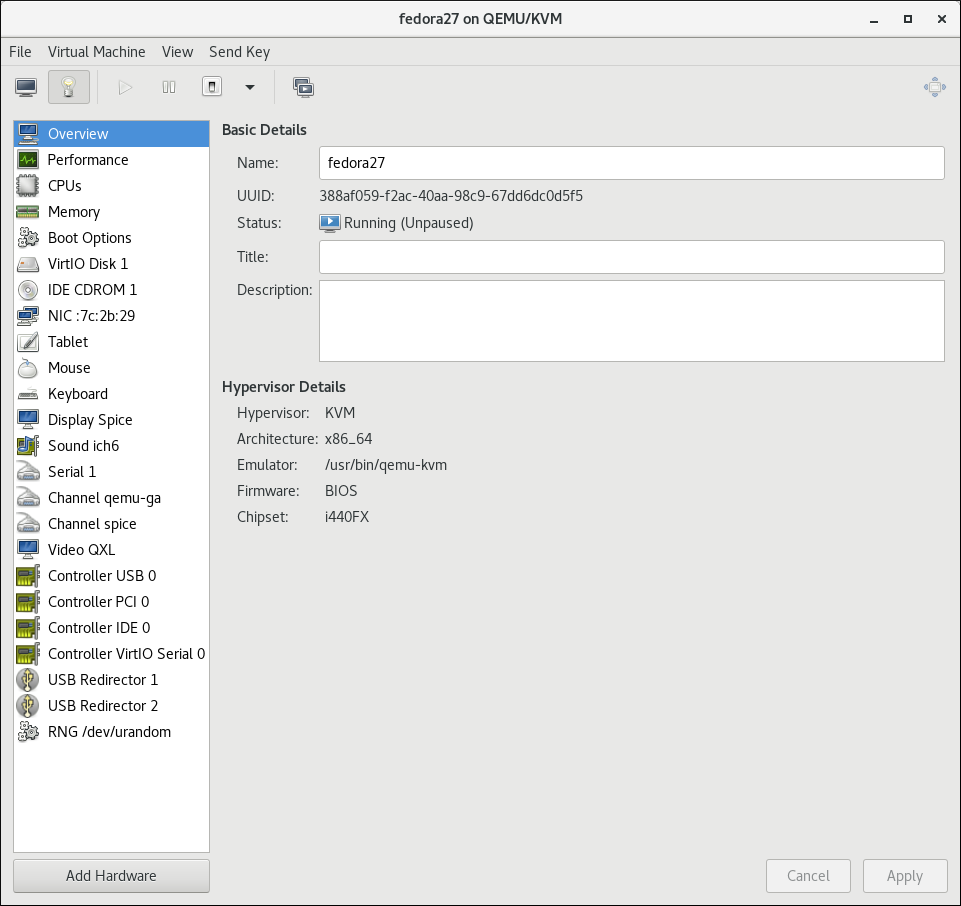

- Open the guest virtual machine by double clicking the name of the guest in

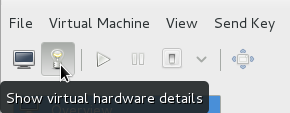

virt-manager. - Open the Show virtual hardware details tab by clicking

.

.

- In the Show virtual hardware details tab, click the Add Hardware button.

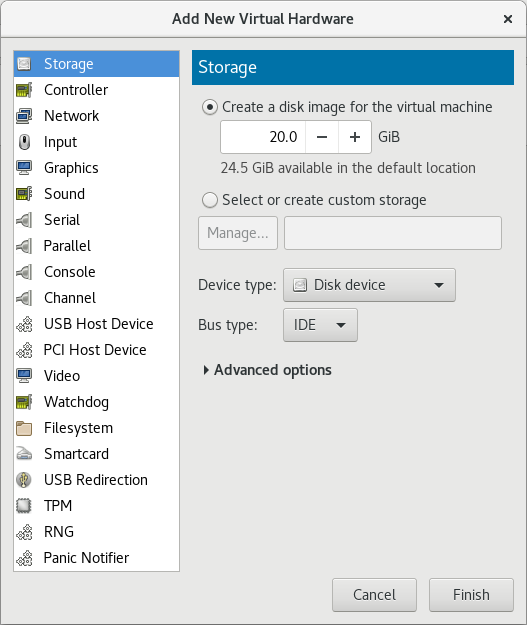

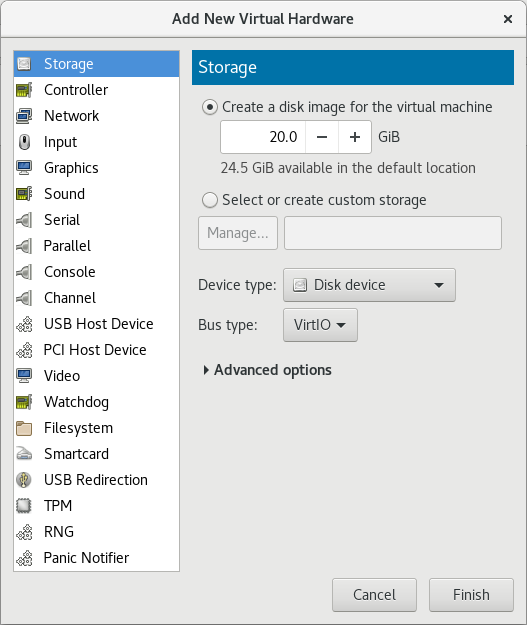

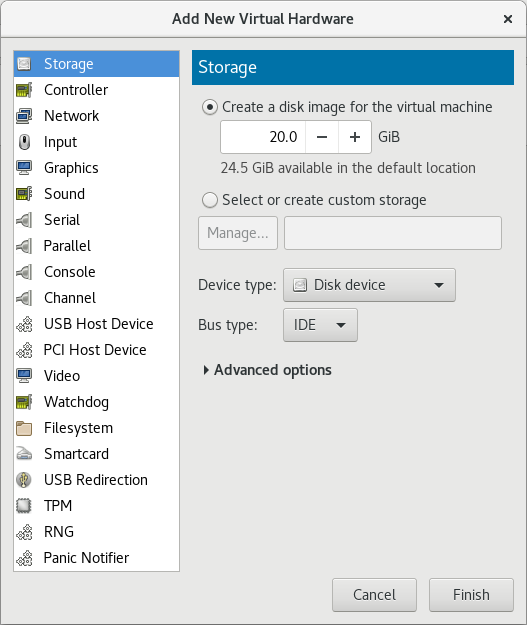

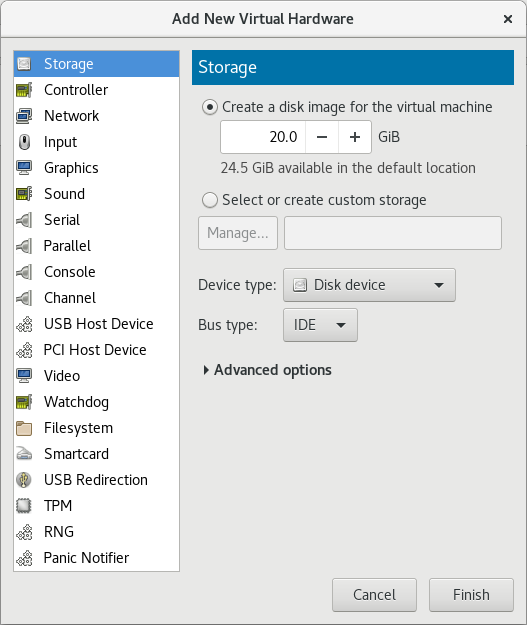

Select hardware type

Select Storage as the Hardware type.

Figure 5.1. The Add new virtual hardware wizard

Select the storage device and driver

Create a new disk image or select a storage pool volume.Set the Device type to Disk device and the Bus type to VirtIO to use the virtio drivers.

Figure 5.2. The Add New Virtual Hardware wizard

Click Finish to complete the procedure.

Procedure 5.3. Adding a network device using the virtio network driver

- Open the guest virtual machine by double clicking the name of the guest in

virt-manager. - Open the Show virtual hardware details tab by clicking

.

.

- In the Show virtual hardware details tab, click the Add Hardware button.

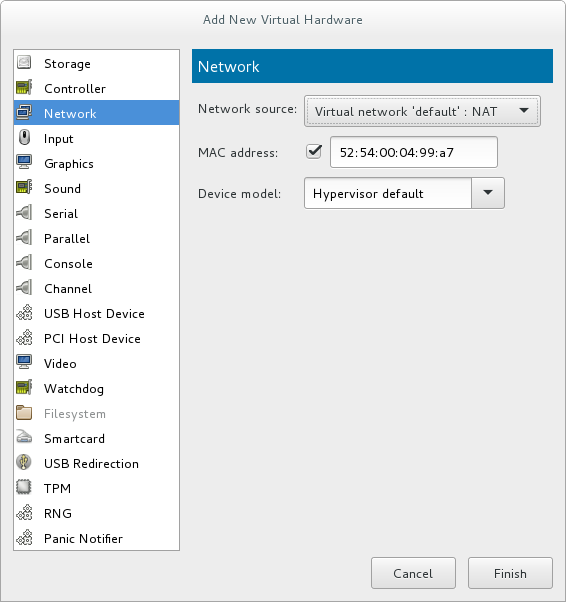

Select hardware type

Select Network as the Hardware type.

Figure 5.3. The Add new virtual hardware wizard

Select the network device and driver

Set the Device model to virtio to use the virtio drivers. Choose the required Host device.

Figure 5.4. The Add new virtual hardware wizard

Click Finish to complete the procedure.

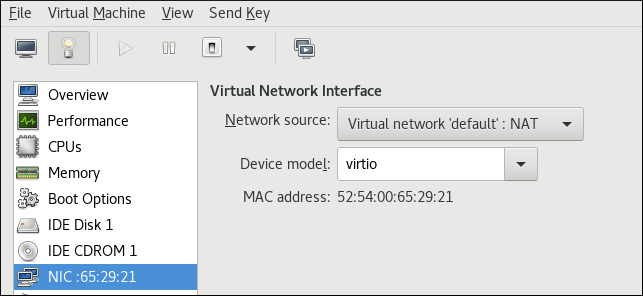

5.3. Using KVM virtio Drivers for Network Interface Devices

- To attach a virtio network device to a guest, use the

virsh attach-interfacecommand with themodel --virtiooption.Alternatively, in the virt-manager interface, navigate to the guest's Virtual hardware details screen and click Add Hardware. In the Add New Virtual Hardware screen, select Network, and change Device model tovirtio:

- To change the type of an existing interface to virtio, use the

virsh editcommand to edit the XML configuration of the intended guest, and change themodel typeattribute tovirtio, for example as follows:<devices> <interface type='network'> <source network='default'/> <target dev='vnet1'/> <model type='virtio'/> <driver name='vhost' txmode='iothread' ioeventfd='on' event_idx='off'/> </interface> </devices> ...Alternatively, in the virt-manager interface, navigate to the guest's Virtual hardware details screen, select theNICitem, and change Device model tovirtio:

Note

virtio-net. For details, see the Red Hat Knowledgebase.

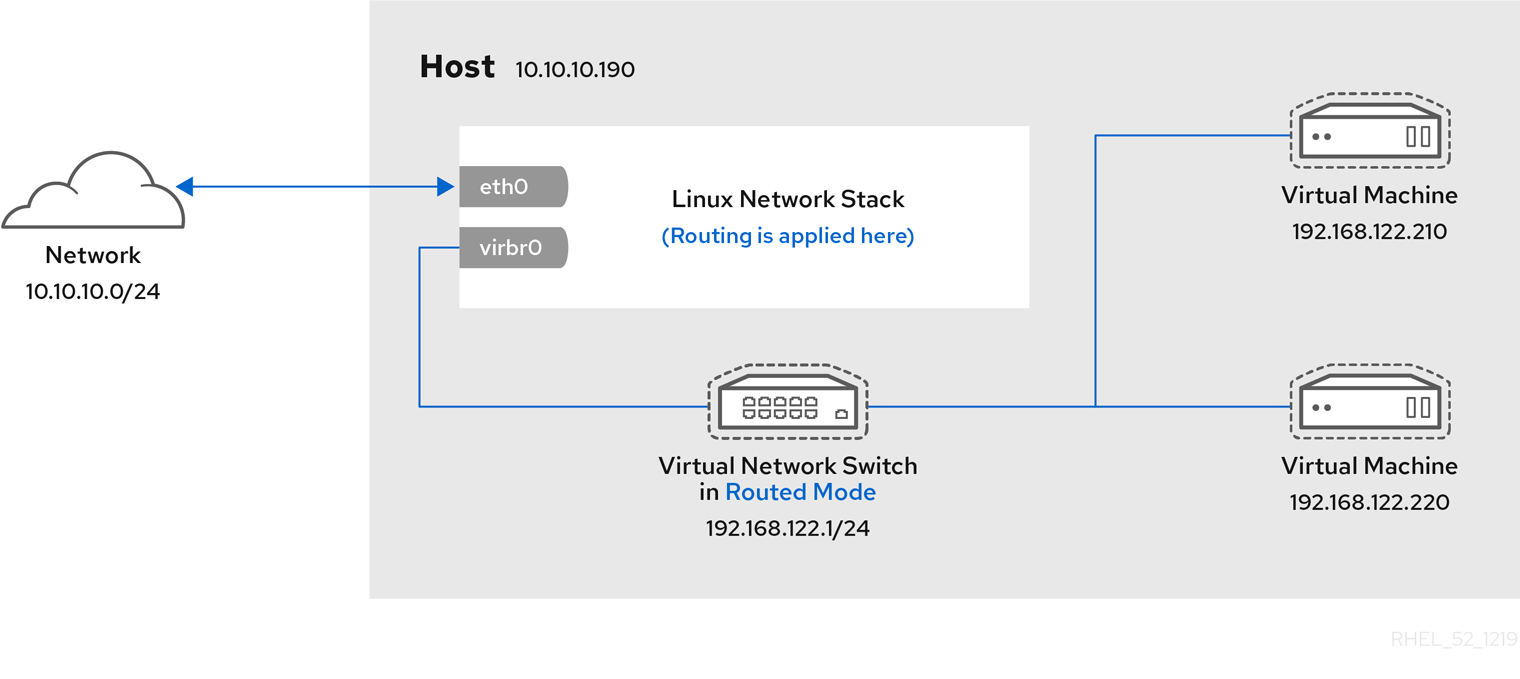

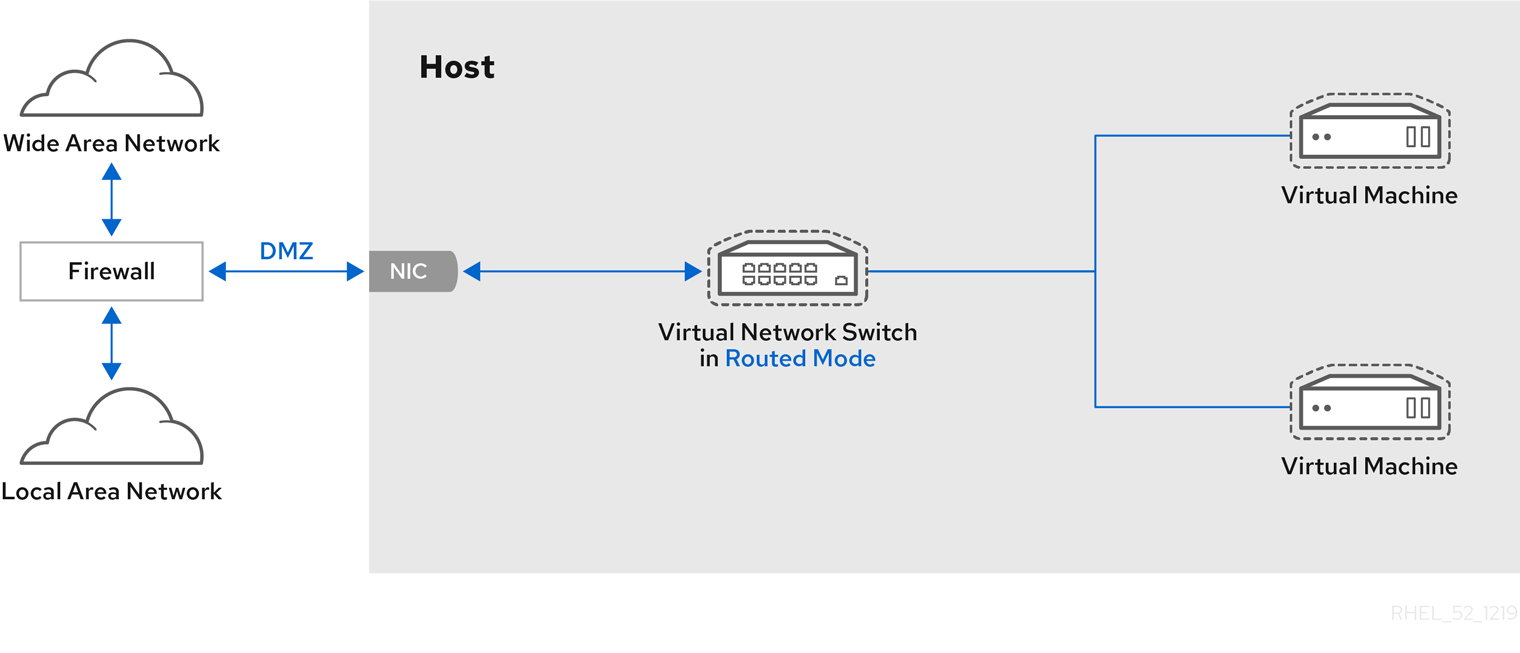

Chapter 6. Network Configuration

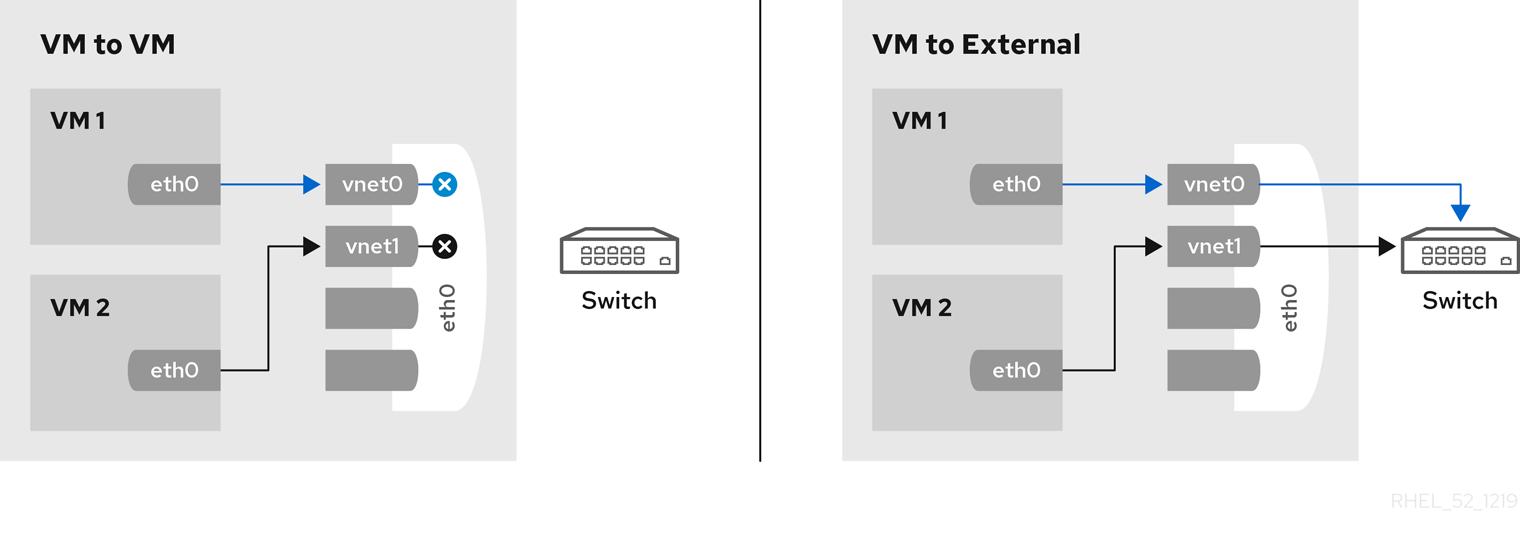

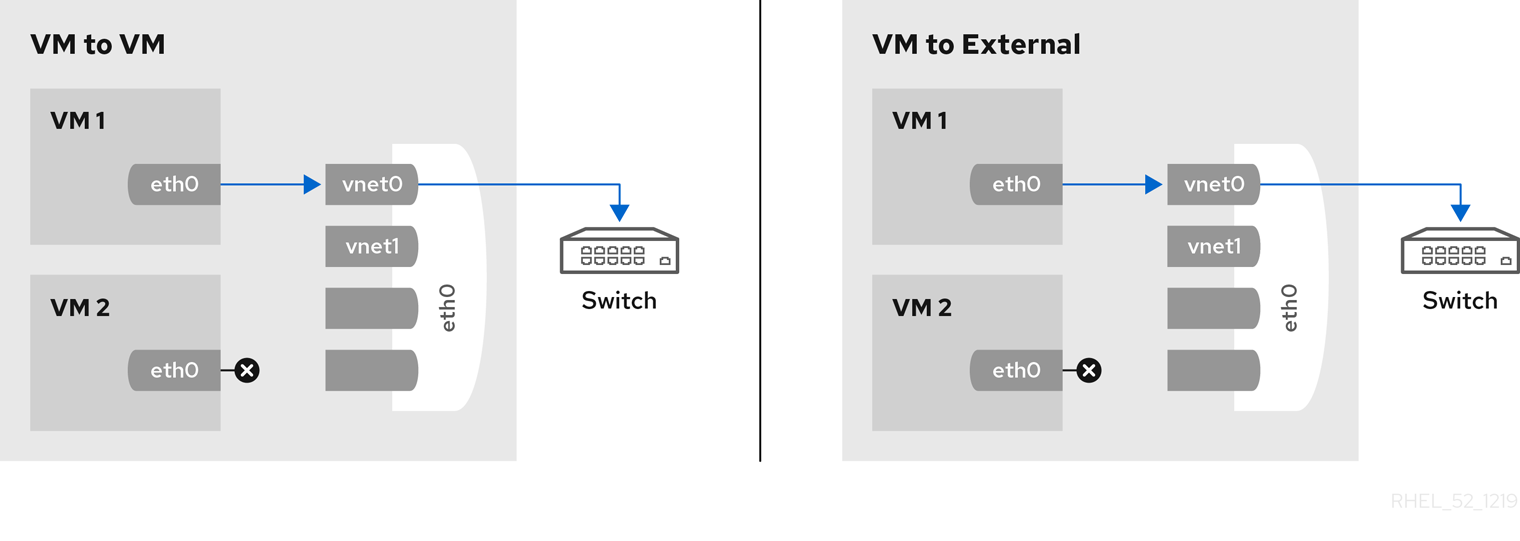

- virtual networks using Network Address Translation (NAT)

- directly allocated physical devices using PCI device assignment

- directly allocated virtual functions using PCIe SR-IOV

- bridged networks

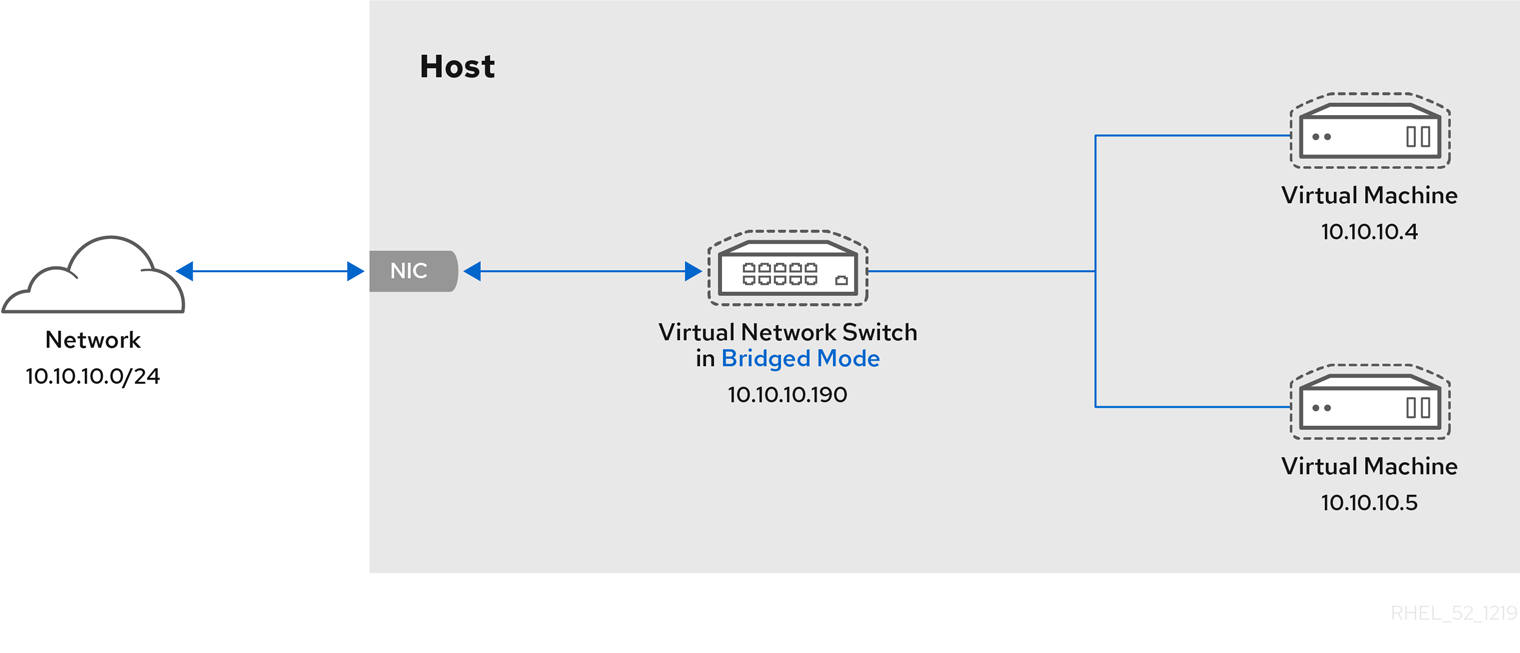

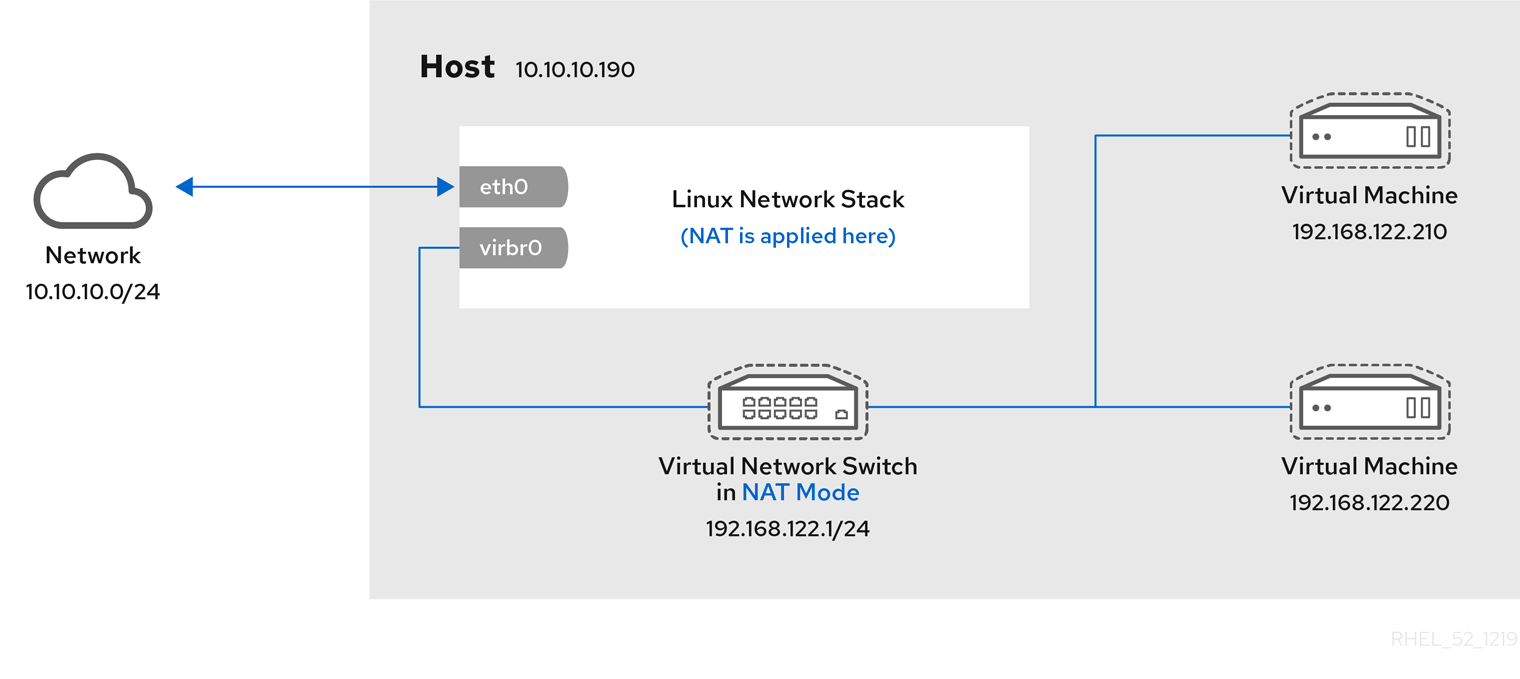

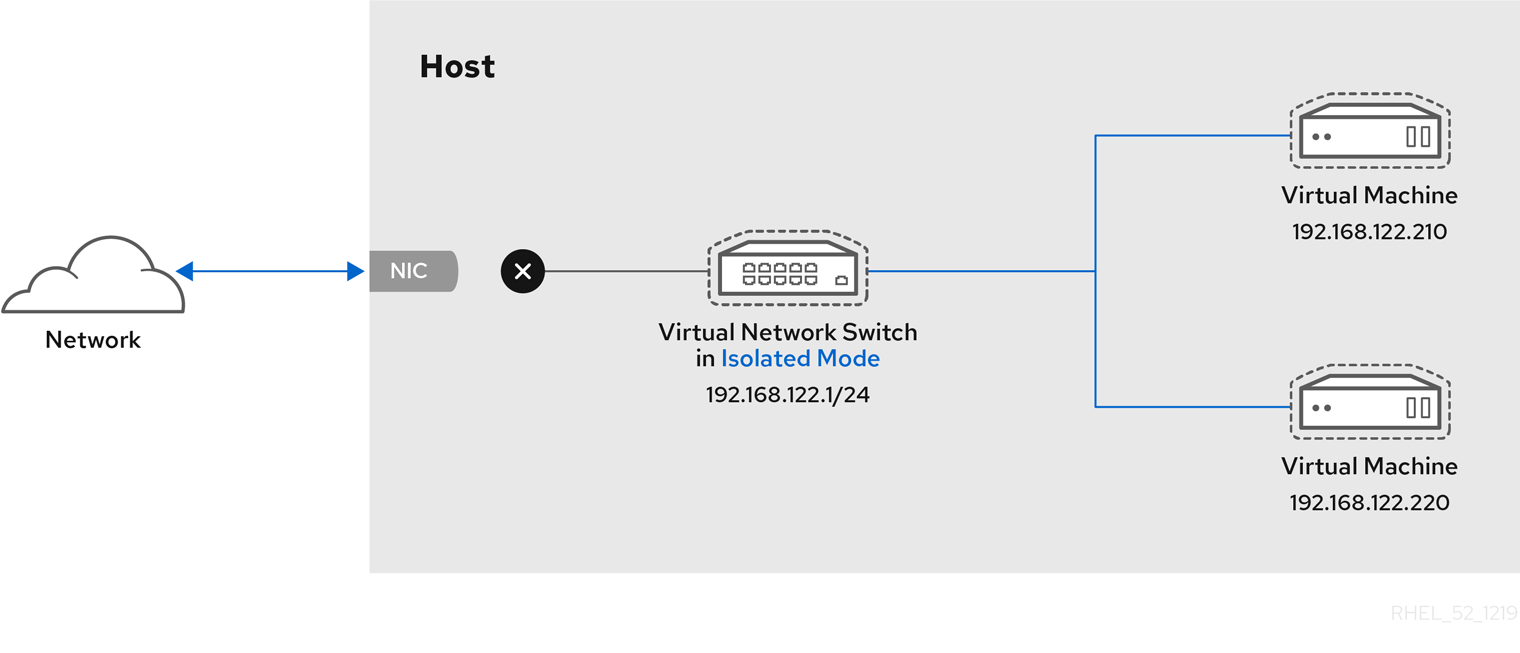

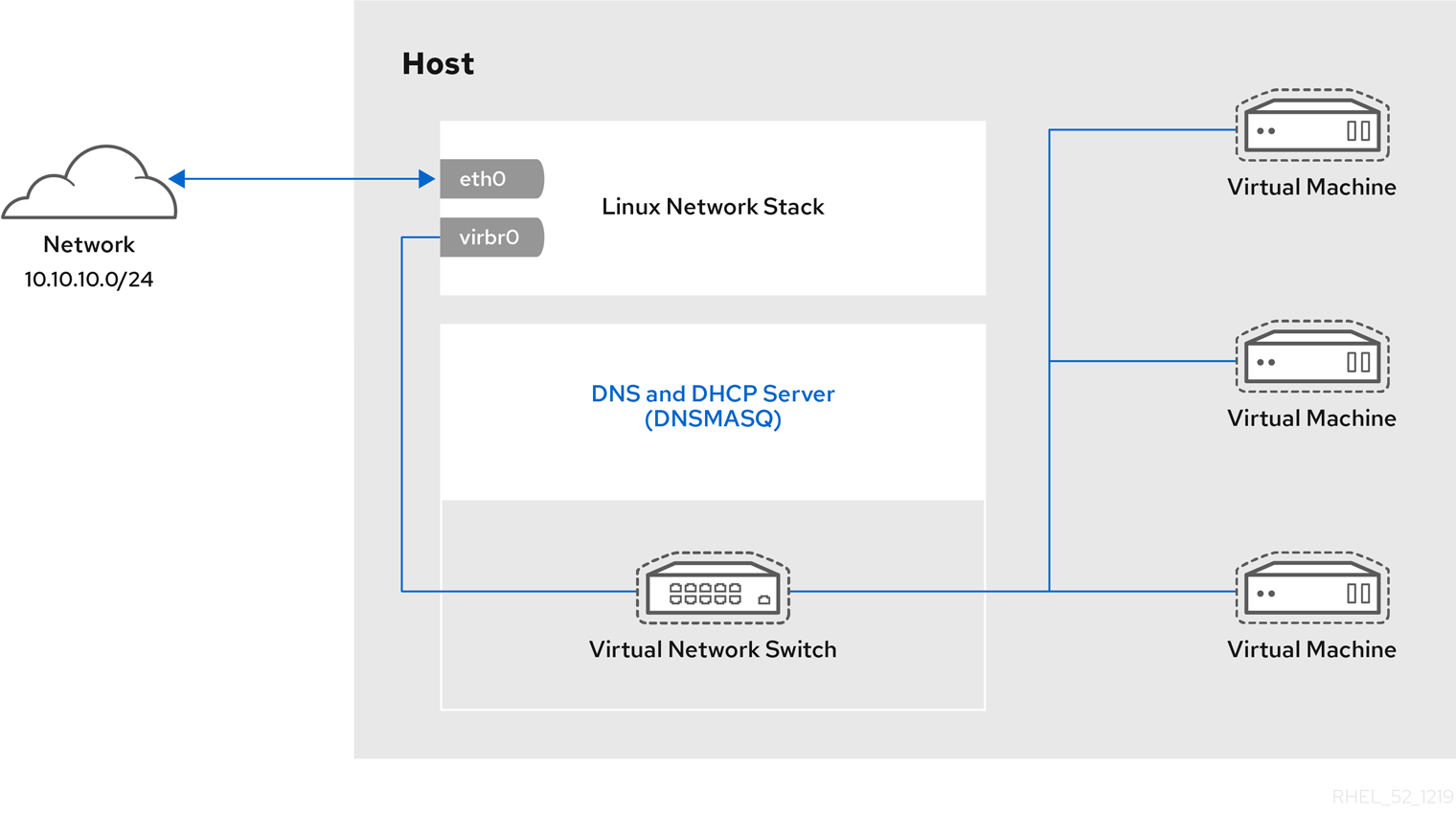

6.1. Network Address Translation (NAT) with libvirt

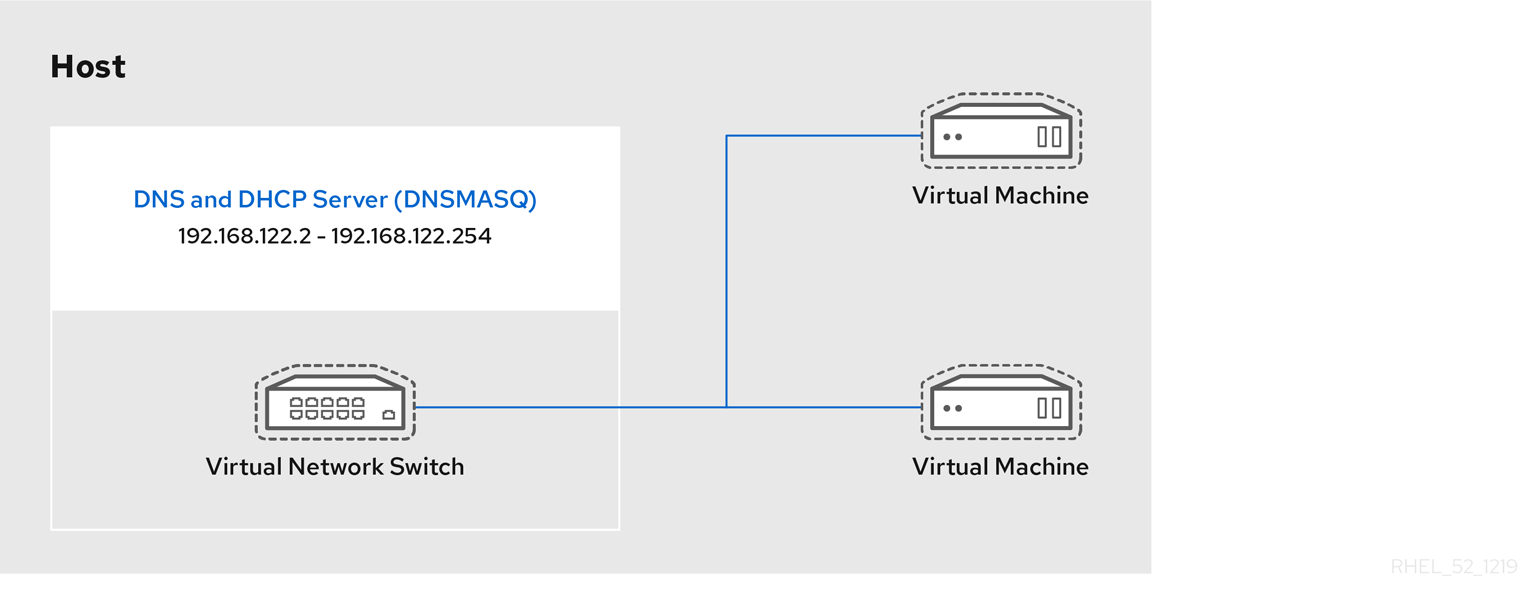

Every standard libvirt installation provides NAT-based connectivity to virtual machines as the default virtual network. Verify that it is available with the virsh net-list --all command.

# virsh net-list --all

Name State Autostart

-----------------------------------------

default active yes

# ll /etc/libvirt/qemu/

total 12

drwx------. 3 root root 4096 Nov 7 23:02 networks

-rw-------. 1 root root 2205 Nov 20 01:20 r6.4.xml

-rw-------. 1 root root 2208 Nov 8 03:19 r6.xml

/etc/libvirt/qemu/networks/default.xml

# virsh net-autostart default

Network default marked as autostarted# virsh net-start default

Network default startedlibvirt default network is running, you will see an isolated bridge device. This device does not have any physical interfaces added. The new device uses NAT and IP forwarding to connect to the physical network. Do not add new interfaces.

# brctl show

bridge name bridge id STP enabled interfaces

virbr0 8000.000000000000 yeslibvirt adds iptables rules which allow traffic to and from guest virtual machines attached to the virbr0 device in the INPUT, FORWARD, OUTPUT and POSTROUTING chains. libvirt then attempts to enable the ip_forward parameter. Some other applications may disable ip_forward, so the best option is to add the following to /etc/sysctl.conf.

net.ipv4.ip_forward = 1

Once the host configuration is complete, a guest virtual machine can be connected to the virtual network based on its name. To connect a guest to the 'default' virtual network, the following can be used in the XML configuration file (such as /etc/libvirtd/qemu/myguest.xml) for the guest:

<interface type='network'> <source network='default'/> </interface>

Note

<interface type='network'> <source network='default'/> <mac address='00:16:3e:1a:b3:4a'/> </interface>

6.2. Disabling vhost-net

vhost-net module is a kernel-level back end for virtio networking that reduces virtualization overhead by moving virtio packet processing tasks out of user space (the QEMU process) and into the kernel (the vhost-net driver). vhost-net is only available for virtio network interfaces. If the vhost-net kernel module is loaded, it is enabled by default for all virtio interfaces, but can be disabled in the interface configuration if a particular workload experiences a degradation in performance when vhost-net is in use.

vhost-net causes the UDP socket's receive buffer to overflow more quickly, which results in greater packet loss. It is therefore better to disable vhost-net in this situation to slow the traffic, and improve overall performance.

vhost-net, edit the <interface> sub-element in the guest virtual machine's XML configuration file and define the network as follows:

<interface type="network"> ... <model type="virtio"/> <driver name="qemu"/> ... </interface>

qemu forces packet processing into QEMU user space, effectively disabling vhost-net for that interface.

6.3. Enabling vhost-net zero-copy

vhost-net.conf to /etc/modprobe.d with the following content:

options vhost_net experimental_zcopytx=1

modprobe -r vhost_net

modprobe vhost_net experimental_zcopytx=0

cat /sys/module/vhost_net/parameters/experimental_zcopytx. It should show:

$ cat /sys/module/vhost_net/parameters/experimental_zcopytx

0

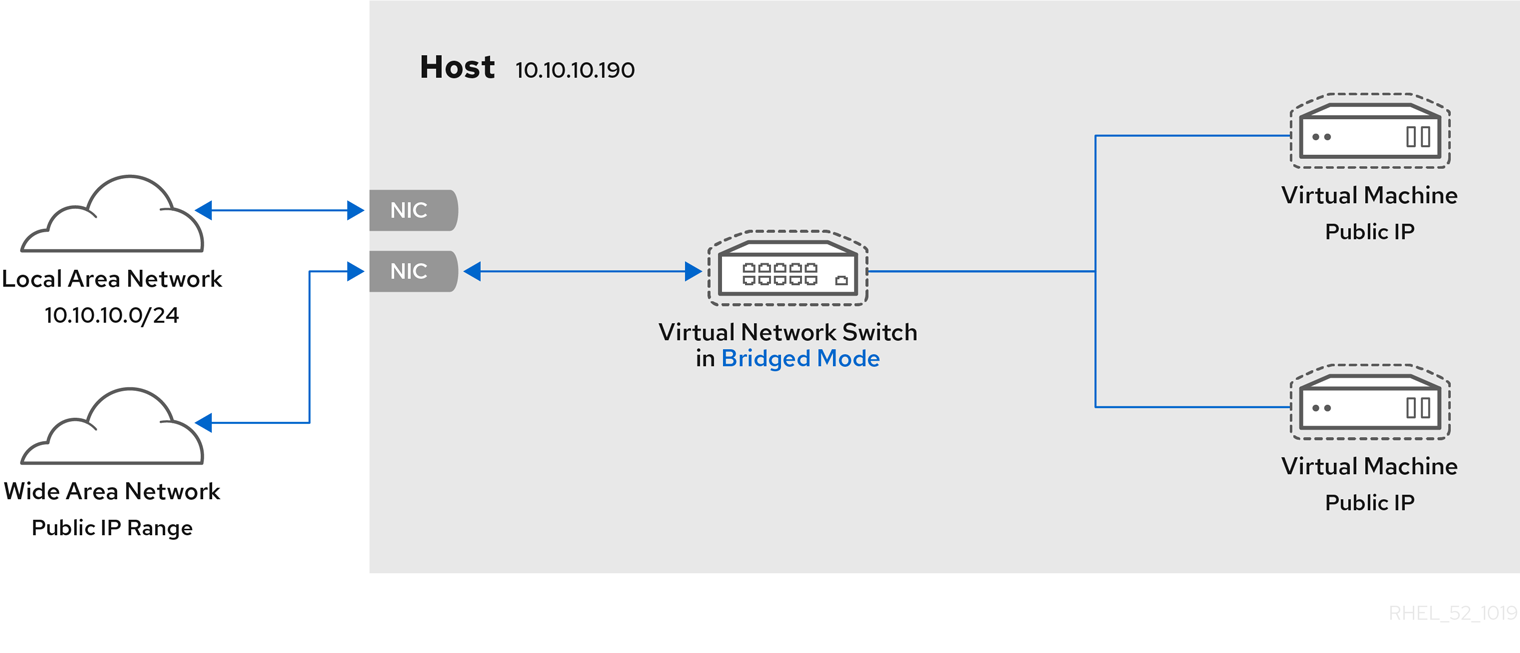

6.4. Bridged Networking

6.4.1. Configuring Bridged Networking on a Red Hat Enterprise Linux 7 Host

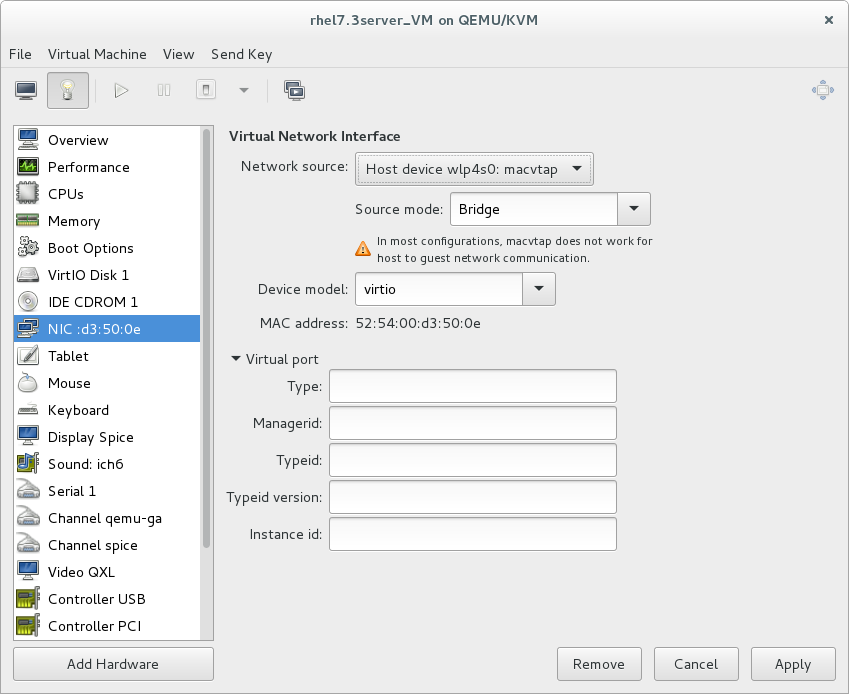

6.4.2. Bridged Networking with Virtual Machine Manager

Note

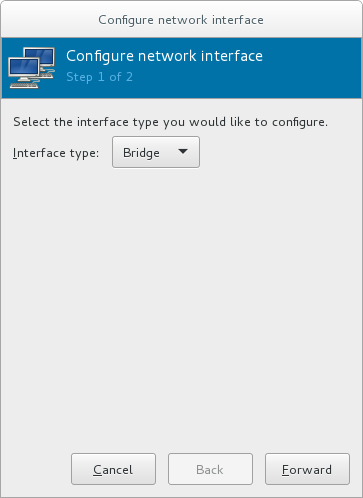

Procedure 6.1. Creating a bridge with virt-manager

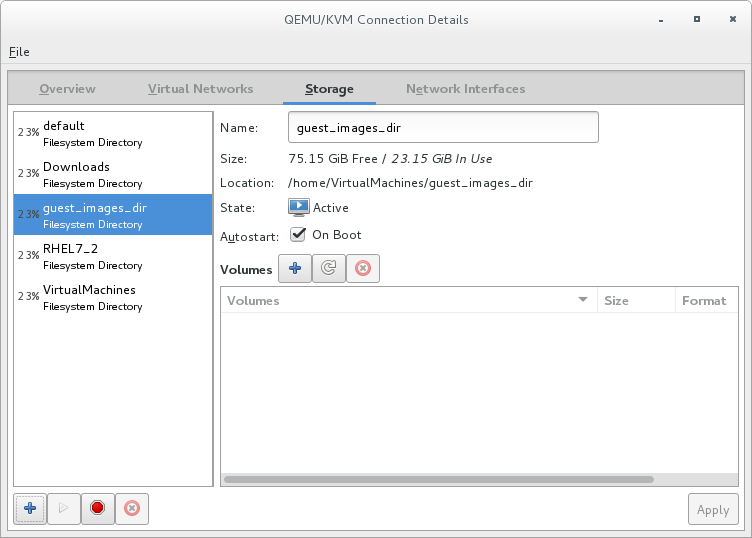

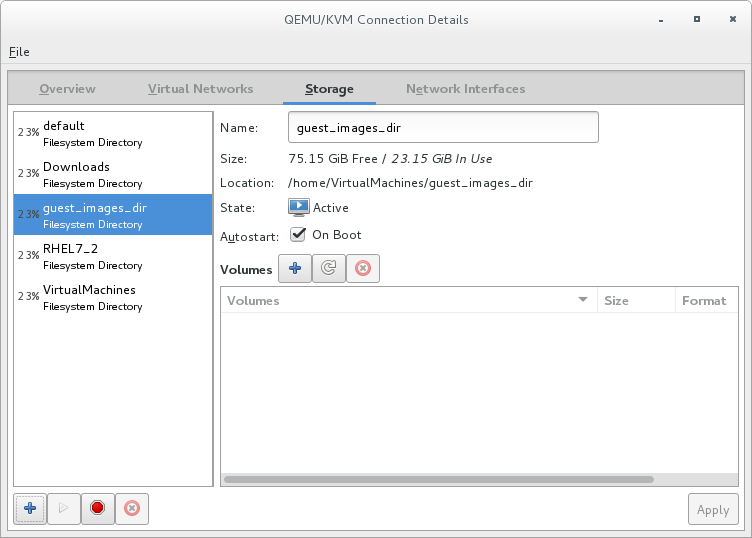

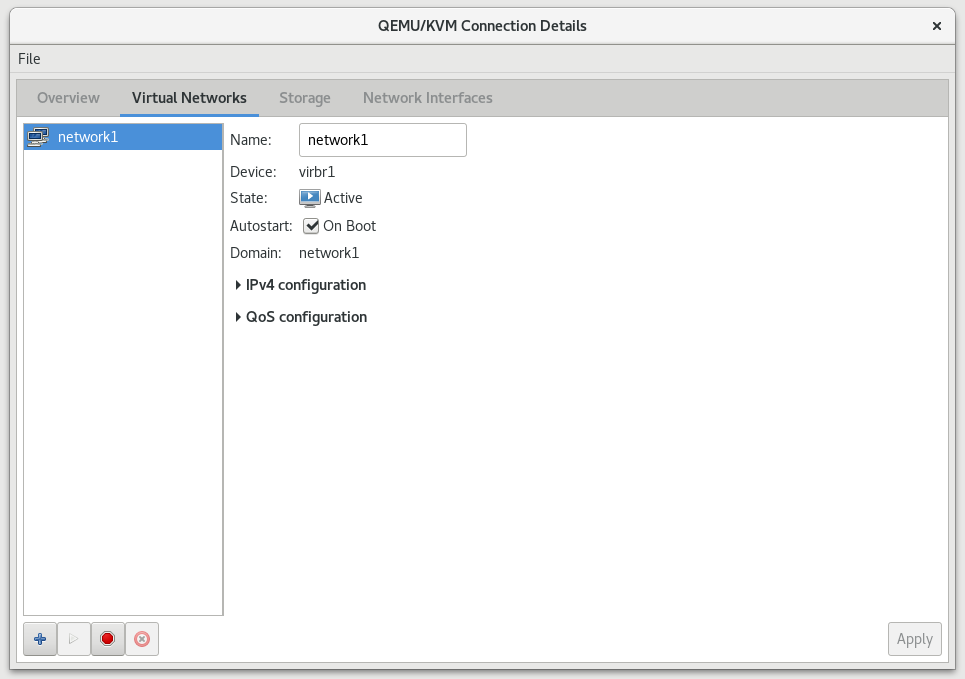

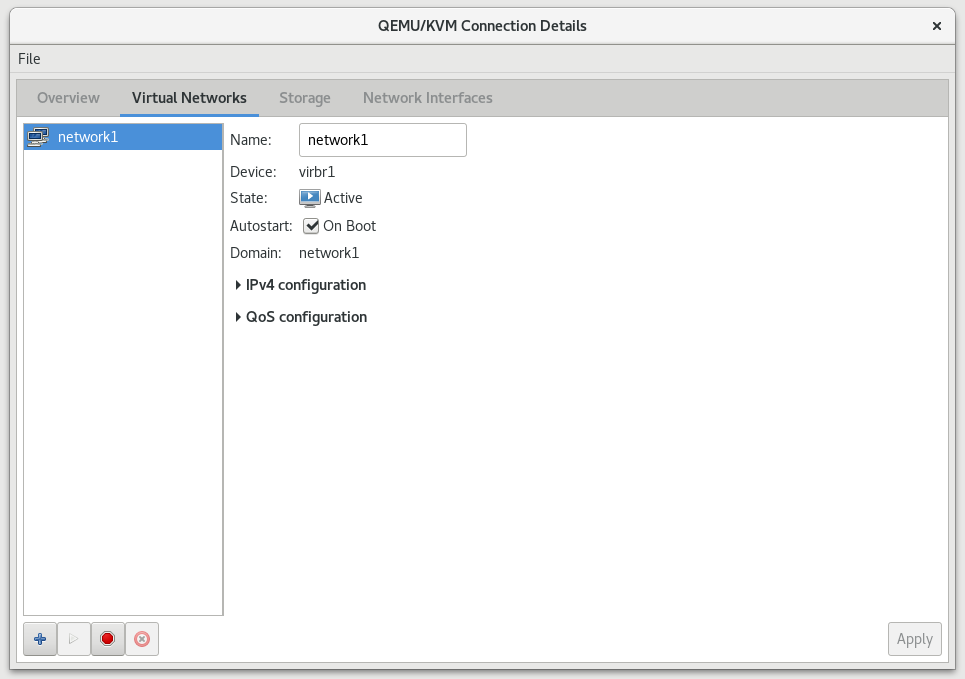

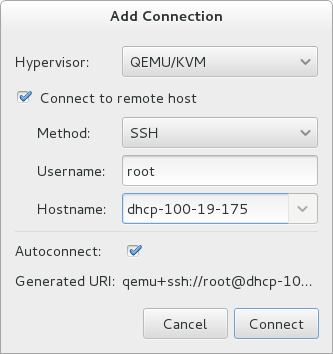

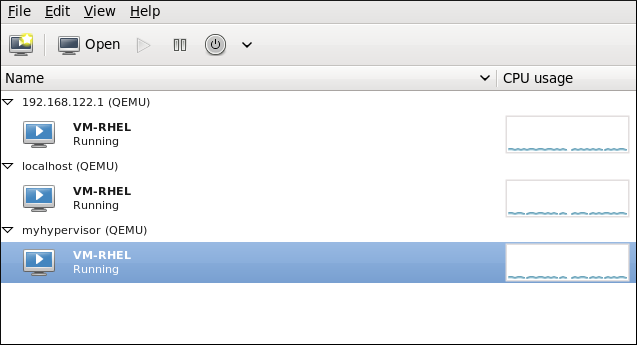

- From the virt-manager main menu, click Edit ⇒ Connection Details to open the Connection Details window.

- Click the Network Interfaces tab.

- Click the + at the bottom of the window to configure a new network interface.

- In the Interface type drop-down menu, select Bridge, and then click Forward to continue.

Figure 6.1. Adding a bridge

- In the Name field, enter a name for the bridge, such as br0.

- Select a Start mode from the drop-down menu. Choose from one of the following:

- none - deactivates the bridge

- onboot - activates the bridge on the next guest virtual machine reboot

- hotplug - activates the bridge even if the guest virtual machine is running

- Check the Activate now check box to activate the bridge immediately.

- To configure either the IP settings or Bridge settings, click the appropriate Configure button. A separate window will open to specify the required settings. Make any necessary changes and click OK when done.

- Select the physical interface to connect to your virtual machines. If the interface is currently in use by another guest virtual machine, you will receive a warning message.

- Click Finish and the wizard closes, taking you back to the Connections menu.

Figure 6.2. Adding a bridge

6.4.3. Bridged Networking with libvirt

Important

macTableManager attribute of a network's <bridge> element to 'libvirt' in the host's XML configuration file:

<bridge name='br0' macTableManager='libvirt'/>This will turn off learning (flood) mode on all bridge ports, and libvirt will add or remove entries to the FDB as necessary. Along with removing the overhead of learning the proper forwarding ports for MAC addresses, this also allows the kernel to disable promiscuous mode on the physical device that connects the bridge to the network, which further reduces overhead.

Chapter 7. Overcommitting with KVM

7.1. Introduction

7.2. Overcommitting Memory

Important

Important

7.3. Overcommitting Virtualized CPUs

Important

Chapter 8. KVM Guest Timing Management

- Interrupts cannot always be delivered simultaneously and instantaneously to all guest virtual machines. This is because interrupts in virtual machines are not true interrupts. Instead, they are injected into the guest virtual machine by the host machine.

- The host may be running another guest virtual machine, or a different process. Therefore, the precise timing typically required by interrupts may not always be possible.

Important

ntpd service. For more information, see the Red Hat Enterprise 6 Deployment Guide.

ntpd or by the chronyd service. Note that Chrony has some advantages on virtual machines. For more information, see the Configuring NTP Using the chrony Suite and Configuring NTP Using ntpd sections in the Red Hat Enterprise Linux 7 System Administrator's Guide.

By default, the guest synchronizes its time with the hypervisor as follows:

- When the guest system boots, the guest reads the time from the emulated Real Time Clock (RTC).

- When the NTP protocol is initiated, it automatically synchronizes the guest clock. Afterwards, during normal guest operation, NTP performs clock adjustments in the guest.

- When a guest is resumed after a pause or a restoration process, a command to synchronize the guest clock to a specified value should be issued by the management software (such as virt-manager). This synchronization works only if the QEMU guest agent is installed in the guest and supports the feature. The value to which the guest clock synchronizes is usually the host clock value.

Modern Intel and AMD CPUs provide a constant Time Stamp Counter (TSC). The count frequency of the constant TSC does not vary when the CPU core itself changes frequency, for example to comply with a power-saving policy. A CPU with a constant TSC frequency is necessary in order to use the TSC as a clock source for KVM guests.

constant_tsc flag is present. To determine if your CPU has the constant_tsc flag enter the following command:

$ cat /proc/cpuinfo | grep constant_tscconstant_tsc bit. If no output is given, follow the instructions below.

Systems without a constant TSC frequency cannot use the TSC as a clock source for virtual machines, and require additional configuration. Power management features interfere with accurate time keeping and must be disabled for guest virtual machines to accurately keep time with KVM.

Important

constant_tsc bit, disable all power management features . Each system has several timers it uses to keep time. The TSC is not stable on the host, which is sometimes caused by cpufreq changes, deep C state, or migration to a host with a faster TSC. Deep C sleep states can stop the TSC. To prevent the kernel using deep C states append processor.max_cstate=1 to the kernel boot. To make this change persistent, edit values of the GRUB_CMDLINE_LINUX key in the /etc/default/grubfile. For example. if you want to enable emergency mode for each boot, edit the entry as follows:

GRUB_CMDLINE_LINUX="emergency"

cpufreq (only necessary on hosts without the constant_tsc), install kernel-tools and enable the cpupower.service (systemctl enable cpupower.service). If you want to disable this service every time the guest virtual machine boots, change the configuration file in /etc/sysconfig/cpupower and change the CPUPOWER_START_OPTS and CPUPOWER_STOP_OPTS. Valid limits can be found in the /sys/devices/system/cpu/cpuid/cpufreq/scaling_available_governors files. For more information on this package or on power management and governors, see the Red Hat Enterprise Linux 7 Power Management Guide.

8.1. Host-wide Time Synchronization

Important

- Set the

ptp_kvmmodule to load after reboot.#

echo ptp_kvm > /etc/modules-load.d/ptp_kvm.conf - Add the

/dev/ptp0clock as a reference to the chrony configuration:#

echo "refclock PHC /dev/ptp0 poll 2" >> /etc/chrony.conf - Restart the chrony daemon:

#

systemctl restart chronyd - To verify the host-guest time synchronization has been configured correctly, use the

chronyc sourcescommand on a guest. The output should look similar to the following:#

chronyc sources210 Number of sources = 1 MS Name/IP address Stratum Poll Reach LastRx Last sample =============================================================================== #* PHC0 0 2 377 4 -6ns[ -6ns] +/- 726ns

8.2. Required Time Management Parameters for Red Hat Enterprise Linux Guests

/kernel line in the /etc/grub2.cfg file of the guest virtual machine.

Note

Table 8.1. Kernel parameter requirements

| Red Hat Enterprise Linux version | Additional guest kernel parameters |

|---|---|

| 7.0 and later on AMD64 and Intel 64 systems with kvm-clock | Additional parameters are not required |

| 6.1 and later on AMD64 and Intel 64 systems with kvm-clock | Additional parameters are not required |

| 6.0 on AMD64 and Intel 64 systems with kvm-clock | Additional parameters are not required |

| 6.0 on AMD64 and Intel 64 systems without kvm-clock | notsc lpj=n |

Note

lpj parameter requires a numeric value equal to the loops per jiffy value of the specific CPU on which the guest virtual machine runs. If you do not know this value, do not set the lpj parameter.

8.3. Steal Time Accounting

/proc/stat. It is automatically reported by utilities such as top and vmstat. It is displayed as "%st", or in the "st" column. Note that it cannot be switched off.

Chapter 9. Network Booting with libvirt

Warning

9.1. Preparing the Boot Server

- A PXE Server (DHCP and TFTP) - This can be a libvirt internal server, manually-configured dhcpd and tftpd, dnsmasq, a server configured by Cobbler, or some other server.

- Boot images - for example, PXELINUX configured manually or by Cobbler.

9.1.1. Setting up a PXE Boot Server on a Private libvirt Network

Procedure 9.1. Configuring the PXE boot server

- Place the PXE boot images and configuration in

/var/lib/tftpboot. - enter the following commands:

#

virsh net-destroy default#virsh net-edit default - Edit the

<ip>element in the configuration file for the default network to include the appropriate address, network mask, DHCP address range, and boot file, where BOOT_FILENAME represents the file name you are using to boot the guest virtual machine.<ip address='192.168.122.1' netmask='255.255.255.0'> <tftp root='/var/lib/tftpboot' /> <dhcp> <range start='192.168.122.2' end='192.168.122.254' /> <bootp file='BOOT_FILENAME' /> </dhcp> </ip> - Run:

#

virsh net-start default - Boot the guest using PXE (refer to Section 9.2, “Booting a Guest Using PXE”).

9.2. Booting a Guest Using PXE

9.2.1. Using bridged networking

Procedure 9.2. Booting a guest using PXE and bridged networking

- Ensure bridging is enabled such that the PXE boot server is available on the network.

- Boot a guest virtual machine with PXE booting enabled. You can use the

virt-installcommand to create a new virtual machine with PXE booting enabled, as shown in the following example command:virt-install --pxe --network bridge=breth0 --prompt

Alternatively, ensure that the guest network is configured to use your bridged network, and that the XML guest configuration file has a<boot dev='network'/>element inside the<os>element, as shown in the following example:<os> <type arch='x86_64' machine='pc-i440fx-rhel7.0.0'>hvm</type> <boot dev='network'/> <boot dev='hd'/> </os> <interface type='bridge'> <mac address='52:54:00:5a:ad:cb'/> <source bridge='breth0'/> <target dev='vnet0'/> <alias name='net0'/> <address type='pci' domain='0x0000' bus='0x00' slot='0x03' function='0x0'/> </interface>

9.2.2. Using a Private libvirt Network

Procedure 9.3. Using a private libvirt network

- Configure PXE booting on libvirt as shown in Section 9.1.1, “Setting up a PXE Boot Server on a Private libvirt Network”.

- Boot a guest virtual machine using libvirt with PXE booting enabled. You can use the

virt-installcommand to create/install a new virtual machine using PXE:virt-install --pxe --network network=default --prompt

<boot dev='network'/> element inside the <os> element, as shown in the following example:

<os> <type arch='x86_64' machine='pc-i440fx-rhel7.0.0'>hvm</type> <boot dev='network'/> <boot dev='hd'/> </os>

<interface type='network'> <mac address='52:54:00:66:79:14'/> <source network='default'/> <target dev='vnet0'/> <alias name='net0'/> <address type='pci' domain='0x0000' bus='0x00' slot='0x03' function='0x0'/> </interface>

Chapter 10. Registering the Hypervisor and Virtual Machine

- Subscriptions specific to virtual systems are readily available and can be applied to all of the associated guest VMs.

- All subscription benefits that can be inherited from the hypervisor are readily available and can be applied to all of the associated guest VMs.

Note

10.1. Installing virt-who on the Host Physical Machine

Register the KVM hypervisor

Register the KVM Hypervisor by running thesubscription-manager register [options]command in a terminal as the root user on the host physical machine. More options are available using the #subscription-manager register --helpmenu. In cases where you are using a user name and password, use the credentials that are known to the Subscription Manager application. If this is your very first time subscribing and you do not have a user account, contact customer support. For example to register the VM as 'admin' with 'secret' as a password, you would send the following command:[root@rhel-server ~]#

subscription-manager register --username=admin --password=secret --auto-attachInstall the virt-who packages

Install the virt-who packages, by running the following command on the host physical machine:#

yum install virt-whoCreate a virt-who configuration file

For each hypervisor, add a configuration file in the/etc/virt-who.d/directory. At a minimum, the file must contain the following snippet:[libvirt] type=libvirt

For more detailed information on configuringvirt-who, see Section 10.1.1, “Configuringvirt-who”.Start the virt-who service

Start the virt-who service by running the following command on the host physical machine:#

systemctl start virt-who.service#systemctl enable virt-who.serviceConfirm virt-who service is receiving guest information

At this point, the virt-who service will start collecting a list of domains from the host. Check the/var/log/rhsm/rhsm.logfile on the host physical machine to confirm that the file contains a list of the guest VMs. For example:2015-05-28 12:33:31,424 DEBUG: Libvirt domains found: [{'guestId': '58d59128-cfbb-4f2c-93de-230307db2ce0', 'attributes': {'active': 0, 'virtWhoType': 'libvirt', 'hypervisorType': 'QEMU'}, 'state': 5}]

Procedure 10.1. Managing the subscription on the customer portal

Subscribing the hypervisor

As the virtual machines will be receiving the same subscription benefits as the hypervisor, it is important that the hypervisor has a valid subscription and that the subscription is available for the VMs to use.Log in to the Customer Portal

Provide your Red Hat account credentials at the Red Hat Customer Portal to log in.Click the Systems link

Go to the Systems section of the My Subscriptions interface.Select the hypervisor

On the Systems page, there is a table of all subscribed systems. Click the name of the hypervisor (for examplelocalhost.localdomain). In the details page that opens, click Attach a subscription and select all the subscriptions listed. Click Attach Selected. This will attach the host's physical subscription to the hypervisor so that the guests can benefit from the subscription.

Subscribing the guest virtual machines - first time use

This step is for those who have a new subscription and have never subscribed a guest virtual machine before. If you are adding virtual machines, skip this step. To consume the subscription assigned to the hypervisor profile on the machine running the virt-who service, auto subscribe by running the following command in a terminal on the guest virtual machine.[root@virt-who ~]#

subscription-manager attach --autoSubscribing additional guest virtual machines

If you just subscribed a virtual machine for the first time, skip this step. If you are adding additional virtual machines, note that running this command will not necessarily re-attach the same subscriptions to the guest virtual machine. This is because removing all subscriptions then allowing auto-attach to resolve what is necessary for a given guest virtual machine may result in different subscriptions consumed than before. This may not have any effect on your system, but it is something you should be aware about. If you used a manual attachment procedure to attach the virtual machine, which is not described below, you will need to re-attach those virtual machines manually as the auto-attach will not work. Use the following command to first remove the subscriptions for the old guests, and then use the auto-attach to attach subscriptions to all the guests. Run these commands on the guest virtual machine.[root@virt-who ~]#

subscription-manager remove --all[root@virt-who ~]#subscription-manager attach --autoConfirm subscriptions are attached

Confirm that the subscription is attached to the hypervisor by running the following command on the guest virtual machine:[root@virt-who ~]#

subscription-manager list --consumedOutput similar to the following will be displayed. Pay attention to the Subscription Details. It should say 'Subscription is current'.[root@virt-who ~]#

subscription-manager+-------------------------------------------+ Consumed Subscriptions +-------------------------------------------+ Subscription Name: Awesome OS with unlimited virtual guests Provides: Awesome OS Server Bits SKU: awesomeos-virt-unlimited Contract: 0 Account: ######### Your account number ##### Serial: ######### Your serial number ###### Pool ID: XYZ123list --consumed Provides Management: No

Active: True

Quantity Used: 1

Service Level:

Service Type:

Status Details: Subscription is current

Provides Management: No

Active: True

Quantity Used: 1

Service Level:

Service Type:

Status Details: Subscription is current  Subscription Type:

Starts: 01/01/2015

Ends: 12/31/2015

System Type: Virtual

Subscription Type:

Starts: 01/01/2015

Ends: 12/31/2015

System Type: Virtual

The ID for the subscription to attach to the system is displayed here. You will need this ID if you need to attach the subscription manually.

The ID for the subscription to attach to the system is displayed here. You will need this ID if you need to attach the subscription manually. Indicates if your subscription is current. If your subscription is not current, an error message appears. One example is Guest has not been reported on any host and is using a temporary unmapped guest subscription. In this case the guest needs to be subscribed. In other cases, use the information as indicated in Section 10.5.2, “I have subscription status errors, what do I do?”.

Indicates if your subscription is current. If your subscription is not current, an error message appears. One example is Guest has not been reported on any host and is using a temporary unmapped guest subscription. In this case the guest needs to be subscribed. In other cases, use the information as indicated in Section 10.5.2, “I have subscription status errors, what do I do?”.Register additional guests

When you install new guest VMs on the hypervisor, you must register the new VM and use the subscription attached to the hypervisor, by running the following commands on the guest virtual machine:#

subscription-manager register#subscription-manager attach --auto#subscription-manager list --consumed

10.1.1. Configuring virt-who

virt-who service is configured using the following files:

/etc/virt-who.conf- Contains general configuration information including the interval for checking connected hypervisors for changes./etc/virt-who.d/hypervisor_name.conf- Contains configuration information for a specific hypervisor.

virt-who.conf. To run the wizard, browse to Red Hat Virtualization Agent (virt-who) Configuration Helper on the Customer Portal.

- Where does your virt-who report to?:

Subscription Asset Manager - Hypervisor Type:

libvirt

virt-who will automatically provide the selected subscriptions to existing and future guests on the specified hypervisor.

virt-who-config man page.

10.2. Registering a New Guest Virtual Machine

[root@virt-server ~]# subscription-manager register --username=admin --password=secret --auto-attach10.3. Removing a Guest Virtual Machine Entry

[root@virt-guest ~]# subscription-manager unregisterLogin to the Subscription Manager

The Subscription Manager is located on the Red Hat Customer Portal. Login to the Customer Portal using your user name and password, by clicking the login icon at the top of the screen.Click the Subscriptions tab

Click the Subscriptions tab.Click the Systems link

Scroll down the page and click the Systems link.Delete the system

To delete the system profile, locate the specified system's profile in the table, select the check box beside its name and click Delete.

10.4. Installing virt-who Manually

Procedure 10.2. How to attach a subscription manually

List subscription information and find the Pool ID

First you need to list the available subscriptions which are of the virtual type. Enter the following command:[root@server1 ~]#

subscription-manager listSubscription Name: Red Hat Enterprise Linux ES (Basic for Virtualization) Provides: Red Hat Beta Oracle Java (for RHEL Server) Red Hat Enterprise Linux Server SKU: ------- Pool ID: XYZ123 Available: 40 Suggested: 1 Service Level: Basic Service Type: L1-L3 Multi-Entitlement: No Ends: 01/02/2017 System Type: Virtual--avail --match-installed | grep 'Virtual' -B12Note the Pool ID displayed. Copy this ID as you will need it in the next step.Attach the subscription with the Pool ID

Using the Pool ID you copied in the previous step run the attach command. Replace the Pool ID XYZ123 with the Pool ID you retrieved. Enter the following command:[root@server1 ~]#

subscription-manager attachSuccessfully attached a subscription for: Red Hat Enterprise Linux ES (Basic for Virtualization)--pool=XYZ123

10.5. Troubleshooting virt-who

10.5.1. Why is the hypervisor status red?

10.5.2. I have subscription status errors, what do I do?

- System not properly subscribed

- Status unknown

- Late binding of a guest to a hypervisor through virt-who (host/guest mapping)

rhsm.log, located in the /var/log/rhsm/ directory.

Chapter 11. Enhancing Virtualization with the QEMU Guest Agent and SPICE Agent

Note

11.1. QEMU Guest Agent

Important

11.1.1. Setting up Communication between the QEMU Guest Agent and Host

Note

11.1.1.1. Configuring the QEMU Guest Agent on a Linux Guest

virsh or virt-manager can be used to configure communication between the guest and the QEMU guest agent. The following instructions describe how to configure the QEMU guest agent on a Linux guest.

Procedure 11.1. Setting up communication between guest agent and host with virsh on a shut down Linux guest

Shut down the virtual machine

Ensure the virtual machine (named rhel7 in this example) is shut down before configuring the QEMU guest agent:#

virsh shutdown rhel7Add the QEMU guest agent channel to the guest XML configuration

Edit the guest's XML file to add the QEMU guest agent details:#

virsh edit rhel7Add the following to the guest's XML file and save the changes:<channel type='unix'> <target type='virtio' name='org.qemu.guest_agent.0'/> </channel>

Start the virtual machine

#

virsh start rhel7Install the QEMU guest agent on the guest

Install the QEMU guest agent if not yet installed in the guest virtual machine:#

yum install qemu-guest-agentStart the QEMU guest agent in the guest

Start the QEMU guest agent service in the guest:#

systemctl start qemu-guest-agent

Procedure 11.2. Setting up communication between guest agent and host on a running Linux guest

Create an XML file for the QEMU guest agent

#

cat agent.xml<channel type='unix'> <target type='virtio' name='org.qemu.guest_agent.0'/> </channel>Attach the QEMU guest agent to the virtual machine

Attach the QEMU guest agent to the running virtual machine (named rhel7 in this example) with this command:#

virsh attach-device rhel7 agent.xmlInstall the QEMU guest agent on the guest

Install the QEMU guest agent if not yet installed in the guest virtual machine:#

yum install qemu-guest-agentStart the QEMU guest agent in the guest

Start the QEMU guest agent service in the guest:#

systemctl start qemu-guest-agent

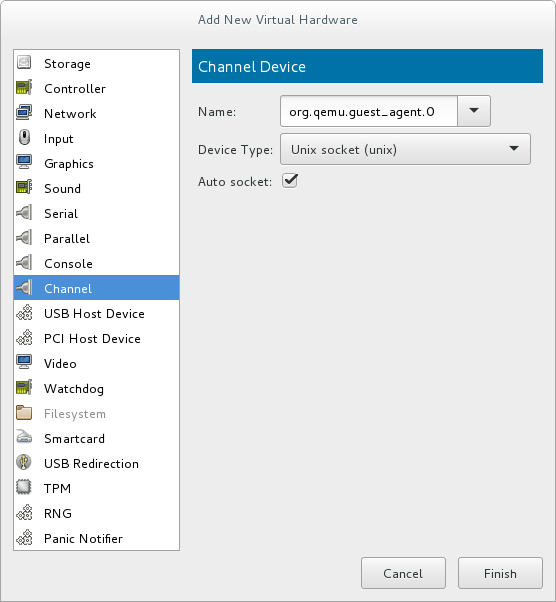

Procedure 11.3. Setting up communication between the QEMU guest agent and host with virt-manager

Shut down the virtual machine

Ensure the virtual machine is shut down before configuring the QEMU guest agent.To shut down the virtual machine, select it from the list of virtual machines in Virtual Machine Manager, then click the light switch icon from the menu bar.Add the QEMU guest agent channel to the guest

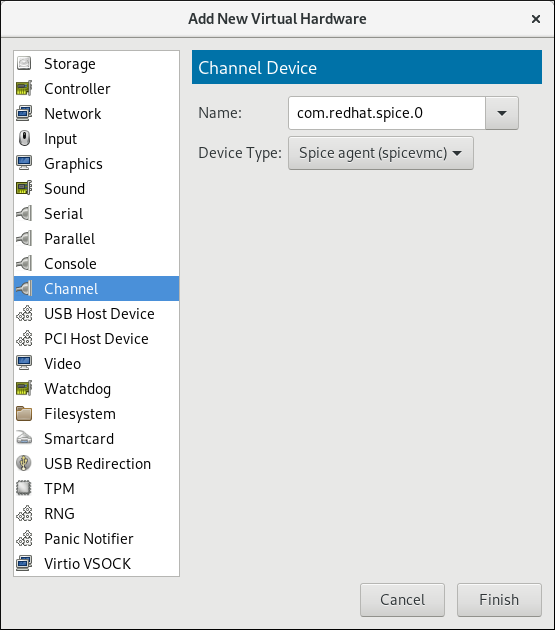

Open the virtual machine's hardware details by clicking the lightbulb icon at the top of the guest window.Click the Add Hardware button to open the Add New Virtual Hardware window, and select Channel.Select the QEMU guest agent from the Name drop-down list and click Finish:

Figure 11.1. Selecting the QEMU guest agent channel device

Start the virtual machine

To start the virtual machine, select it from the list of virtual machines in Virtual Machine Manager, then click on the menu bar.

on the menu bar.

Install the QEMU guest agent on the guest

Open the guest with virt-manager and install the QEMU guest agent if not yet installed in the guest virtual machine:#

yum install qemu-guest-agentStart the QEMU guest agent in the guest

Start the QEMU guest agent service in the guest:#

systemctl start qemu-guest-agent

11.2. Using the QEMU Guest Agent with libvirt

virsh commands:

virsh shutdown --mode=agent- This shutdown method is more reliable thanvirsh shutdown --mode=acpi, asvirsh shutdownused with the QEMU guest agent is guaranteed to shut down a cooperative guest in a clean state. If the agent is not present, libvirt must instead rely on injecting an ACPI shutdown event, but some guests ignore that event and thus will not shut down.Can be used with the same syntax forvirsh reboot.virsh snapshot-create --quiesce- Allows the guest to flush its I/O into a stable state before the snapshot is created, which allows use of the snapshot without having to perform a fsck or losing partial database transactions. The guest agent allows a high level of disk contents stability by providing guest co-operation.virsh domfsfreezeandvirsh domfsthaw- Quiesces the guest filesystem in isolation.virsh domfstrim- Instructs the guest to trim its filesystem.virsh domtime- Queries or sets the guest's clock.virsh setvcpus --guest- Instructs the guest to take CPUs offline.virsh domifaddr --source agent- Queries the guest operating system's IP address via the guest agent.virsh domfsinfo- Shows a list of mounted filesystems within the running guest.virsh set-user-password- Sets the password for a user account in the guest.

11.2.1. Creating a Guest Disk Backup

- File system applications / databases flush working buffers to the virtual disk and stop accepting client connections

- Applications bring their data files into a consistent state

- Main hook script returns

- qemu-guest-agent freezes the filesystems and the management stack takes a snapshot

- Snapshot is confirmed

- Filesystem function resumes

virsh snapshot-create --quiesce --disk-only command (alternatively, run virsh snapshot-create-as guest_name --quiesce --disk-only, explained in further detail in Section 20.39.2, “Creating a Snapshot for the Current Guest Virtual Machine”).

Note

restorecon -FvvR command listed in Table 11.1, “QEMU guest agent package contents” in the table row labeled /etc/qemu-ga/fsfreeze-hook.d/.

Table 11.1. QEMU guest agent package contents

| File name | Description |

|---|---|

/usr/lib/systemd/system/qemu-guest-agent.service | Service control script (start/stop) for the QEMU guest agent. |

/etc/sysconfig/qemu-ga | Configuration file for the QEMU guest agent, as it is read by the /usr/lib/systemd/system/qemu-guest-agent.service control script. The settings are documented in the file with shell script comments. |

/usr/bin/qemu-ga | QEMU guest agent binary file. |

/etc/qemu-ga | Root directory for hook scripts. |

/etc/qemu-ga/fsfreeze-hook | Main hook script. No modifications are needed here. |

/etc/qemu-ga/fsfreeze-hook.d | Directory for individual, application-specific hook scripts. The guest system administrator should copy hook scripts manually into this directory, ensure proper file mode bits for them, and then run restorecon -FvvR on this directory. |

/usr/share/qemu-kvm/qemu-ga/ | Directory with sample scripts (for example purposes only). The scripts contained here are not executed. |

/etc/qemu-ga/fsfreeze-hook logs its own messages, as well as the application-specific script's standard output and error messages, in the following log file: /var/log/qemu-ga/fsfreeze-hook.log. For more information, see the libvirt upstream website.

11.3. SPICE Agent

11.3.1. Setting up Communication between the SPICE Agent and Host

virsh or virt-manager can be used to configure communication between the guest and the SPICE agent. The following instructions describe how to configure the SPICE agent on a Linux guest.

Procedure 11.4. Setting up communication between guest agent and host with virsh on a Linux guest

Shut down the virtual machine

Ensure the virtual machine (named rhel7 in this example) is shut down before configuring the SPICE agent:#

virsh shutdown rhel7Add the SPICE agent channel to the guest XML configuration

Edit the guest's XML file to add the SPICE agent details:#

virsh edit rhel7Add the following to the guest's XML file and save the changes:<channel type='spicevmc'> <target type='virtio' name='com.redhat.spice.0'/> </channel>

Start the virtual machine

#

virsh start rhel7Install the SPICE agent on the guest

Install the SPICE agent if not yet installed in the guest virtual machine:#

yum install spice-vdagentStart the SPICE agent in the guest

Start the SPICE agent service in the guest:#

systemctl start spice-vdagent

Procedure 11.5. Setting up communication between SPICE agent and host on a running Linux guest

Create an XML file for the SPICE agent

#

cat agent.xml<channel type='spicevmc'> <target type='virtio' name='com.redhat.spice.0'/> </channel>Attach the SPICE agent to the virtual machine

Attach the SPICE agent to the running virtual machine (named rhel7 in this example) with this command:#

virsh attach-device rhel7 agent.xmlInstall the SPICE agent on the guest

Install the SPICE agent if not yet installed in the guest virtual machine:#

yum install spice-vdagentStart the SPICE agent in the guest

Start the SPICE agent service in the guest:#

systemctl start spice-vdagent

Procedure 11.6. Setting up communication between the SPICE agent and host with virt-manager

Shut down the virtual machine

Ensure the virtual machine is shut down before configuring the SPICE agent.To shut down the virtual machine, select it from the list of virtual machines in Virtual Machine Manager, then click the light switch icon from the menu bar.Add the SPICE agent channel to the guest

Open the virtual machine's hardware details by clicking the lightbulb icon at the top of the guest window.Click the Add Hardware button to open the Add New Virtual Hardware window, and select Channel.Select the SPICE agent from the Name drop-down list, edit the channel address, and click Finish:

Figure 11.2. Selecting the SPICE agent channel device

Start the virtual machine

To start the virtual machine, select it from the list of virtual machines in Virtual Machine Manager, then click on the menu bar.

on the menu bar.

Install the SPICE agent on the guest

Open the guest with virt-manager and install the SPICE agent if not yet installed in the guest virtual machine:#

yum install spice-vdagentStart the SPICE agent in the guest

Start the SPICE agent service in the guest:#

systemctl start spice-vdagent

Chapter 12. Nested Virtualization

12.1. Overview

12.2. Setup

- Enable: The feature is disabled by default. To enable it, use the following procedure on the L0 host physical machine.For Intel:

- Check whether nested virtualization is available on your host system.

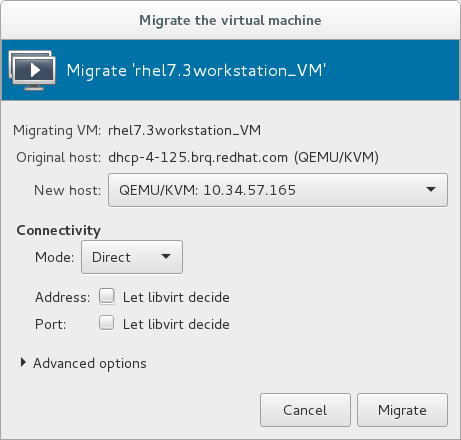

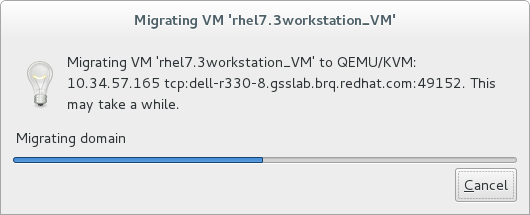

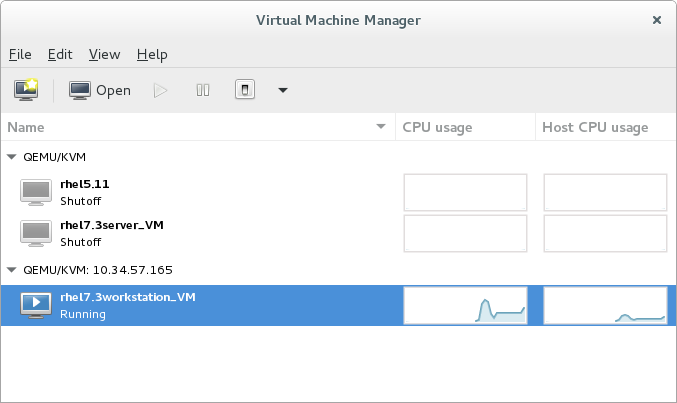

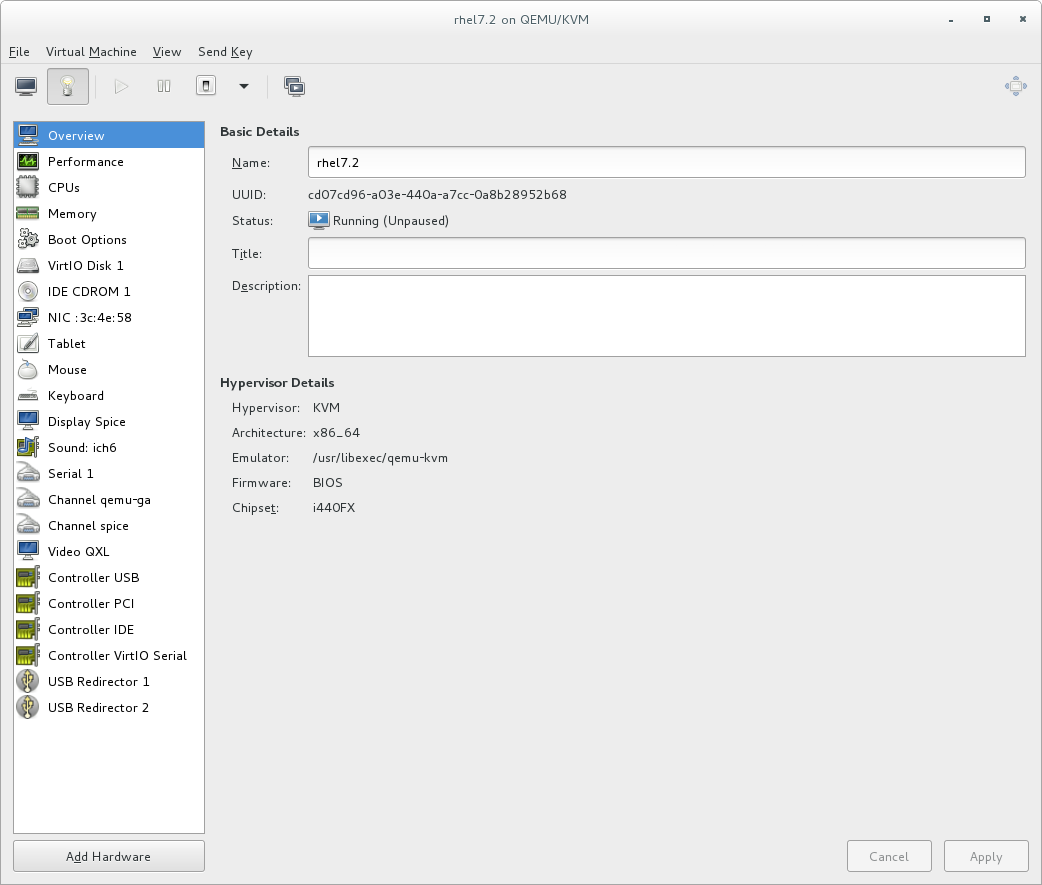

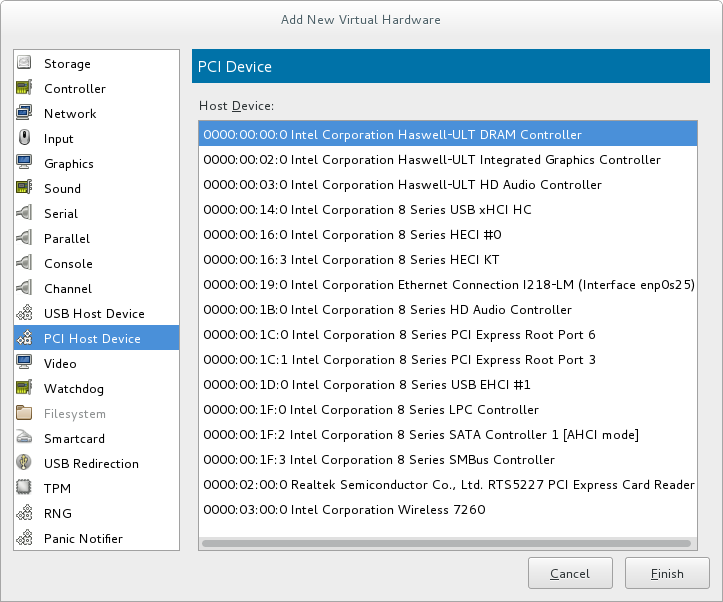

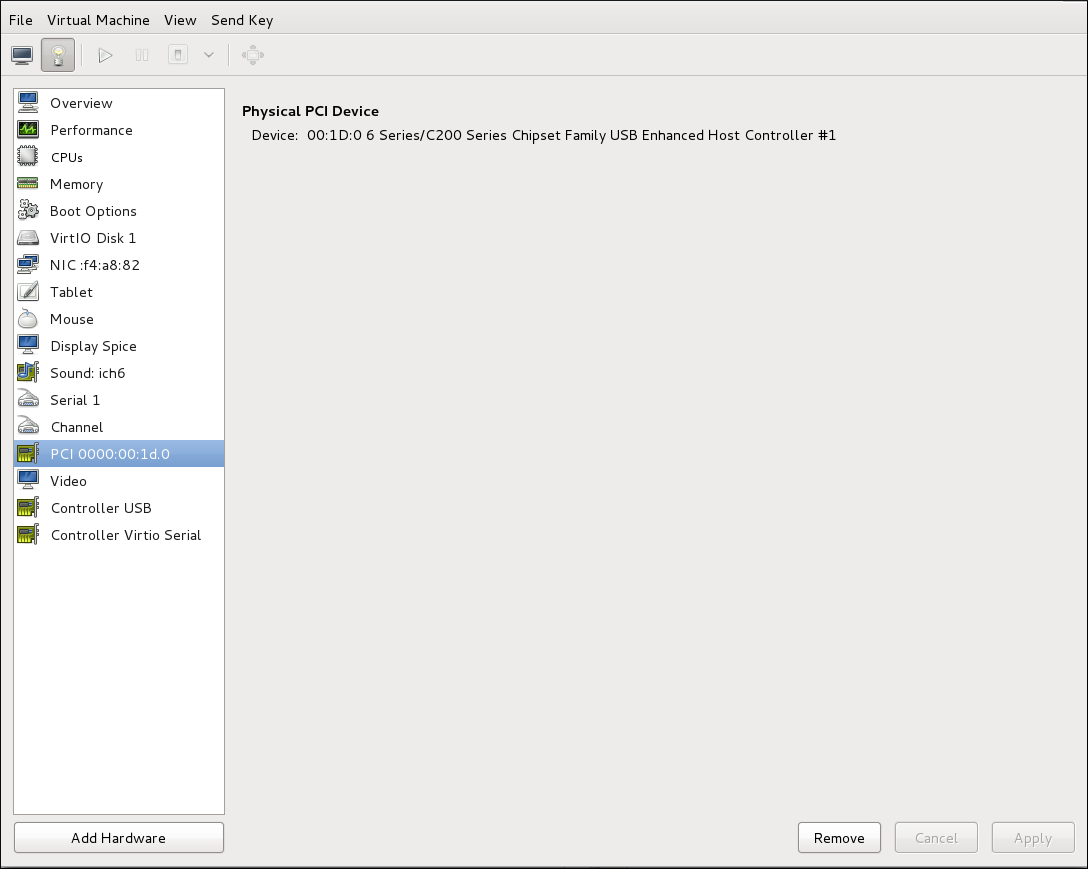

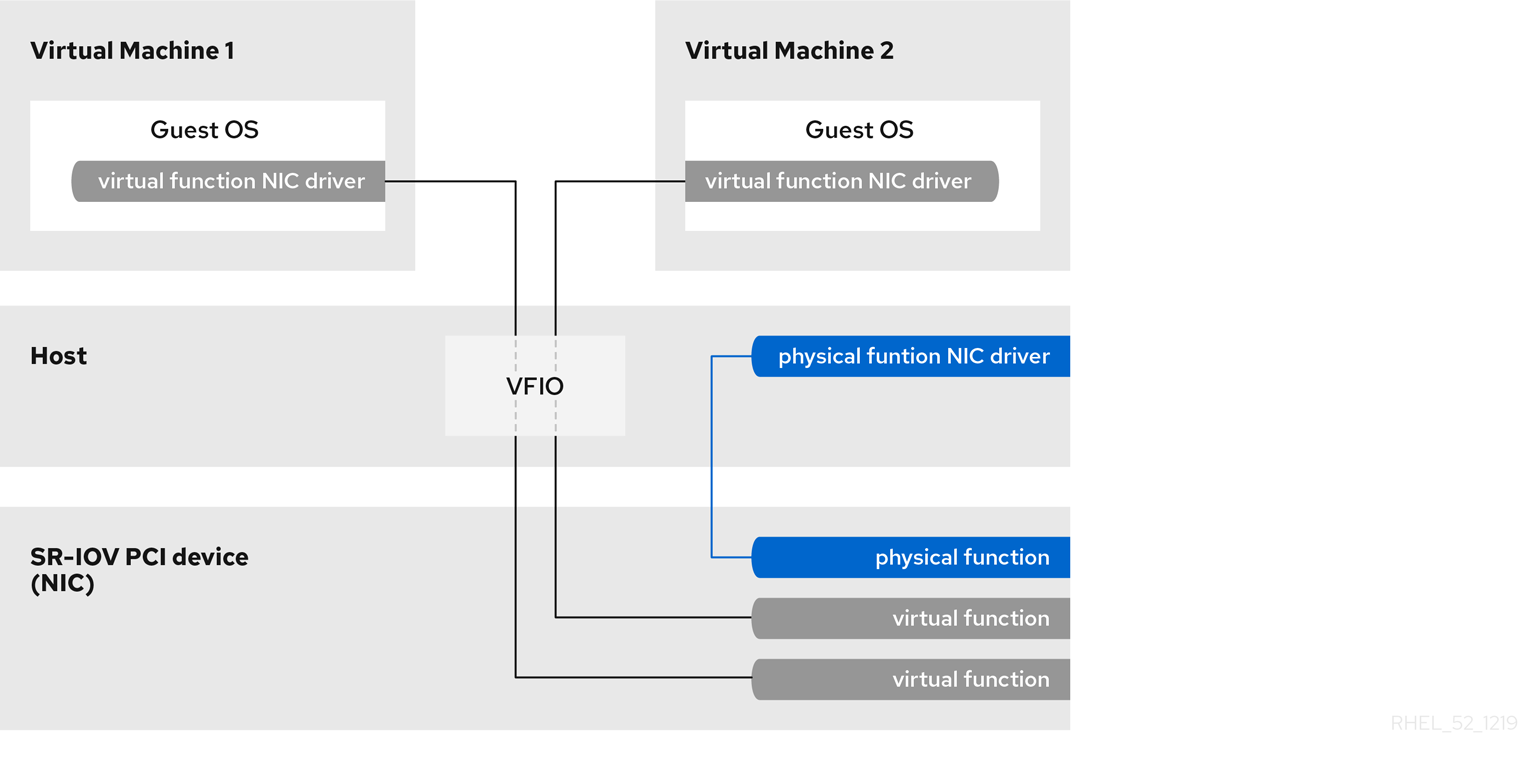

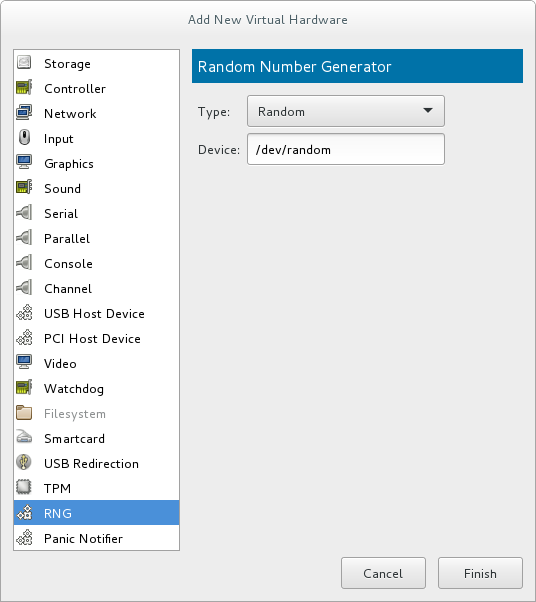

$