HornetQ User Guide

for use with JBoss Enterprise Application Platform 5

Edition 5.2.0

Andy Taylor

Jared Morgan

Laura Bailey

Rebecca Newton

Edited by

Eva Kopalova

Edited by

Petr Penicka

Edited by

Russell Dickenson

Edited by

Scott Mumford

Abstract

Chapter 1. Introduction

Chapter 2. Migrating to HornetQ

2.1. Before You Migrate

Important

Note

Warning

2.1.1. Back up relevant data

2.1.1.1. JBoss Messaging database tables

- JBM_MSG_REF, JBM_MSG

- These tables store persistent messages and their states.

- JBM_TX, JBM_TX_EX

- These tables store transaction states.

- JBM_USER, JBM_ROLE

- These tables store user and role information.

- JBM_POSTOFFICE

- This table holds bindings information.

2.1.1.2. JBoss Messaging configuration files

$JBOSS_HOME/server/$PROFILE/deploy/messaging, assuming your JBoss Messaging server profile is messaging. Applications can choose other locations in which to deploy some configuration files.

- Connection Factory service configuration files

- Contain JMS connection factories deployed with the JBoss Messaging server.

- Destination service configuration files

- Contain JMS queues and topics deployed with the JBoss Messaging server.

- Bridge service configuration files

- Contain bridge services deployed with the JBoss Messaging server.

messaging-service.xml and the database persistence configuration file are JBoss Messaging MBeans configurations. The HornetQ implementation consists only of Plain Old Java Objects (POJOs), so these configuration files are not migration targets.

2.2. Application Code

Table 2.1. Implementation class mapping between JBoss Messaging and HornetQ

| org.jboss.jms.client. Classname | HornetQ Equivalent Classname |
|---|---|
| JBossConnectionFactory | org.hornetq.jms.client.HornetQConnectionFactory |
| JBossConnection | org.hornetq.jms.client.HornetQConnection |
| JBossSession | org.hornetq.jms.client.HornetQSession |
| JBossMessageProducer | org.hornetq.jms.client.HornetQMessageProducer |
| JBossMessageConsumer | org.hornetq.jms.client.HornetQMessageConsumer |

Note

2.3. Client-side Failure Handling

producer.send(createMessage(session, i));

System.out.println("Message: " + i);

try {

producer.send(createMessage(session, i));

System.out.println("Message: " + i);

} catch (Exception e) {

Thread.sleep(1000);

producer.send(createMessage(session, i));

}

2.4. Installing HornetQ

2.5. Server Configuration Migration

<JBOSS_HOME>/jboss-as/server/<PROFILE>/deploy/hornetq/hornetq-configuration.xml. The full set of supported directives in this file is documented in Appendix A, Configuration Reference.

Table 2.2. Server attribute mapping between JBoss Messaging and HornetQ

| JBoss Messaging Server Attributes (Server Peer MBean) | Equivalent HornetQ Server Attributes |
|---|---|
| ServerPeerID | N/A - HornetQ does not require a specified server ID |
| DefaultQueueJNDIContext, DefaultTopicJNDIContext | N/A |
| PostOffice | N/A |
| DefaultDLQ | N/A - HornetQ defines dead letter addresses at the core level. There is no default dead letter address for an address unless you specify one. |
| DefaultMaxDeliveryAttempts | N/A - In HornetQ, the default is always 10. |
| DefaultExpiryQueue | N/A - HornetQ defines expiry addresses at the core level. There is no default expiry address for an address unless you specify one. |
| DefaultRedeliveryDelay | N/A - HornetQ's default redelivery delay is always 0 (no delay). |
| MessageCounterSamplePeriod | message-counter-sample-period |
| FailoverStartTimeout | N/A |
| FailoverCompleteTimeout | N/A |
| DefaultMessageCounterHistoryDayLimit | N/A |
| ClusterPullConnectionFactory | N/A |
| DefaultPreserveOrdering | N/A |
| RecoverDeliveriesTimeout | N/A |
| EnableMessageCounters | message-counter-enabled |
| SuckerPassword | cluster-password |
| SuckerConnectionRetryTimes | bridges.reconnect-attempts |
| SuckerConnectionRetryInterval | bridges.reconnect-interval |
| StrictTCK | N/A |
| Destinations, MessageCounters, MessageStatistics | N/A - These are part of HornetQ's management functions. Refer to the appropriate chapter for details. |
| SupportsFailover | N/A |
| PersistenceManager | N/A - HornetQ uses its built-in high-performance journal as its persistence utility. |
| JMSUserManager | N/A |
| SecurityStore | N/A - The security manager is configured in hornetq-beans.xml or hornetq-jboss-beans.xml. |

2.6. Migrating JMS-administered Objects and Bridges

JBOSS_DIST/jboss-as/server/<PROFILE>/deploy/hornetq/hornetq-jms.xml. Appendix A, Configuration Reference contains all supported directives for hornetq-jms.xml.

Table 2.3. JMS Connection Factory Configuration Mappings

| JBoss Messaging ConnectionFactory Attributes | HornetQ JMS ConnectionFactory Attributes |
|---|---|
| ClientID | connection-factory.client-id |
| JNDIBindings | connection-factory.entries |
| PrefetchSize | connection-factory.consumer-window-size |
| SlowConsumers | N/A - equivalent to consumer-window-size=0 |
| StrictTck | N/A |
| SendAcksAsync | connection-factory.block-on-acknowledge |
| DefaultTempQueueFullSize, DefaultTempQueuePageSize, DefaultTempQueueDownCacheSize | N/A |
| DupsOKBatchSize | connection-factory.dups-ok-batch-size |
| SupportsLoadBalancing | N/A |
| SupportsFailover | N/A |
| DisableRemotingChecks | N/A |
| LoadBalancingFactory | connection-factory.connection-load-balancing-policy-class-name |
| Connector | connection-factory.connectors |
| EnableOrderingGroup, DefaultOrderingGroup | N/A |

<JBOSS_HOME>/jboss-as/server/<PROFILE>/deploy/hornetq/hornetq-configuration.xml. If not specified in hornetq-configuration.xml, they are specified in JBOSS_DIST/jboss-as/server/<PROFILE>/deploy/hornetq/hornetq-jms.xml.

Table 2.4. JMS Queue Configuration Mappings

| JBoss Messaging Queue Attributes | HornetQ JMS Queue Attributes |
|---|---|
| Name | queue.name - defined in hornetq-jms.xml |
| JNDIName | queue.entry - defined in hornetq-jms.xml |
| DLQ | address-settings.dead-letter-address |
| ExpiryQueue | address-settings.expiry-address |
| RedeliveryDelay | address-settings.redelivery-delay |
| MaxDeliveryAttempts | address-settings.max-delivery-attempts |
| SecurityConfig | security-settings |
| FullSize | address-settings.max-size-bytes - HornetQ paging attributes do not exactly match JBoss Messaging paging attributes. Refer to the appropriate chapter for details. |
| PageSize | address-settings.page-size-bytes - HornetQ paging attributes do not exactly match JBoss Messaging paging attributes. Refer to the appropriate chapter for details. |
| DownCacheSize | Not Supported |
| CreatedProgrammatically | Refer to org.hornetq.api.jms.management.JMSQueueControl to retrieve this attribute. |
| MessageCount | Refer to org.hornetq.api.jms.management.JMSQueueControl to retrieve this attribute. |
| ScheduledMessageCount | Refer to org.hornetq.api.jms.management.JMSQueueControl to retrieve this attribute. |
| MessageCounter | Refer to org.hornetq.api.jms.management.JMSQueueControl to retrieve this attribute. |
| MessageCounterStatistics | Refer to org.hornetq.api.jms.management.JMSQueueControl to retrieve this attribute. |
| ConsumerCount | Refer to org.hornetq.api.jms.management.JMSQueueControl to retrieve this attribute. |
| DropOldMessageOnRedeploy | Not Supported |
| MaxSize | Not Supported |
| Clustered | Not Supported |

<JBOSS_HOME>/jboss-as/server/<PROFILE>/deploy/hornetq/hornetq-configuration.xml describes how JBoss Messaging Topic attributes map to HornetQ JMS Topic attributes. Unless otherwise specified, these attributes are defined in JBOSS_DIST/jboss-as/server/<PROFILE>/deploy/hornetq/hornetq-jms.xml.

Table 2.5. JMS Topic Configuration Mappings

| JBoss Messaging Topic Attributes | HornetQ JMS Topic Attributes |
|---|---|
| Name | topic.name - defined in hornetq-jms.xml |
| JNDIName | topic.entry - defined in hornetq-jms.xml |
| DLQ | address-settings.dead-letter-address |
| ExpiryQueue | address-settings.expiry-address |
| RedeliveryDelay | address-settings.redelivery-delay |
| MaxDeliveryAttempts | address-settings.max-delivery-attempts |
| SecurityConfig | security-settings |
| FullSize | address-settings.max-size-bytes - HornetQ paging attributes do not exactly match JBoss Messaging paging attributes. Refer to the appropriate chapter for details. |
| PageSize | address-settings.page-size-bytes - HornetQ paging attributes do not exactly match JBoss Messaging paging attributes. Refer to the appropriate chapter for details. |
| DownCacheSize | N/A |
| CreatedProgrammatically | Refer to org.hornetq.api.jms.management.TopicControl to retrieve this attribute. |
| MessageCounterHistoryDayLimit | Refer to org.hornetq.api.jms.management.TopicControl to retrieve this attribute. |
| MessageCounters | Refer to org.hornetq.api.jms.management.TopicControl to retrieve this attribute. |
| AllMessageCount | Refer to org.hornetq.api.jms.management.TopicControl to retrieve this attribute. |
| DurableMessageCount | Refer to org.hornetq.api.jms.management.TopicControl to retrieve this attribute. |
| NonDurableMessageCount | Refer to org.hornetq.api.jms.management.TopicControl to retrieve this attribute. |
| AllSubscriptionsCount | Refer to org.hornetq.api.jms.management.TopicControl to retrieve this attribute. |
| DurableSubscriptionsCount | Refer to org.hornetq.api.jms.management.TopicControl to retrieve this attribute. |
| NonDurableSubscriptionsCount | Refer to org.hornetq.api.jms.management.TopicControl to retrieve this attribute. |
| MaxSize | N/A |
| Clustered | N/A |
| DropOldMessageOnRedeploy | N/A |

Table 2.6. JMS Bridge Configuration Mappings

| JBoss Messaging Bridge Attributes | HornetQ JMS Bridge Attributes |
|---|---|
| SourceProviderLoader | SourceCFF |
| TargetProviderLoader | TargetCFF |
| SourceDestinationLookup | SourceDestinationFactory |
| TargetDestinationLookup | TargetDestinationFactory |
| SourceUsername | Source user name parameter |
| SourcePassword | Source user password parameter |
| TargetUsername | Target user name parameter |
| TargetPassword | Target password parameter |
| QualityOfServiceMode | Quality of Service parameter |
| Selector | Selector parameter |
| MaxBatchSize | Max batch size parameter |
| MaxBatchTime | Max batch time parameter |
| SubName | Subscription name parameter |
| ClientID | Client ID parameter |
| FailureRetryInterval | Failure retry interval parameter |
| MaxRetries | Max retry times parameter |
| AddMessageIDInHeader | Add Message ID in Header parameter |

2.7. Other Configuration in JBoss Messaging

2.8. Migrating Existing Messages

2.9. Applications that use management APIs

- JMX

- JMX is the standard method of managing Java applications.

- Core API

- Management operations are sent to the HornetQ server using Core messages.

- JMS API

- Management operations are sent to the HornetQ server using JMS messages.

Table 2.7. JBoss Messaging and HornetQ Management Object API Mappings

| org.jboss.jms.server. Class | org.hornetq.api.jms.management. Class |
|---|---|
| ServerPeer | JMSServerControl |
| connectionfactory.ConnectionFactory | ConnectionFactoryControl |
| destination.QueueService | JMSQueueControl |
| destination.TopicService | TopicControl |

Note

JDBCPersistenceManagerService because HornetQ does not require a datasource as JBoss Messaging does.

Chapter 3. Messaging Concepts

3.1. Messaging Concepts

3.2. Messaging styles

3.2.1. The Point-To-Point Pattern

3.2.2. The Publish-Subscribe Pattern

3.3. Delivery guarantees

3.4. Transactions

XA: JTA.

3.5. Durability

3.6. Messaging APIs and protocols

3.6.1. Java Message Service (JMS)

3.6.2. System specific APIs

3.7. High Availability

3.8. Clusters

3.9. Bridges and routing

Chapter 4. Core Architecture

netty.jar. This is because some of the netty buffer classes are used internally.

- Core Client API

- This is a simple intuitive Java API that allows the full set of messaging functionality without some of the complexities of JMS.

- JMS Client API

- The standard JMS API is available at the client side.

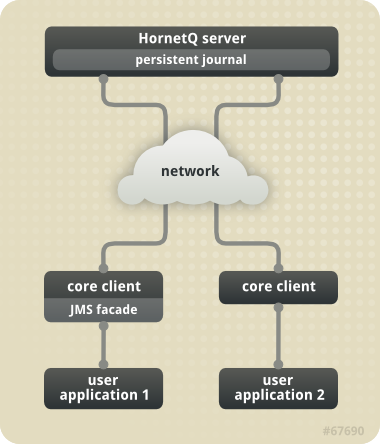

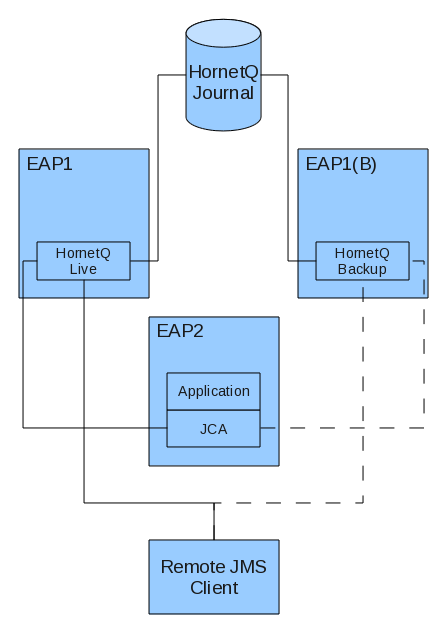

Figure 4.1. HornetQ Application Interaction Schematic

Chapter 5. Using the Server

5.1. Library Path

java.library.path as a property on your Java options. This is done automatically in the run.sh script.

java.library.path in your Java options, then the JVM will use the environment variable LD_LIBRARY_PATH.

5.2. System properties

5.3. Configuration files

Files located in /deploy/hornetq/

- hornetq-configuration.xml

- This is the main HornetQ configuration file. All the parameters in this file are described in Appendix A, Configuration Reference. Refer to Section 5.4, “The Main Configuration File” for more information on this file.

Note

The property file-deployment-enabled in the hornetq-configuration.xml configuration, when set to false, means that the other configuration files are not loaded. By default, this is set to true.

- hornetq-jboss-beans.xml

- This is the JBoss Microcontainer beans file which defines what beans the Microcontainer should create and what dependencies to enforce between them.

- hornetq-jms.xml

- The distribution configuration by default includes a server side JMS service which mainly deploys JMS Queues, Topics and Connection Factories from this file into JNDI. If you are not using JMS, or you do not need to deploy JMS objects on the server side, then you do not need this file. For more information on using JMS, refer to Chapter 6, Using JMS.

Files located in /conf/props/

- hornetq-users.properties

- HornetQ ships with a security manager implementation that obtains user credentials from the hornetq-users.properties file. This file contains user and password information. For more information on security, refer to Chapter 29, Security.

- hornetq-roles.properties

- This file contains user names defined in hornetq-users.properties with the roles they have permission to use. For more information on security, refer to Chapter 29, Security.

<connector name="netty">

<factory-class>org.hornetq.core.remoting.impl.netty.NettyConnectorFactory</factory-class>

<param key="host" value="${hornetq.remoting.netty.host:localhost}" type="String"/>

<param key="port" value="${hornetq.remoting.netty.port:5445}" type="Integer"/>

</connector>

hornetq.remoting.netty.host and hornetq.remoting.netty.port. These values will be replaced by the value found in the system property if there is one. If not, they default to localhost and 5445 respectively. It is also possible to omit the default, as in ${hornetq.remoting.netty.host}; in that case, however, the system property must be supplied.
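The substitution rule described above can be sketched in plain Java. This is a minimal illustration of the `${name:default}` behavior only, not HornetQ's own parser; the class name `PlaceholderSketch` and the method `resolve` are hypothetical names chosen for this example.

```java
import java.util.Properties;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Illustrative resolver for ${name} and ${name:default} placeholders (not HornetQ code). */
final class PlaceholderSketch {

    // Group 1 is the property name, group 2 the optional default after the colon.
    private static final Pattern PLACEHOLDER =
            Pattern.compile("\\$\\{([^:}]+)(?::([^}]*))?\\}");

    /** Replaces each placeholder with the property value, or with its default if the property is unset. */
    static String resolve(String text, Properties props) {
        Matcher m = PLACEHOLDER.matcher(text);
        StringBuffer out = new StringBuffer();
        while (m.find()) {
            // getProperty returns the second argument (possibly null) when the key is absent.
            String value = props.getProperty(m.group(1), m.group(2));
            if (value == null) {
                // No system property and no default: the value cannot be resolved.
                throw new IllegalArgumentException("No value or default for " + m.group(1));
            }
            m.appendReplacement(out, Matcher.quoteReplacement(value));
        }
        m.appendTail(out);
        return out.toString();
    }

    public static void main(String[] args) {
        Properties props = new Properties();
        props.setProperty("hornetq.remoting.netty.port", "5446");
        // The host property is unset, so its default is used; the port property overrides its default.
        System.out.println(resolve("${hornetq.remoting.netty.host:localhost}", props));
        System.out.println(resolve("${hornetq.remoting.netty.port:5445}", props));
    }
}
```

In this sketch a placeholder without a default raises an exception when the property is missing, which mirrors the requirement that the system property must be supplied in that case.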

5.4. The Main Configuration File

<JBOSS_HOME>/jboss-as/server/<PROFILE>/deploy/hornetq/hornetq-configuration.xml. This is what the FileConfiguration bean uses to configure the messaging server.

Chapter 6. Using JMS

6.1. A Simple Ordering System - Configuration Example

OrderQueue, with a single MessageProducer sending an order message to the queue. A single MessageConsumer consumes the order message from the queue.

durable, (it will survive a server restart or crash).

6.1.1. JMS Server Configuration

hornetq-jms.xml on the server classpath (in standard configurations, JBOSS_DIST/jboss-as/server/<PROFILE>/deploy/hornetq/hornetq-jms.xml) contains any JMS queue, topic and ConnectionFactory instances that we wish to create and make available to lookup via the JNDI.

<configuration xmlns="urn:hornetq" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="urn:hornetq ../schemas/hornetq-jms.xsd ">

<connection-factory name="NettyConnectionFactory">

<connectors>

<connector-ref connector-name="netty"/>

</connectors>

<entries>

<entry name="/ConnectionFactory"/>

</entries>

</connection-factory>

<queue name="OrderQueue">

<entry name="queues/OrderQueue"/>

</queue>

</configuration>

ConnectionFactory is deployed and bound in just one place in JNDI as given by the entry element. ConnectionFactory instances can be bound in many places in JNDI if it is required.

Note

connector called netty. This is a reference to a connector object deployed in the main core configuration file <JBOSS_HOME>/jboss-as/server/<PROFILE>/deploy/hornetq/hornetq-configuration.xml, which defines the transport and parameters used to actually connect to the server.

6.1.2. Connection Factory Types

signature attribute and an xa parameter, the combination of which determines the type of the factory.

signature has three possible string values (generic, queue and topic).

xa is a boolean type parameter. The following table gives their configuration values for different connection factory interfaces.

Table 6.1. Configuration for Connection Factory Types

| signature | xa | Connection Factory Type |
|---|---|---|
| generic (default) | false (default) | javax.jms.ConnectionFactory |
| generic | true | javax.jms.XAConnectionFactory |
| queue | false | javax.jms.QueueConnectionFactory |
| queue | true | javax.jms.XAQueueConnectionFactory |
| topic | false | javax.jms.TopicConnectionFactory |
| topic | true | javax.jms.XATopicConnectionFactory |
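The table can also be read as a simple lookup from the two settings to an interface name. The following is a minimal sketch of that lookup; the class `ConnectionFactoryTypeSketch` and its method are hypothetical and return only the interface name as a string, so no JMS library is needed to run it.

```java
/** Illustrative lookup of the connection factory interface for a signature/xa pair (not HornetQ code). */
final class ConnectionFactoryTypeSketch {

    /** Returns the javax.jms interface name that Table 6.1 lists for the given settings. */
    static String interfaceFor(String signature, boolean xa) {
        String prefix = xa ? "XA" : "";
        switch (signature) {
            case "generic":
                return "javax.jms." + prefix + "ConnectionFactory";
            case "queue":
                return "javax.jms." + prefix + "QueueConnectionFactory";
            case "topic":
                return "javax.jms." + prefix + "TopicConnectionFactory";
            default:
                throw new IllegalArgumentException("Unknown signature: " + signature);
        }
    }

    public static void main(String[] args) {
        // Defaults from the table: signature "generic", xa false.
        System.out.println(interfaceFor("generic", false));
        System.out.println(interfaceFor("queue", true));
    }
}
```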

<configuration xmlns="urn:hornetq"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="urn:hornetq ../schemas/hornetq-jms.xsd ">

<connection-factory name="ConnectionFactory" signature="queue">

<xa>true</xa>

<connectors>

<connector-ref connector-name="netty"/>

</connectors>

<entries>

<entry name="/ConnectionFactory"/>

</entries>

</connection-factory>

</configuration>

6.1.3. The code

InitialContext ic = new InitialContext();

ConnectionFactory cf = (ConnectionFactory)ic.lookup("/ConnectionFactory");

Queue orderQueue = (Queue)ic.lookup("/queues/OrderQueue");

Connection connection = cf.createConnection();

Session session = connection.createSession(false, Session.AUTO_ACKNOWLEDGE);

MessageProducer producer = session.createProducer(orderQueue);

MessageConsumer consumer = session.createConsumer(orderQueue);

connection.start();

TextMessage message = session.createTextMessage("This is an order");

producer.send(message);

TextMessage receivedMessage = (TextMessage)consumer.receive();

System.out.println("Got order: " + receivedMessage.getText());

Warning

6.2. Directly Instantiating JMS Resources Without Using JNDI

TransportConfiguration transportConfiguration = new TransportConfiguration(NettyConnectorFactory.class.getName());

ConnectionFactory cf = HornetQJMSClient.createConnectionFactory(transportConfiguration);

Queue orderQueue = HornetQJMSClient.createQueue("OrderQueue");

Connection connection = cf.createConnection();

Session session = connection.createSession(false, Session.AUTO_ACKNOWLEDGE);

MessageProducer producer = session.createProducer(orderQueue);

MessageConsumer consumer = session.createConsumer(orderQueue);

connection.start();

TextMessage message = session.createTextMessage("This is an order");

producer.send(message);

TextMessage receivedMessage = (TextMessage)consumer.receive();

System.out.println("Got order: " + receivedMessage.getText());

6.3. Setting The Client ID

JBOSS_DIST/jboss-as/server/<PROFILE>/deploy/hornetq/hornetq-jms.xml, and can be set via the <client-id> directive. Any connection created by this connection factory will have this set as its client ID.

6.4. Setting The Batch Size for DUPS_OK

DUPS_OK it is possible to configure the consumer so that it sends acknowledgments in batches rather than one at a time, saving valuable bandwidth. This is configured on the connection factory using the dups-ok-batch-size element and is set in bytes. The default is 1024 * 1024 bytes = 1 MiB (mebibyte).
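To make the idea of a byte-based acknowledgment batch concrete, here is a minimal sketch. It is an assumption about how such batching could work in principle, not a description of HornetQ's internal logic; the class `DupsOkBatchSketch` and all of its members are hypothetical.

```java
/** Illustrative byte-based acknowledgment batcher (hypothetical, not HornetQ code). */
final class DupsOkBatchSketch {

    // Matches the documented default for dups-ok-batch-size: 1024 * 1024 bytes.
    static final int DEFAULT_BATCH_SIZE_BYTES = 1024 * 1024;

    private final int batchSizeBytes;
    private int pendingBytes;
    private int pendingMessages;
    private int flushCount;

    DupsOkBatchSketch(int batchSizeBytes) {
        this.batchSizeBytes = batchSizeBytes;
    }

    /** Records a delivered message; returns true if this delivery caused a batch of acknowledgments to be sent. */
    boolean onMessageDelivered(int messageSizeBytes) {
        pendingBytes += messageSizeBytes;
        pendingMessages++;
        if (pendingBytes >= batchSizeBytes) {
            flush();
            return true;
        }
        return false;
    }

    /** Sends all pending acknowledgments in one go (here: just counts the flush). */
    void flush() {
        if (pendingMessages == 0) {
            return;
        }
        flushCount++;
        pendingBytes = 0;
        pendingMessages = 0;
    }

    int flushCount() {
        return flushCount;
    }

    public static void main(String[] args) {
        DupsOkBatchSketch batch = new DupsOkBatchSketch(DEFAULT_BATCH_SIZE_BYTES);
        for (int i = 0; i < 5; i++) {
            // Five messages of 256 KiB each: the fourth one fills the 1 MiB batch.
            batch.onMessageDelivered(256 * 1024);
        }
        System.out.println("Batches sent: " + batch.flushCount());
    }
}
```

The trade-off illustrated here is the usual one for DUPS_OK: fewer acknowledgment round trips in exchange for the possibility that unacknowledged messages are redelivered after a failure.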

6.5. Setting The Transaction Batch Size

transaction-batch-size element. The default is 1024 * 1024 (bytes).

Chapter 7. Using Core

7.1. Core Messaging Concepts

7.1.1. Message

- A message is the unit of data that is sent between clients and servers.

- A message has a body which is a buffer containing convenient methods for reading and writing data into it.

- A message has a set of properties which are key-value pairs. Each property key is a string and property values can be of type integer, long, short, byte, byte[], String, double, float or boolean.

- A message has an address it is being sent to. When the message arrives on the server it is routed to any queues that are bound to the address - if the queues are bound with any filter, the message will only be routed to that queue if the filter matches. An address may have many queues bound to it or none. There may also be entities other than queues, like diverts bound to addresses.

- Messages can be either durable or non durable. Durable messages in a durable queue will survive a server crash or restart. Non durable messages will not survive a server crash or restart.

- Messages can be specified with a priority value between 0 and 9. 0 represents the lowest priority and 9 represents the highest. HornetQ will attempt to deliver higher priority messages before lower priority ones.

- Messages can be specified with an optional expiry time. HornetQ will not deliver a message after its expiry time has been exceeded.

- Messages have an optional time stamp which represents the time the message was sent.

- HornetQ also supports the sending or consuming of very large messages - much larger than can fit in available RAM at any one time.

7.1.2. Address

Note

7.1.3. Queue

7.1.4. ClientSessionFactory

ClientSessionFactory instances to create ClientSession instances. ClientSessionFactory instances know how to connect to the server to create sessions, and are configurable with many settings.

ClientSessionFactory instances are created using the HornetQClient factory class.

7.1.5. ClientSession

XAResource interface so messaging operations can be performed as part of a JTA transaction.

SendAcknowledgementHandler. This allows your client code to be notified asynchronously when sent messages have successfully reached the server. This feature ensures sent messages have reached the server without having to block on each message sent until a response is received.

7.1.6. ClientConsumer

ClientConsumer instances to consume messages from a queue. Core Messaging supports both synchronous and asynchronous message consumption semantics. ClientConsumer instances can be configured with an optional filter expression and will only consume messages which match that expression.

7.1.7. ClientProducer

ClientProducer instances on ClientSession instances so they can send messages. ClientProducer instances can specify an address to which all sent messages are routed, or they can have no specified address, and the address is specified at send time for the message.

Warning

7.2. Simple Core Example

ClientSessionFactory factory = HornetQClient.createClientSessionFactory(

new TransportConfiguration(

InVMConnectorFactory.class.getName()));

ClientSession session = factory.createSession();

session.createQueue("example", "example", true);

ClientProducer producer = session.createProducer("example");

ClientMessage message = session.createMessage(true);

message.getBodyBuffer().writeString("Hello");

producer.send(message);

session.start();

ClientConsumer consumer = session.createConsumer("example");

ClientMessage msgReceived = consumer.receive();

System.out.println("message = " + msgReceived.getBodyBuffer().readString());

session.close();

Chapter 8. Mapping JMS Concepts to the Core API

jms.queue. prepended to it. For example, the JMS Queue with the name "orders.europe" would map to the core queue with the name "jms.queue.orders.europe". The address at which the core queue is bound is also given by the core queue name.
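The naming rule for queues can be sketched as a pair of helper methods. This is only an illustration of the prefix convention stated above; the class `JmsCoreNameSketch` and its methods are hypothetical and are not part of the HornetQ API.

```java
/** Illustrative mapping between JMS queue names and core queue names (not HornetQ code). */
final class JmsCoreNameSketch {

    // Prefix that the text above says is prepended to the JMS queue name.
    static final String JMS_QUEUE_PREFIX = "jms.queue.";

    /** Returns the core queue name (and address) for a JMS queue name. */
    static String coreQueueName(String jmsQueueName) {
        return JMS_QUEUE_PREFIX + jmsQueueName;
    }

    /** Returns the JMS queue name for a core queue name that carries the JMS queue prefix. */
    static String jmsQueueName(String coreQueueName) {
        if (!coreQueueName.startsWith(JMS_QUEUE_PREFIX)) {
            throw new IllegalArgumentException("Not a JMS queue address: " + coreQueueName);
        }
        return coreQueueName.substring(JMS_QUEUE_PREFIX.length());
    }

    public static void main(String[] args) {
        System.out.println(coreQueueName("orders.europe"));
        System.out.println(jmsQueueName("jms.queue.orders.europe"));
    }
}
```

This is why address settings for a JMS queue are matched against the prefixed core name rather than the plain JMS name.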

<!-- expired messages in JMS Queue "orders.europe"

will be sent to the JMS Queue "expiry.europe" -->

<address-setting match="jms.queue.orders.europe">

<expiry-address>jms.queue.expiry.europe</expiry-address>

...

</address-setting>

Chapter 9. The Client Classpath

Warning

$JBOSS_HOME/client directory of the HornetQ distribution. Use only the jars from the correct version of the release; you must not mix and match jars from different HornetQ versions. Mixing and matching different jar versions may cause subtle errors and failures.

9.1. HornetQ Core Client

hornetq-core-client.jar and netty.jar on your client classpath.

9.2. JMS Client

hornetq-jms-client.jar and jboss-javaee.jar.

Note

jboss-javaee.jar only contains Java EE API interface classes needed for the javax.jms.* classes. If you already have a jar with these interface classes on your classpath, you will not need it.

9.3. JMS Client with JNDI

jnp-client.jar on your client classpath as well as any other jars mentioned previously.

Chapter 10. Routing Messages With Wild Cards

queue.news.#, for example, then it will receive any messages sent to addresses that match this. Take these, for example: queue.news.europe or queue.news.usa or queue.news.usa.sport. If you create a consumer on this queue, this allows a consumer to consume messages which are sent to a hierarchy of addresses.

Note

wild-card-routing-enabled in the <JBOSS_HOME>/jboss-as/server/<PROFILE>/deploy/hornetq/hornetq-configuration.xml file to true. This is true by default.

Chapter 11. Understanding the HornetQ Wildcard Syntax

.' (full stop).

#' and '*' also have special meaning and can take the place of a word.

#' means "match any sequence of zero or more words".

*' means "match a single word".
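The wildcard rules above can be sketched as a small matcher. This is a minimal illustration of the stated semantics (words separated by '.', '#' for zero or more words, '*' for exactly one word), not HornetQ's own implementation; the class `WildcardSketch` and its methods are hypothetical.

```java
/** Illustrative matcher for '.'-delimited addresses with '#' and '*' wildcards (not HornetQ code). */
final class WildcardSketch {

    /** Returns true if the address matches the wildcard pattern. */
    static boolean matches(String pattern, String address) {
        return matches(pattern.split("\\."), 0, address.split("\\."), 0);
    }

    private static boolean matches(String[] p, int pi, String[] a, int ai) {
        if (pi == p.length) {
            // Pattern exhausted: match only if the address is exhausted too.
            return ai == a.length;
        }
        String word = p[pi];
        if (word.equals("#")) {
            // '#' may absorb zero or more address words; try every possible split.
            for (int next = ai; next <= a.length; next++) {
                if (matches(p, pi + 1, a, next)) {
                    return true;
                }
            }
            return false;
        }
        if (ai == a.length) {
            // A literal word or '*' needs one address word, but none is left.
            return false;
        }
        if (word.equals("*") || word.equals(a[ai])) {
            return matches(p, pi + 1, a, ai + 1);
        }
        return false;
    }

    public static void main(String[] args) {
        System.out.println(matches("queue.news.#", "queue.news.usa.sport"));
        System.out.println(matches("queue.news.*", "queue.news.usa.sport"));
    }
}
```

Under these rules, `queue.news.#` accepts `queue.news.usa.sport` because '#' spans two words, whereas `queue.news.*` rejects it because '*' stands for a single word only.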

Chapter 12. Filter Expressions

- Predefined Queues. When pre-defining a queue, either in <JBOSS_HOME>/jboss-as/server/<PROFILE>/deploy/hornetq/hornetq-configuration.xml or JBOSS_DIST/jboss-as/server/<PROFILE>/deploy/hornetq/hornetq-jms.xml, a filter expression can be defined for a queue. Only messages that match the filter expression will enter the queue.

- Core bridges can be defined with an optional filter expression. Only matching messages will be bridged (see Chapter 34, Core Bridges).

- Diverts can be defined with an optional filter expression. Only matching messages will be diverted (see Chapter 33, Diverting and Splitting Message Flows).

- Filters are also used programmatically when creating consumers, queues and in several places as described in Chapter 28, Management.

- HQPriority

- To refer to the priority of a message. Message priorities are integers with valid values from 0 - 9. 0 is the lowest priority and 9 is the highest. For example, HQPriority = 3 and department = 'payroll'. This refers to a message with a priority of three and a department of 'payroll'.

- HQExpiration

- To refer to the expiration time of a message. The value is a long integer.

- HQDurable

- To refer to whether a message is durable or not. The value is a string with valid values: DURABLE or NON_DURABLE.

- HQTimestamp

- The time stamp of when the message was created. The value is a long integer.

- HQSize

- The size of a message in bytes. The value is an integer.

Chapter 13. Persistence

- Java Non-blocking IO (NIO)

- Uses standard Java NIO to interface with the file system. This provides extremely good performance and runs on any platform with a Java 6 or later runtime.

- Linux Asynchronous IO (AIO)

- Uses a native code wrapper to talk to the Linux asynchronous IO library (AIO). With AIO, HornetQ receives a message when data has been persisted. This removes the need for explicit syncs. AIO will typically provide better performance than Java NIO, but requires Linux kernel 2.6 or later and libaio.

AIO also requires ext2, ext3, ext4, jfs or xfs type file systems. On NFS, AIO falls back to slower, synchronous behavior.

Note

On Red Hat Enterprise Linux, install libaio with the following command:

yum install libaio

- bindings journal

- Stores bindings-related data, including the set of queues deployed on the server and their attributes. It also stores data such as ID sequence counters. The bindings journal is always a NIO journal, as it typically has low throughput in comparison to the message journal.

The files on this journal are prefixed as hornetq-bindings. Each file has a bindings extension. File size is 1048576 bytes, and it is located in the bindings folder.

- JMS journal

- Stores all JMS-related data, for example, any JMS queues, topics or connection factories and any JNDI bindings for these resources. Any JMS resources created with the management API are persisted to this journal. Any resources configured with configuration files are not. This journal is created only if JMS is in use.

- message journal

- Stores all message-related data, including messages themselves and duplicate-id caches. By default, HornetQ uses AIO for this journal. If AIO is not available, it will automatically fall back to NIO.

13.1. Configuring the bindings journal

<JBOSS_HOME>/jboss-as/server/<PROFILE>/deploy/hornetq/hornetq-configuration.xml.

- bindings-directory

- The location of the bindings journal. The default value is data/bindings.

- create-bindings-dir

- If true, and the bindings directory does not exist, the bindings directory is created automatically at the location specified in bindings-directory. The default value is true.

13.2. Configuring the JMS journal

13.3. Configuring the message journal

<JBOSS_HOME>/jboss-as/server/<PROFILE>/deploy/hornetq/hornetq-configuration.xml.

- journal-directory

- The location of the message journal. The default value is data/journal. For best performance, this journal should be located on its own physical volume to minimize disk head movement. If this journal is stored on a storage area network, each journal instance on the network should have its own logical unit.

- create-journal-dir

- If true, the journal directory is created at the location specified in journal-directory. The default value is true.

- journal-type

- Valid values are NIO or ASYNCIO. If NIO, the Java NIO journal is used. If ASYNCIO, Linux asynchronous IO is used. If ASYNCIO is set on a non-Linux or non-libaio system, HornetQ detects this and falls back to NIO.

- journal-sync-transactional

- If true, HornetQ ensures all transaction data is flushed to disk on transaction boundaries (commit, prepare, and rollback). The default is true.

- journal-sync-non-transactional

- If true, HornetQ ensures non-transactional message data (sends and acknowledgments) is flushed to disk. The default is true.

- journal-file-size

- The size of each journal file in bytes. The default value is 10485760 bytes (10 megabytes).

- journal-min-files

- The minimum number of files the journal maintains. When HornetQ starts and there is no initial data, HornetQ pre-creates this number of files. Creating and padding journal files is an expensive operation, so it is best avoided at run time as files are filled. Pre-creating files means that as one is filled the journal can immediately resume with the next file without pausing to create it.

- journal-max-io

- The maximum number of write requests to hold in the IO queue. Write requests are queued here before being submitted to the system for execution. If the queue fills, writes are blocked until space becomes available in the queue. For NIO, this must be 1. For AIO, this should be 500. A different default value is maintained depending on whether NIO or AIO is used (1 for NIO, 500 for AIO). The total max AIO must not be higher than what is configured at the operating system level (/proc/sys/fs/aio-max-nr), generally at 65536.

- journal-buffer-timeout

- HornetQ maintains a buffer of flush requests, and flushes the entire buffer either when it is full or when this timeout expires, whichever comes first. This is used for both NIO and AIO and allows improved scaling when many concurrent writes and flushes are required.

- journal-buffer-size

- The size of the timed buffer on AIO. The default value is 490 kilobytes.

- journal-compact-min-files

- The minimum number of files before the journal will be compacted. The default value is 10.

- journal-compact-percentage

- When less than this percentage of a journal is considered live data, compacting will occur. The default value is 30. journal-compact-min-files must also be fulfilled before compacting.
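The interaction between journal-compact-min-files and journal-compact-percentage can be sketched as a single decision rule. This is an illustration of the two conditions as described above, not HornetQ's internal code; the class `JournalCompactionSketch`, its method, and the way file counts and live bytes are passed in are assumptions made for the example.

```java
/** Illustrative compaction decision based on the two documented thresholds (not HornetQ code). */
final class JournalCompactionSketch {

    // Documented defaults for journal-compact-min-files and journal-compact-percentage.
    static final int DEFAULT_COMPACT_MIN_FILES = 10;
    static final int DEFAULT_COMPACT_PERCENTAGE = 30;

    /**
     * Returns true when both conditions hold: the journal has at least minFiles files,
     * and live data makes up less than the given percentage of the journal.
     */
    static boolean shouldCompact(int journalFiles, long liveBytes, long totalBytes,
                                 int minFiles, int percentage) {
        if (journalFiles < minFiles || totalBytes <= 0) {
            return false;
        }
        double livePercent = 100.0 * liveBytes / totalBytes;
        return livePercent < percentage;
    }

    public static void main(String[] args) {
        long fileSize = 10485760L; // documented default journal-file-size
        // 12 files, of which roughly two files' worth of data is still live.
        System.out.println(shouldCompact(12, 2 * fileSize, 12 * fileSize,
                DEFAULT_COMPACT_MIN_FILES, DEFAULT_COMPACT_PERCENTAGE));
    }
}
```

Requiring both conditions prevents compaction from running on small journals where reclaiming space would not justify the cost.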

Warning

disabled. This can have negative effects on performance, but ensures data integrity.

13.4. Configuring HornetQ for Zero Persistence

persistence-enabled parameter in <JBOSS_HOME>/jboss-as/server/<PROFILE>/deploy/hornetq/hornetq-configuration.xml to false.

Important

false, no persistence will occur. No bindings data, message data, large message data, duplicate ID caches, or paging data will be persisted.

13.5. Import/Export the Journal Data

hornetq-core.jar, you can export the journal as a text file by using this command:

java -cp hornetq-core.jar org.hornetq.core.journal.impl.ExportJournal <JournalDirectory> <JournalPrefix> <FileExtension> <FileSize> <FileOutput>

netty.jar):

java -cp hornetq-core.jar:netty.jar org.hornetq.core.journal.impl.ImportJournal <JournalDirectory> <JournalPrefix> <FileExtension> <FileSize> <FileInput>

- JournalDirectory: Use the configured folder for your selected folder. Example: ./hornetq/data/journal

- JournalPrefix: Use the prefix for your selected journal, as discussed

- FileExtension: Use the extension for your selected journal, as discussed

- FileSize: Use the size for your selected journal, as discussed

- FileOutput: text file that will contain the exported data

Chapter 14. Configuring the Transport

14.1. Understanding Acceptors

<JBOSS_HOME>/jboss-as/server/<PROFILE>/deploy/hornetq/hornetq-configuration.xml using the following directives.

<acceptor name="netty">
  <factory-class>org.hornetq.core.remoting.impl.netty.NettyAcceptorFactory</factory-class>
  <param key="host" value="${jboss.bind.address:localhost}"/>
  <param key="port" value="${hornetq.remoting.netty.port:5445}"/>
</acceptor>

acceptors element. Multiple acceptors can be defined in one acceptors element. There is no upper limit to the number of acceptors per server.

acceptor that uses Netty to listen for connections on port 5445.

acceptor element contains a sub-element factory-class which defines the factory used to create acceptor instances. In this case Netty is used to listen for connections, so the Netty implementation of AcceptorFactory is being used. The factory-class element determines which pluggable transport listens.

acceptor element can also be configured with zero or more param sub-elements. Each param element defines a key-value pair used to configure the specific transport. The set of valid key-value pairs depends on the transport being used, and the pairs are passed straight through to the underlying transport.

14.2. Understanding Connectors

<JBOSS_HOME>/jboss-as/server/<PROFILE>/deploy/hornetq/hornetq-configuration.xml file:

<connector name="netty">
  <factory-class>org.hornetq.core.remoting.impl.netty.NettyConnectorFactory</factory-class>
  <param key="host" value="${jboss.bind.address:localhost}"/>
  <param key="port" value="${hornetq.remoting.netty.port:5445}"/>
</connector>

- Sometimes the server acts as a client itself when it connects to another server, for example when one server is bridged to another, or when a server takes part in a cluster. In these cases the server needs to know how to connect to other servers. This is defined by connectors.

- If JMS and the server-side JMS service are used to instantiate JMS ConnectionFactory instances and bind them in JNDI, the JMS service needs to know which server the HornetQConnectionFactory will create connections to at the connection factory's creation. This is defined by the <connector-ref> element in the JBOSS_DIST/jboss-as/server/<PROFILE>/deploy/hornetq/hornetq-jms.xml file on the server side. The following snippet from a hornetq-jms.xml file shows a JMS connection factory that references the netty connector defined in the <JBOSS_HOME>/jboss-as/server/<PROFILE>/deploy/hornetq/hornetq-configuration.xml file:

<connection-factory name="NettyConnectionFactory">
  <connectors>
    <connector-ref connector-name="netty"/>
  </connectors>
  <entries>
    <entry name="/ConnectionFactory"/>
    <entry name="/XAConnectionFactory"/>
  </entries>
</connection-factory>

14.3. Configuring the transport directly from the client side

ClientSessionFactory to connect with a server.

ClientSessionFactory to talk to a server. In this case, it is unnecessary to define a connector in the server-side configuration. Instead, create the parameters and configure the connector factory to be used by ClientSessionFactory.

ClientSessionFactory connects directly to the acceptor defined previously in this chapter. It uses the standard Netty TCP transport, and will attempt to connect on port 5446 to localhost (the default).

Map<String, Object> connectionParams = new HashMap<String, Object>();
connectionParams.put(
    org.hornetq.core.remoting.impl.netty.TransportConstants.PORT_PROP_NAME,
    5446);

TransportConfiguration transportConfiguration =
    new TransportConfiguration(
        "org.hornetq.core.remoting.impl.netty.NettyConnectorFactory",
        connectionParams);

ClientSessionFactory sessionFactory =
    HornetQClient.createClientSessionFactory(transportConfiguration);

ClientSession session = sessionFactory.createSession(...);

JBOSS_DIST/jboss-as/server/<PROFILE>/deploy/hornetq/hornetq-jms.xml:

Map<String, Object> connectionParams = new HashMap<String, Object>();
connectionParams.put(
    org.hornetq.core.remoting.impl.netty.TransportConstants.PORT_PROP_NAME,
    5446);

TransportConfiguration transportConfiguration =
    new TransportConfiguration(
        "org.hornetq.core.remoting.impl.netty.NettyConnectorFactory",
        connectionParams);

ConnectionFactory connectionFactory =
    HornetQJMSClient.createConnectionFactory(transportConfiguration);

Connection jmsConnection = connectionFactory.createConnection();

14.4. Configuring the Netty transport

14.4.1. Configuring Netty TCP

Warning

Important

org.hornetq.core.remoting.impl.netty.TransportConstants. Most parameters can be used with acceptors and connectors. Some only work with acceptors. The following parameters can be used to configure Netty for simple TCP:

- use-nio - If this is true, Java non-blocking NIO is used. If set to false, the older blocking Java IO is used. If handling many concurrent connections to the server is a requirement, the non-blocking Java NIO method is highly recommended. Java NIO does not maintain a thread per connection, so it can scale to many more concurrent connections than the older blocking IO method. If handling many concurrent connections is not required, slightly better performance might be gained by using the older blocking IO method. The default value for this property is false on the server side and false on the client side.

- host - The host name or IP address to connect to (for connectors) or listen on (for acceptors). The default value is localhost.

Important

The default for this variable is localhost. This is not accessible from remote nodes and must be modified for the server to accept incoming connections.

Acceptors can be configured with multiple comma-delimited hosts or IP addresses. Multiple addresses are not valid for connectors. 0.0.0.0 specifies that all network interfaces of a host should be accepted.

- port - Specifies the port to connect to (for connectors) or listen on (for acceptors). The default value is 5445.

- tcp-no-delay - If true, Nagle's algorithm is disabled. The default value is true.

- tcp-send-buffer-size - Defines the size of the TCP send buffer in bytes. The default value is 32768 (32 kilobytes). TCP buffer size should be tuned according to the bandwidth and latency of your network. The buffer size in bytes should be equal to the bandwidth in bytes-per-second multiplied by the network round-trip-time (RTT) in seconds. RTT can be measured using the ping utility. For fast networks, you may wish to increase the buffer size from the default value.

- tcp-receive-buffer-size - Defines the size of the TCP receive buffer in bytes. The default value is 32768 (32 kilobytes).

- batch-delay - HornetQ can be configured to place write operations into batches for up to batch-delay milliseconds. This can increase overall throughput for very small messages, but does so at the expense of an increase in average latency for message transfer. The default value is 0 milliseconds.

- direct-deliver - When a message arrives on the server and is delivered to consumers, by default the delivery occurs on a different thread to that in which the message arrived. This gives the best overall throughput and scalability, especially on multi-core machines. However, it also introduces additional latency due to the context switch required. For the lowest latency (and possible reduction of throughput), set direct-deliver to true (the default). For highest throughput, set to false.

- nio-remoting-threads - When configured to use NIO, by default HornetQ uses three times the number of threads as cores (or hyper-threads) reported by Runtime.getRuntime().availableProcessors() to process incoming packets. nio-remoting-threads overrides this and defines the number of threads to use. The default is -1, which represents three times the value from Runtime.getRuntime().availableProcessors().
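The sizing rule given for tcp-send-buffer-size (bandwidth in bytes per second multiplied by RTT in seconds) can be worked through with a small calculation. The following is a minimal sketch; the class and method names are hypothetical, and the link speeds and RTT values are example inputs rather than recommendations.

```java
/** Hypothetical helper for the bandwidth x RTT rule; not part of HornetQ. */
final class TcpBufferSizing {

    /**
     * Buffer size in bytes = bandwidth in bytes per second x RTT in seconds.
     * Bandwidth is supplied in bits per second and RTT in milliseconds, since
     * that is how link speed and ping output are usually reported.
     */
    static long bufferSizeBytes(long bandwidthBitsPerSecond, long rttMillis) {
        long bytesPerSecond = bandwidthBitsPerSecond / 8;
        return bytesPerSecond * rttMillis / 1000;
    }

    public static void main(String[] args) {
        // Example inputs: a 1 Gbit/s link with a 1 ms round trip.
        System.out.println(bufferSizeBytes(1_000_000_000L, 1));
    }
}
```

For these example inputs the result is 125000 bytes, which is larger than the 32768-byte default and illustrates why faster networks may benefit from a larger buffer.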

14.4.2. Configuring Netty SSL

- ssl-enabled - Set to true to enable SSL.

- key-store-path - Defines the path to the SSL key store on the client that holds the client certificates.

- key-store-password - Defines the password for the client certificate key store on the client.

- trust-store-path - Defines the path to the trusted client certificate store on the server.

- trust-store-password - Defines the password to the trusted client certificate store on the server.

14.4.3. Configuring Netty HTTP

- http-enabled - Set to true to enable HTTP.

- http-client-idle-time - The period of time (in milliseconds) a client can be idle before sending an empty HTTP request to keep the connection alive.

- http-client-idle-scan-period - How often, in milliseconds, to scan for idle clients.

- http-response-time - The period of time in milliseconds the server should wait before sending an empty HTTP response to keep the connection alive.

- http-server-scan-period - How often, in milliseconds, to scan for clients requiring responses.

- http-requires-session-id - When true, the client waits to receive a session ID after the first call. This is used when the HTTP connector is connecting to the servlet acceptor (not recommended).

14.4.4. Configuring Netty Servlet

Configuring a servlet engine for the Netty Servlet transport

- Deploy the servlet. A web application using the servlet might have a web.xml file similar to the following:

<?xml version="1.0" encoding="UTF-8"?>
<web-app xmlns="http://java.sun.com/xml/ns/j2ee"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://java.sun.com/xml/ns/j2ee http://java.sun.com/xml/ns/j2ee/web-app_2_4.xsd"
         version="2.4">
  <servlet>
    <servlet-name>HornetQServlet</servlet-name>
    <servlet-class>org.jboss.netty.channel.socket.http.HttpTunnelingServlet</servlet-class>
    <init-param>
      <param-name>endpoint</param-name>
      <param-value>local:org.hornetq</param-value>
    </init-param>
    <load-on-startup>1</load-on-startup>
  </servlet>
  <servlet-mapping>
    <servlet-name>HornetQServlet</servlet-name>
    <url-pattern>/HornetQServlet</url-pattern>
  </servlet-mapping>
</web-app>

- Add the netty-invm acceptor to the server-side configuration in <JBOSS_HOME>/jboss-as/server/<PROFILE>/deploy/hornetq/hornetq-configuration.xml:

<acceptors>
  <acceptor name="netty-invm">
    <factory-class>org.hornetq.core.remoting.impl.netty.NettyAcceptorFactory</factory-class>
    <param key="use-invm" value="true"/>
    <param key="host" value="org.hornetq"/>
  </acceptor>
</acceptors>

- Define a connector for the client in <JBOSS_HOME>/jboss-as/server/<PROFILE>/deploy/hornetq/hornetq-configuration.xml:

<connectors>
  <connector name="netty-servlet">
    <factory-class>org.hornetq.core.remoting.impl.netty.NettyConnectorFactory</factory-class>
    <param key="host" value="localhost"/>
    <param key="port" value="8080"/>
    <param key="use-servlet" value="true"/>
    <param key="servlet-path" value="/messaging/HornetQServlet"/>
  </connector>
</connectors>

Init Parameters

- endpoint - Defines the netty acceptor to which the servlet forwards its packets. Matches the name of the host parameter.

web.xml is the path of the URL that is used. The connector parameter servlet-path on the connector configuration must match this using the application context of the web application if there is one.

<connector name="netty-servlet">
  <factory-class>org.hornetq.core.remoting.impl.netty.NettyConnectorFactory</factory-class>
  <param key="host" value="localhost"/>
  <param key="port" value="8443"/>
  <param key="use-servlet" value="true"/>
  <param key="servlet-path" value="/messaging/HornetQServlet"/>
  <param key="ssl-enabled" value="true"/>
  <param key="key-store-path" value="path to a keystore"/>
  <param key="key-store-password" value="keystore password"/>
</connector>

server/default/deploy/jbossweb.sar/server.xml like so:

<Connector protocol="HTTP/1.1" SSLEnabled="true"
           port="8443" address="${jboss.bind.address}"
           scheme="https" secure="true" clientAuth="false"
           keystoreFile="path to a keystore"
           keystorePass="keystore password" sslProtocol="TLS" />

Chapter 15. Detecting Dead Connections

15.1. Cleaning up Dead Connection Resources on the Server

finally block.

Example 15.1. Well Behaved Core Client Application

ClientSessionFactory sf = null;
ClientSession session = null;

try
{
   sf = HornetQClient.createClientSessionFactory(...);
   session = sf.createSession(...);

   // ... do some stuff with the session ...
}
finally
{
   if (session != null)
   {
      session.close();
   }

   if (sf != null)
   {
      sf.close();
   }
}

Example 15.2. Well Behaved JMS Client Application

Connection jmsConnection = null;

try
{
   ConnectionFactory jmsConnectionFactory = HornetQJMSClient.createConnectionFactory(...);
   jmsConnection = jmsConnectionFactory.createConnection();

   // ... do some stuff with the connection ...
}
finally
{
   // Close the same variable that was assigned in the try block
   if (jmsConnection != null)
   {
      jmsConnection.close();
   }
}

connection TTL is configurable. Each ClientSessionFactory has a defined connection TTL. The TTL determines how long the server will keep a connection alive in the absence of any data arriving from the client.

- On the client side, specify the ConnectionTTL attribute on a HornetQConnectionFactory instance (<JBOSS_HOME>/jboss-as/server/<PROFILE>/deploy/jms-ra.rar/META-INF/ra.xml).

- On the server side, where connection factory instances are being deployed directly into JNDI, specify the connection-ttl parameter for the <connection-factory> directive in the JBOSS_DIST/jboss-as/server/<PROFILE>/deploy/hornetq/hornetq-jms.xml file.

ConnectionTTL is 60000 (milliseconds). A value of -1 for the ConnectionTTL attribute means the server will never time out the connection on the server side.

connection-ttl-override attribute in JBOSS_DIST/jboss-as/server/<PROFILE>/deploy/hornetq/hornetq-jms.xml file. The default value for connection-ttl-override is -1, which allows clients to set their own values for connection TTL.
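The relationship between the client's connection TTL, the server-side override, and the special value -1 can be sketched as follows. This is a simplified model based only on the description above; the class and method names are hypothetical and do not correspond to HornetQ classes.

```java
/** Hypothetical model of the connection TTL rules; not part of HornetQ. */
final class ConnectionTtlCheck {

    /**
     * An override of -1 leaves the client's own TTL in effect; any other
     * override value is assumed to replace the client's value.
     */
    static long effectiveTtl(long clientTtlMillis, long overrideMillis) {
        return overrideMillis != -1 ? overrideMillis : clientTtlMillis;
    }

    /**
     * A TTL of -1 means the connection is never timed out on the server side.
     * Otherwise the connection is considered dead once no data has arrived
     * from the client for longer than the TTL.
     */
    static boolean isExpired(long idleMillis, long ttlMillis) {
        return ttlMillis != -1 && idleMillis > ttlMillis;
    }
}
```

With the default TTL of 60000 and the default override of -1, a connection that has been silent for 61 seconds would be treated as expired in this model.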

15.1.1. Closing core sessions or JMS connections that have failed to close

finally block when they are finished.

finally block, HornetQ will detect this at garbage collection time, and log a warning similar to the following (if you are using JMS, the warning will involve a JMS connection rather than a client session):

[Finalizer] 20:14:43,244 WARNING [org.hornetq.core.client.impl.DelegatingSession] I'm closing a ClientSession you left open. Please make sure you close all ClientSessions explicitly before letting them go out of scope!
[Finalizer] 20:14:43,244 WARNING [org.hornetq.core.client.impl.DelegatingSession] The session you did not close was created here:
java.lang.Exception
    at org.hornetq.core.client.impl.DelegatingSession.<init>(DelegatingSession.java:83)
    at org.acme.yourproject.YourClass (YourClass.java:666)

15.2. Detecting failure from the client side

client-failure-check-period milliseconds then it will consider the connection failed and will either initiate fail-over, or call any SessionFailureListener instances (or ExceptionListener instances if you are using JMS) depending on how it has been configured.

ClientFailureCheckPeriod attribute on a HornetQConnectionFactory instance. If JMS connection factory instances are being deployed directly into JNDI on the server side, it can be specified in the JBOSS_DIST/jboss-as/server/<PROFILE>/deploy/hornetq/hornetq-jms.xml configuration file, using the parameter client-failure-check-period.

30000ms (30 seconds). A value of -1 means the client will never fail the connection on the client side if no data is received from the server. Typically this is much lower than the connection TTL value to allow clients to reconnect in case of transitory failure.

15.3. Configuring Asynchronous Connection Execution

Note

async-connection-execution-enabled in <JBOSS_HOME>/jboss-as/server/<PROFILE>/deploy/hornetq/hornetq-configuration.xml is set to true to enable asynchronous connection execution.

Chapter 16. Resource Manager Configuration

transaction-timeout property in <JBOSS_HOME>/jboss-as/server/<PROFILE>/deploy/hornetq/hornetq-configuration.xml (value must be in milliseconds). The property transaction-timeout-scan-period configures how often, in milliseconds, to scan for old transactions.

Chapter 17. Flow Control

17.1. Consumer Flow Control

receive() method or asynchronously via a message listener. Messages can build up if the consumer cannot process messages as fast as they are being delivered and installed on the internal buffer. This can potentially lead to a lack of memory on the client if they cannot be processed in time.

17.1.1. Window-Based Flow Control

consumer-window-size parameter.

consumer-window-size is set to 1 MiB (1024 * 1024 bytes).

- -1 for an unbounded buffer.

- 0 to not buffer any messages.

- >0 for a buffer with the given maximum size in bytes.

- Fast consumers

- Fast consumers can process messages as fast as they consume them. To allow fast consumers, set the consumer-window-size to -1. This will allow unbounded message buffering on the client side. Use this setting with caution: it can overflow the client memory if the consumer is not able to process messages as fast as it receives them.

- Slow consumers

- Slow consumers take significant time to process each message, and it is desirable to prevent buffering messages on the client side so that they can be delivered to another consumer instead. Consider a situation where a queue has two consumers, one of which is very slow. Messages are delivered in a circular fashion to both consumers; the fast consumer processes all of its messages very quickly until its buffer is empty. At this point there are still messages waiting to be processed in the buffer of the slow consumer, which prevents them being processed by the fast consumer. The fast consumer is therefore sitting idle when it could be processing the other messages. To allow slow consumers, set the consumer-window-size to 0 (for no buffer at all). This will prevent the slow consumer from buffering any messages on the client side. Messages will remain on the server side ready to be consumed by other consumers. Setting this to 0 can give deterministic distribution between multiple consumers on a queue.

consumer-window-size to optimize performance depends on the messaging use case and requires benchmarks to find the optimal value, but a value of 1MiB is fine in most cases.
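The three ranges of consumer-window-size can be illustrated with a small decision function. This is a deliberately simplified model and not how HornetQ implements flow control internally; the class and method names are hypothetical.

```java
/** Hypothetical model of the consumer-window-size values; not part of HornetQ. */
final class ConsumerWindow {

    /**
     * Decides whether one more message may be buffered on the client ahead of
     * processing, given the bytes already buffered.
     *   -1 : unbounded buffer, always accept
     *    0 : no client-side buffering, messages stay on the server
     *   >0 : accept only while the buffer stays within the window
     */
    static boolean canPrefetch(long bufferedBytes, int messageBytes, int consumerWindowSize) {
        if (consumerWindowSize == -1) {
            return true;
        }
        if (consumerWindowSize == 0) {
            return false;
        }
        return bufferedBytes + messageBytes <= consumerWindowSize;
    }
}
```

With a 1 MiB window, for example, a 1024-byte message is accepted into an empty buffer but not into one that already holds 1048000 bytes.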

17.1.1.1. Using Core API

ClientSessionFactory.setConsumerWindowSize() method and some of the ClientSession.createConsumer() methods.

17.1.1.2. Using JMS

JBOSS_DIST/jboss-as/server/<PROFILE>/deploy/hornetq/hornetq-jms.xml:

<connection-factory name="ConnectionFactory">
  <connectors>
    <connector-ref connector-name="netty-connector"/>
  </connectors>
  <entries>
    <entry name="/ConnectionFactory"/>
  </entries>
  <!-- Set the consumer window size to 0 to have *no* buffer on the client side -->
  <consumer-window-size>0</consumer-window-size>
</connection-factory>

HornetQConnectionFactory.setConsumerWindowSize() method.

17.1.2. Rate limited flow control

-1 disables rate limited flow control. The default value is -1.
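As a rough illustration of rate limiting with a maximum rate expressed in messages per second, the sketch below computes the earliest time at which the next message may be handed over. The pacing strategy (even spacing from a start time) is an assumption made for the example, and the class and method names are hypothetical; HornetQ's own algorithm is not shown here.

```java
/** Hypothetical pacing model for a max-rate setting; not part of HornetQ. */
final class MaxRatePacing {

    /**
     * Returns the earliest time (in milliseconds) at which the next message
     * may be delivered, given how many messages have already been delivered
     * since startMillis. A maxRate of -1 disables rate limiting, so no delay
     * is imposed.
     */
    static long earliestDeliveryMillis(long startMillis, long messagesDelivered, int maxRate) {
        if (maxRate == -1) {
            return startMillis;
        }
        return startMillis + messagesDelivered * 1000 / maxRate;
    }
}
```

At a maximum rate of 10 messages per second, the eleventh message (after 10 have been delivered) becomes eligible one second after the start time.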

17.1.2.1. Using Core API

ClientSessionFactory.setConsumerMaxRate(int consumerMaxRate) method or alternatively via some of the ClientSession.createConsumer() methods.

17.1.2.2. Using JMS

JBOSS_DIST/jboss-as/server/<PROFILE>/deploy/hornetq/hornetq-jms.xml:

<connection-factory name="NettyConnectionFactory">
  <connectors>
    <connector-ref connector-name="netty-connector"/>
  </connectors>
  <entries>
    <entry name="/ConnectionFactory"/>
  </entries>
  <!-- We limit consumers created on this connection factory to consume messages at a maximum rate of 10 messages per sec -->
  <consumer-max-rate>10</consumer-max-rate>
</connection-factory>

HornetQConnectionFactory.setConsumerMaxRate(int consumerMaxRate) method.

Note

17.2. Producer flow control

17.2.1. Window based flow control

17.2.1.1. Using Core API

ClientSessionFactory.setProducerWindowSize(int producerWindowSize) method.

17.2.1.2. Using JMS

JBOSS_DIST/jboss-as/server/<PROFILE>/deploy/hornetq/hornetq-jms.xml:

<connection-factory name="NettyConnectionFactory">
  <connectors>
    <connector-ref connector-name="netty-connector"/>
  </connectors>
  <entries>
    <entry name="/ConnectionFactory"/>
  </entries>
  <producer-window-size>10</producer-window-size>
</connection-factory>

HornetQConnectionFactory.setProducerWindowSize(int producerWindowSize) method.

17.2.1.3. Blocking producer window based flow control

max-size-bytes and address-full-policy

max-size-bytes. In the case of JMS topics this means the total memory of all subscriptions in the topic will not exceed max-size-bytes.

<address-settings>
  <address-setting match="jms.queue.exampleQueue">
    <max-size-bytes>100000</max-size-bytes>
    <address-full-policy>PAGE</address-full-policy>
  </address-setting>
</address-settings>

BLOCK to enable blocking producer flow control.

17.2.2. Rate limited flow control

-1 disables rate limited flow control. The default value is -1.

17.2.2.1. Using Core API

ClientSessionFactory.setProducerMaxRate(int producerMaxRate) method or alternatively via some of the ClientSession.createProducer() methods.

17.2.2.2. Using JMS

JBOSS_DIST/jboss-as/server/<PROFILE>/deploy/hornetq/hornetq-jms.xml:

<connection-factory name="NettyConnectionFactory">
  <connectors>
    <connector-ref connector-name="netty-connector"/>
  </connectors>
  <entries>
    <entry name="ConnectionFactory"/>
  </entries>
  <!-- We limit producers created on this connection factory to produce messages at a maximum rate of 10 messages per sec -->
  <producer-max-rate>10</producer-max-rate>
</connection-factory>

HornetQConnectionFactory.setProducerMaxRate(int producerMaxRate) method.

Chapter 18. Guarantees of sends and commits

18.1. Guarantees of Transaction Completion

journal-sync-transactional the server will ensure that the commit or rollback is durably persisted to storage before sending the response back to the client. If this parameter has the value false then commit or rollback may not actually get persisted to storage until some time after the response has been sent to the client. In the event of server failure this may mean the commit or rollback never gets persisted to storage. The default value of this parameter is true so the client can be sure all transaction commits or rollbacks have been persisted to storage by the time the call to commit or rollback returns.

false can improve performance at the expense of some loss of transaction durability.

<JBOSS_HOME>/jboss-as/server/<PROFILE>/deploy/hornetq/hornetq-configuration.xml.

18.2. Guarantees of Non Transactional Message Sends

- BlockOnDurableSend - If this is set to true then all calls to send for durable messages on non-transacted sessions will block until the message has reached the server, and a response has been sent back. The default value is true.

- BlockOnNonDurableSend - If this is set to true then all calls to send for non-durable messages on non-transacted sessions will block until the message has reached the server, and a response has been sent back. The default value is false.

true can reduce performance since each send requires a network round trip before the next send can be performed. This means the performance of sending messages will be limited by the network round trip time (RTT) of your network, rather than the bandwidth of your network. For better performance it is recommended that you either batch many message sends together in a transaction (since with a transactional session only the commit or rollback blocks, not every send), or use the asynchronous send acknowledgments feature described in Section 18.4, “Asynchronous Send Acknowledgments”.
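To make the round-trip cost concrete: if every send must wait for a response, a single producer can complete at most one send per RTT. The following back-of-the-envelope sketch computes that upper bound; the class and method names are hypothetical, and the figure ignores server processing and disk sync time, so real throughput would be lower.

```java
/** Hypothetical arithmetic helper; not part of HornetQ. */
final class BlockingSendThroughput {

    /**
     * Upper bound on blocking sends per second for one producer, given the
     * network round trip time in microseconds.
     */
    static long maxSendsPerSecond(long rttMicros) {
        if (rttMicros <= 0) {
            throw new IllegalArgumentException("rttMicros must be positive");
        }
        return 1_000_000L / rttMicros;
    }
}
```

For example, a 250 microsecond RTT caps a single blocking producer at 4000 sends per second regardless of how much bandwidth is available.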

JBOSS_DIST/jboss-as/server/<PROFILE>/deploy/hornetq/hornetq-jms.xml using the elements block-on-durable-send and block-on-non-durable-send. If you are using JMS but not using JNDI then you can set these values directly on the HornetQConnectionFactory instance using the appropriate setter methods.

ClientSessionFactory instance using the appropriate setter methods.

journal-sync-non-transactional is set to true the server will not send a response back to the client until the message has been persisted and the server has a guarantee that the data has been persisted to disk. The default value for this parameter is true.

18.3. Guarantees of Non-Transactional Acknowledgments

BlockOnAcknowledge. If this is set to true then all calls to acknowledge on non-transacted sessions will block until the acknowledgment has reached the server, and a response has been sent back. You might want to set this to true if you want to implement a strict at-most-once delivery policy. The default value is false.

18.4. Asynchronous Send Acknowledgments

18.4.1. Asynchronous Send Acknowledgments

org.hornetq.api.core.client.SendAcknowledgementHandler and set a handler instance on your ClientSession.

ClientSession, and as messages reach the server, the server will send back an acknowledgment of the send asynchronously. HornetQ calls your handler's sendAcknowledged(ClientMessage message) method, passing in a reference to the message that was sent.

confirmation-window-size is set to a positive integer value (specified in bytes), for example 10485760 (10 MiB).

Chapter 19. Message Redelivery and Undelivered Messages

- Delayed Redelivery

- Message delivery can be delayed to allow the client time to recover from transient failures and not overload its network or CPU resources.

- Dead Letter Address

- Configure a dead letter address, to which messages are sent after being determined undeliverable.

19.1. Delayed Redelivery

19.1.1. Configuring Delayed Redelivery

address-setting configuration:

<!-- delay redelivery of messages for 5s -->
<address-setting match="jms.queue.exampleQueue">
  <redelivery-delay>5000</redelivery-delay>
</address-setting>

redelivery-delay is specified, HornetQ will wait that many milliseconds before redelivering the messages. Redelivery delay is enabled by default and set to 60000 (1 minute).

19.2. Dead Letter Addresses

19.2.1. Configuring Dead Letter Addresses

address-setting configuration:

<!-- undelivered messages in exampleQueue will be sent to the dead letter address deadLetterQueue after 3 unsuccessful delivery attempts -->
<address-setting match="jms.queue.exampleQueue">
  <dead-letter-address>jms.queue.deadLetterQueue</dead-letter-address>
  <max-delivery-attempts>3</max-delivery-attempts>
</address-setting>

dead-letter-address is not specified, messages will be removed after max-delivery-attempts unsuccessful attempts.

max-delivery-attempts to -1 for infinite redeliveries.

max-delivery-attempts set to -1 for a specific address setting to allow infinite redeliveries only for this address.
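The combined effect of max-delivery-attempts and dead-letter-address can be summarised as a small decision function. This sketch only restates the rules given above; the class, enum, and method names are hypothetical and are not HornetQ types.

```java
/** Hypothetical model of the dead letter rules; not part of HornetQ. */
final class UndeliveredMessageRouting {

    enum Outcome { REDELIVER, SEND_TO_DEAD_LETTER_ADDRESS, REMOVE }

    /**
     * Decides what happens to a message after a failed delivery attempt.
     * A maxDeliveryAttempts of -1 means infinite redeliveries. A null
     * deadLetterAddress means no dead letter address is configured.
     */
    static Outcome afterFailedDelivery(int failedAttempts, int maxDeliveryAttempts,
                                       String deadLetterAddress) {
        if (maxDeliveryAttempts == -1 || failedAttempts < maxDeliveryAttempts) {
            return Outcome.REDELIVER;
        }
        return deadLetterAddress != null
                ? Outcome.SEND_TO_DEAD_LETTER_ADDRESS
                : Outcome.REMOVE;
    }
}
```

With max-delivery-attempts set to 3, the third failed attempt sends the message to the dead letter address if one is configured, and removes it otherwise.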

19.2.2. Dead Letter Properties

- HQ_ORIG_ADDRESS - A String property containing the original address of the dead letter message.

19.3. Delivery Count Persistence

redelivered set to false when it should be true.

persist-delivery-count-before-delivery to true in <JBOSS_HOME>/jboss-as/server/<PROFILE>/deploy/hornetq/hornetq-configuration.xml:

<persist-delivery-count-before-delivery>true</persist-delivery-count-before-delivery>

Chapter 20. Message Expiry

TimeToLive) when sending them.

20.1. Message Expiry

// message will expire in 5000ms from now
message.setExpiration(System.currentTimeMillis() + 5000);

TimeToLive for the messages it sent:

// messages sent by this producer will be retained for 5s (5000ms) before expiration
producer.setTimeToLive(5000);

- HQ_ORIG_ADDRESS - A String property containing the original address of the expired message.

- HQ_ACTUAL_EXPIRY - A Long property containing the actual expiration time of the expired message.

20.2. Configuring Expiry Addresses

<!-- expired messages in exampleQueue will be sent to the expiry address expiryQueue -->
<address-setting match="jms.queue.exampleQueue">
  <expiry-address>jms.queue.expiryQueue</expiry-address>
</address-setting>

20.3. Configuring The Expiry Reaper Thread

<JBOSS_HOME>/jboss-as/server/<PROFILE>/deploy/hornetq/hornetq-configuration.xml.

- message-expiry-scan-period - How often the queues are scanned to detect expired messages, in milliseconds. The default is 30000 ms. Set to -1 to disable the reaper thread.

- message-expiry-thread-priority - The reaper thread priority. It must be between zero and nine, nine being the highest priority. The default is three.

Chapter 21. Large Messages

InputStream on a message body. When that message is sent, HornetQ will read the InputStream. For example, a FileInputStream could be used to send a large message from a large file on disk.

InputStream is read, the data is sent to the server as a stream of fragments. The server persists these fragments to disk as it receives them. When the time comes to deliver them to a consumer they are read back off the disk, also in fragments, and re-transmitted. When the consumer receives a large message it initially receives just the message with an empty body. It can then set an OutputStream on the message to stream the large message body to a file on disk or elsewhere. At no time is the entire message body stored fully in memory, either on the client or the server.
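The fragment-by-fragment handling described above follows the general pattern of copying between streams through a fixed-size buffer, so that memory use is bounded by the fragment size rather than the message size. The sketch below shows that pattern using only java.io; it is an illustration of the idea, not HornetQ's implementation, and the class and method names are hypothetical.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.io.UncheckedIOException;

/** Hypothetical illustration of fragment-wise streaming; not part of HornetQ. */
final class FragmentCopier {

    /**
     * Copies the whole input to the output one fragment at a time and returns
     * the number of bytes copied. Only one fragment is held in memory at any
     * moment, however large the source is.
     */
    static long copyInFragments(InputStream in, OutputStream out, int fragmentSize) {
        if (fragmentSize <= 0) {
            throw new IllegalArgumentException("fragmentSize must be positive");
        }
        byte[] fragment = new byte[fragmentSize];
        long total = 0;
        try {
            int read;
            while ((read = in.read(fragment)) != -1) {
                out.write(fragment, 0, read);
                total += read;
            }
            out.flush();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return total;
    }
}
```

The same loop works whether the source is a FileInputStream on the sending side or the destination is a FileOutputStream on the receiving side.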

21.1. Configuring the server

<JBOSS_HOME>/jboss-as/server/<PROFILE>/deploy/hornetq/hornetq-configuration.xml.

large-messages-directory specifies where large messages are stored.

<configuration xmlns="urn:hornetq"
               xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
               xsi:schemaLocation="urn:hornetq /schema/hornetq-configuration.xsd">
  ...
  <large-messages-directory>${jboss.server.data.dir}/${hornetq.data.dir:hornetq}/largemessages</large-messages-directory>
  ...
</configuration>

data/large-messages.

21.2. Setting the limits

min-large-message-size.

21.2.1. Using Core API

ClientSessionFactory.setMinLargeMessageSize.

ClientSessionFactory factory = HornetQClient.createClientSessionFactory(
    new TransportConfiguration(NettyConnectorFactory.class.getName()), null);

factory.setMinLargeMessageSize(25 * 1024);

21.2.2. Using JMS

JBOSS_DIST/jboss-as/server/<PROFILE>/deploy/hornetq/hornetq-jms.xml.

...
<connection-factory name="NettyConnectionFactory">
  <connectors>
    <connector-ref connector-name="netty"/>
  </connectors>
  <entries>
    <entry name="/ConnectionFactory"/>
    <entry name="/XAConnectionFactory"/>
  </entries>
  <min-large-message-size>250000</min-large-message-size>
</connection-factory>
...

HornetQConnectionFactory.setMinLargeMessageSize.

21.3. Streaming large messages

java.io).

ClientMessage.saveToOutputStream or alternatively using the method ClientMessage.setOutputStream which will asynchronously write the message to the stream. If you choose the latter, the consumer must be kept alive until the message has been fully received.

- JDBC Blobs

- SocketInputStream

- Things recovered from HTTPRequests, and so on.

java.io.InputStream for sending messages, or java.io.OutputStream for receiving them can be used.

21.3.1. Streaming over Core API

ClientMessage which are also available through JMS by the use of object properties.

Table 21.1. org.hornetq.api.core.client.ClientMessage API

| Name | Description | JMS Equivalent Property |

|---|---|---|

| setBodyInputStream (InputStream) | Set the InputStream used to read a message body when sending it. | JMS_HQ_InputStream |

| setOutputStream (OutputStream) | Set the OutputStream that will receive the body of a message. This method does not block. | JMS_HQ_OutputStream |

| saveToOutputStream (OutputStream) | Save the body of the message to the OutputStream. It will block until the entire content is transferred to the OutputStream. | JMS_HQ_SaveStream |

...

ClientMessage msg = consumer.receive(...);

// This will block here until the stream has been transferred

msg.saveToOutputStream(someOutputStream);

ClientMessage msg2 = consumer.receive(...);

// This will not wait for the transfer to finish

msg2.setOutputStream(someOtherOutputStream);

...

...

ClientMessage msg = session.createMessage();

msg.setBodyInputStream(dataInputStream);

...

21.3.2. Streaming over JMS

Message.setObjectProperty to set the input and output streams.

InputStream can be defined through the JMS Object Property JMS_HQ_InputStream on messages being sent:

BytesMessage message = session.createBytesMessage();

FileInputStream fileInputStream = new FileInputStream(fileInput);

BufferedInputStream bufferedInput = new BufferedInputStream(fileInputStream);

message.setObjectProperty("JMS_HQ_InputStream", bufferedInput);

someProducer.send(message);

OutputStream can be set through the JMS Object Property JMS_HQ_SaveStream on messages being received in a blocking way.

BytesMessage messageReceived = (BytesMessage)messageConsumer.receive(120000);

File outputFile = new File("huge_message_received.dat");

FileOutputStream fileOutputStream = new FileOutputStream(outputFile);

BufferedOutputStream bufferedOutput = new BufferedOutputStream(fileOutputStream);

// This will block until the entire content is saved on disk

messageReceived.setObjectProperty("JMS_HQ_SaveStream", bufferedOutput);

Setting the OutputStream could also be done in a non-blocking way using the property JMS_HQ_OutputStream.

// This will not wait for the stream to finish. You need to keep the consumer active.

messageReceived.setObjectProperty("JMS_HQ_OutputStream", bufferedOutput);

Note

StreamMessage and BytesMessage.

21.4. Streaming Alternative

InputStream or OutputStream capability of HornetQ, the data can still be accessed directly in an alternative fashion.

ClientMessage msg = consumer.receive();

byte[] bytes = new byte[1024];

for (int i = 0 ; i < msg.getBodySize(); i += bytes.length)

{

msg.getBody().readBytes(bytes);

// Whatever you want to do with the bytes

}

BytesMessage and StreamMessage also support it transparently.

BytesMessage rm = (BytesMessage)cons.receive(10000);

byte data[] = new byte[1024];

for (int i = 0; i < rm.getBodyLength(); i += 1024)

{

int numberOfBytes = rm.readBytes(data);

// Do whatever you want with the data

}

21.5. Cache Large Messages on client

cache-large-message-client in the connection factory. If you enable this property, the client consumer creates a temporary file to hold the large message content, making it possible to resend large messages.


Chapter 22. Paging

22.1. Page Files

page-size-bytes). When a page file is read, all of its messages are read and routed, and the file is deleted as soon as the messages are recovered.

22.2. Configuration

<JBOSS_HOME>/jboss-as/server/<PROFILE>/deploy/hornetq/hornetq-configuration.xml.

<configuration xmlns="urn:hornetq"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="urn:hornetq /schema/hornetq-configuration.xsd">

...

<paging-directory>${jboss.server.data.dir}/hornetq/paging</paging-directory>

<page-max-concurrent-io>5</page-max-concurrent-io>

...

</configuration>

- paging-directory - This is where page files are stored. HornetQ will create one folder for each address under this configured location. The default for this is data/paging.

- page-max-concurrent-io - The maximum number of concurrent reads the system can make on the paging files. This may be increased depending on the expected number of paged destinations and the limits on the storage infrastructure.

22.3. Paging Mode


22.3.1. Configuration

<JBOSS_HOME>/jboss-as/server/<PROFILE>/deploy/hornetq/hornetq-configuration.xml.

<address-settings>

<address-setting match="jms.someaddress">

<max-size-bytes>104857600</max-size-bytes>

<page-size-bytes>10485760</page-size-bytes>

<address-full-policy>PAGE</address-full-policy>

</address-setting>

</address-settings>

Table 22.1. Paging Address Settings

| Property Name | Description | Default |

|---|---|---|

| max-size-bytes | The maximum memory the address can use before entering page mode. | -1 (disabled) |

| page-size-bytes | The size of each page file used by the paging system. | 10MiB (10 * 1024 * 1024 bytes) |

| address-full-policy | This must be set to PAGE to enable paging. | PAGE |

| page-max-cache-size | Specifies the number of page files kept in memory to optimize input/output cycles during paging navigation. | 5 |
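The sample configuration above sets max-size-bytes to 104857600 (100 MiB) and page-size-bytes to 10485760 (10 MiB). As a rough planning aid, the number of page files needed for a given volume of paged data can be estimated with a ceiling division. The following is a minimal sketch, not HornetQ code: the class PagingEstimate and its method are hypothetical, and the estimate assumes messages pack perfectly into page files, which a real server does not guarantee.

```java
// Hypothetical capacity-planning helper; not part of HornetQ.
final class PagingEstimate {

    private PagingEstimate() {
    }

    /**
     * Estimates how many page files are needed to hold pagedBytes of messages
     * when each page file holds up to pageSizeBytes.
     * Assumption: messages pack perfectly into files, so this is a lower bound.
     */
    static long estimatePageFiles(long pagedBytes, long pageSizeBytes) {
        if (pagedBytes < 0 || pageSizeBytes <= 0) {
            throw new IllegalArgumentException("pagedBytes must be >= 0 and pageSizeBytes > 0");
        }
        // Ceiling division without floating point
        return (pagedBytes + pageSizeBytes - 1) / pageSizeBytes;
    }
}
```

For example, 250 MiB of paged messages with the 10 MiB page size above gives an estimate of 25 page files.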

22.4. Dropping messages

address-full-policy to DROP in the address settings.

22.5. Blocking producers

address-full-policy to BLOCK in the address settings.


22.6. Caution with Addresses with Multiple Queues

- An address has ten queues

- One of the queues does not deliver its messages (maybe because of a slow consumer).

- Messages continually arrive at the address and paging is started.

- The other nine queues are empty even though messages have been sent.


Important

page-size-bytes (on the server) to a value lower than ack-batch-size (in the client) or your system may appear to hang.

Chapter 23. Queue Attributes

23.1. Predefined Queues

The following shows a queue predefined in the JBOSS_DIST/jboss-as/server/<PROFILE>/deploy/hornetq/hornetq-jms.xml configuration file.

<queue name="selectorQueue">

<entry name="/queue/selectorQueue"/>

<selector string="color='red'"/>

<durable>true</durable>

</queue>

jms.queue.selectorQueue.

null.

true.

A queue can be predefined at a core level in the <JBOSS_HOME>/jboss-as/server/<PROFILE>/deploy/hornetq/hornetq-configuration.xml file.

<queues>

<queue name="jms.queue.selectorQueue">

<address>jms.queue.selectorQueue</address>

<filter string="color='red'"/>

<durable>true</durable>

</queue>

</queues>

- The name attribute of queue is the actual name used for the queue with no naming convention as in JMS.

- The address element defines what address is used for routing messages.

- There is no entry element.

- The filter uses the Core filter syntax (described in Chapter 12, Filter Expressions), not the JMS selector syntax.

23.2. Using the API

org.hornetq.api.core.client.ClientSession interface. There are multiple createQueue methods that support setting all of the previously mentioned attributes. There is one extra attribute that can be set via this API which is temporary. Setting this to true means the queue will be deleted once the session is disconnected.

23.3. Configuring Queues Through Address Settings

address-setting entry that would be found in the <JBOSS_HOME>/jboss-as/server/<PROFILE>/deploy/hornetq/hornetq-configuration.xml file.

<address-settings>

<address-setting match="jms.queue.exampleQueue">

<dead-letter-address>jms.queue.deadLetterQueue</dead-letter-address>

<max-delivery-attempts>3</max-delivery-attempts>

<redelivery-delay>5000</redelivery-delay>

<expiry-address>jms.queue.expiryQueue</expiry-address>

<last-value-queue>true</last-value-queue>

<max-size-bytes>100000</max-size-bytes>

<page-size-bytes>20000</page-size-bytes>

<redistribution-delay>0</redistribution-delay>

<send-to-dla-on-no-route>true</send-to-dla-on-no-route>

<address-full-policy>PAGE</address-full-policy>

</address-setting>

</address-settings>

match attribute. In the above example the settings would only be applied to any addresses which exactly match the address jms.queue.exampleQueue, but you can also use wildcards to apply sets of configuration against many addresses. The wildcard syntax used is described in Chapter 11, Understanding the HornetQ Wildcard Syntax.

match string jms.queue.# the settings would be applied to all addresses which start with jms.queue. which would be all JMS queues.
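To make the effect of a match string such as jms.queue.# concrete, the following is a minimal sketch of word-based wildcard matching. It is a simplified model and not HornetQ's implementation: the class AddressMatch and its methods are hypothetical, and the rules it encodes (words separated by '.', '#' standing for zero or more words, '*' standing for exactly one word) are stated here as assumptions to be checked against the wildcard syntax chapter.

```java
// Simplified, hypothetical model of address matching; not HornetQ's implementation.
final class AddressMatch {

    private AddressMatch() {
    }

    /** Returns true if the address matches the pattern under the assumed wildcard rules. */
    static boolean matches(String pattern, String address) {
        return match(pattern.split("\\."), 0, address.split("\\."), 0);
    }

    private static boolean match(String[] p, int i, String[] a, int j) {
        if (i == p.length) {
            // Pattern exhausted: succeed only if the address is exhausted too
            return j == a.length;
        }
        if (p[i].equals("#")) {
            // Assumption: '#' absorbs zero or more words, so try every possible split
            for (int k = j; k <= a.length; k++) {
                if (match(p, i + 1, a, k)) {
                    return true;
                }
            }
            return false;
        }
        if (j == a.length) {
            return false;
        }
        // Assumption: '*' matches exactly one word; anything else must match literally
        if (p[i].equals("*") || p[i].equals(a[j])) {
            return match(p, i + 1, a, j + 1);
        }
        return false;
    }
}
```

Under these assumptions, AddressMatch.matches("jms.queue.#", "jms.queue.exampleQueue") is true, while the same pattern does not match jms.topic.news.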

- max-delivery-attempts - Defines how many times a canceled message can be redelivered before sending it to the dead-letter-address. A full explanation can be found in Section 19.2.1, “Configuring Dead Letter Addresses”.

- redelivery-delay - Defines how long to wait before attempting redelivery of a canceled message. Refer to Section 19.1.1, “Configuring Delayed Redelivery”.

- expiry-address - Defines where to send a message that has expired. Refer to Section 20.2, “Configuring Expiry Addresses”.

- last-value-queue - Defines whether a queue only uses last values or not. Refer to Chapter 25, Last-Value Queues.

- max-size-bytes and page-size-bytes - Are used to set paging on an address. This is explained in Chapter 22, Paging.

- redistribution-delay - Defines how long to wait when the last consumer is closed on a queue before redistributing any messages. See Section 36.6, “Message Redistribution”.

- send-to-dla-on-no-route - If a message is sent to an address, but the server does not route it to any queues (for example, there might be no queues bound to that address, or none of the queues have filters that match), then normally that message would be discarded. However, if this parameter is set to true for that address, a message that is not routed to any queues will instead be sent to the dead letter address (DLA) for that address, if it exists.

- address-full-policy - This attribute can have one of the following values: PAGE, DROP or BLOCK, and determines what happens when an address where max-size-bytes is specified becomes full. The default value is PAGE.

  - If the value is PAGE then further messages will be paged to disk.

  - If the value is DROP then further messages will be silently dropped.

  - If the value is BLOCK then client message producers will block when they try and send further messages.
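The three address-full-policy values can be summarized as a small decision function. The following is a minimal sketch of the behavior described in the list above, not HornetQ code: the class AddressFullModel, its enums and the onSend method are hypothetical, and the exact comparison used to decide that an address is "full" is an assumption made for illustration.

```java
// Hypothetical model of the address-full-policy setting; not part of HornetQ.
final class AddressFullModel {

    enum Policy { PAGE, DROP, BLOCK }

    enum Outcome { ACCEPT_IN_MEMORY, PAGE_TO_DISK, DROP_SILENTLY, BLOCK_PRODUCER }

    private AddressFullModel() {
    }

    /**
     * Decides what happens to a message of messageBytes arriving at an address
     * that currently holds currentBytes in memory.
     * Assumption: the address counts as full once the new total would exceed maxSizeBytes.
     */
    static Outcome onSend(long currentBytes, long messageBytes, long maxSizeBytes, Policy policy) {
        // A negative max-size-bytes (the documented default is -1) means no limit is applied
        if (maxSizeBytes < 0 || currentBytes + messageBytes <= maxSizeBytes) {
            return Outcome.ACCEPT_IN_MEMORY;
        }
        switch (policy) {
            case PAGE:
                return Outcome.PAGE_TO_DISK;
            case DROP:
                return Outcome.DROP_SILENTLY;
            case BLOCK:
                return Outcome.BLOCK_PRODUCER;
            default:
                throw new IllegalArgumentException("Unknown policy: " + policy);
        }
    }
}
```

With max-size-bytes set to 100000 as in the example configuration, a 20000-byte message arriving at an address that already holds 90000 bytes is paged, dropped or causes the producer to block, depending on the policy.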

Chapter 24. Scheduled Messages

24.1. Scheduled Delivery Property

"_HQ_SCHED_DELIVERY" (or the constant Message.HDR_SCHEDULED_DELIVERY_TIME).

long corresponding to the time the message must be delivered (in milliseconds). An example of sending a scheduled message using the JMS API is as follows.

TextMessage message =

session.createTextMessage("This is a scheduled message which will be delivered in 5 sec.");

message.setLongProperty("_HQ_SCHED_DELIVERY", System.currentTimeMillis() + 5000);

producer.send(message);

...

// message will not be received immediately but 5 seconds later

TextMessage messageReceived = (TextMessage) consumer.receive();
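Because the property value is an absolute time in milliseconds rather than a delay, it can help to compute it in one place. The following is a minimal sketch using only the JDK: the class ScheduledDelivery and its method deliveryTime are hypothetical helpers, and only the property name "_HQ_SCHED_DELIVERY" comes from the text above.

```java
import java.util.concurrent.TimeUnit;

// Hypothetical helper for illustration; not part of the HornetQ API.
final class ScheduledDelivery {

    // Property name quoted in the section above
    static final String SCHEDULED_DELIVERY_PROPERTY = "_HQ_SCHED_DELIVERY";

    private ScheduledDelivery() {
    }

    /** Returns the absolute delivery time in milliseconds for a delay measured from nowMillis. */
    static long deliveryTime(long nowMillis, long delay, TimeUnit unit) {
        if (delay < 0) {
            throw new IllegalArgumentException("delay must not be negative");
        }
        return nowMillis + unit.toMillis(delay);
    }
}
```

The result could then be passed to message.setLongProperty together with ScheduledDelivery.SCHEDULED_DELIVERY_PROPERTY, for example with System.currentTimeMillis() as the starting point and a delay of 5 seconds.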

Chapter 25. Last-Value Queues

25.1. Configuring Last-Value Queues

<address-setting match="jms.queue.lastValueQueue">

<last-value-queue>true</last-value-queue>

</address-setting>